The pursuit of general intelligence demands progress beyond models grounded solely in language and the physical world: it also requires a robust ability to reason with structured data. Xingxuan Zhang, Gang Ren, and Han Yu, along with their colleagues, address this challenge with LimiX, a new model that treats structured data as a joint distribution, enabling it to tackle a wide range of tabular tasks through a unified approach. Across ten diverse benchmarks, LimiX consistently outperforms established methods such as gradient-boosting trees and other recent foundation models, without task-specific adjustments or additional training at inference. The result is a step towards more versatile and adaptable artificial intelligence: a single model that handles classification, regression, data imputation, and even data generation.

LimiX is an instance of what the authors call large structured-data models (LDMs), foundation models intended to complement those grounded in language and the physical world. It treats structured data as a joint distribution over variables and missingness, which lets a single model handle a wide range of tabular tasks through query-based conditional prediction. The system is pretrained using masked joint-distribution modelling with an episodic, context-conditional objective: the model learns to predict query subsets conditioned on dataset-specific contexts, which supports rapid, training-free adaptation at inference. The authors evaluate LimiX across ten large structured-data benchmarks that span broad regimes of sample size, feature dimensionality, number of classes, categorical-to-numerical feature ratio, missingness, and sample-to-feature ratio.
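To make the episodic, context-conditional objective more concrete, the following is a minimal sketch of how one training episode might be assembled from a single table. It is an illustration under stated assumptions, not the authors' pipeline: the function name `make_episode`, the uniform random masking, and the use of NaN to hide cells are all hypothetical choices.

```python
import numpy as np

def make_episode(table, n_context, mask_rate, rng):
    """Split a table into context and query rows, then hide random query cells.

    Hypothetical helper: the masking scheme used to pretrain LimiX may differ.
    """
    n_rows, _ = table.shape
    order = rng.permutation(n_rows)
    context = table[order[:n_context]]         # rows the model may condition on
    query = table[order[n_context:]].copy()    # rows with cells to be predicted
    # True marks a cell that is hidden from the input and used as a target.
    mask = rng.random(query.shape) < mask_rate
    targets = query[mask]                      # ground truth for hidden cells
    query[mask] = np.nan                       # hide those cells in the input
    return context, query, mask, targets

rng = np.random.default_rng(0)
table = rng.normal(size=(8, 4))                # toy dataset: 8 rows, 4 columns
context, query, mask, targets = make_episode(
    table, n_context=5, mask_rate=0.5, rng=rng
)
```

A model trained on many such episodes sees a different dataset as context each time, which is what allows it to adapt to a new table at inference without gradient updates.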

Total Variation Distance Bounds for Masked Modelling

The paper also presents theoretical guarantees for a model trained by masked modelling. The core aim is to bound the total variation (TV) distance between the model's predicted distribution and the true data distribution; a smaller distance means the predictions are closer to the truth. The authors show that, under specific conditions, the model's error on unseen data is bounded by its empirical loss on the training data plus additional terms. The analysis centres on masking, where parts of the input are hidden so that the model must learn to reconstruct them from the rest.
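For readers unfamiliar with the quantity being bounded, the TV distance between two discrete distributions is half the sum of the absolute differences of their probabilities. The snippet below is a small illustration on made-up numbers; the distributions and the function name `tv_distance` are assumptions for the example and do not come from the paper.

```python
import numpy as np

def tv_distance(p, q):
    """Total variation distance between two discrete distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return 0.5 * np.abs(p - q).sum()

true_dist = [0.5, 0.3, 0.2]    # hypothetical true class probabilities
model_dist = [0.4, 0.4, 0.2]   # hypothetical model prediction
tv = tv_distance(true_dist, model_dist)   # approximately 0.1
```

A value of 0 means the two distributions are identical, and a value of 1 means they place their mass on disjoint outcomes.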

Key concepts include total variation distance, empirical loss, generalization error, and masking. The analysis also draws on conditional probability, the union bound, and Kullback-Leibler divergence. It establishes that the model satisfies approximate tensorization of entropy with respect to the mask set, which links generalization ability to performance on masked inputs. The reliance on masking suggests robustness to noise and missing data, while the TV bounds quantify how far predictions on new data can drift from the truth. Model complexity also enters the bounds, which highlights the trade-off between fitting the training data and overfitting. In essence, this part of the work provides a rigorous foundation for understanding masked modelling, showing that generalization error can be bounded by empirical loss under certain conditions.
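The tools named above are connected by a few standard textbook relations, written out here for reference. These are general facts about probability distributions, not the paper's specific theorems, whose exact statements and constants are not reproduced in this summary.

```latex
% Total variation distance between distributions P and Q on a discrete space
\mathrm{TV}(P, Q) = \sup_{A} \lvert P(A) - Q(A) \rvert
                  = \tfrac{1}{2} \sum_{x} \lvert P(x) - Q(x) \rvert

% Pinsker's inequality: a small KL divergence implies a small TV distance
\mathrm{TV}(P, Q) \le \sqrt{\tfrac{1}{2}\, \mathrm{KL}(P \,\|\, Q)}

% Union bound over events A_1, \dots, A_m (for example, one event per mask)
\Pr\!\left( \bigcup_{i=1}^{m} A_i \right) \le \sum_{i=1}^{m} \Pr(A_i)
```

Pinsker's inequality is the usual route from a loss measured in log-likelihood terms to a statement about TV distance, and the union bound is the usual way to make a guarantee hold simultaneously for every mask in a finite set.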

LimiX Excels at Unified Tabular Data Learning

Researchers present LimiX, a new foundation model for structured data that achieves state-of-the-art performance across a wide range of tabular learning tasks. LimiX uniquely treats structured data as a joint distribution, enabling it to address classification, regression, missing value imputation, and data generation through a single, unified approach. The team pretrained LimiX using a novel context-conditional masked modeling objective, where the model learns to predict masked data subsets conditioned on dataset-specific contexts, facilitating rapid adaptation without task-specific training. Experiments demonstrate that LimiX consistently surpasses strong baseline models, including gradient-boosting trees, deep tabular networks, recent tabular foundation models, and automated ensembles, across ten structured-data benchmarks.

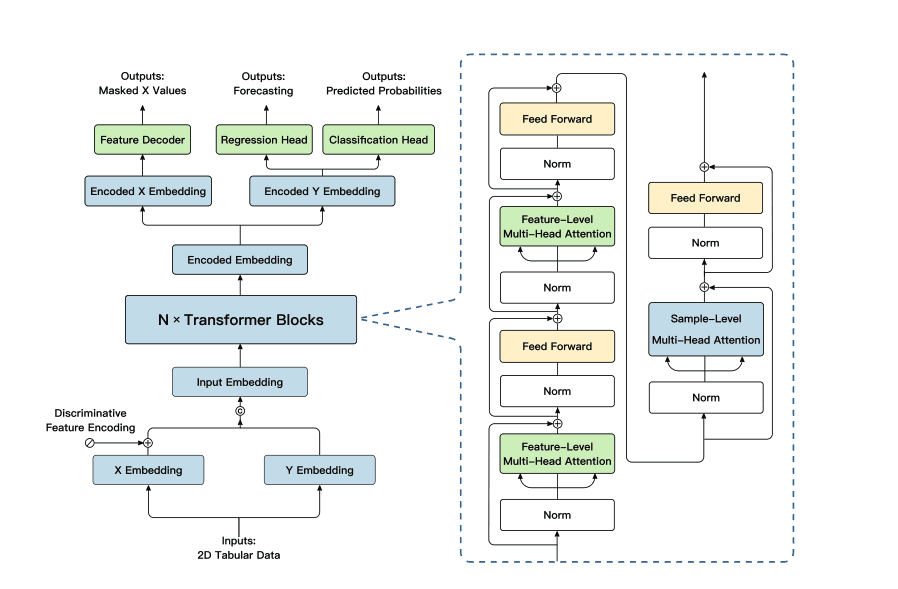

The model delivers substantial gains in predictive performance, often outperforming even AutoGluon on benchmarks such as OpenML-CC18, TabArena, and TALENT-REG. LimiX achieves this success by employing a 12-layer transformer architecture with asymmetric attention, featuring two feature-level passes and one sample-level pass to better capture feature interactions. A key innovation lies in the discriminative feature encoding, which introduces learnable low-rank column identifiers to enhance the model’s ability to discern feature origins. This encoding, combined with the context-conditional masked modeling, allows LimiX to effectively model the joint distribution of the data, enabling it to perform diverse tasks with a single model and a unified inference interface.
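The two architectural ideas in this paragraph can be sketched in a few lines of NumPy. This is a toy illustration under several assumptions: the attention has no learned projections, the ordering of the passes (feature, feature, sample) is a guess, and the names `feature_pass`, `sample_pass`, `U`, and `V` are hypothetical. It is not the LimiX implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(h):
    """Parameter-free dot-product self-attention over the second-to-last axis."""
    scores = h @ np.swapaxes(h, -1, -2) / np.sqrt(h.shape[-1])
    return softmax(scores, axis=-1) @ h

def feature_pass(h):
    # h has shape (samples, features, dim): mix features within each sample.
    return h + self_attention(h)

def sample_pass(h):
    # Swap axes so attention mixes samples within each feature column.
    ht = np.swapaxes(h, 0, 1)
    return h + np.swapaxes(self_attention(ht), 0, 1)

rng = np.random.default_rng(0)
n_samples, n_features, dim, rank = 6, 4, 8, 2
cells = rng.normal(size=(n_samples, n_features, dim))   # toy cell embeddings

# Low-rank column identifiers: one vector per column, factored as U @ V
# so that the identifier matrix has rank at most `rank`.
U = rng.normal(size=(n_features, rank))
V = rng.normal(size=(rank, dim))
column_ids = U @ V

h = cells + column_ids[None, :, :]   # tag every cell with its column identity
h = sample_pass(feature_pass(feature_pass(h)))           # assumed pass order
```

The factorization keeps the number of identifier parameters at `rank * (n_features + dim)` rather than `n_features * dim`, which is the usual motivation for a low-rank parameterization.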

Unified Modelling of Data and Missingness

This work introduces LimiX, a new approach to handling structured data that treats it as a joint distribution of variables and missingness. The researchers demonstrate that this method allows for effective prediction across a range of tabular tasks, including classification, regression, and data generation, using a single model. LimiX consistently outperforms existing techniques, such as gradient-boosting trees and other tabular foundation models, across diverse datasets and conditions. The significance of this research lies in providing a unified framework for structured data analysis, which is crucial for evidence-based decision-making in fields like finance, healthcare, and logistics.
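One way to picture a joint distribution over variables and missingness is to carry an explicit observed/missing indicator alongside the values, and to express each task as a query mask over cells. The sketch below assumes NaN marks a missing cell and uses hypothetical names (`encode_with_missingness`, `label_query`); the encoding LimiX actually uses may differ.

```python
import numpy as np

def encode_with_missingness(table):
    """Return (values, observed), where observed is 1 for present cells."""
    observed = ~np.isnan(table)
    values = np.where(observed, table, 0.0)    # placeholder for missing cells
    return values, observed.astype(np.int8)

table = np.array([
    [1.0, np.nan],
    [np.nan, 4.0],
    [5.0, 6.0],
])
values, observed = encode_with_missingness(table)

# Imputation: query every cell that is missing.
imputation_query = observed == 0

# Prediction: query one designated target column (column 1 here) for row 0.
label_query = np.zeros(table.shape, dtype=bool)
label_query[0, 1] = True
```

Under this view, classification, regression, and imputation differ only in which cells are queried, which is what allows a single inference interface to cover all of them.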

By effectively modelling both data and missingness, LimiX offers improved reliability and accuracy compared to methods that focus solely on observed values. The authors acknowledge that their current evaluation focuses on specific tabular benchmarks and that further research is needed to assess performance on more complex, real-world datasets. Future work could explore the application of LimiX to out-of-distribution data and investigate its potential for causal inference.

👉 More information

🗞 LimiX: Unleashing Structured-Data Modeling Capability for Generalist Intelligence

🧠 ArXiv: https://arxiv.org/abs/2509.03505