Researchers from the University of Hamburg have developed a new approach to quantum algorithm optimization that could make quantum machine learning (QML) more efficient to train. The team’s method, based on trainable Fourier coefficients of Hamiltonian system parameters, mitigates the issue of ‘barren plateaus’: large regions in the parameter space with vanishing gradients that hinder training. The new ansatz demonstrated faster and more consistent convergence, and the variance of its objective gradients decayed at a nonexponential rate with the number of qubits, indicating the mitigation of barren plateaus. This could make it a promising candidate for efficient training in QML technologies.

Quantum Machine Learning and Barren Plateaus

Quantum machine learning (QML) is a promising application of near-term quantum computation devices. However, variational quantum algorithms, a class of algorithmic approaches within QML, have been shown to suffer from barren plateaus: regions of the parameter space that grow with system size and in which gradients vanish exponentially, which hinders training. The general scaling behavior and emergence of barren plateaus are largely not understood, and their dependence on the details of variational quantum algorithms has been an active field of research in recent years.
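The vanishing-gradient statement above is commonly formalized through the variance of the objective gradient. The following is a sketch of that standard formulation, not a formula quoted from the paper; the symbols $C$ (objective), $\theta$ (trainable parameters), $n$ (number of qubits) and $b$ (decay base) are notation assumed here for illustration, as is the condition that the gradient averages to zero over the parameter space.

```latex
% Barren plateau: the gradient variance shrinks exponentially in the qubit number n
\mathrm{Var}_{\theta}\!\left[\partial_{\theta_k} C(\theta)\right] \in \mathcal{O}\!\left(b^{-n}\right), \qquad b > 1 .

% With zero mean gradient, Chebyshev's inequality bounds the chance of a useful gradient
\Pr\!\left[\,\left|\partial_{\theta_k} C(\theta)\right| \ge \epsilon\,\right]
  \le \frac{\mathrm{Var}_{\theta}\!\left[\partial_{\theta_k} C(\theta)\right]}{\epsilon^{2}} .
```

Under these assumptions, the probability of drawing a parameter point with a gradient larger than any fixed $\epsilon$ itself falls off exponentially with $n$, which is why a nonexponential decay of the variance is read as a sign that barren plateaus are mitigated.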

New Approach to Quantum Algorithm Optimization

Lukas Broers and Ludwig Mathey from the Center for Optical Quantum Technologies, Institute for Quantum Physics, and The Hamburg Center for Ultrafast Imaging at the University of Hamburg have presented a new approach to quantum algorithm optimization. This approach is based on trainable Fourier coefficients of Hamiltonian system parameters. The ansatz is nonlocal in time and is available only when discrete variational quantum algorithms are extended to analog quantum optimal control schemes.

Viability of the New Ansatz

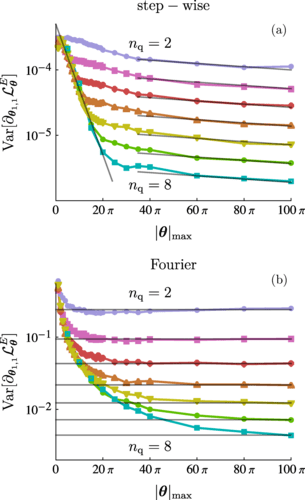

The researchers demonstrated the viability of their ansatz on two objectives: compiling the quantum Fourier transform and preparing ground states of random problem Hamiltonians. Compared to the temporally local discretization ansätze in quantum optimal control and parametrized circuits, their ansatz exhibits faster and more consistent convergence. They uniformly sampled objective gradients across the parameter space and found that the gradient variance decays at a nonexponential rate with the number of qubits in their ansatz, while it decays at an exponential rate in the temporally local benchmark ansatz. This indicates the mitigation of barren plateaus in their ansatz.
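The gradient-variance diagnostic described above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the objective is a hypothetical toy (the expectation value of Z on every qubit of a product state of single-qubit RY rotations), not the authors' analog protocol, and the function names are invented for this example. For this toy objective the exact variance is 2^(-n), so the fitted slope of log-variance against qubit number should come out close to -ln 2, the signature of exponential decay.

```python
import numpy as np

def toy_objective(theta):
    """Toy global objective (assumption): <Z x ... x Z> on a product of RY(theta_i)|0> states."""
    return np.prod(np.cos(theta), axis=-1)

def gradient_first_param(objective, theta, eps=1e-5):
    """Central finite-difference gradient with respect to the first parameter."""
    shift = np.zeros(theta.shape[-1])
    shift[0] = eps
    return (objective(theta + shift) - objective(theta - shift)) / (2.0 * eps)

def gradient_variance(n_qubits, n_samples=20000, seed=0):
    """Variance of the gradient over uniformly sampled parameter points."""
    rng = np.random.default_rng(seed)
    theta = rng.uniform(0.0, 2.0 * np.pi, size=(n_samples, n_qubits))
    return gradient_first_param(toy_objective, theta).var()

# Estimate the variance for several system sizes and fit log(variance) against n.
qubit_numbers = np.arange(2, 9)
variances = np.array([gradient_variance(n) for n in qubit_numbers])
slope = np.polyfit(qubit_numbers, np.log(variances), 1)[0]
print("log-variance slope per qubit:", slope)
```

A slope that stays clearly negative and roughly constant as more qubits are added indicates exponential decay; a curve that flattens on the log scale would point to the nonexponential behavior reported for the Fourier-based ansatz.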

Fourier Coefficients in Quantum Algorithm Optimization

In their proposed parametrization ansatz for quantum algorithm optimization, the researchers directly control the Fourier coefficients of the system parameters of a Hamiltonian. This method is nonlocal in time and is exclusive to analog quantum protocols as it does not translate into discrete circuit parametrizations, which are conventionally found in variational quantum algorithms. The researchers compared their ansatz to the common optimal control ansatz of stepwise parametrizations for the example objectives of compiling the quantum Fourier transform and minimizing the energy of random problem Hamiltonians.
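The difference between the two parametrizations can be made concrete with a short sketch. It assumes a truncated sine and cosine series over the protocol duration for the Fourier ansatz and a piecewise-constant schedule for the stepwise one; the exact basis, normalization and function names below are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def fourier_control(coeffs_cos, coeffs_sin, t, total_time):
    """Time-nonlocal control: f(t) = sum_k a_k cos(2 pi k t / T) + b_k sin(2 pi k t / T)."""
    t = np.asarray(t, dtype=float)
    k = np.arange(1, len(coeffs_cos) + 1)
    phase = 2.0 * np.pi * np.outer(t, k) / total_time  # shape (len(t), K)
    return np.cos(phase) @ np.asarray(coeffs_cos) + np.sin(phase) @ np.asarray(coeffs_sin)

def stepwise_control(values, t, total_time):
    """Time-local control: f(t) is constant on each of len(values) equal intervals."""
    t = np.asarray(t, dtype=float)
    values = np.asarray(values, dtype=float)
    index = np.minimum((t / total_time * len(values)).astype(int), len(values) - 1)
    return values[index]

# Perturb a single trainable parameter in each ansatz and see where f(t) changes.
T = 1.0
times = (np.arange(200) + 0.5) / 200 * T  # midpoints of a uniform time grid

sin_coeffs = np.zeros(3)
sin_coeffs[0] = 0.5  # change one Fourier coefficient
fourier_change = fourier_control(np.zeros(3), sin_coeffs, times, T)

step_values = np.zeros(4)
step_values[1] = 0.5  # change one step value
step_change = stepwise_control(step_values, times, T)

print("time points affected (Fourier): ", np.count_nonzero(fourier_change))
print("time points affected (stepwise):", np.count_nonzero(step_change))
```

Changing one Fourier coefficient reshapes the control over the whole protocol, whereas changing one step value only touches its own time interval. That is the sense in which the Fourier parametrization is nonlocal in time and has no direct counterpart in a gate-by-gate circuit parametrization.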

The Potential of the New Ansatz in Quantum Machine Learning

The researchers’ Fourier-based ansatz results in solutions with higher fidelity and superior convergence behavior. They demonstrated that the objective gradient variance of their ansatz scales nonexponentially with the number of qubits, which suggests the absence of barren plateaus. They concluded that their ansatz is a promising candidate for efficient training and avoiding barren plateaus in variational quantum algorithms, and thus for the success of near-term quantum machine learning technologies.

This article, titled “Mitigated barren plateaus in the time-nonlocal optimization of analog quantum-algorithm protocols”, was authored by Lukas Broers and Ludwig Mathey and published on January 22, 2024. The article can be accessed through the DOI link https://doi.org/10.1103/physrevresearch.6.013076.