Researchers Jiachen Zhu et al., whose co-authors include Kaiming He and Yann LeCun, have proposed Dynamic Tanh (DyT), an alternative to normalization layers in Transformers. Presented at CVPR 2025, DyT is a drop-in replacement for LayerNorm or RMSNorm. It is motivated by the observation that layer normalization in trained Transformers often produces S-shaped, tanh-like input-output mappings. The study reports that Transformers using DyT match or exceed the performance of their normalized counterparts across diverse tasks and models, which challenges the notion that normalization is indispensable in neural networks.

The paper investigates replacing the normalization layers in Transformer models with Dynamic Tanh (DyT). Normalization is widely considered critical for stable training because it keeps activations within a controlled range. Batch normalization in particular also acts as a regularizer. LayerNorm and RMSNorm, the layers DyT replaces, do not use batch statistics. Instead, they compute a mean and/or variance over the features of each individual token. DyT aims to provide similar benefits as a purely element-wise operation that computes no activation statistics at all, which could bring advantages in computational efficiency and simplicity.
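
To make the distinction concrete, here is a minimal pure-Python sketch of what LayerNorm and RMSNorm compute for a single token. The learnable affine parameters are omitted and the `eps` values are assumed common defaults. Both layers need reductions over the whole feature vector, so changing one feature changes every output. DyT, by contrast, acts on each element independently.

```python
import math

def layer_norm(x, eps=1e-5):
    """LayerNorm over one token's features (affine weight/bias omitted)."""
    d = len(x)
    mean = sum(x) / d                              # reduction 1: mean over features
    var = sum((v - mean) ** 2 for v in x) / d      # reduction 2: variance over features
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def rms_norm(x, eps=1e-6):
    """RMSNorm: divide by the root-mean-square; no mean subtraction."""
    d = len(x)
    rms = math.sqrt(sum(v * v for v in x) / d + eps)  # single reduction over features
    return [v / rms for v in x]

token = [2.0, -1.0, 0.5, 8.0]
ln = layer_norm(token)
rn = rms_norm(token)

# Changing only the last feature changes the normalized value of the first one,
# because the statistics depend on the whole vector.
ln_changed = layer_norm([2.0, -1.0, 0.5, 100.0])
print(round(ln[0], 4), round(ln_changed[0], 4))
```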

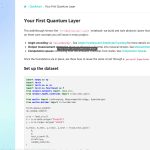

DyT processes its input in three steps:

1. It scales the input by a learnable scalar, alpha (α).
2. It applies the element-wise tanh function.
3. It applies a per-channel affine transformation with learnable weight (scale) and bias parameters, the same kind of affine parameters LayerNorm uses.

In short, DyT(x) = weight · tanh(α · x) + bias. Because α is learned, each DyT layer can adapt how strongly it squashes its inputs for its position in the network and for the task at hand. The operation fits in a few lines as a PyTorch module, which makes it easy to experiment with.
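
A minimal PyTorch sketch of these three steps follows. It is an illustration, not the authors' released code. The initial value `alpha_init=0.5` is an assumption. `replace_layernorm_with_dyt` is a hypothetical helper that swaps every `nn.LayerNorm` in a model for a `DyT` of the same width.

```python
import torch
import torch.nn as nn

class DyT(nn.Module):
    """Dynamic Tanh sketch: y = weight * tanh(alpha * x) + bias.

    alpha is a single learnable scalar; weight and bias are per-channel vectors
    over the last dimension. alpha_init=0.5 is an assumed starting value.
    """

    def __init__(self, num_features: int, alpha_init: float = 0.5):
        super().__init__()
        self.alpha = nn.Parameter(torch.full((1,), alpha_init))
        self.weight = nn.Parameter(torch.ones(num_features))
        self.bias = nn.Parameter(torch.zeros(num_features))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Step 1: scale by alpha. Step 2: squash with tanh.
        # Step 3: per-channel affine transform, broadcast over the last dim.
        return self.weight * torch.tanh(self.alpha * x) + self.bias


def replace_layernorm_with_dyt(module: nn.Module) -> nn.Module:
    """Hypothetical helper: recursively swap each nn.LayerNorm for a DyT.

    Assumes each LayerNorm normalizes over the last dimension only.
    """
    for name, child in module.named_children():
        if isinstance(child, nn.LayerNorm):
            setattr(module, name, DyT(child.normalized_shape[-1]))
        else:
            replace_layernorm_with_dyt(child)
    return module


model = nn.Sequential(nn.Linear(16, 32), nn.LayerNorm(32), nn.GELU(), nn.Linear(32, 8))
replace_layernorm_with_dyt(model)
x = torch.randn(4, 10, 16)  # (batch, tokens, features)
y = model(x)
print(model[1].__class__.__name__, tuple(y.shape))
```

DyT keeps no running statistics and treats every element independently, so it behaves identically in training and evaluation mode and for any batch size.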

The study evaluated DyT across several domains, including vision (Vision Transformers), speech (wav2vec 2.0), and language modeling (LLaMA). In these experiments, models using DyT performed comparably to, or better than, their counterparts with standard normalization layers. A potential advantage of DyT is lower computational cost. It needs no reduction operations to compute per-token means or variances, which could benefit large models or latency-sensitive applications.

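While DyT shows promise, several questions remain.

**Computational cost.** DyT's element-wise operations (scaling, tanh, and the affine transform) may offset the savings from eliminating statistics computation. This is especially likely when the layer being replaced is already implemented as a fused kernel, so a careful cost comparison on real hardware is needed.

**Overfitting.** Batch normalization regularizes partly through the noise that batch statistics introduce. LayerNorm and RMSNorm compute per-token statistics and inject no such noise, so it is less clear what regularizing effect, if any, is lost by removing them. Whether DyT models need additional regularization to train stably and generalize well remains an empirical question.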
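
A wall-clock micro-benchmark is one rough way to start that comparison. The sketch below assumes CPU execution and arbitrarily chosen tensor sizes. It times PyTorch's fused `F.layer_norm` against an unfused DyT written as separate element-wise operations. Results will vary widely with hardware, dtype, and whether the element-wise operations are fused, so treat any single measurement as indicative only.

```python
import time

import torch
import torch.nn.functional as F

def time_op(fn, x, iters=20):
    """Average wall-clock seconds per call, measured after a short warm-up."""
    for _ in range(3):
        fn(x)
    start = time.perf_counter()
    for _ in range(iters):
        fn(x)
    return (time.perf_counter() - start) / iters

d = 1024                                  # feature width (arbitrary choice)
x = torch.randn(32, 128, d)               # (batch, tokens, features)
weight, bias = torch.ones(d), torch.zeros(d)
alpha = torch.tensor(0.5)                 # assumed alpha value

def layernorm(t):
    # Single fused kernel: per-token mean/variance plus affine transform.
    return F.layer_norm(t, (d,), weight, bias)

def dyt(t):
    # Unfused: separate multiply, tanh, multiply and add, each materializing
    # an intermediate tensor.
    return weight * torch.tanh(alpha * t) + bias

with torch.no_grad():
    t_ln = time_op(layernorm, x)
    t_dyt = time_op(dyt, x)
print(f"LayerNorm: {t_ln * 1e3:.3f} ms   DyT (unfused): {t_dyt * 1e3:.3f} ms")
```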

Theoretical questions are also worth exploring, such as how gradient flow through tanh differs from gradient flow through normalization. The derivative of tanh approaches zero when its input is large in magnitude, so saturated DyT units can produce vanishing gradients, whereas normalization is generally credited with keeping gradients well-scaled. Sensitivity to initialization (for example, the initial value of α) and to other hyperparameters also warrants further investigation.
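
The saturation concern can be made concrete with the derivative of DyT with respect to its input, weight · α · (1 - tanh²(αx)). The stdlib sketch below evaluates this derivative for a few inputs and two arbitrarily chosen values of α, with weight fixed at 1. The gradient peaks at α when x = 0 and decays rapidly once |αx| exceeds a few units. A smaller α widens the near-linear region at the cost of a smaller peak gradient.

```python
import math

def dyt_grad(x, alpha, weight=1.0):
    """d/dx [weight * tanh(alpha * x) + bias] = weight * alpha * (1 - tanh(alpha * x)**2)."""
    t = math.tanh(alpha * x)
    return weight * alpha * (1.0 - t * t)

for alpha in (0.5, 0.1):
    for x in (0.0, 1.0, 4.0, 10.0, 20.0):
        print(f"alpha={alpha}  x={x:5.1f}  dy/dx={dyt_grad(x, alpha):.2e}")
```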

In conclusion, DyT is a promising and simpler alternative to normalization layers in Transformers, with potential gains in efficiency and flexibility. The empirical results are encouraging, but further research is needed to fully understand its theoretical underpinnings and practical implications.
