Scientists are tackling the challenge of accurately interpreting motion in videos, a crucial element for applications ranging from action recognition to robotic navigation. Nattapong Kurpukdee and Adrian G. Bors, both from the Department of Computer Science at the University of York, present a two-stream temporal transformer designed to extract both spatial and temporal information from video footage. Their research shows how transformer networks, known for their self-attention mechanisms, can identify and relate key features in both the video content and the optical flow that represents its movement. The approach achieves excellent results on standard human activity datasets, potentially advancing the field of video understanding and opening the way to more sophisticated video-based technologies.

Joint Optical Flow and Temporal Transformers excel at video action classification

Scientists have demonstrated a new two-stream transformer video classifier that extracts spatial and temporal information from both the video content and its optical flow. The research introduces a method for identifying self-attention features across the joint optical flow and temporal frame domains and for representing their relationships within a transformer encoder. The team reports excellent classification results on three well-known video datasets of human activities. This work offers a new approach to motion representation, which is important for applications ranging from action recognition to robotic guidance systems.

The study presents a transformer-based architecture that fuses scene and movement features from video through a self-attention mechanism. The researchers leveraged the intrinsic ability of transformers to detect similarities in data, jointly modelling video features derived from the frame content and from the corresponding optical flow. According to the authors, this captures the nuances of motion and improves the accuracy of video action classification. The model first extracts features from both the raw frames and the computed optical flow, which represents movement information and is critical for discerning actions within video sequences.
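
To make the fusion step concrete, here is a minimal, dependency-free Python sketch of single-head scaled dot-product self-attention applied to one joint sequence holding both frame tokens and optical-flow tokens. It illustrates the general mechanism under stated assumptions and is not the authors' implementation: the token values, the width of 2 and the identity query/key/value projections are made up (in a trained model the projections are learned), and the paper's actual number of heads and layer sizes are not given in this summary.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention.

    Returns the attended tokens and the (n x n) attention weights, where
    weights[i][j] is how strongly token i draws on token j.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(wq[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy joint sequence (hypothetical values): 2 frame tokens, then 2 flow tokens.
frame_tokens = [[1.0, 0.0], [0.9, 0.1]]
flow_tokens = [[0.0, 1.0], [0.1, 0.8]]
joint = frame_tokens + flow_tokens

identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
out, weights = self_attention(joint, identity, identity, identity)
```

Each row of `weights` sums to 1. Entries that link a frame token to a flow token (for example `weights[0][2]`) are where relationships between scene and motion can be expressed, because both streams sit in the same sequence.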

This methodology contrasts with traditional video classification techniques that rely on Convolutional Neural Networks (CNNs), and with more recent multi-stream approaches built on ResNets. These methods have produced good results on benchmark datasets such as UCF101, HMDB51, Kinetics-400, and Something-Something V2; the team reports that its transformer-based approach outperforms them on the human activity datasets it was evaluated on. The results indicate that a transformer encoder is effective at identifying and representing relationships between spatial and temporal features, offering a more robust and accurate method for video analysis. Using neural networks for optical flow estimation also improves performance compared with traditional hand-crafted methods.

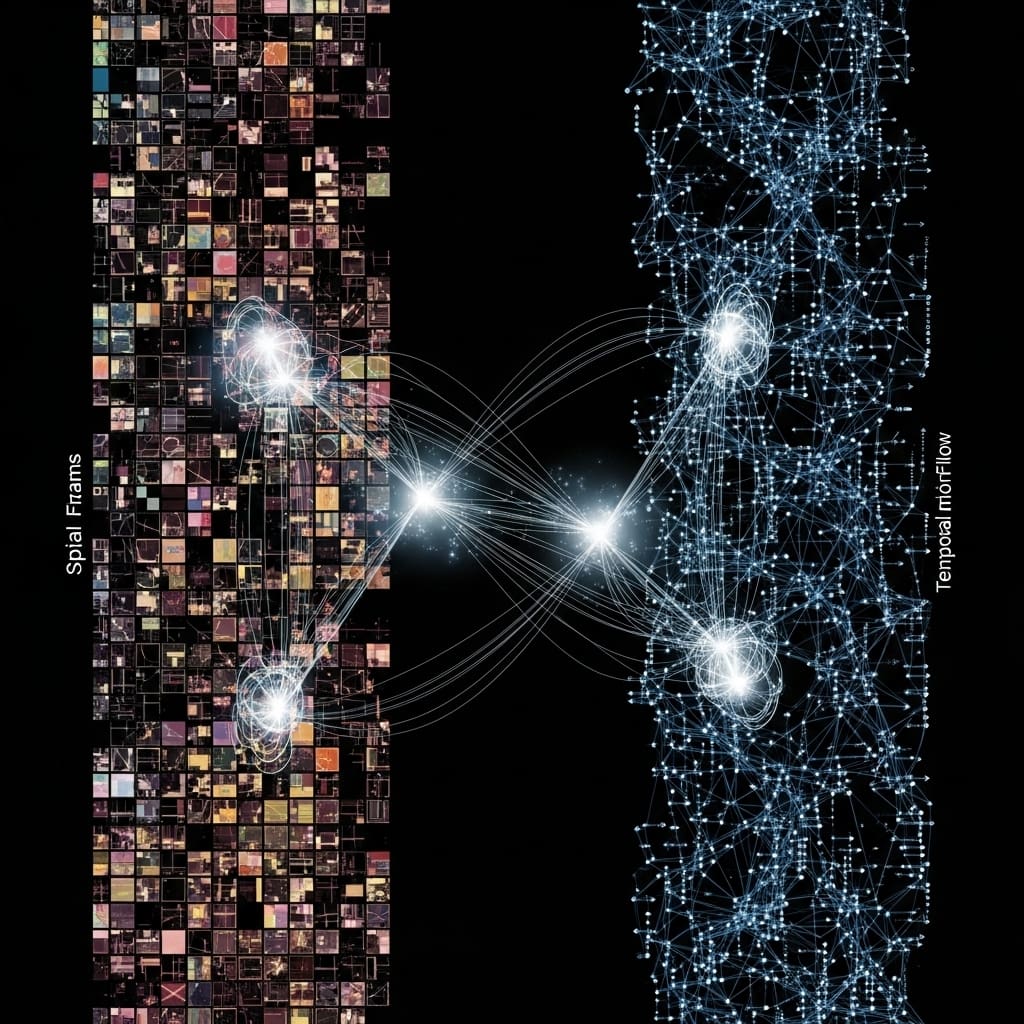

The core of this work is the two-stream temporal transformer illustrated in Figure 1, in which raw frames and optical flow are fed into a transformer neural network. The extracted features are processed by the transformer encoder, and classification is performed by a Multi-Layer Perceptron (MLP). This streamlined pipeline extracts and uses representation features efficiently for video classification, addressing the challenges posed by variable video capture conditions and the need for substantial training resources. The research could open up possibilities for real-time video analysis and for intelligent systems that interpret complex human actions more precisely.
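
How the two streams become a single encoder input is not spelled out in this summary. One common pattern, sketched below under that assumption, is to project each stream's features to a shared width, add a stream-specific embedding so the encoder can tell frame tokens from flow tokens, and concatenate the results. The helper names (`linear`, `build_joint_sequence`), the projection weights and the embedding values are all hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, w: Matrix) -> Vector:
    """Project a feature vector x (length k) with a (k x d) weight matrix."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def build_joint_sequence(frame_feats: List[Vector], flow_feats: List[Vector],
                         w_frame: Matrix, w_flow: Matrix,
                         frame_embed: Vector, flow_embed: Vector) -> List[Vector]:
    """Project both streams to a shared width d, add a stream embedding to every
    token, and concatenate frame tokens followed by flow tokens."""
    frame_tokens = [[a + e for a, e in zip(linear(f, w_frame), frame_embed)]
                    for f in frame_feats]
    flow_tokens = [[a + e for a, e in zip(linear(f, w_flow), flow_embed)]
                   for f in flow_feats]
    return frame_tokens + flow_tokens


# Toy inputs: 3 frame features of width 3 and 2 flow features of width 2,
# both projected to a shared width d = 2.
frame_feats = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
flow_feats = [[1.0, 0.0], [0.0, 1.0]]
w_frame = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 x 2, hypothetical
w_flow = [[0.5, 0.0], [0.0, 0.5]]               # 2 x 2, hypothetical
sequence = build_joint_sequence(frame_feats, flow_feats, w_frame, w_flow,
                                frame_embed=[0.1, 0.0], flow_embed=[0.0, 0.1])
```

The example uses three frame features but only two flow features because optical flow computed between consecutive frames yields one field fewer than there are frames; whether the paper pairs, pads or samples the two streams differently is not stated here.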

Two-Stream Transformers for Spatio-Temporal Video Analysis

Scientists developed a two-stream transformer video classifier to improve video understanding and action recognition. The method extracts spatio-temporal information from the video content together with movement information from the optical flow, capturing a more complete view of video dynamics. A transformer encoder identifies self-attention features across the combined optical flow and temporal frame domains and represents the relationships between these features to improve classification accuracy. The system processes video through two distinct streams: one analyses the raw frame content, and the other processes the estimated optical flow, which reveals motion patterns.

The optical flow was computed with state-of-the-art neural networks, which outperform traditional hand-crafted methods for motion estimation. The team then fused the two streams, feeding both frame features and optical flow features into a transformer neural network for joint processing. This lets the model detect similarities within the data intrinsically, using the transformer's self-attention mechanism to model video features efficiently. The method was evaluated on three well-known video datasets: UCF101, HMDB51, and Kinetics-400.
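
For intuition about what the flow stream encodes, the sketch below estimates the motion of one image block with classical block matching: it tries small integer displacements and keeps the one with the lowest sum of absolute differences. This is a deliberately simple hand-crafted method of the kind the neural estimators are reported to outperform, not the flow network used in the paper; it yields only integer, per-block motion and ignores occlusions and sub-pixel movement. The function name `block_flow` and its `block` and `radius` parameters are illustrative.

```python
from typing import List, Tuple

Image = List[List[float]]


def block_flow(prev: Image, nxt: Image, y: int, x: int,
               block: int = 2, radius: int = 1) -> Tuple[int, int]:
    """Estimate the integer displacement (dy, dx) of the block whose top-left
    corner is (y, x) in `prev`, by minimising the sum of absolute differences
    against candidate blocks in `nxt`."""
    h, w = len(prev), len(prev[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            ty, tx = y + dy, x + dx
            # Skip candidates that would fall outside the next frame.
            if ty < 0 or tx < 0 or ty + block > h or tx + block > w:
                continue
            cost = sum(abs(prev[y + i][x + j] - nxt[ty + i][tx + j])
                       for i in range(block) for j in range(block))
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


# Toy 5 x 5 frames: a bright 2 x 2 square moves one pixel to the right.
prev = [[0.0] * 5 for _ in range(5)]
nxt = [[0.0] * 5 for _ in range(5)]
for i in (1, 2):
    for j in (1, 2):
        prev[i][j] = 1.0
        nxt[i][j + 1] = 1.0

print(block_flow(prev, nxt, 1, 1))  # (0, 1): no vertical motion, one pixel right
```

A neural estimator instead predicts a dense, sub-pixel flow vector for every pixel, which gives a much richer motion signal than this per-block search.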

The features extracted from both streams are passed to the transformer encoder, which learns to represent the relationships between spatial and temporal information. After the encoder, a Multi-Layer Perceptron (MLP) assigns the video to one of the predefined action categories. With this configuration the model achieves excellent classification results, demonstrating its effectiveness at recognising human activities in video sequences. According to the study, the transformer-based model outperforms Convolutional Neural Networks (CNNs) on standard video benchmarks.
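
The classification stage can be sketched as follows, again in plain Python with made-up weights. The summary does not say how the encoder's output tokens are reduced to a single vector, so this sketch assumes mean pooling (a learned class token is a common alternative), followed by a two-layer MLP with a ReLU and a softmax over three hypothetical action classes.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Fully connected layer: x (length k) times W (k x m) plus bias b (length m)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def softmax(x: Vector) -> Vector:
    m = max(x)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in x]
    s = sum(exps)
    return [e / s for e in exps]


def classify(encoded: List[Vector], w1: Matrix, b1: Vector,
             w2: Matrix, b2: Vector) -> Vector:
    """Mean-pool the encoder's output tokens, then apply a two-layer MLP
    (dense -> ReLU -> dense) and return class probabilities."""
    n = len(encoded)
    pooled = [sum(tok[j] for tok in encoded) / n for j in range(len(encoded[0]))]
    return softmax(dense(relu(dense(pooled, w1, b1)), w2, b2))


# Toy encoder output: 4 tokens of width 2; 3 hypothetical action classes.
encoded = [[1.0, 0.0], [0.8, 0.2], [0.6, 0.4], [0.6, 0.4]]
w1 = [[1.0, 0.0], [0.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0]]
b2 = [0.0, 0.0, 0.5]
probs = classify(encoded, w1, b1, w2, b2)
predicted = max(range(len(probs)), key=probs.__getitem__)
```

With these toy values the pooled vector is approximately [0.75, 0.25], the logits are approximately [1.5, 0.5, 0.5], and class 0 receives the highest probability.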

By jointly modelling video features from content and movement via self-attention, the research represents a notable advance in video action classification. The method improves performance by integrating spatial and temporal information effectively, addressing the challenges posed by variable video capture conditions and the need for substantial training resources. The results highlight the potential of transformer-based architectures for future video analysis applications.

👉 More information

🗞 Two-Stream temporal transformer for video action classification

🧠 ArXiv: https://arxiv.org/abs/2601.14086