Unmanned aerial vehicles (UAVs) still struggle to navigate complex environments from natural language instructions, a limitation that researchers Chih Yao Hu, Yang-Sen Lin, and Yuna Lee address with a framework called See, Point, Fly (SPF). The system achieves universal UAV navigation without requiring any training, in contrast to existing methods that treat the task as a text generation problem. SPF instead treats instruction following as a spatial grounding task: it translates vague commands into a series of waypoints annotated on images, then converts these into 3D movement commands for the UAV. SPF sets a new state of the art in simulated environments, surpassing the previous best method by 63%, and also outperforms competing systems in real-world evaluations, paving the way for more intuitive and versatile drone operation.

Drone Navigation via Large Language Models

This research details a system for autonomous drone navigation built on Large Language Models (LLMs), specifically vision-language models (VLMs) that accept images alongside text. The core idea is to let drones understand instructions given in natural language and translate them into precise flight commands. Experiments covered navigation, obstacle avoidance, long-horizon planning, reasoning, and search/follow tasks, in both simulated and real-world environments. The results indicate that these models can effectively control drones, interpreting instructions and guiding flight paths.

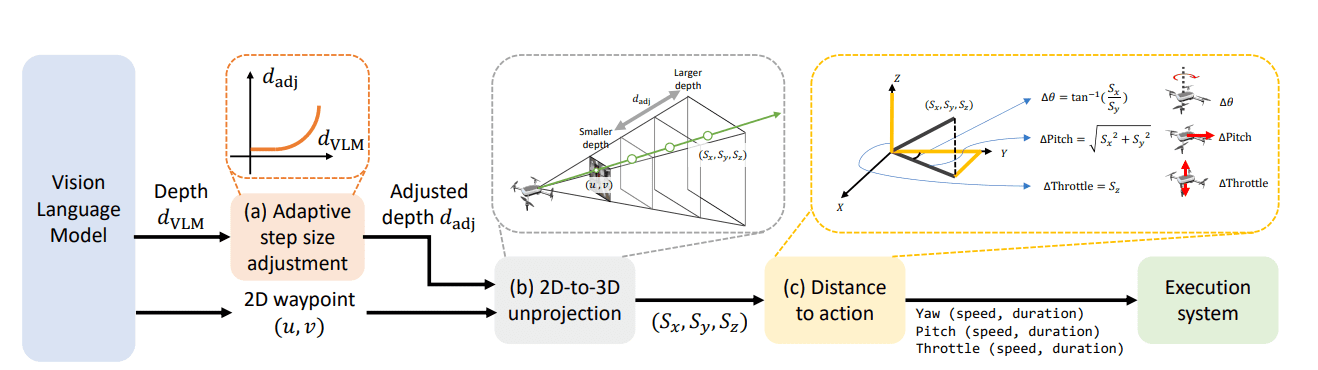

A key finding is that an adaptive step-size controller significantly improves task completion time and success rates compared to a fixed-step-size controller, suggesting that adjusting the drone’s movement to the environment and task is crucial for efficient navigation. The choice of Gemini 2. SPF reframes action prediction not as text generation but as the annotation of 2D waypoints directly onto input images, harnessing the VLM’s inherent spatial understanding. SPF achieves high success rates, reaching 93.9% in simulated tasks and 92.7% in real-world scenarios, while remaining adaptable to different VLMs and compatible with standard hardware.
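To illustrate the difference between a fixed and an adaptive step size, here is a minimal sketch. It assumes the VLM returns a coarse distance label between 1 and `num_levels` for each waypoint; the function name `adaptive_step`, the power-law scaling, and every default value are hypothetical choices made for illustration and are not details given in the article.

```python
def adaptive_step(depth_label, num_levels=10, max_step=2.0, min_step=0.1, exponent=2.0):
    """Map a coarse distance label to a travel distance in metres.

    All parameters are illustrative assumptions:
    depth_label -- integer-like label from the VLM, larger means farther away
    num_levels  -- number of distinct labels the VLM may return
    max_step    -- distance flown when the target looks farthest away
    min_step    -- lower bound so the drone always makes some progress
    exponent    -- values above 1 shrink steps sharply near the target
    """
    # Normalise the label to [0, 1] and guard against out-of-range values.
    ratio = min(max(depth_label / num_levels, 0.0), 1.0)
    # Non-linear scaling: long steps in open space, short steps up close.
    return max(min_step, max_step * ratio ** exponent)


def fixed_step(_depth_label, step=0.5):
    """Baseline for comparison: the same distance regardless of the scene."""
    return step
```

Under these assumptions, a far label such as 10 yields the full 2.0 m step, while a near label such as 1 is clamped to 0.1 m, so the drone slows down as it approaches the target instead of overshooting.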

The method prompts a vision-language model (VLM) to identify key locations within an image based on a given instruction, marking 2D waypoints that represent the desired flight path. This decomposes vague instructions into iterative waypoint annotations made directly on images. The waypoints, grounded in the visual scene, are then transformed into 3D displacement vectors that serve as action commands for the UAV. SPF adaptively adjusts the travel distance between waypoints and replans continuously, which lets it handle dynamic environments and track moving targets. Experiments show that SPF sets a new state of the art on a challenging simulation benchmark, surpassing the previous best method by a margin of 63%.
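One common way to turn an image waypoint into a 3D displacement is pinhole back-projection, sketched below. The article does not state which camera model SPF uses, so the pinhole model, the square-pixel assumption, the function name `waypoint_to_displacement`, and the idea of supplying the travel distance as a `depth` argument are all assumptions made for illustration.

```python
import math


def waypoint_to_displacement(u, v, depth, width, height, hfov_deg):
    """Back-project pixel (u, v) to a 3D offset in the camera frame.

    Returns (right, down, forward) in the same unit as `depth`.
    Assumes an ideal pinhole camera with square pixels and the
    principal point at the image centre (illustrative assumptions).
    """
    # Focal length in pixels, derived from the horizontal field of view.
    focal = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    cx, cy = width / 2.0, height / 2.0
    # Scale the normalised image offsets by how far forward we intend to fly.
    right = (u - cx) / focal * depth
    down = (v - cy) / focal * depth
    forward = depth
    return (right, down, forward)
```

With a 640x480 image and a 90 degree horizontal field of view, a waypoint at the image centre maps to pure forward motion, while a waypoint at the right edge maps to a sideways offset roughly equal to the forward distance.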

Real-world evaluations further confirm SPF’s effectiveness. The system frames UAV control as a structured visual grounding task in which the VLM predicts a probability distribution over feasible waypoint plans. This bypasses the need for task-specific training data and complex policy optimization. Because the system runs as a closed loop, UAVs can track moving targets in dynamic environments.
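The closed-loop behavior can be pictured as a loop that replans from a fresh camera frame on every step. The sketch below is a simplified illustration, not the authors' implementation: `get_frame`, `plan_waypoint`, and `send_displacement` are hypothetical callables standing in for the camera, the VLM query plus waypoint conversion, and the flight controller.

```python
def run_closed_loop(get_frame, plan_waypoint, send_displacement, max_steps=50):
    """Observe, plan, and move repeatedly until the planner reports completion.

    get_frame         -- returns the latest camera image (hypothetical)
    plan_waypoint     -- maps an image to a 3D displacement, or None when
                         the task is judged complete (hypothetical)
    send_displacement -- forwards the displacement to the drone (hypothetical)

    Returns the number of movement commands that were issued.
    """
    for step in range(max_steps):
        # Always plan from a new observation so a moving target is re-located.
        frame = get_frame()
        displacement = plan_waypoint(frame)
        if displacement is None:
            return step
        send_displacement(displacement)
    # Safety cap reached without the planner signalling completion.
    return max_steps
```

Because no plan is carried over between iterations, an error in one prediction can be corrected by the next, which is what allows this kind of loop to follow a target that keeps moving.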

The lightweight adaptive controller compensates for latency inherent in vision-language model processing, ensuring smooth flight paths. Researchers acknowledge limitations stemming from inaccuracies in vision-language models, particularly with small or distant targets, and the potential for imprecise depth estimation. Future work will focus on improving the robustness of perception, refining grounding mechanisms, reducing system latency, and exploring strategies for more efficient exploration.

👉 More information

🗞 See, Point, Fly: A Learning-Free VLM Framework for Universal Unmanned Aerial Navigation

🧠 ArXiv: https://arxiv.org/abs/2509.22653