The Turing Machine is a cornerstone concept, introduced by British mathematician and computer scientist Alan Turing in the mid-20th century. This abstract device, capable of performing any computation possible by a modern computer, served as a foundation for understanding the limits and potentials of computational processes.

One of Turing’s intriguing problems is the P vs NP problem, which questions whether there exists a polynomial-time algorithm for solving NP-complete problems. Despite decades of research, no solution has been discovered, highlighting the complexity of computability theory and the practical challenges in solving these hardest problems using current algorithms and technology.

Another challenge Turing presented was the Halting Problem, which asks whether it is possible to determine if a given program will run forever or eventually halt. Turing proved that no general algorithm can solve this problem for all programs, underscoring the complexity of computability theory and the inherent limitations in our understanding of what is effectively calculable.

The Church-Turing Thesis, a fundamental principle in computability theory, states that a Turing Machine can compute any effectively calculable function, yet the boundary between what is effectively calculable and what is not remains unclear. This raises questions about the capabilities of quantum computers and whether they can solve problems beyond the reach of classical computers, a topic still under debate among researchers.

Another contribution from Turing, the Turing Test, serves as an essential benchmark for evaluating the capabilities of AI systems today. It measures a machine’s ability to imitate human conversation, providing insights into the development and progress of artificial intelligence. Despite significant advancements in computer science, mathematics, and artificial intelligence since Turing’s time, his ideas continue to inspire researchers and practitioners in these fields, underscoring the enduring relevance of his work on computability theory and the Turing Machine.

Alan Turing’s Life And Achievements

In computational theory, few figures loom as large as Alan Turing, the British mathematician whose groundbreaking work in the mid-20th century continues to shape our understanding of computation and artificial intelligence today. Born on June 23, 1912, in London, England, Turing’s life was marked by a profound intellect and an unyielding curiosity that led him to make seminal contributions to the fields of mathematics and computer science.

Turing is best known for his development of the Turing Machine, a theoretical device designed to mimic any other abstract machine capable of following instructions. This thought experiment, introduced in 1936, laid the foundations for modern computability theory and provided a formal definition of what is now known as an algorithm. The Turing Machine remains a cornerstone of computer science education and serves as a testament to Turing’s genius.

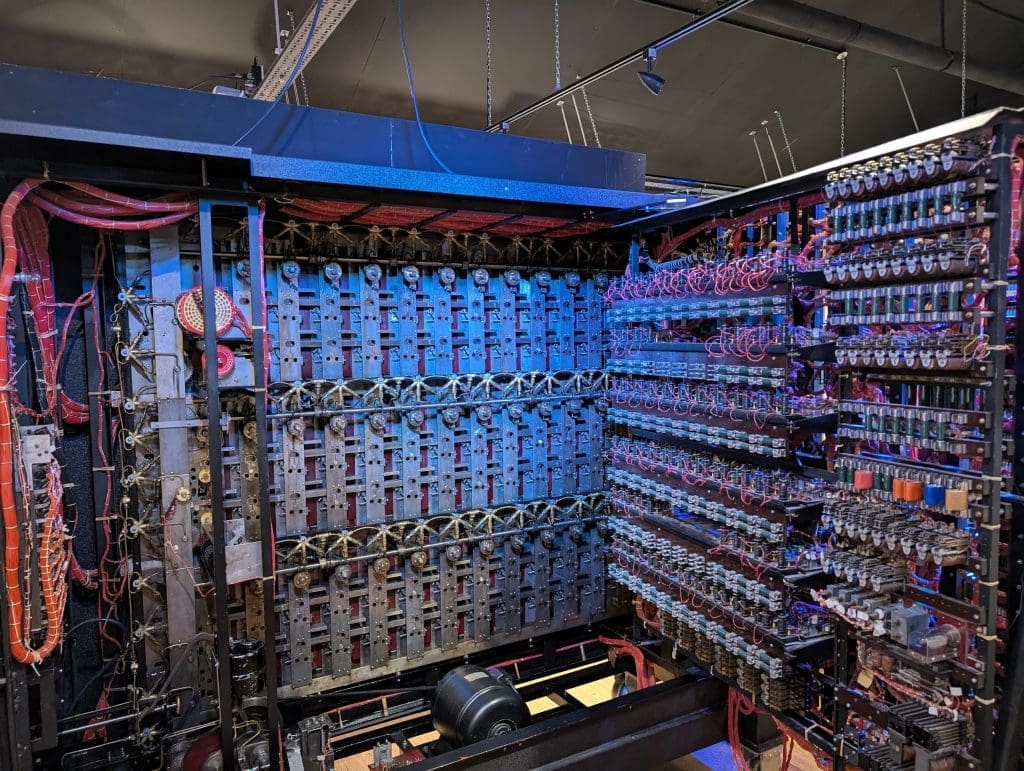

In addition to his work on the Turing Machine, Turing made significant contributions to cryptography during World War II. Working at Bletchley Park, he played a crucial role in breaking the German Enigma code, a highly sophisticated encryption system used by the German military. His work at Bletchley Park is estimated to have shortened the war by two to four years and saved millions of lives.

Turing’s life was tragically cut short when he was convicted of homosexuality in 1952, a crime under British law at the time. He was given a choice between prison and chemical castration; Turing chose the latter. Two years later, he died from cyanide poisoning, which is believed to have been self-inflicted. In 2013, Queen Elizabeth II granted Turing a posthumous royal pardon, acknowledging the injustice that had been done to him.

Today, Alan Turing’s legacy lives on through his groundbreaking work in mathematics and computer science. His contributions have shaped the course of technological development and continue to inspire generations of scientists and engineers. As we look towards the future of artificial intelligence and computational theory, it is essential to remember the man who first defined what it means for a machine to compute.

The Concept Of Computable Numbers

The concept of computable numbers, a fundamental pillar in the field of computer science, traces its roots to the groundbreaking work of Alan Turing and his Turing Machine. In essence, computable numbers are real numbers that can be computed by a Turing Machine within a finite number of steps.

Turing Machines, introduced in 1936, are abstract machines that manipulate symbols on a strip of tape according to a set of rules. They serve as the theoretical foundation for modern-day computers and provide a framework for understanding computability. The concept of computable numbers is closely tied to this machine, as it defines the realm of numbers that can be processed by such a device.

Computable numbers are not limited to integers or rational numbers but encompass a broader set. Any real number that can be approximated with arbitrary precision using a finite sequence of rational numbers is considered computable. This includes algebraic numbers, such as the square root of two, and even some transcendental numbers, like pi.

Another influential concept in computer science is the Church-Turing thesis, which posits that any effectively calculable function can be computed by a Turing Machine. This thesis strengthens the notion that computable numbers represent the boundary between what is computationally feasible and what lies beyond.

It’s essential to note that not all real numbers are computable. Numbers that cannot be approximated by a finite sequence of rational numbers, such as Chaitin’s constant or random real numbers, fall outside the realm of computability. These numbers are said to be “non-computable” or “transcendent.”

In summary, computable numbers provide a mathematical framework for understanding what can and cannot be computed by a Turing Machine. This concept has profound implications for computer science, mathematics, and our understanding of the limits of computation.

Turing Machine Model Explanation

The Turing Machine, conceived by the British mathematician Alan Turing in 1936, is a fundamental concept in computer science and mathematics that defines computability. This thought experiment serves as a model for any mechanical general-purpose computing device.

The Turing Machine consists of a tape divided into cells, each containing a symbol from a finite alphabet. A read/write head moves along the tape, reading and writing symbols according to a set of rules in a table called the transition function. The machine can be in one of infinitely many states, which also affect its actions.

Turing demonstrated that any algorithmic process could be simulated by a Turing Machine, thereby establishing the Church-Turing thesis. This thesis asserts that any effectively calculable function is computable by a Turing Machine, thus defining the boundary between what can and cannot be computed by an algorithm.

The Turing Machine’s simplicity belies its profound impact on modern computing. It laid the foundation for the development of practical computers, such as the ENIAC (Electronic Numerical Integrator And Computer), which was completed in 1945. Today, the principles of the Turing Machine are still relevant, with concepts like Turing completeness being used to determine the computational power of programming languages and systems.

The Turing Machine’s influence extends beyond computer science. It has been applied in various fields, including linguistics, philosophy, and biology, where it helps understand the limits of automation and computation. For instance, in biology, the concept of a Turing Machine is used to model pattern formation and self-replication in biological systems.

Universal Turing Machine Concept

The Universal Turing Machine, a theoretical construct proposed by the British mathematician Alan Turing in 1936, is a cornerstone of modern computer science and computability theory. This machine, an abstract model of a mechanical device capable of performing any well-defined computation, is named after its creator and provides a foundation for understanding the limits and capabilities of computation.

The Universal Turing Machine consists of a tape divided into cells, each containing a symbol from a finite alphabet. A read/write head moves along this tape, reading and writing symbols according to a set of rules encoded in the machine’s state table. The machine can be configured to perform any computation that is algorithmically solvable by transitioning between states based on the current symbol under the head and the action specified in the state table.

One of the most significant implications of the Universal Turing Machine is the Church-Turing thesis, which posits that any effectively calculable function can be computed by a Turing machine. This thesis unifies various models of computation, such as lambda calculus and recursive functions, under the umbrella of Turing machines, thereby providing a coherent framework for understanding computability.

Another crucial aspect of the Universal Turing Machine is its role in demonstrating the existence of unsolvable problems. By showing that there exists a Turing machine that can simulate any other Turing machine, Turing proved that there are problems that no Turing machine can solve in a finite amount of time. This result, known as the halting problem, has far-reaching implications for computer science and mathematics, as it highlights the inherent limitations of computation.

The Universal Turing Machine continues to be an essential tool for understanding the foundations of computer science and computability theory. Its abstract nature allows for a wide range of applications, from theoretical studies of computational complexity to practical implementations in digital computers. As we continue to push the boundaries of what is computationally possible, the Universal Turing Machine remains a guiding light, illuminating the path towards understanding the limits and potential of computation.

Church-Turing Thesis And Its Implications

The Church-Turing Thesis, proposed by the pioneering mathematicians Alonzo Church and Alan Turing in the mid-20th century, has profoundly shaped the field of computer science. This thesis posits that a Turing Machine can compute any effectively calculable function, thereby defining the boundaries of computability.

A Turing Machine, named after Alan Turing, is an abstract model of a mechanical computing device. It consists of a tape divided into cells, a read-write head, and a finite set of states. The machine reads a symbol on the cell under the head, performs a transition to another state, and writes a new symbol while moving one cell to the left or right.

The Church-Turing Thesis extends this concept by suggesting that a Turing Machine can simulate any algorithmic process. This implies that there is no fundamental difference between computational problems that can be solved by a machine and those that cannot. In essence, it sets the limits of what is computationally possible.

The implications of the Church-Turing Thesis are far-reaching. It underpins the design of modern computers and programming languages, as they are all based on the principles of Turing Machines. Moreover, it has led to the development of complexity theory, which studies the resources required to solve computational problems.

However, the Church-Turing Thesis is not a mathematical theorem but an informal hypothesis. Despite numerous attempts to prove or disprove it, no definitive proof has been found. Yet, its practical relevance and the lack of counterexamples have led most computer scientists to accept it as a fundamental truth.

Early Algorithms And Their Role In Turing’s Work

The concept of an algorithm can be traced back to ancient Greece, where mathematicians like Euclid used step-by-step procedures to solve mathematical problems. However, it was Turing who formalized the notion of an algorithm in the context of computability. In his seminal paper “On Computable Numbers, with an Application to the Entscheidungsproblem” , Turing introduced a set of rules that could be followed mechanically to solve any mathematical problem that a human could conceive.

These early algorithms were designed to manipulate symbols on a tape according to a specific set of instructions, known as the transition function. The transition function dictated how the machine would move its read/write head and change the symbols on the tape based on the current state and the symbol under the head. This simple yet powerful mechanism allowed Turing’s machine to simulate any conceivable computation.

Turing’s work on early algorithms was instrumental in defining the limits of computability, as well as providing a practical framework for understanding how computers could be designed and programmed. His ideas laid the groundwork for the development of modern digital computers and continue to influence research in computer science and artificial intelligence today.

Turing’s work on early algorithms was further refined by subsequent researchers, leading to the creation of more efficient and powerful computing models. For instance, John von Neumann’s architecture, which is still the basis for most modern computers, built upon Turing’s ideas by introducing the concept of a stored program and separating the memory and processing units.

In conclusion, Alan Turing’s development of early algorithms played a crucial role in shaping the field of computational theory and paved the way for the creation of modern digital computers. His work continues to inspire researchers and practitioners in computer science and artificial intelligence today.

Turing’s Test: Artificial Intelligence Measurement

The Turing Test is based on a simple premise: if a machine can convincingly imitate a human in a natural language conversation, then it can be considered intelligent. However, this test raises several questions about what constitutes intelligence and how it can be accurately measured.

One of the key challenges lies in the subjectivity of human judgment. The test relies on human evaluators to determine whether a machine’s responses are indistinguishable from those of a human. This introduces a degree of variability, as different evaluators may have different interpretations and expectations.

To address this issue, researchers have proposed variations of the Turing Test, such as the Diagnostic Test and the Reflective Test. The Diagnostic Test focuses on a machine’s ability to diagnose its own errors, while the Reflective Test assesses a machine’s capacity for self-awareness and introspection.

Despite these advancements, the Turing Test remains a controversial topic in AI research. Critics argue that it fails to capture certain aspects of human intelligence, such as creativity, common sense, and emotional intelligence. They propose alternative tests, like the Loebner Prize, which focus on these areas.

In conclusion, the Turing Test continues to be a significant milestone in the field of AI, serving as a touchstone for measuring machine intelligence. However, its limitations and controversies underscore the complexities involved in defining and quantifying human-like intelligence in machines.

Historical Context Of Turing Machine Development

The Turing Machine was born out of Turing’s quest to address the Entscheidungsproblem, or the decision problem, posed by German mathematician David Hilbert. Turing aimed to determine whether there existed a general algorithm for solving all mathematical problems that could be expressed within formal systems.

The Turing Machine was designed as a simple abstract machine capable of manipulating symbols on a tape according to a table of rules, or transition function. This device could simulate any conceivable computer by using a finite number of states and a set of instructions. In essence, the Turing Machine served as a universal computing model, demonstrating that all computational problems could be reduced to a series of symbol manipulations.

The significance of the Turing Machine extends beyond its role in defining computability. It also played a crucial part in the development of modern cryptography and computer science. Turing’s work on the Enigma machine during World War II, for instance, contributed significantly to the Allied forces’ ability to decipher encrypted German communications.

The Turing Machine’s influence continues to be felt today, as it forms the basis for understanding the limits and capabilities of computation. Its principles underpin the design of contemporary computers, algorithms, and programming languages, making it an indispensable cornerstone in the field of computer science.

Impact On Modern Computing And Technology

The Turing Machine, introduced in 1936, defined the concept of computability, outlining the fundamental principles that govern the processing of information in a machine. This thought experiment paved the way for the creation of practical computing devices, such as the first electronic computer, ENIAC, which was built in the late 1940s.

The Turing Machine’s impact on modern technology extends beyond computers. Its principles have been instrumental in the development of algorithms, programming languages, and artificial intelligence (AI). Algorithms, a series of instructions that a computer follows to solve a problem or perform a task, are essentially an extension of the Turing Machine’s operation.

Furthermore, the Turing Machine has played a crucial role in the development of AI. The concept of computability, as defined by the Turing Machine, forms the basis for the development of decision-making algorithms used in AI systems. These algorithms enable AI to process and analyze vast amounts of data, learn from it, and make decisions based on that learning.

Lastly, the Turing Machine has also influenced the field of cryptography. The concept of unbreakable codes, as proposed by Turing, led to the development of modern encryption techniques used to secure digital communications and protect sensitive information.

Unsolved Problems In Turing’s Legacy

One such problem is the question of whether there exists a universal algorithm capable of solving all decidable problems. Known as Hilbert’s Entscheidungsproblem, this conundrum was posed by David Hilbert in 1928 and remains unanswered. Turing’s work provided a foundation for understanding the limits of computability, but the existence of such an algorithm remains elusive.

Another unsolved problem lies in the realm of intractable problems. These are problems that can be stated precisely but require an impractical amount of time or resources to solve using current algorithms and technology. The P vs NP problem, first proposed by Stephen Cook in 1971, is a prime example. This problem asks whether there exists a polynomial-time algorithm for solving NP-complete problems, which are the hardest problems in the NP class. If such an algorithm could be found, it would revolutionize computational theory, but despite decades of research, no solution has been discovered.

The Church-Turing Thesis, a fundamental principle in computability theory, also raises questions. This thesis states that any effectively calculable function can be computed by a Turing Machine. However, the boundary between what is effectively calculable and what is not remains unclear. For instance, the question of whether quantum computers can solve problems beyond the reach of classical computers is still a topic of debate.

The Halting Problem, first posed by Turing himself, further illustrates the complexity of computability theory. This problem asks whether it is possible to determine if a given program will run forever or eventually halt. Turing proved that no general algorithm can solve this problem for all programs, but the implications of his proof continue to resonate in modern computer science.

Lastly, the question of whether there exists a universal programming language remains unanswered. While many programming languages have been developed, none has emerged as truly universal, capable of expressing every conceivable algorithm. This problem highlights the inherent limitations of our current understanding of computability and suggests that new insights are still needed to fully grasp Turing’s legacy.

Turing’s Contributions To Logic And Mathematics

In 1936, Turing published a seminal paper titled “On Computable Numbers, with an Application to the Entscheidungsproblem” in the Proceedings of the London Mathematical Society. In this paper, he introduced the concept of a Turing Machine, a theoretical device capable of performing any computation that can be carried out by a modern computer. The machine consists of a tape divided into squares, each containing a symbol from a finite alphabet, and a read/write head that moves along the tape according to a set of rules.

Turing’s work on computability theory also introduced the concept of an “effective procedure,” which is a method for solving a problem using a finite number of well-defined steps. This idea was crucial in defining what problems can be solved by a computer and what problems are inherently unsolvable.

In 1937, Turing published another influential paper titled “Systems of Logic Based on Ordinary Language” in the Proceedings of the London Mathematical Society. In this paper, he introduced the concept of a “Turing Test,” a test for artificial intelligence that measures a machine’s ability to imitate human conversation. The Turing Test remains an important benchmark for evaluating the capabilities of AI systems today.

Turing’s work on computability theory and the development of the Turing Machine have had far-reaching implications for computer science, mathematics, and artificial intelligence. His ideas continue to inspire researchers and practitioners in these fields, and his legacy remains an important part of the history of computing.