A new reference-free framework, DIM-CIM, assesses diversity and generalisation in text-to-image models without needing existing image datasets. Analysis using the COCO-DIMCIM benchmark reveals that increasing model size often reduces default-mode diversity, and demonstrates a strong correlation (0.85) between the diversity of a model's training data and the default-mode diversity of its outputs.

The capacity of text-to-image (T2I) generative models to produce high-fidelity outputs is increasingly well established, yet a critical aspect, the diversity of generated content and its ability to accurately reflect requested attributes, remains a significant challenge. Researchers are now focusing on quantitative methods to assess these qualities without relying on pre-defined image datasets. A new framework, detailed in a paper by Revant Teotia, Candace Ross, Karen Ullrich, Sumit Chopra, Adriana Romero-Soriano, Melissa Hall, and Matthew Muckley, introduces a reference-free evaluation approach termed DIM-CIM (Does-it/Can-it Measurement of Diversity and Generalization). The team, spanning FAIR at Meta (New York and Montreal labs), the Courant Institute of Mathematical Sciences at NYU, and Mila (the Quebec AI Institute), present their work in the article “DIMCIM: A Quantitative Evaluation Framework for Default-mode Diversity and Generalization in Text-to-Image Generative Models”.

Assessing Diversity and Generalisation in Text-to-Image Generation with DIM-CIM

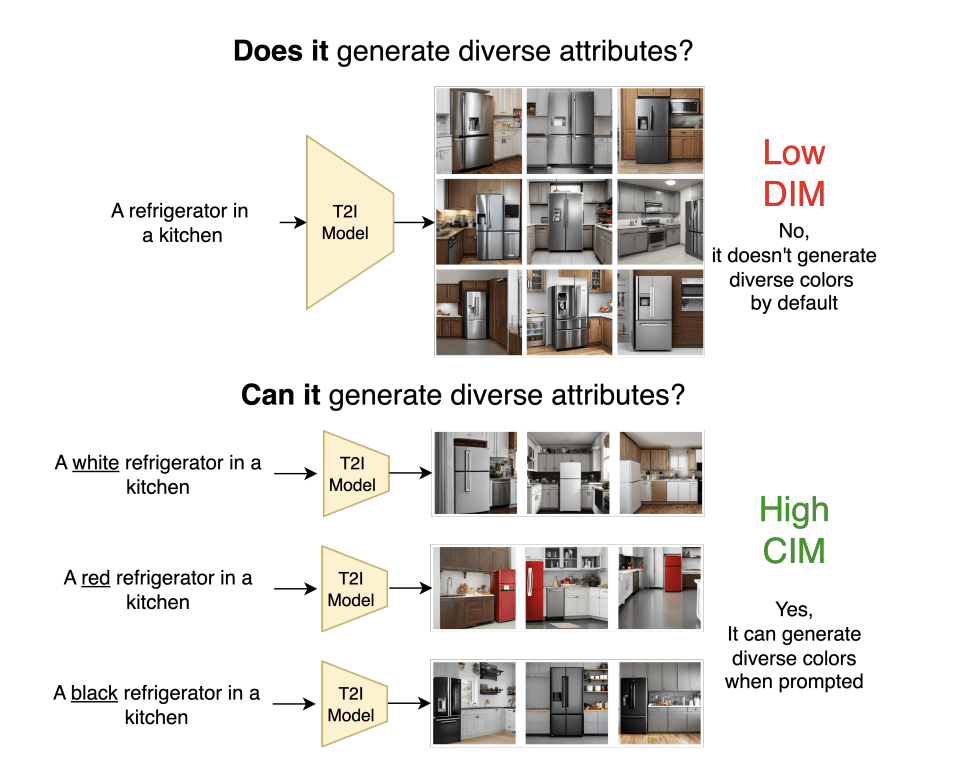

DIM-CIM (Does-it/Can-it) is designed to evaluate the diversity and generalisation capabilities of text-to-image (T2I) generative models. Current automated metrics often rely on comparisons to pre-existing image datasets, or lack the granularity to assess specific facets of diversity, which limits how thoroughly a model can be evaluated. DIM-CIM avoids both limitations with a reference-free approach that asks two separate questions. The "does-it" question measures default-mode diversity: given a generic prompt, does the model generate images with the expected attributes? The "can-it" question measures generalisation: can the model generate diverse attributes for a given concept?

The framework utilises a newly constructed benchmark, COCO-DIMCIM, built upon the established Common Objects in Context (COCO) dataset. This enables systematic testing across a range of attributes including colour, breed, size, age, activity, environment, and accessories. Researchers prompt image generation with variations of a core concept – for example, “a dog on the beach” – to comprehensively evaluate model performance across diverse parameters and identify potential weaknesses. Analysis using COCO-DIMCIM reveals a trade-off between generalisation and default-mode diversity in widely-used T2I models, prompting further investigation into the relationship between model capacity and output characteristics.
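The idea of prompting with variations of a core concept can be illustrated with a short sketch. This is only an illustration, not the way COCO-DIMCIM was actually built: the function name `build_prompts`, the prompt template, and the attribute values are all hypothetical.

```python
from typing import Dict, List


def build_prompts(base_prompt: str, attributes: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return the generic prompt plus one explicit prompt per attribute value.

    The "<prompt>, <type>: <value>" template is a made-up convention for
    illustration; the benchmark's real prompts are not reproduced here.
    """
    explicit = [
        f"{base_prompt}, {attr_type}: {value}"
        for attr_type, values in attributes.items()
        for value in values
    ]
    return {"generic": [base_prompt], "explicit": explicit}


# Hypothetical attribute values for the concept "dog".
dog_attributes = {
    "colour": ["black", "golden"],
    "activity": ["running", "sleeping"],
}

prompts = build_prompts("a dog on the beach", dog_attributes)
for prompt in prompts["generic"] + prompts["explicit"]:
    print(prompt)
```

Images generated from the generic prompt would feed the default-mode diversity measurement, while images generated from the explicit prompts would feed the generalisation measurement.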

Specifically, scaling models from 1.5 billion to 8.1 billion parameters improves generalisation, the ability to generate a wide range of attributes for a concept, but reduces default-mode diversity, the variety of attributes that appears when the prompt leaves them unspecified. This highlights a complex interplay between scale and control. The finding suggests that simply increasing model size does not necessarily improve overall performance, and that default-mode diversity needs to be monitored across attribute types as models grow. DIM-CIM also identifies specific failure cases in which models do not consistently follow explicit instructions.
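To make the two quantities in this trade-off concrete, the sketch below computes simplified stand-ins for them. These are not the metrics defined in the paper: the function names are hypothetical, the use of normalised entropy is an assumption chosen for illustration, and the per-image attribute labels are assumed to come from some separate attribute detector.

```python
import math
from collections import Counter
from typing import List, Optional


def can_it_score(detected: List[Optional[str]], requested: str) -> float:
    """Fraction of images, generated from a prompt that explicitly asks for
    `requested`, in which that attribute value was detected."""
    if not detected:
        return 0.0
    return sum(d == requested for d in detected) / len(detected)


def default_mode_diversity(detected: List[Optional[str]], num_values: int) -> float:
    """Normalised Shannon entropy of attribute values detected in images
    generated from a generic prompt: 0 means a single value dominates
    completely, 1 means all `num_values` values are equally frequent."""
    counts = Counter(d for d in detected if d is not None)
    total = sum(counts.values())
    if total == 0 or num_values < 2:
        return 0.0
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(num_values)


# Made-up detections for illustration only (not results from the paper).
generic_detections = ["golden"] * 9 + ["black"]   # prompt: "a dog on the beach"
explicit_detections = ["black"] * 8 + ["golden"] * 2  # prompt asks for a black dog

print("can-it (black):", can_it_score(explicit_detections, "black"))
print("default-mode diversity:", default_mode_diversity(generic_detections, num_values=2))
```

Under these toy definitions, a model could score well on the first function while scoring poorly on the second, which is the pattern the scaling result describes.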

In some cases, an attribute appears in images generated from generic prompts yet is rarely produced when it is explicitly requested, indicating a weakness in the model's ability to interpret and apply specific instructions. This suggests a need for improved prompt engineering techniques and more refined training strategies to make generated images more precise and reliable. The research also establishes a strong correlation (0.85) between the diversity present in the training images used to build a T2I model and the model's resulting default-mode diversity, emphasising the importance of data quality and representation.
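A correlation of this kind can be computed from paired per-attribute diversity scores. The sketch below uses the Pearson coefficient, which is an assumption, since this article does not say which correlation measure the authors used. The helper name `pearson_r` and the sample numbers are placeholders rather than data from the paper.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Placeholder scores, one pair per attribute (not results from the paper).
training_diversity = [0.20, 0.35, 0.50, 0.65, 0.90]
default_mode_scores = [0.15, 0.40, 0.45, 0.70, 0.80]

print(f"r = {pearson_r(training_diversity, default_mode_scores):.2f}")
```

A value close to 1 would indicate that attributes with more varied training images also tend to appear with more variety in the model's default outputs.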

This work provides a flexible and interpretable tool for evaluating T2I models, enabling researchers to pinpoint specific areas for improvement and assess the impact of training data on the diversity of generated images. By disentangling default-mode diversity from generalisation capacity, DIM-CIM offers a more comprehensive picture of model performance than existing metrics and supports targeted development and optimisation. Because its scores are broken down by attribute, the framework lets researchers move beyond a single aggregate number and see which attributes a model handles well or poorly.

Taken together, the results show that increasing model size alone does not guarantee better performance, and that diversity and control have to be balanced in T2I models. The researchers emphasise that both training data and prompt engineering deserve careful attention.

Future research directions include applying DIM-CIM to other generative models, such as those generating audio or video, and investigating how different training techniques affect model diversity and generalisation. Researchers also plan to develop automated tools that make DIM-CIM easier to use and more accessible to the broader research community.

👉 More information

🗞 DIMCIM: A Quantitative Evaluation Framework for Default-mode Diversity and Generalization in Text-to-Image Generative Models

🧠 DOI: https://doi.org/10.48550/arXiv.2506.05108