A position paper by Eva Memmel, Clara Menzen, Jetze Schuurmans, Frederiek Wesel, and Kim Batselier from Delft University of Technology and Xebia Data draws a connection between tensor networks (TNs) and Green AI. The authors argue that, thanks to their solid mathematical foundation and potential for logarithmic compression, TNs can make AI research more sustainable and inclusive. They review the efficiency metrics used in the Green AI literature and evaluate examples of TNs in kernel machines and deep learning. Finally, they encourage researchers to integrate TNs into their projects and to treat Green AI principles as a research priority.

The Role of Tensor Networks in Green AI

The exponential growth of data and the increasing power of AI algorithms have led to a surge in computational demands. While this growth opens the door to extraordinary opportunities, it also harms economic, social, and environmental sustainability. The energy required to train popular AI models can cause substantial CO2 emissions and high costs. Awareness of this unsustainable trend is growing in the AI community, and several researchers have called for efficiency to be treated as a benchmark of algorithmic progress. This shift is encapsulated in the concept of Green AI.

Tensor Networks: A Tool for Green AI

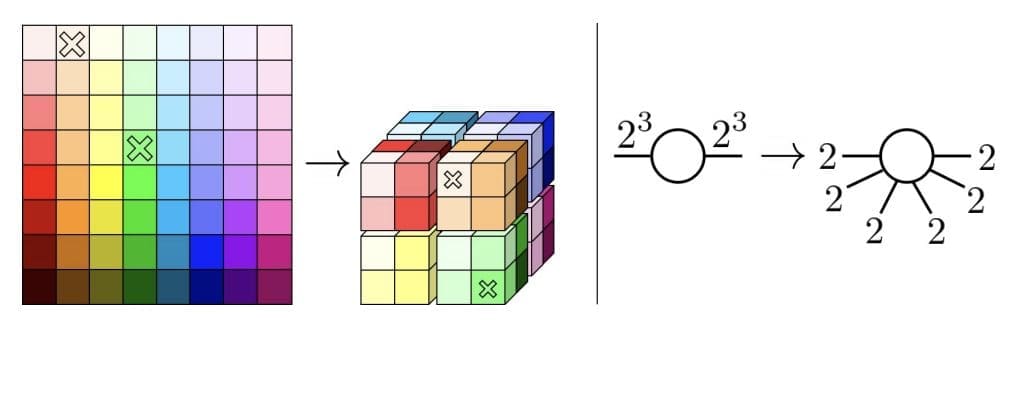

Tensor networks (TNs), an established tool from multilinear algebra, are proposed as a valuable asset for Green AI. Among Green AI strategies, TNs belong to the category of AI model development, which aims to reduce energy consumption during data processing, experimentation, training, and inference. In this category they stand alongside numerous other strategies such as regularization, automated hyperparameter search, pruning, quantization, physics-informed learning, and dropout.

TNs approximate data in a compressed format that preserves the essential information. They often achieve logarithmic compression, meaning that the number of stored parameters can grow only logarithmically with the number of entries in the original data, while still having the potential to reach competitive accuracy. This makes TNs an efficient and sustainable way of representing and handling big data.
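To make "logarithmic compression" concrete, the sketch below (an illustration, not code from the paper) compresses a sampled signal in the tensor-train (TT) format, one of the most widely used TNs, with the standard TT-SVD algorithm. A vector of N = 2^d samples is reshaped into a d-way tensor whose modes all have size 2; each core then stores at most r × 2 × r numbers, so storage scales as O(d r²) = O(r² log N). The sine signal, the grid, the tolerance, and the names `tt_svd` and `tt_to_full` are assumptions chosen for illustration. A sine on a uniform grid is used because it is known to have TT rank 2 in this format; real data will generally need higher ranks or accept some approximation error.

```python
import numpy as np

def tt_svd(tensor, eps=1e-10):
    """Tensor-train decomposition by sequential truncated SVDs (TT-SVD).

    Returns cores G_k of shape (r_{k-1}, n_k, r_k) with r_0 = r_d = 1.
    Singular values below eps * (largest singular value) are discarded.
    """
    shape = tensor.shape
    d = len(shape)
    cores = []
    rank = 1
    remainder = tensor
    for k in range(d - 1):
        # unfold: rows = (previous rank, current mode), columns = all later modes
        mat = remainder.reshape(rank * shape[k], -1)
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        new_rank = max(1, int(np.sum(s > eps * s[0])))
        cores.append(u[:, :new_rank].reshape(rank, shape[k], new_rank))
        # carry the remaining factor S V^T on to the next step
        remainder = s[:new_rank, None] * vt[:new_rank, :]
        rank = new_rank
    cores.append(remainder.reshape(rank, shape[-1], 1))
    return cores

def tt_to_full(cores):
    """Contract all cores back into a full array (for checking only)."""
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    return full.reshape([c.shape[1] for c in cores])

# Hypothetical example data: about one million samples of a sine wave
d = 20
n = 2 ** d
x = np.linspace(0.0, 10.0, n)
signal = np.sin(x)

cores = tt_svd(signal.reshape([2] * d))   # quantized tensor: d modes of size 2
tt_params = sum(core.size for core in cores)
approx = tt_to_full(cores).reshape(-1)
rel_err = np.linalg.norm(approx - signal) / np.linalg.norm(signal)

print("entries in original vector:", n)
print("TT ranks:", [core.shape[2] for core in cores[:-1]])
print("numbers stored in TT cores:", tt_params)
print(f"relative reconstruction error: {rel_err:.1e}")
```

Raising `eps` trades accuracy for smaller ranks, which is the usual knob for balancing compression against fidelity.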

The Impact of Tensor Networks on Green AI

TNs can benefit Green AI through their solid mathematical foundation, their logarithmic compression potential, and their broad applicability across different data formats and model architectures. They can significantly increase the efficiency of data preprocessing, training, and inference. Their influence on computing infrastructure and system-level impacts, however, is limited: beyond lowering hardware requirements, TNs do not change the computing infrastructure itself.
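One way TNs can reduce training and inference cost in deep learning is by replacing a dense weight matrix with a TT-matrix (also called a matrix product operator). The sketch below is an illustration rather than a method taken from the paper: it counts the weights of a 1024 × 1024 layer factorized with modes (4, 4, 4, 4, 4) and TT rank 8 (both arbitrary assumptions), and checks on a small random example that multiplying by the TT-matrix core by core gives the same result as the dense matrix it represents. The helper names `tt_matrix_params`, `random_tt_matrix`, `tt_matvec`, and `tt_to_dense` are hypothetical.

```python
import numpy as np
from math import prod

def tt_matrix_params(out_modes, in_modes, rank):
    """Number of weights in a TT-matrix with a uniform internal rank."""
    d = len(in_modes)
    ranks = [1] + [rank] * (d - 1) + [1]
    return sum(ranks[k] * out_modes[k] * in_modes[k] * ranks[k + 1] for k in range(d))

def random_tt_matrix(out_modes, in_modes, rank, rng):
    """Random cores; core k has shape (r_{k-1}, out_k, in_k, r_k)."""
    d = len(in_modes)
    ranks = [1] + [rank] * (d - 1) + [1]
    return [rng.standard_normal((ranks[k], out_modes[k], in_modes[k], ranks[k + 1]))
            for k in range(d)]

def tt_matvec(cores, x, in_modes):
    """Compute W @ x without forming W, contracting one core at a time."""
    # state axes: (rank, remaining input modes..., output modes produced so far...)
    state = x.reshape(1, *in_modes)
    for core in cores:
        # contract the rank axis and the current input mode
        state = np.tensordot(core, state, axes=([0, 2], [0, 1]))
        # axes are now (out_k, r_k, remaining inputs..., earlier outputs...);
        # move out_k to the end to keep the output modes in order
        state = np.moveaxis(state, 0, -1)
    return state.reshape(-1)

def tt_to_dense(cores):
    """Reconstruct the full matrix (only sensible for small examples)."""
    d = len(cores)
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    # axes: (1, o1, i1, o2, i2, ..., od, id, 1); drop the boundary ranks
    full = full.reshape([s for c in cores for s in c.shape[1:3]])
    # reorder to (o1, ..., od, i1, ..., id)
    full = full.transpose(list(range(0, 2 * d, 2)) + list(range(1, 2 * d, 2)))
    out_dim = prod(c.shape[1] for c in cores)
    in_dim = prod(c.shape[2] for c in cores)
    return full.reshape(out_dim, in_dim)

# Parameter count for a 1024 x 1024 layer
modes = (4, 4, 4, 4, 4)
dense_params = prod(modes) ** 2
tt_params = tt_matrix_params(modes, modes, rank=8)
print(f"dense: {dense_params}, TT-matrix: {tt_params}")

# Correctness check on a small 64 x 64 example
rng = np.random.default_rng(0)
small = (4, 4, 4)
cores = random_tt_matrix(small, small, rank=3, rng=rng)
x = rng.standard_normal(prod(small))
y_tt = tt_matvec(cores, x, small)
y_dense = tt_to_dense(cores) @ x
print("max abs difference:", np.max(np.abs(y_tt - y_dense)))
```

With these choices the factorized layer stores 3,328 weights instead of 1,048,576. Whether such a layer reaches competitive accuracy depends on the task and on the chosen ranks, so the rank is typically treated as a hyperparameter.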

The Sustainability of Tensor Networks

By reducing hardware and computational requirements, TNs can cut costs, making them a more economically sustainable solution. Lower demands also decrease reliance on expensive, potentially external computing resources. This can lower the barriers to entry for AI research, potentially fostering inclusivity and social sustainability. Finally, by minimizing hardware use and shortening computation time, TNs can substantially reduce both the embodied and the operational emissions of AI, enhancing environmental sustainability.

Future Directions for Tensor Networks in Green AI

Several open challenges could dampen the benefits of TNs for Green AI. For example, making favourable design choices for TNs often requires experience, and neither practical guidelines nor theoretical frameworks to support these decisions have been developed yet. Future research could investigate topics such as proper weight initialization and efficient hyperparameter tuning. Despite these challenges, the authors expect TNs to find broad application across diverse areas of AI and to contribute to more sustainable AI.
