Simulating complex systems and correcting errors in data both rely on efficient methods for manipulating tensor networks, mathematical objects that represent multi-dimensional data, yet contracting networks with arbitrary structure remains a significant challenge. Siddhant Midha from the Princeton Quantum Initiative, Princeton University, Yifan F. Zhang from the Department of Electrical and Computer Engineering, Princeton University, and their colleagues now present a theoretical framework that systematically improves the accuracy of a common approximation technique called belief propagation. The team shows that corrections built from the structure of the network converge exponentially fast to the correct result, which in turn provides a rigorous error bound for belief propagation. The approach, validated on the two-dimensional Ising model, could support more powerful simulations and error correction in applications ranging from materials science to data communication.

Cluster Correction Accelerates Tensor Network Contraction

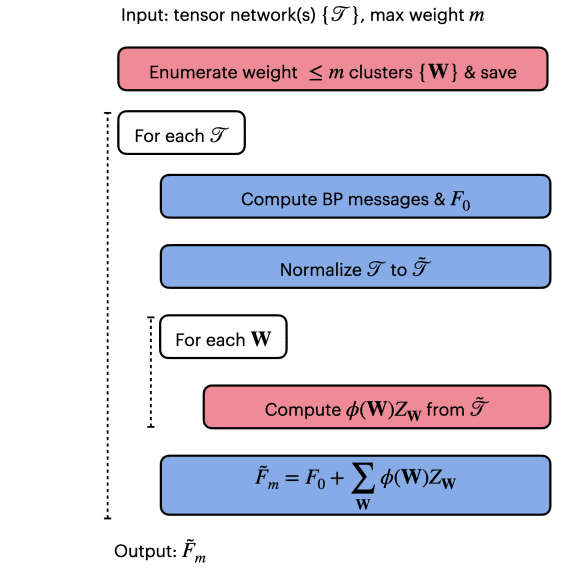

Tensor network contraction, a computationally demanding task, underpins simulations across diverse fields, from quantum physics to error correction. This work introduces a cluster-corrected tensor network contraction method that converges exponentially fast and goes beyond the accuracy of standard belief propagation. The method systematically identifies clusters of tensors and adds their corrections to the belief propagation result, so that the remaining error shrinks as larger clusters are included. This correction strategy improves the accuracy of tensor network contraction, which could allow larger and more complex quantum systems to be simulated and could improve the performance of quantum error correction schemes. The team proves that the expansion converges exponentially fast provided the loop contributions decay sufficiently rapidly with loop size, giving the method a theoretical foundation for practical use in many-body physics and quantum information science.

Belief propagation (BP) is a powerful approximation algorithm for this task, but its accuracy limitations are poorly understood. This work develops a rigorous theoretical framework for BP in tensor networks, leveraging insights from statistical mechanics to devise a cluster expansion that systematically improves the BP approximation. The team proves that the cluster expansion converges exponentially fast if a quantity called the loop contribution decays sufficiently rapidly with the loop size, giving a rigorous error bound on BP. Furthermore, they provide a simple and efficient algorithm to compute the cluster expansion to arbitrary order, demonstrating its effectiveness on the two-dimensional Ising model.
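The link between decaying loop contributions and exponential convergence can be illustrated with a schematic counting estimate. This is a standard geometric-series argument, not the theorem proved in the paper, and every symbol in it is an assumption introduced here: $Z_\ell$ denotes the contribution of a loop $\ell$, $c$ bounds its decay per unit of loop size, $\mu$ bounds the growth in the number $N_n$ of loops of size $n$ through a fixed site, and $m$ is the truncation order.

```latex
% Illustrative assumptions (not taken from the paper):
%   |Z_\ell| \le c^{|\ell|}   loop contributions decay with loop size
%   N_n \le \mu^{n}           number of loops of size n through a fixed site
\sum_{n > m} N_n \max_{|\ell| = n} |Z_\ell|
  \;\le\; \sum_{n > m} (\mu c)^{n}
  \;=\; \frac{(\mu c)^{m+1}}{1 - \mu c},
  \qquad \mu c < 1 .
```

Under these assumptions the neglected tail is exponentially small in the truncation order $m$, which is the qualitative behaviour the convergence result describes.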

Cluster Expansion and Belief Propagation Fixed Points

This document details supplementary material for research on a cluster expansion method for the 2D Ising model, which uses the Belief Propagation (BP) algorithm to find appropriate fixed points for the expansion. The research investigates how the choice of starting point, the BP fixed point, affects the accuracy and efficiency of the cluster expansion. Two ingredients are central: the cluster expansion is a perturbative method used to approximate the free energy of a system, and BP is an iterative message-passing algorithm used to find self-consistent solutions in graphical models such as the Ising model. The success of the method hinges on selecting a suitable fixed point for the expansion.
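The message-passing iteration described above can be sketched for a small pairwise Ising model. This is a minimal illustration, not the authors' tensor network implementation: the function names `bp_log_z` and `exact_log_z`, the synchronous update schedule, and the use of the Bethe formula to turn converged messages into an estimate of $\log Z$ are all choices made here for the example.

```python
import itertools
import numpy as np

def bp_log_z(n_nodes, edges, beta, coupling=1.0, field=0.0, iters=200, tol=1e-12):
    """Bethe estimate of log Z for an Ising model, from a BP fixed point."""
    spins = np.array([1.0, -1.0])
    W = np.exp(beta * coupling * np.outer(spins, spins))  # edge weight W[s_i, s_j]
    psi = np.exp(beta * field * spins)                     # site weight psi[s_i]
    nbrs = {i: [] for i in range(n_nodes)}
    for i, j in edges:
        nbrs[i].append(j)
        nbrs[j].append(i)
    # msg[(i, j)] is the message from i to j, a function of s_j.
    msg = {(i, j): np.full(2, 0.5) for i in nbrs for j in nbrs[i]}

    def cavity(i, j):
        """Site weight of i times all incoming messages except the one from j."""
        out = psi.copy()
        for k in nbrs[i]:
            if k != j:
                out = out * msg[(k, i)]
        return out

    for _ in range(iters):
        new = {}
        for (i, j) in msg:
            m = cavity(i, j) @ W          # sum over s_i
            new[(i, j)] = m / m.sum()     # normalise for numerical stability
        delta = max(np.abs(new[e] - msg[e]).max() for e in msg)
        msg = new
        if delta < tol:
            break

    # Bethe formula: log Z = sum_i (1 - d_i) log Z_i + sum_(ij) log Z_ij.
    log_z = 0.0
    for i in nbrs:
        z_i = (psi * np.prod([msg[(k, i)] for k in nbrs[i]], axis=0)).sum()
        log_z += (1 - len(nbrs[i])) * np.log(z_i)
    for i, j in edges:
        log_z += np.log(cavity(i, j) @ W @ cavity(j, i))
    return log_z

def exact_log_z(n_nodes, edges, beta, coupling=1.0, field=0.0):
    """Brute-force log Z by summing over all 2**n_nodes spin configurations."""
    total = 0.0
    for s in itertools.product([1, -1], repeat=n_nodes):
        energy = -coupling * sum(s[i] * s[j] for i, j in edges) - field * sum(s)
        total += np.exp(-beta * energy)
    return np.log(total)
```

On a tree, such as the chain `[(0, 1), (1, 2), (2, 3)]`, the two functions agree, because BP is exact when the graph has no loops. Adding the edge `(3, 0)` closes a single loop of length 4, and at zero field the gap between the exact and BP values of $\log Z$ is $\log(1 + \tanh^4 \beta)$, the kind of loop term that a cluster expansion is meant to restore.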

The analysis reveals that the 2D Ising model has multiple fixed points, particularly around the critical temperature, highlighting the importance of choosing the correct fixed point for the cluster expansion. Comparing the performance of cluster expansions built upon different fixed points reveals that both message-passing and infinite-temperature approaches perform equally well in the high-temperature phase. However, in the intermediate regime, the low-temperature fixed point provides a lower BP error, while the high-temperature fixed point leads to faster convergence. In the low-temperature phase, the message-passing fixed point significantly outperforms the infinite-temperature expansion.

Researchers also introduced a localized perturbation at a single site and examined its propagation through the message network, quantifying the perturbation’s influence. The perturbation remains localized in the high- and low-temperature phases, indicating a finite message-correlation length. At the BP critical point it extends throughout the entire system, which defines a diverging BP correlation length and implies that the convergence time of the message-passing algorithm scales with the system size.
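A perturbation experiment of this kind can be sketched as follows. The details are assumptions made for illustration rather than the authors' protocol: the perturbation is taken to be a small local field `eps` at the central site of an open square grid, the response is the largest change in any message leaving a site at a given Manhattan distance, and the names `grid_edges`, `run_bp` and `response_by_distance` are hypothetical.

```python
import numpy as np

def grid_edges(size):
    """Directed nearest-neighbour pairs (i, j) on an open size x size grid."""
    pairs = []
    for r in range(size):
        for c in range(size):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < size and 0 <= cc < size:
                    pairs.append(((r, c), (rr, cc)))
    return pairs

def run_bp(size, beta, fields, iters=500, tol=1e-13):
    """Synchronous BP for an Ising model with unit coupling and site fields."""
    spins = np.array([1.0, -1.0])
    W = np.exp(beta * np.outer(spins, spins))
    pairs = grid_edges(size)
    incoming = {}
    for i, j in pairs:
        incoming.setdefault(j, []).append(i)
    msg = {p: np.full(2, 0.5) for p in pairs}
    for _ in range(iters):
        new = {}
        for i, j in pairs:
            cav = np.exp(beta * fields[i] * spins)
            for k in incoming[i]:
                if k != j:
                    cav = cav * msg[(k, i)]
            m = cav @ W
            new[(i, j)] = m / m.sum()
        delta = max(np.abs(new[p] - msg[p]).max() for p in pairs)
        msg = new
        if delta < tol:
            break
    return msg

def response_by_distance(size, beta, eps=1e-3):
    """Largest message change at each Manhattan distance from the kicked site."""
    centre = (size // 2, size // 2)
    base_fields = {(r, c): 0.0 for r in range(size) for c in range(size)}
    kicked = dict(base_fields)
    kicked[centre] = eps                      # the localized perturbation
    base = run_bp(size, beta, base_fields)
    pert = run_bp(size, beta, kicked)
    response = {}
    for (i, j), m in base.items():
        d = abs(i[0] - centre[0]) + abs(i[1] - centre[1])
        change = np.abs(pert[(i, j)] - m).max()
        response[d] = max(response.get(d, 0.0), change)
    return response
```

Calling `response_by_distance(9, 0.2)` at this high temperature gives a response that falls off with distance from the perturbed site, consistent with a finite message-correlation length. For a lattice with coordination number 4, the Bethe-lattice condition $3 \tanh \beta = 1$ marks where the uniform fixed point loses stability, so values of `beta` close to that point are where one would expect the response to spread further.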

This research demonstrates that carefully selecting the BP fixed point can significantly improve the accuracy and efficiency of the cluster expansion method. The analysis of message propagation and correlation length provides insights into the dynamics of the BP algorithm and its ability to capture critical phenomena. The identification of a diverging correlation length at the critical point highlights the computational challenges associated with applying BP to large systems near critical points. The methodology and findings could be applicable to other statistical physics models and optimization problems where BP is used.

Cluster Expansion Guarantees Belief Propagation Accuracy

This research presents a significant advance in understanding and improving the accuracy of belief propagation (BP) algorithms used to contract tensor networks, a fundamental problem with broad applications in scientific computing. Scientists have developed a rigorous theoretical framework, grounded in statistical mechanics, to systematically refine the BP approximation through a technique called cluster expansion. Crucially, they demonstrate that if a specific quantity, termed the ‘loop contribution’, decays sufficiently rapidly, the cluster expansion converges exponentially fast, providing a guaranteed error bound for BP. The team also devised an efficient algorithm to compute the cluster expansion to any desired order, overcoming previous limitations in both accuracy and computational cost. Demonstrating the method’s effectiveness, they applied it to the two-dimensional Ising model, achieving substantial improvements over standard BP and existing corrective algorithms.

This work resolves long-standing questions regarding the convergence of cluster expansions and establishes a pathway towards more reliable and accurate simulations of complex systems. Enumerating clusters can be computationally expensive, because the number of clusters grows exponentially with the desired order of the expansion, but optimizations such as exploiting symmetries of the underlying graph may reduce this cost. Future research directions include applying the framework to a wider range of tensor network structures and refining the cluster enumeration algorithm to improve its scalability.

👉 More information

🗞 Beyond Belief Propagation: Cluster-Corrected Tensor Network Contraction with Exponential Convergence

🧠 ArXiv: https://arxiv.org/abs/2510.02290