The increasing demand for artificial intelligence applications drives a search for more efficient computing hardware, and photonics offers a promising path towards accelerating the complex calculations at the heart of modern AI models. However, realising the full potential of photonic computing for large Transformer models remains a significant challenge. Hanqing Zhu from The University of Texas at Austin, Zhican Zhou from King Abdullah University of Science and Technology, Shupeng Ning from The University of Texas at Austin, and colleagues address this problem with a novel hardware and software co-design framework. Their work introduces ‘Lighten’, a compression technique that reduces the size of Transformer models without significant loss of accuracy, and ‘ENLighten’, a reconfigurable photonic accelerator that dynamically adapts to the model’s needs. This combination achieves a substantial improvement in energy efficiency compared to existing photonic accelerators, paving the way for more powerful and sustainable AI systems.

Photonic Acceleration for Vision Transformers and LLMs

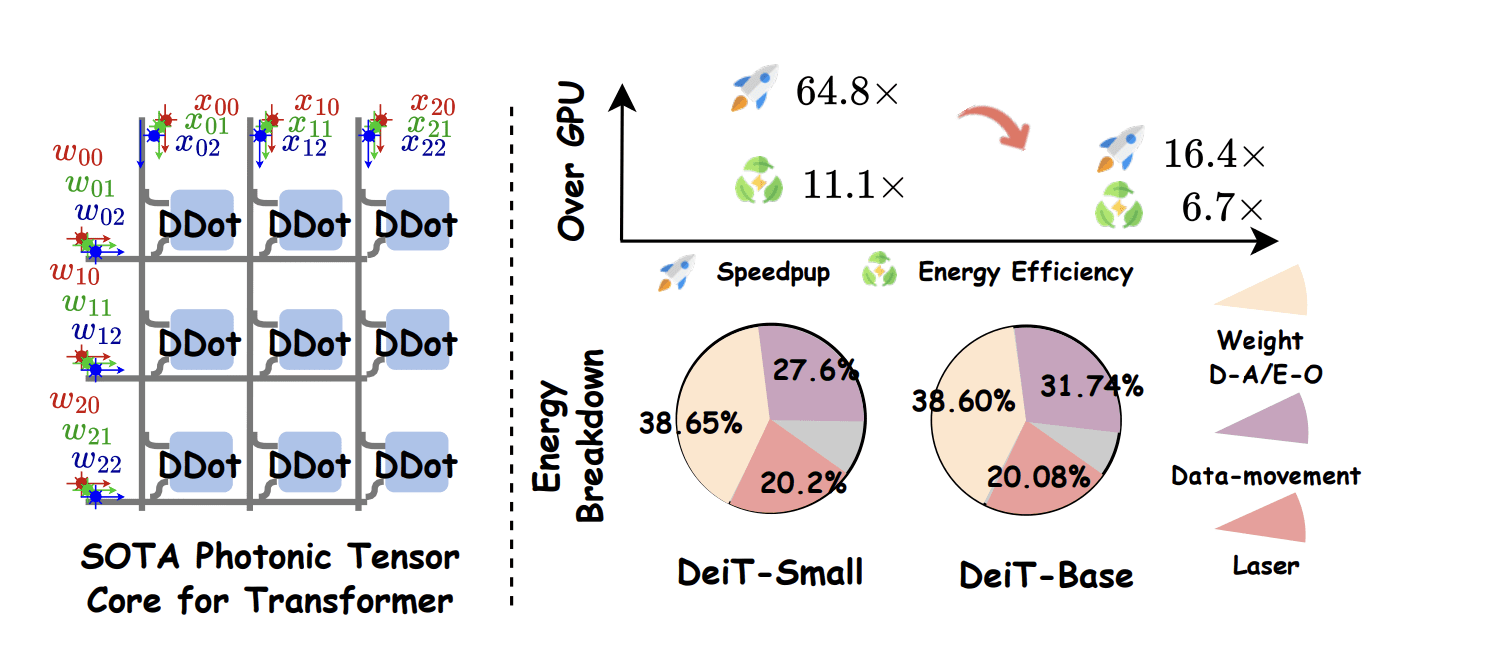

The ENLighten research explores how the speed and energy efficiency of optical computing can overcome the limits of conventional electronic hardware on demanding deep learning workloads, particularly Vision Transformers (ViTs) and Large Language Models (LLMs). The work takes a co-design approach, developing hardware and algorithms together to maximize performance. On the hardware side, this includes photonic tensor cores: specialized optical circuits that accelerate the matrix multiplications fundamental to deep learning. On the algorithm side, the researchers tailor model architectures and training procedures to the capabilities of optical hardware, using techniques such as Singular Value Decomposition (SVD) and sparsity-inducing methods to reduce computational complexity. ENLighten also aims to perform computations within the optical memory itself, which eliminates data-movement bottlenecks, while addressing practical challenges such as device aging and reliability.

Specific photonic architectures, Neurolight and M3icro, are being developed for scalability and efficiency, alongside techniques such as ROQ (noise-aware quantization) and pacing operator learning that improve robustness and accuracy. The work builds on, and contributes to, research in efficient deep learning, model compression, and hardware acceleration, using pruning, quantization, and low-rank approximation to reduce model size and complexity. It is part of a broader trend towards specialized hardware accelerators such as GPUs, TPUs, and FPGAs, with photonics offering a potentially disruptive alternative, and it aligns with the growing interest in in-memory and analog computing for certain tasks. By targeting computationally intensive models such as ViTs and LLMs and addressing both computational and practical challenges, the research could enable new applications in computer vision and natural language processing.

Photonic Acceleration via Weight Matrix Decomposition

The research addresses bottlenecks in applying photonic technology to large Transformer models, focusing on energy efficiency and scalability. To overcome these challenges, the team developed a hardware-software co-design framework built around a compression technique called Lighten and a reconfigurable photonic accelerator named ENLighten. Lighten decomposes each Transformer weight matrix into a low-rank component and a structured-sparse component whose structure matches the granularity of photonic tensor cores, and it does so without extensive retraining. To decide how much to compress each layer, the method analyzes compression error layer by layer using an activation-aware reconstruction error metric. A fast batch-wise greedy rank allocator then distributes the compression budget across layers: it starts from a small, uniform rank and iteratively increases the ranks of the layers most sensitive to error.
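The decomposition described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the 4x4 tile size, the choice to keep the highest-energy residual tiles, and the exact form of the activation-aware error (relative error of the layer output on calibration activations `X`) are all assumptions made for illustration.

```python
import numpy as np

def lowrank_plus_block_sparse(W, rank, block=4, keep_ratio=0.25):
    """Approximate W (out x in) as L + S: L has rank `rank`, S is block-sparse.

    Hypothetical helper; both dimensions of W must be divisible by `block`.
    """
    # Low-rank part: truncated SVD of the weight matrix.
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    L = (U[:, :rank] * s[:rank]) @ Vt[:rank]

    # Structured-sparse part (assumed rule): keep only the highest-energy
    # block x block tiles of the residual, so each kept tile is hardware-sized.
    R = W - L
    out_dim, in_dim = W.shape
    tiles = R.reshape(out_dim // block, block, in_dim // block, block)
    energy = (tiles ** 2).sum(axis=(1, 3))          # one score per tile
    k = max(1, int(round(keep_ratio * energy.size)))
    threshold = np.sort(energy, axis=None)[-k]      # k-th largest tile energy
    mask = energy >= threshold                      # exact ties may keep extra tiles
    S = (tiles * mask[:, None, :, None]).reshape(out_dim, in_dim)
    return L, S

def activation_aware_error(W, W_hat, X):
    """Relative output error on calibration activations X (in x samples).

    Assumed form of the metric: it weights weight errors by the inputs the
    layer actually sees, rather than comparing the matrices alone.
    """
    return np.linalg.norm((W - W_hat) @ X) / np.linalg.norm(W @ X)
```

Calling `lowrank_plus_block_sparse(W, rank=4)` on a 16x32 matrix returns two matrices of the same shape as `W`; their sum is the compressed layer, and `activation_aware_error(W, L + S, X)` scores it against the original.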

A dynamic step-wise refinement strategy adjusts the rank increment according to the remaining budget, giving a coarse-to-fine schedule that maximizes error reduction while avoiding computationally expensive operations such as additional Singular Value Decompositions. Deployed on the ENLighten accelerator, which features dynamically adaptive tensor cores and broadband light redistribution, the Lighten-compressed model achieves a significant improvement in energy-delay product over state-of-the-art photonic Transformer accelerators. Experiments on ImageNet show that Lighten prunes a Base-scale Vision Transformer by 50% with only a 1% accuracy drop after just 3 epochs (approximately one hour) of fine-tuning, enabling substantial gains in both speed and energy efficiency.
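The greedy, coarse-to-fine rank allocation can be sketched in plain Python as follows. The article does not give the actual algorithm, so everything here is an assumption: the name `allocate_ranks`, the use of discarded singular-value energy as the per-layer sensitivity proxy, and the step-size rule. The sketch does reflect one stated property: each layer's singular values are computed once and reused, so no further SVDs are needed during allocation.

```python
def allocate_ranks(spectra, costs, budget, init_rank=1, batch=2):
    """Greedy rank allocation under a parameter budget (illustrative only).

    spectra[i]: singular values of layer i in descending order (computed once).
    costs[i]:   parameters added per unit of rank, e.g. rows + cols of layer i.
    budget:     total parameters allowed for all low-rank factors.
    """
    ranks = [min(init_rank, len(s)) for s in spectra]
    remaining = budget - sum(r * c for r, c in zip(ranks, costs))
    if remaining < 0:
        raise ValueError("budget too small for the initial uniform rank")

    def residual(i):
        # Energy in the discarded singular values: assumed sensitivity proxy.
        return sum(v * v for v in spectra[i][ranks[i]:])

    while True:
        # Coarse-to-fine (assumed rule): big steps early, step 1 near the end.
        step = max(1, remaining // (4 * batch * max(costs)))
        growable = [i for i in range(len(spectra)) if ranks[i] < len(spectra[i])]
        grown = 0
        # Visit layers from most to least error-sensitive; grow up to `batch`.
        for i in sorted(growable, key=residual, reverse=True):
            inc = min(step, len(spectra[i]) - ranks[i], remaining // costs[i])
            if inc > 0:
                ranks[i] += inc
                remaining -= inc * costs[i]
                grown += 1
                if grown == batch:
                    break
        if grown == 0:          # nothing affordable or nothing left to grow
            return ranks, remaining
```

With three toy layers of equal cost, the layer whose spectrum decays slowest receives the most rank, while a layer with a fast-decaying spectrum stays at the initial rank.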

Photonic AI Acceleration With ENLighten Co-design

This work presents ENLighten, a hardware-software co-design that advances photonic acceleration for modern AI models. The researchers address two key bottlenecks in applying photonics to large Transformer networks: the energy cost of data conversion and the limits of on-chip resources. Lighten, the compression pipeline, shrinks Transformer weight matrices without extensive retraining by decomposing them into low-rank and structured-sparse components aligned with the granularity of photonic tensor cores. Experiments on the ImageNet dataset with the Vision Transformer models DeiT-Small, DeiT-Base, and ViT-Base demonstrate its effectiveness: on ViT-Base, Lighten removes 50% of the parameters with only a 1% drop in accuracy after just 3 epochs (approximately one hour) of fine-tuning.
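To get a feel for what a 50% parameter reduction means for a single layer, the short calculation below counts parameters in a low-rank plus sparse factorization. It is a back-of-the-envelope sketch, not a figure from the paper: the helper name is hypothetical, the per-layer split between low-rank and sparse parts is an assumed input, and index storage for the sparse tiles is ignored. The 768 and 3072 dimensions are the standard ViT-Base hidden and MLP widths.

```python
import math

def max_rank_for_budget(rows, cols, keep_fraction, sparse_fraction=0.0):
    """Largest rank r with r*(rows+cols) + sparse_fraction*rows*cols
    <= keep_fraction*rows*cols (hypothetical helper for illustration).

    A rank-r factorization stores two factors: rows x r and r x cols.
    """
    dense = rows * cols
    lowrank_budget = (keep_fraction - sparse_fraction) * dense
    return max(0, math.floor(lowrank_budget / (rows + cols)))

# A 768 x 768 attention projection kept at 50% of its parameters:
print(max_rank_for_budget(768, 768, 0.5))          # 192
# Same layer if 12.5% of the entries go to the sparse component (assumed split):
print(max_rank_for_budget(768, 768, 0.5, 0.125))   # 144
# A 768 x 3072 MLP projection kept at 50%:
print(max_rank_for_budget(768, 3072, 0.5))         # 307
```

The numbers show why the budget has to be allocated per layer: the same compression ratio translates into very different ranks for square and rectangular matrices.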

The compression flow consistently outperformed existing methods, closing the accuracy gap by up to 13% on the ViT-Base model even without retraining. To complement Lighten, the team designed the reconfigurable photonic accelerator ENLighten, whose dynamically adaptive tensor cores and broadband light redistribution support fine-grained sparsity and allow inactive parts to be power-gated, improving energy efficiency. The prototype comprises four tiles of two cores each, augmented with three tiles of the new reconfigurable sparse engine, each with eight cores, and supports operation at a 1/4 granularity to balance performance and accuracy. According to the reported measurements, ENLighten improves energy-delay product over state-of-the-art photonic Transformer accelerators.
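For readers unfamiliar with the metric, energy-delay product (EDP) multiplies the energy a workload consumes by the time it takes, so a design improves EDP by saving energy, by running faster, or both. The sketch below only illustrates the definition: the function names are hypothetical and the numbers in the usage lines are made-up placeholders, not measurements from the paper.

```python
def energy_delay_product(energy_joules, latency_seconds):
    """EDP = energy x latency; lower is better."""
    return energy_joules * latency_seconds

def edp_improvement(base_energy, base_latency, new_energy, new_latency):
    """How many times lower the new design's EDP is than the baseline's."""
    baseline = energy_delay_product(base_energy, base_latency)
    candidate = energy_delay_product(new_energy, new_latency)
    return baseline / candidate

# Placeholder values only: halving both energy and latency gives a 4x EDP gain.
print(edp_improvement(4.0, 2.0, 2.0, 1.0))  # 4.0
```

Because the two factors multiply, a compression scheme that cuts both the number of operations and the data-conversion energy can improve EDP by more than either saving alone.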

👉 More information

🗞 ENLighten: Lighten the Transformer, Enable Efficient Optical Acceleration

🧠 ArXiv: https://arxiv.org/abs/2510.01673