Generating high-resolution colour images from satellite data relies on a technique called pansharpening, which combines detailed black-and-white (panchromatic) images with lower-resolution colour data. However, deep learning methods for pansharpening typically struggle when applied to images from satellite sensors they were not trained on, requiring costly and time-consuming retraining. Zeyu Xia, along with colleagues at the University of Sydney and Wuhan University, now presents SWIFT, a new framework that rapidly adapts existing pansharpening models to new sensors using only a small fraction of the target data. The team’s approach identifies and updates the most critical parameters within a pre-trained model, reducing adaptation time from hours to about a minute and achieving performance comparable to, or exceeding, full retraining on standard datasets like WorldView-2 and QuickBird. This advance promises to significantly improve the efficiency and practicality of generating high-resolution imagery for applications such as environmental monitoring and urban planning.

Sparse Weight Fine-tuning for Pansharpening Generalization

Researchers have developed SWIFT, a novel framework that dramatically improves the adaptability of pansharpening models to new sensors while minimizing computational cost. Pansharpening, the process of fusing high-resolution black-and-white images with lower-resolution colour images, is crucial for applications like environmental monitoring and urban planning. SWIFT fine-tunes only about 30% of the model’s parameters, using just 3% of the data from the new sensor, while maintaining state-of-the-art performance. This makes it a practical solution for deploying pansharpening models across diverse sensor types.

The team demonstrated SWIFT’s effectiveness on datasets from WorldView-2 and QuickBird satellites, achieving state-of-the-art results on standard measures of pansharpening quality. Importantly, SWIFT often surpasses the performance of fully fine-tuning the model while requiring significantly fewer computational resources. This resource-efficient approach represents a significant advancement in cross-sensor generalization for pansharpening.

Selective Weight Adaptation for Pansharpening Models

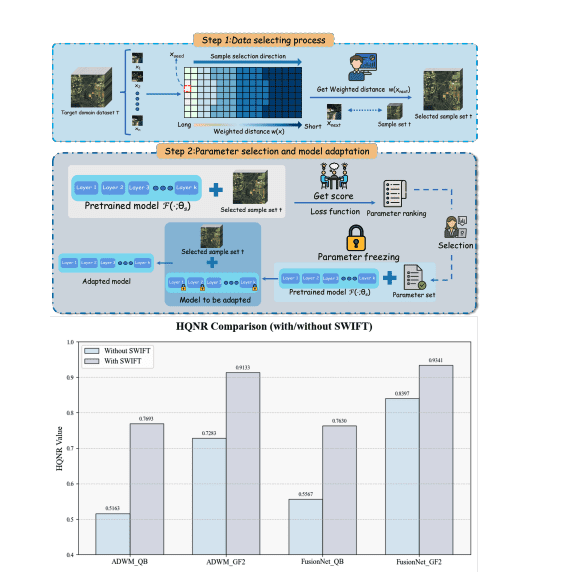

The research team developed SWIFT, a new framework to address the challenge of adapting pansharpening models to new sensors without extensive retraining or architectural changes. Traditional deep learning approaches often struggle when applied to data from unseen sensors, requiring either complex model redesigns or time-consuming full retraining. SWIFT offers a computationally efficient alternative by selectively updating only the most critical parts of an existing model.

The core of SWIFT is a two-stage process. First, the team employs an unsupervised sampling strategy to select a small, balanced and representative subset of the most informative samples from the new sensor’s data.
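
As a rough illustration of this first stage, the sketch below picks a small, well-spread subset of samples without using any labels. It uses greedy farthest-point sampling over per-image feature vectors, a stand-in chosen here for simplicity and not necessarily the strategy SWIFT itself uses. The function name `select_probe_subset`, the toy feature vectors, and the subset size are all assumptions made for the example.

```python
import math


def select_probe_subset(features, k):
    """Greedy farthest-point sampling: return indices of k well-spread samples.

    `features` is a list of equal-length numeric vectors, one per image patch
    (hypothetical descriptors, e.g. per-band means). No labels are needed.
    """
    n = len(features)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the number of samples")

    # Seed with the sample closest to the overall mean (deterministic start).
    dim = len(features[0])
    mean = [sum(f[d] for f in features) / n for d in range(dim)]
    first = min(range(n), key=lambda i: math.dist(features[i], mean))

    chosen = [first]
    # Distance from every sample to its nearest already-chosen sample.
    nearest = [math.dist(f, features[first]) for f in features]

    while len(chosen) < k:
        # Among unchosen samples, take the one farthest from the chosen set.
        remaining = [i for i in range(n) if i not in chosen]
        nxt = max(remaining, key=lambda i: nearest[i])
        chosen.append(nxt)
        for i, f in enumerate(features):
            nearest[i] = min(nearest[i], math.dist(f, features[nxt]))
    return chosen


# Toy data (made up): a dense cluster near the origin plus two isolated samples.
toy_features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (5.0, 5.0), (-4.0, 3.0)]
probe_indices = select_probe_subset(toy_features, 3)
print(probe_indices)
```

With this toy data the selection keeps one sample from the dense cluster and both isolated samples, which is the kind of balance a probe set needs: rare conditions are not drowned out by common ones.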

This carefully chosen subset then serves as a “probe” for the pre-trained model. In the second stage, the team analyzes how the model’s parameters respond to this new data and identifies the subset most affected by the shift in data distribution. SWIFT then updates only these sensitive weights, which avoids full retraining, preserves the original model architecture, and cuts adaptation time to approximately one minute on modern hardware.
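
The second stage can be pictured with a toy example. The sketch below scores each weight of a small linear model by the magnitude of its loss gradient on the probe samples, marks the top fraction as sensitive, and applies gradient steps only to those weights. Gradient magnitude is an assumed sensitivity measure used for illustration, since the article does not spell out SWIFT's exact criterion; the model, the data, and names such as `sensitive_mask` and `sparse_finetune` are hypothetical.

```python
def predict(weights, x):
    """Linear model: dot product of the weights and one input vector."""
    return sum(w * xi for w, xi in zip(weights, x))


def mse(weights, inputs, targets):
    """Mean squared error of the model on the given samples."""
    return sum((predict(weights, x) - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)


def mse_gradient(weights, inputs, targets):
    """Gradient of the mean squared error with respect to each weight."""
    grad = [0.0] * len(weights)
    for x, y in zip(inputs, targets):
        err = predict(weights, x) - y
        for j, xj in enumerate(x):
            grad[j] += 2.0 * err * xj / len(inputs)
    return grad


def sensitive_mask(grad, fraction):
    """Flag the `fraction` of weights with the largest gradient magnitude."""
    keep = max(1, round(fraction * len(grad)))
    ranked = sorted(range(len(grad)), key=lambda j: abs(grad[j]), reverse=True)
    selected = set(ranked[:keep])
    return [j in selected for j in range(len(grad))]


def sparse_finetune(weights, inputs, targets, fraction=0.3, lr=0.05, steps=200):
    """Update only the weights flagged as sensitive; all others stay frozen."""
    mask = sensitive_mask(mse_gradient(weights, inputs, targets), fraction)
    weights = list(weights)
    for _ in range(steps):
        grad = mse_gradient(weights, inputs, targets)
        weights = [w - lr * g if m else w for w, g, m in zip(weights, grad, mask)]
    return weights, mask


# Toy setup (all values made up): weights "pre-trained" on a source sensor, and
# probe samples from a target sensor whose third coefficient has shifted.
pretrained = [1.0, 1.0, 1.0]
probe_inputs = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]
probe_targets = [1.0, 1.0, 3.0, 5.0]  # consistent with target weights [1, 1, 3]

adapted, mask = sparse_finetune(pretrained, probe_inputs, probe_targets)
print(mask)
print(adapted)
```

With three weights, a fraction of 0.3 rounds to a single trainable weight. Only the weight whose gradient responds most strongly to the probe data is moved, while the others keep their pre-trained values exactly, so the model's structure and most of its learned behaviour are untouched.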

Swiftly Adapting Pansharpening to New Sensors

Researchers have developed SWIFT, a new framework that makes it far easier to adapt pansharpening, a technique used to create high-resolution images from satellite data, to new satellite sensors. Pansharpening combines detailed black-and-white images with lower-resolution colour images, and is crucial for applications like environmental monitoring and urban planning. Existing deep learning methods for pansharpening often struggle when applied to data from different satellite sensors, requiring costly and time-consuming retraining or complex architectural changes. SWIFT addresses this challenge by efficiently adapting existing models to new sensors without extensive retraining.

The framework identifies a small subset of the most informative data from the new sensor and then pinpoints the specific parameters within the model that need adjustment. This targeted approach allows for rapid adaptation, reducing the time required to approximately one minute on standard hardware. Results on datasets from the WorldView-2 and QuickBird satellites show that SWIFT matches, and sometimes surpasses, the performance of full retraining, establishing a new benchmark for cross-sensor pansharpening.

Swift Adaptation for Cross-Sensor Pansharpening

This research introduces SWIFT, a new framework designed to improve the adaptability of pansharpening models when applied to data from different sensors. Pansharpening combines high-resolution black-and-white images with lower-resolution multispectral images to create detailed, high-resolution colour images, but existing deep learning methods often struggle when used with unfamiliar sensors. SWIFT addresses this by efficiently identifying and updating only the most sensitive parameters within a pre-trained model, requiring minimal data and computational resources. Experiments demonstrate that SWIFT achieves state-of-the-art performance on standard metrics for image quality, matching or surpassing full retraining of the model on the new sensor data while significantly reducing adaptation time. The method’s success stems from a density-aware sampling strategy that prioritizes informative data points and a dynamic parameter selection process that focuses on the most impactful model weights. This advancement promises to make high-resolution satellite imagery more accessible and practical for a wider range of applications, reducing the cost and complexity of adapting models to diverse data sources.

👉 More information

🗞 SWIFT: A General Sensitive Weight Identification Framework for Fast Sensor-Transfer Pansharpening

🧠 ArXiv: https://arxiv.org/abs/2507.20311