The pursuit of scalable quantum error correction remains a central challenge in building practical quantum computers, and recent work by Arian Vezvaee, Cesar Benito, Mario Morford-Oberst, and colleagues at the University of Southern California and Universidad Autónoma de Madrid addresses it directly. The team takes a significant step towards subthreshold scaling of a surface-code memory on superconducting quantum processors with heavy-hex layouts, whose qubit connectivity does not natively match the square lattice the surface code assumes. They use a co-designed approach that combines a new code-embedding strategy with robust dynamical decoupling, and they scale the code from a uniform configuration to anisotropic ones. The work demonstrates improved protection of quantum information across multiple error correction cycles, establishes a clear pathway towards rigorous tests of scalable surface-code performance under realistic, biased noise, and exposes limitations in commonly used methods for evaluating code performance.

Surface Codes and Quantum Error Correction Progress

Quantum error correction protects fragile quantum information from the errors that are inevitable in physical quantum hardware. Surface codes are a leading candidate for practical implementation because they tolerate relatively high physical error rates and need only nearest-neighbor interactions on a two-dimensional lattice. Current research focuses on pushing physical error rates below the threshold, so that logical qubits (qubits encoded across many physical qubits) become more reliable as the code grows. Experiments on IBM Quantum and Google Quantum AI processors benchmark this performance and characterize the underlying error sources, including crosstalk.

While demonstrations of entangled logical qubits confirm that quantum error correction can protect quantum information, much current hardware still operates near or above the threshold for fault tolerance. Improvements across the whole system, including longer qubit coherence, higher gate fidelities, and reduced crosstalk, are essential to overcome this limitation. Data analysis and machine learning techniques are increasingly used to optimize error correction performance and to identify where improvements are needed. One estimate holds that a 30% reduction in experimental noise rates would suffice to achieve fault tolerance with current (5,5) surface codes, which is why accurate benchmarking is essential for tracking progress.
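The threshold argument can be made concrete with the standard heuristic that the logical error per cycle of a distance-d code scales as ε_d ≈ A (p/p_th)^((d+1)/2), so the suppression factor Λ = ε_d/ε_{d+2} equals p_th/p. The minimal Python sketch below assumes hypothetical values A = 0.1 and p_th = 0.01 (not taken from any device or from the paper) and shows how a 30% cut in the physical error rate p can move a code from above threshold, where distance 5 is worse than distance 3, to below it.

```python
# Illustrative constants (assumptions, not values from the paper or any device).
P_TH = 0.01   # hypothetical threshold physical error rate
A = 0.1       # hypothetical prefactor


def logical_error_per_cycle(p: float, d: int, p_th: float = P_TH, a: float = A) -> float:
    """Standard heuristic: eps_d ~ a * (p / p_th) ** ((d + 1) / 2)."""
    return a * (p / p_th) ** ((d + 1) / 2)


def suppression_factor(p: float, d: int) -> float:
    """Lambda = eps_d / eps_{d+2}; under this heuristic it equals p_th / p."""
    return logical_error_per_cycle(p, d) / logical_error_per_cycle(p, d + 2)


if __name__ == "__main__":
    for p in (0.012, 0.012 * 0.7):  # before and after a 30% reduction
        e3 = logical_error_per_cycle(p, 3)
        e5 = logical_error_per_cycle(p, 5)
        print(f"p={p:.4f}  eps_3={e3:.4f}  eps_5={e5:.4f}  "
              f"Lambda={suppression_factor(p, 3):.3f}")
```

In this model the crossover sits exactly at p = p_th; real devices deviate because noise can be correlated, biased, and non-stationary.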

Researchers are also exploring efficient decoding algorithms, optimized quantum computer architectures, error mitigation strategies, and precise calibration procedures. Cross-entropy benchmarking is used to evaluate processor performance, and future directions include scaling up error correction, improving hardware, developing more efficient decoders, exploring alternative error correction codes, and integrating error correction with quantum algorithms. Taken together, this body of research shows significant progress towards fault-tolerant quantum computation and underscores that hardware improvements and software optimization must advance together.

Surface Code Memory with Anisotropic Scaling

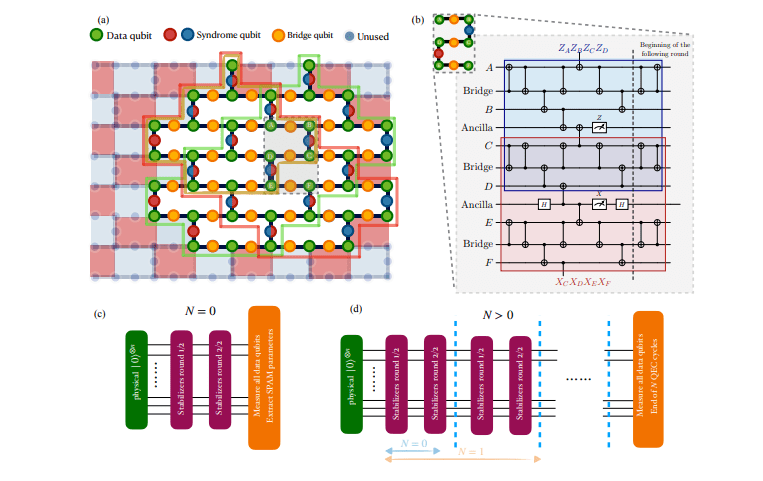

The researchers demonstrated the onset of subthreshold scaling of a surface-code memory on superconducting processors with a heavy-hex architecture, a layout whose qubit connectivity does not directly match what the code requires. To address this mismatch, they co-designed the code embedding and the control: a depth-minimizing “fold-unfold” embedding with bridge ancillas, paired with robust, gap-aware dynamical decoupling. The experiments scaled a uniform distance-3 code to anisotropic codes with distances (dx, dz) = (3, 5) and (5, 3), which makes it possible to study separately how increasing the distance in the X or the Z basis affects logical-state protection over multiple error correction cycles. Syndrome extraction, the step in which stabilizer measurements reveal information about errors, uses a SWAP-based protocol.
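To make the anisotropic distances concrete, here is a small Python sketch that builds the checks of a rotated surface code on a grid of dx rows by dz columns of data qubits and brute-forces the minimum logical weights. It follows one common convention, which is an assumption: dx is the weight of the shortest X-type logical operator and dz that of the shortest Z-type one (papers differ on this labeling). The helper names are hypothetical, and the sketch ignores the heavy-hex embedding entirely.

```python
from itertools import combinations


def popcount(v: int) -> int:
    return bin(v).count("1")


def rotated_surface_code(dx: int, dz: int):
    """Checks of a rotated surface code on a dx-row by dz-column data grid.

    Assumed convention: dx = min weight of an X-type logical (vertical X string),
    dz = min weight of a Z-type logical (horizontal Z string). Each check is an
    int bitmask over the data qubits. Intended for odd dx and dz.
    """
    rows, cols = dx, dz
    x_checks, z_checks = [], []
    for r in range(-1, rows):
        for c in range(-1, cols):
            support = [rr * cols + cc
                       for rr in (r, r + 1) for cc in (c, c + 1)
                       if 0 <= rr < rows and 0 <= cc < cols]
            is_x = (r + c) % 2 == 0  # checkerboard coloring of plaquettes
            if len(support) == 4:
                keep = True  # bulk weight-4 plaquette
            elif len(support) == 2:
                horizontal = r in (-1, rows - 1)
                # weight-2 X checks on top/bottom edges, Z checks on left/right
                keep = is_x if horizontal else not is_x
            else:
                keep = False  # corner plaquettes touch a single qubit
            if keep:
                mask = sum(1 << q for q in support)
                (x_checks if is_x else z_checks).append(mask)
    return rows * cols, x_checks, z_checks


def gf2_rank(vectors) -> int:
    """Rank over GF(2) of bitmask vectors (incremental XOR basis)."""
    basis = []
    for v in vectors:
        for b in basis:
            v = min(v, v ^ b)
        if v:
            basis.append(v)
    return len(basis)


def min_logical_weight(n, same_type_checks, other_type_checks):
    """Smallest operator weight that commutes with every check of the other type
    but is not a product of same-type checks (brute force, small codes only)."""
    base = gf2_rank(same_type_checks)
    for w in range(1, n + 1):
        for support in combinations(range(n), w):
            v = sum(1 << q for q in support)
            if any(popcount(v & c) % 2 for c in other_type_checks):
                continue
            if gf2_rank(same_type_checks + [v]) > base:
                return w
    return None


if __name__ == "__main__":
    for dx, dz in [(3, 3), (3, 5), (5, 3)]:
        n, xs, zs = rotated_surface_code(dx, dz)
        print((dx, dz), "data qubits:", n, "checks:", len(xs) + len(zs),
              "d_X:", min_logical_weight(n, xs, zs),
              "d_Z:", min_logical_weight(n, zs, xs))
```

For (3, 5) this gives 15 data qubits, 14 independent checks, and minimum X- and Z-type logical weights of 3 and 5; the brute-force search is only practical for codes this small.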

Rather than compiling the SWAP operations directly, the team implemented a folding-unfolding scheme: weight-4 stabilizers are first folded into weight-2 operators, these are measured with ancilla qubits, and the qubits are then unfolded back to their original form, which minimizes circuit depth. The circuits were further shortened by removing reset gates and tracking previous measurement outcomes in software, which reduces the duration of each syndrome extraction round and hence the idling errors. The researchers compared the largest embeddable anisotropic codes, (5,3) and (3,5), against smaller (3,3) codes hosted on the same superconducting chip. This careful comparison, combined with the optimized syndrome extraction and dynamical decoupling, enables robust tests of subthreshold surface-code scaling under biased, non-Markovian noise, even on architectures whose native connectivity does not match the code structure.
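The folding step can be illustrated with Pauli propagation through CNOT gates, and the reset-free bookkeeping with a few XORs. The Python sketch below is a toy under stated assumptions: the CNOT pattern that folds a weight-4 plaquette on data qubits 0 to 3 into a weight-2 operator is a hypothetical choice (the paper's actual fold-unfold circuits on heavy-hex are not reproduced), Pauli signs are ignored, and the measurement model is noiseless. Without resets, an ancilla's outcome accumulates the parity of every round, so the stabilizer value is m_t XOR m_{t-1} and a detection event compares outcomes two rounds apart.

```python
def apply_cnot(x, z, control, target):
    """Conjugate a Pauli, given as X and Z bit lists, by CNOT (signs ignored).
    X on the control spreads to the target; Z on the target spreads to the control."""
    x, z = list(x), list(z)
    x[target] ^= x[control]
    z[control] ^= z[target]
    return x, z


def pauli_string(x, z):
    return "".join("IXZY"[xi + 2 * zi] for xi, zi in zip(x, z))


def fold(x, z, cnots):
    for control, target in cnots:
        x, z = apply_cnot(x, z, control, target)
    return x, z


def unfold(x, z, cnots):
    # CNOT is self-inverse, so undo the fold by replaying the gates in reverse.
    return fold(x, z, list(reversed(cnots)))


# Hypothetical folding pattern for a weight-4 Z plaquette on data qubits 0..3.
Z_FOLD = [(0, 1), (3, 2)]


def raw_outcomes_without_reset(stabilizer_values):
    """Ancilla never reset: each round XORs the stabilizer parity into the
    value left over from the previous measurement (noiseless model)."""
    ancilla, raw = 0, []
    for s in stabilizer_values:
        ancilla ^= s
        raw.append(ancilla)
    return raw


def detection_events(raw):
    """Recover s_t = m_t XOR m_{t-1}, then flag changes s_t XOR s_{t-1}
    (equivalently m_t XOR m_{t-2}); rounds before the first count as 0."""
    events, prev_m, prev_s = [], 0, 0
    for m in raw:
        s = m ^ prev_m
        events.append(s ^ prev_s)
        prev_m, prev_s = m, s
    return events


if __name__ == "__main__":
    x, z = fold([0, 0, 0, 0], [1, 1, 1, 1], Z_FOLD)
    print("folded:", pauli_string(x, z), "unfolded:", pauli_string(*unfold(x, z, Z_FOLD)))
    raw = raw_outcomes_without_reset([0, 0, 1, 1, 1])  # error appears before round 3
    print("raw:", raw, "detections:", detection_events(raw))
```

Measuring the folded operator Z1Z2 after the CNOTs gives the same result as measuring Z0Z1Z2Z3 before them, which is why a weight-2 measurement, cheaper to route on sparse connectivity, can stand in for the weight-4 one.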

Anisotropic Scaling in Surface Code Memory

Comparing the anisotropic (dx, dz) = (3, 5) and (5, 3) codes with the uniform distance-3 code shows that increasing dz improves the protection of Z-basis logical states, while increasing dx improves the protection of X-basis logical states, across multiple error correction cycles. Dynamical decoupling plays a major role in these results: it suppresses coherent ZZ crosstalk and non-Markovian dephasing, both of which accumulate during the idle gaps inherent in the heavy-hex layout.
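A toy model shows why decoupling matters for ZZ crosstalk in particular. The numpy sketch below evolves two idle qubits under static detunings and an always-on ZZ coupling (all values hypothetical) and applies ideal, instantaneous X pulses; it is a textbook echo illustration, not the authors' gap-aware sequence. Pulsing both qubits at the same instants refocuses the detunings but leaves ZZ untouched, because flipping both qubits preserves Z1Z2, whereas staggering the pulses refocuses every term.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.diag([1.0, -1.0]).astype(complex)
X1, X2 = np.kron(X, I2), np.kron(I2, X)
Z1, Z2 = np.kron(Z, I2), np.kron(I2, Z)


def idle_unitary(delta1, delta2, J, T, pulses1=(), pulses2=()):
    """Evolve two idle qubits for time T under
    H = delta1*Z1/2 + delta2*Z2/2 + J*Z1Z2/4 (static, quasi-classical noise),
    with ideal instantaneous X pulses at the given times on each qubit."""
    H = 0.5 * delta1 * Z1 + 0.5 * delta2 * Z2 + 0.25 * J * (Z1 @ Z2)
    energies = np.diag(H).real  # H is diagonal, so exp(-iHt) is diagonal too

    def free(t):
        return np.diag(np.exp(-1j * energies * t))

    events = sorted([(t, X1) for t in pulses1] + [(t, X2) for t in pulses2],
                    key=lambda event: event[0])
    U, t_now = np.eye(4, dtype=complex), 0.0
    for t, pulse in events:
        U = pulse @ free(t - t_now) @ U
        t_now = t
    return free(T - t_now) @ U


def process_fidelity_to_identity(U):
    """|Tr(U)/dim|^2: equals 1 when the idle period acts as the identity."""
    return abs(np.trace(U) / U.shape[0]) ** 2


if __name__ == "__main__":
    d1, d2, J, T = 0.3, -0.2, 0.5, 4.0  # hypothetical values
    for label, p1, p2 in [("no DD", (), ()),
                          ("simultaneous", (T / 4, 3 * T / 4), (T / 4, 3 * T / 4)),
                          ("staggered", (T / 4, 3 * T / 4), (T / 2, T))]:
        U = idle_unitary(d1, d2, J, T, p1, p2)
        print(label, round(process_fidelity_to_identity(U), 4))
```

With simultaneous pulses the residual evolution is exp(-i J T Z1Z2 / 4), so the fidelity drops to cos²(JT/4) no matter how well the detunings are echoed.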

The measurements also show that dynamical decoupling eliminates the spurious subthreshold claims that arise when scaled codes run without it are compared to smaller codes run with it, which makes dynamical decoupling important for accurate performance evaluation. The team derived an entanglement fidelity metric computed directly from X- and Z-basis logical-error data, which provides per-cycle bounds on code performance that account for state preparation and measurement (SPAM) errors. Analysis with this metric reveals that the single-parameter fits commonly used to compute suppression factors can be misleading when their underlying assumptions are violated, namely stationarity, unitality, and negligible logical SPAM. These results establish a concrete path towards robust tests of subthreshold surface-code scaling under biased, non-Markovian noise by integrating quantum error correction with optimized dynamical decoupling on non-native architectures.
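The fitting pitfall can be reproduced with synthetic data. The sketch below assumes the commonly used decay model P_L(n) = (1 - A(1 - 2ε)^n)/2, where ε is the logical error per cycle and A ≤ 1 absorbs logical SPAM. It compares a single-parameter fit that forces A = 1 with a two-parameter fit, and computes the suppression factor Λ as the ratio of the per-cycle errors of a smaller and a larger code. All numbers are hypothetical, and only the SPAM assumption is probed; non-stationary or non-unital noise would need a richer model.

```python
import numpy as np


def logical_error_curve(cycles, eps, spam=1.0):
    """Assumed model: P_L(n) = (1 - spam * (1 - 2*eps)**n) / 2."""
    n = np.asarray(cycles, dtype=float)
    return (1 - spam * (1 - 2 * eps) ** n) / 2


def fit_single_parameter(cycles, p_logical):
    """Fit log(1 - 2 P_L) = n * log(1 - 2 eps) through the origin (no SPAM term)."""
    n = np.asarray(cycles, dtype=float)
    y = np.log(1 - 2 * np.asarray(p_logical))
    slope = np.dot(n, y) / np.dot(n, n)
    return (1 - np.exp(slope)) / 2


def fit_with_spam(cycles, p_logical):
    """Fit log(1 - 2 P_L) = log(spam) + n * log(1 - 2 eps) with a free intercept."""
    n = np.asarray(cycles, dtype=float)
    y = np.log(1 - 2 * np.asarray(p_logical))
    slope, _intercept = np.polyfit(n, y, 1)
    return (1 - np.exp(slope)) / 2


if __name__ == "__main__":
    cycles = np.arange(1, 11)
    # Hypothetical noiseless data: the larger code has a lower per-cycle error
    # but worse logical SPAM than the smaller one.
    small = logical_error_curve(cycles, eps=0.03, spam=0.95)
    large = logical_error_curve(cycles, eps=0.02, spam=0.80)
    lam_single = fit_single_parameter(cycles, small) / fit_single_parameter(cycles, large)
    lam_spam = fit_with_spam(cycles, small) / fit_with_spam(cycles, large)
    print(f"Lambda (single-parameter) = {lam_single:.3f}")
    print(f"Lambda (SPAM-aware)       = {lam_spam:.3f}")
```

With these made-up inputs the two-parameter fit recovers Λ = 1.5, whereas the single-parameter fit reports Λ below 1 and hides a real improvement, because it attributes the larger code's worse logical SPAM to its per-cycle error.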

Anisotropic Scaling Boosts Quantum Memory Performance

The researchers have advanced scalable quantum error correction by implementing a surface-code memory on superconducting hardware with limited native connectivity. They co-designed the code embedding and control, pairing a depth-minimizing embedding with robust dynamical decoupling to mitigate errors. Scaling from the (3,3) code to the anisotropic (3,5) and (5,3) codes showed that increasing the code distance in one direction improves the protection of the corresponding logical basis during error correction cycles. Full subthreshold scaling remains a challenge, but the results indicate that it is within reach with further hardware refinements.

A key finding is the significant role of dynamical decoupling in suppressing coherent crosstalk and non-Markovian dephasing, both of which accumulate while qubits sit idle. The team's new entanglement fidelity metric gives a more precise assessment of code performance, shows that commonly used analysis methods can mislead when their assumptions about the noise are not met, and highlights the importance of optimizing each code configuration individually with respect to dynamical decoupling. The authors caution that demonstrating subthreshold scaling requires careful data analysis, because improper comparisons can produce spurious results. They observed the onset of subthreshold scaling on one processor.
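For intuition about why X- and Z-basis data alone can bound an entanglement fidelity, consider a single-qubit Pauli channel with error probabilities p_X, p_Y, p_Z (an assumption, for example after Pauli twirling). Z-basis states fail with probability e_Z = p_X + p_Y, X-basis states with e_X = p_Z + p_Y, and the entanglement fidelity is F_e = 1 - p_X - p_Y - p_Z = 1 - e_X - e_Z + p_Y. Since 0 ≤ p_Y ≤ min(e_X, e_Z), this gives 1 - e_X - e_Z ≤ F_e ≤ 1 - max(e_X, e_Z). The sketch below encodes only this textbook bound; it is not the authors' SPAM-aware, per-cycle metric, and the function names are hypothetical.

```python
def pauli_channel_from_probs(p_x, p_y, p_z):
    """Basis-state error rates and entanglement fidelity of a one-qubit Pauli channel."""
    e_z_basis = p_x + p_y  # X and Y errors flip |0> and |1>
    e_x_basis = p_z + p_y  # Z and Y errors flip |+> and |->
    f_e = 1 - p_x - p_y - p_z
    return e_x_basis, e_z_basis, f_e


def entanglement_fidelity_bounds(e_x_basis, e_z_basis):
    """Bounds on F_e from X- and Z-basis error rates alone (p_Y unknown)."""
    lower = 1 - e_x_basis - e_z_basis
    upper = 1 - max(e_x_basis, e_z_basis)
    return lower, upper


if __name__ == "__main__":
    e_x, e_z, f_e = pauli_channel_from_probs(0.01, 0.002, 0.03)  # hypothetical
    lower, upper = entanglement_fidelity_bounds(e_x, e_z)
    print(f"{lower:.4f} <= F_e = {f_e:.4f} <= {upper:.4f}")
```

The lower bound is tight when the channel has no Y component, which is why the gap between the two bounds shrinks as correlated X and Z errors become rare.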

👉 More information

🗞 Surface code scaling on heavy-hex superconducting quantum processors

🧠 arXiv: https://arxiv.org/abs/2510.18847