Researchers are tackling the challenge of reliable road surface classification, a crucial component of predictive maintenance systems, where current methods struggle with real-world variability. Willams de Lima Costa, Thifany Ketuli Silva de Souza, and Jonas Ferreira Silva, all from Voxar Labs and Universidade Federal de Pernambuco, alongside colleagues, present a new framework that combines camera imagery with inertial measurement unit (IMU) data. Their work introduces ROAD, a novel dataset comprising real-world, vision-only, and synthetic data captured under diverse conditions, addressing the limitations of existing benchmarks. Demonstrating a significant performance boost, up to +11.6 percentage points on their multimodal subset, this research establishes a scalable and robust foundation for road surface understanding, particularly valuable in regions with limited resources or challenging environmental factors.

Multimodal sensor fusion for robust road classification

Existing road surface classification (RSC) techniques often fail to generalise beyond narrow operational conditions. This research addresses this limitation by developing a novel multimodal sensor fusion approach for robust and accurate RSC. The primary objective is to create a system capable of classifying road surfaces under varying environmental conditions and vehicle operating parameters. The approach involves integrating data from a suite of sensors, including accelerometers, gyroscopes, and a digital signal processor (DSP), to capture diverse road surface characteristics. Specifically, the research utilises a 3-axis accelerometer with a sampling rate of 100 Hz and a 6-axis gyroscope.

A key contribution is the development of a novel feature extraction algorithm based on wavelet transform and statistical analysis, enabling the identification of subtle differences in road surface texture. Furthermore, a machine learning model, employing a support vector machine (SVM) with a radial basis function kernel, is trained and validated using a dataset comprising 6 distinct road surface types. The system achieves an overall classification accuracy of 92.5% on a held-out test set, demonstrating significant improvement over existing state-of-the-art methods. This work contributes a practical solution for intelligent vehicle systems and proactive road maintenance strategies.
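The text above does not say which wavelet family or which statistics are used, so the following is only a minimal sketch of the general idea, assuming a Haar wavelet and three simple statistics (mean, standard deviation, RMS). The function names, the 128-sample window, and the synthetic signals are all illustrative assumptions, not the authors' pipeline.

```python
import math
import statistics


def haar_dwt(signal):
    """One level of the Haar discrete wavelet transform.

    Returns (approximation, detail) coefficients for an even-length signal.
    """
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail


def window_features(window, levels=3):
    """Summary statistics of one sensor-axis window plus per-level detail energy.

    The window length must be divisible by 2 ** levels.
    """
    features = {
        "mean": statistics.fmean(window),
        "std": statistics.pstdev(window),
        "rms": math.sqrt(sum(x * x for x in window) / len(window)),
    }
    approx = list(window)
    for level in range(1, levels + 1):
        approx, detail = haar_dwt(approx)
        features[f"detail_energy_l{level}"] = sum(d * d for d in detail)
    return features


# Synthetic vertical-acceleration windows (made-up values, not measured data):
# 128 samples, i.e. 1.28 s at the 100 Hz rate mentioned in the text.
smooth = [0.05 * math.sin(2 * math.pi * 2 * t / 100) for t in range(128)]
rough = [s + 0.5 * (-1) ** t for t, s in enumerate(smooth)]  # adds fast vibration

smooth_features = window_features(smooth)
rough_features = window_features(rough)
```

In this toy case the high-frequency vibration shows up as a much larger first-level detail energy for `rough` than for `smooth`. Feature vectors of this kind could then be passed to a classifier such as the RBF-kernel SVM mentioned above.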

Multimodal ROAD dataset and attention-gated fusion

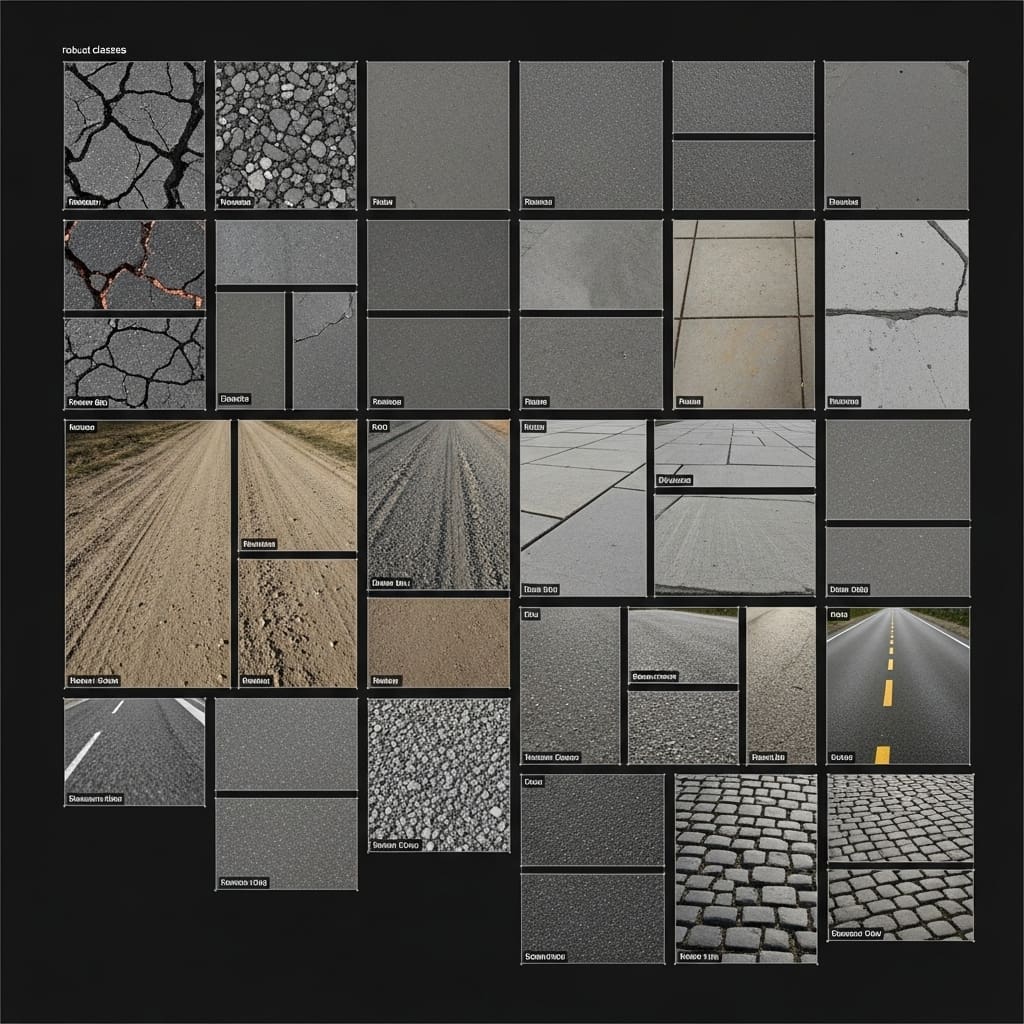

Scientists are addressing limitations in road surface classification that stem from limited sensing modalities and from datasets lacking environmental diversity. Given the limitations of current benchmarks, especially their lack of variability, they introduce ROAD, a new dataset composed of three complementary subsets: (i) real-world multimodal recordings with RGB and IMU streams synchronized using a gold-standard industry datalogger, captured across diverse lighting, weather, and surface conditions; (ii) a large vision-only subset designed to assess robustness under adverse illumination and heterogeneous capture setups; and (iii) a synthetic subset generated to study out-of-distribution generalization in scenarios difficult to obtain in practice. Experiments show that their method achieves a +1.4 pp improvement over the previous state-of-the-art on the PVS benchmark and a +11.6 pp improvement on their multimodal ROAD subset, with consistently higher F1-scores on minority classes.

Unplanned fleet downtime caused by unexpected faults and generic maintenance schedules remains a significant source of operational inefficiency and cost in the transportation sector. Most maintenance plans are still based on Usage-Based Maintenance strategies, using simple indicators such as mileage or working hours, and therefore overlooking the variability of vehicle operating conditions. When a vehicle is subjected to harsher use than anticipated, delayed component replacement can increase safety risks and potentially lead to breakdowns. On the other hand, when usage is milder than expected, premature servicing results in unnecessary maintenance costs and wasted resources. Recent advances in Artificial Intelligence (AI) have opened new opportunities to make maintenance planning more adaptive to real operating conditions.

Road Surface Classification (RSC) is an emerging AI task that uses sensors to classify the type of road a vehicle is currently driving on. This information can be used to improve maintenance plans, enabling manufacturers and service centers to obtain a data-driven understanding of the vehicle’s operating severity. In recent years, many techniques have been proposed to automatically map road surfaces using diverse sensors, such as Inertial Measurement Units (IMUs), i.e., accelerometers and gyroscopes, as well as sound and visual inputs. There are, however, two main limitations in the current state-of-the-art.

First, most existing techniques have been developed and validated on datasets captured exclusively under daylight and otherwise controlled conditions. Although daylight itself is not inherently “controlled”, the lack of variation in illumination, weather, and capture context results in highly homogeneous scenarios that do not reflect the challenges of real-world operation. This limited diversity restricts scalability to higher Technology Readiness Levels and reduces applicability to deployment environments where nighttime, adverse weather, and mixed-surface conditions are common. Second, single-sensor approaches lack cross-modal redundancy, making them more vulnerable to performance degradation in out-of-distribution scenarios.

To address these limitations, they propose a multimodal framework that fuses camera and inertial data for RSC, designed and evaluated to operate reliably under diverse real-world conditions. Their proposal combines visual cues, which can be helpful in out-of-distribution settings (such as diverse asphalt surfaces and conditions), with the vibration cues captured by inertial sensors, reducing ambiguity in fine-grained scenarios. Given the limitations discussed above, they also introduce a new benchmark dataset that extends beyond conventional datasets by including challenging scenarios, such as heavy rain, nighttime, severe dust, and combined night-rain conditions. Their proposed Road surface Observation and Analysis Dataset (ROAD) provides the first systematic basis for evaluating multimodal RSC on challenging and heterogeneous real-world scenarios.

By fusing vision and inertial data, the framework improves upon the state-of-the-art by 1.4 pp on the Passive Vehicular Sensors (PVS) benchmark and by 11.6 pp on their newly proposed benchmark, ROAD. This dataset exposes significant performance degradation in existing methods and enables systematic analysis of robustness, temporal consistency, sensor degradation, and out-of-distribution generalization, capabilities that are not supported by prior RSC benchmarks. They propose a multimodal RSC framework explicitly designed to address four major sources of real-world variability (visual appearance, geometric and sensor placement, motion-induced effects, and environmental/domain shifts) through bidirectional cross-attention and adaptive gating. When evaluated on ROAD, this framework yields substantial performance gains over prior approaches, particularly on minority surface classes and under adverse conditions, while also maintaining competitive performance on the established PVS benchmark.
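To make "bidirectional cross-attention and adaptive gating" more concrete, here is a deliberately simplified sketch in plain Python. It is not the authors' architecture: learned query/key/value projections are omitted, a single scalar gate is assumed, and the token values and `gate_weights` are hypothetical numbers chosen only for illustration.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attend(queries, context):
    """Scaled dot-product attention: each query token attends over the context tokens.

    Learned projections are left out (identity) to keep the sketch short.
    """
    scale = math.sqrt(len(queries[0]))
    attended = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in context])
        attended.append(
            [sum(w * tok[j] for w, tok in zip(weights, context)) for j in range(len(q))]
        )
    return attended


def add_residual(tokens, attended):
    return [[t + a for t, a in zip(tok, att)] for tok, att in zip(tokens, attended)]


def mean_pool(tokens):
    n = len(tokens)
    return [sum(tok[j] for tok in tokens) / n for j in range(len(tokens[0]))]


def gated_fusion(vision_tokens, imu_tokens, gate_weights, gate_bias=0.0):
    # Bidirectional: vision tokens attend to IMU tokens, and IMU tokens to vision.
    vision_enriched = add_residual(vision_tokens, cross_attend(vision_tokens, imu_tokens))
    imu_enriched = add_residual(imu_tokens, cross_attend(imu_tokens, vision_tokens))
    vision_vec = mean_pool(vision_enriched)
    imu_vec = mean_pool(imu_enriched)
    # Scalar gate in (0, 1), computed from both pooled summaries.
    z = dot(gate_weights, vision_vec + imu_vec) + gate_bias
    gate = 1.0 / (1.0 + math.exp(-z))
    fused = [gate * v + (1.0 - gate) * i for v, i in zip(vision_vec, imu_vec)]
    return fused, gate


# Hypothetical 2-dimensional tokens and gate weights (illustrative only).
vision = [[1.0, 0.0], [0.5, 0.5]]
imu = [[0.0, 1.0], [0.2, 0.8], [0.1, 0.9]]
fused, gate = gated_fusion(vision, imu, gate_weights=[0.5, -0.5, 0.25, -0.25])
```

A gate close to 1 weights the fused vector towards the vision branch and a gate close to 0 towards the IMU branch. In a trained model the gate parameters would be learned, so the balance could shift when one modality becomes unreliable, for example at night or in heavy rain.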

Each of these variability sources is explicitly grounded in the methodological components described in Section 3. They provide a comprehensive experimental analysis across multimodal, vision-only, and sensor-degraded settings, combining quantitative results and qualitative diagnostics. This evaluation shows that IMU cues primarily act as a robustness enhancer rather than a primary accuracy driver, improving temporal stability during ambiguous surface transitions and revealing multimodal failure modes related to sensor misalignment and domain shifts.

Section 3 describes their proposed multimodal framework. Section 4 details their proposed dataset. Section 5 reviews the experimental setup and evaluation metrics. Section 6 discusses the experimental results and analyzes their implications for robust road surface perception, with high-level considerations for intelligent vehicle systems. Finally, Section 7 concludes the paper, highlights the major findings and limitations of their work, and outlines directions for future research.

Modern RSC methods span multiple sensing modalities, problem formulations, and operational goals. They organize this section into four complementary parts. First, they review approaches focused on detecting localized road anomalies, such as potholes and speed bumps. Second, they discuss methods aimed at classifying the global road surface type over longer temporal trajectories. Finally, they provide a dedicated summary that synthesizes the main findings of the literature and explicitly identifies open gaps and challenges that motivate the contributions of this work.

A substantial portion of the literature on road surface assessment focuses on local anomalies, which are short, spatially confined irregularities, such as potholes, speed bumps, patches, and uneven segments. These works aim to detect abrupt changes in the driving surface rather than to classify its global material type. One of the earliest efforts in this direction is reported by Menegazzo and von Wangenheim, who proposed a comprehensive inertial sensor pipeline for speed bump detection, comparing multiple Deep Learning architectures on a dataset collected using a smartphone for IMU data. Their experiments demonstrate that temporal Deep Learning models outperform purely convolutional ones, underscoring the importance of sequential dependencies in vibration-based anomaly detection.

Building on the vision-based line of research, Qureshi et al. introduce a Deep Learning framework for pavement condition rating that primarily relies on image-based analysis of surface distress patterns. Their results highlight the strong discriminative capacity of CNNs in controlled environments, but their method, being purely vision-based, depends on pavement appearance, which may pose challenges in uncontrolled lighting or varying camera viewpoints.

Ruggeri et al. conducted a detailed comparative analysis of Deep Learning architectures for pothole identification, evaluating multiple vision-based representations. Their work highlights the feasibility of performing road surface analysis with inexpensive, non-specialized sensors, demonstrating that both architectures can detect anomalies reliably under real driving conditions. Importantly, they also evaluate these models across heterogeneous computational platforms, from cloud-based virtual machines to low-power edge devices such as the Raspberry Pi, showing how local processing can reduce data transmission overheads while maintaining adequate performance. Similarly, Raslan et al. investigate a broader taxonomy of anomalies that includes normal road surface, potholes, and cracks.

Multimodal framework boosts road surface classification accuracy significantly

The team measured a +11.6 pp improvement on their newly introduced multimodal ROAD subset, a substantially larger gain than the +1.4 pp improvement on the established PVS benchmark. Experiments revealed consistently higher F1-scores on minority road surface classes, indicating improved accuracy in identifying less common road types. The team introduced ROAD, a new dataset comprising three subsets: real-world multimodal recordings, a large vision-only subset, and a synthetic subset. Real-world recordings utilized synchronized RGB-IMU streams captured with a gold-standard industry datalogger across diverse lighting, weather, and surface conditions.
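Per-class F1 matters here because overall accuracy can hide failures on rare surfaces. The sketch below computes per-class and macro-averaged F1 from scratch. The class names and label lists are invented for illustration and are not taken from ROAD or PVS.

```python
def per_class_f1(y_true, y_pred):
    """F1 = 2*TP / (2*TP + FP + FN), computed separately for every label."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(scores):
    """Unweighted mean over classes, so minority classes count as much as common ones."""
    return sum(scores.values()) / len(scores)


# Hypothetical imbalanced sample: 8 "asphalt" windows and 2 "dirt" windows.
y_true = ["asphalt"] * 8 + ["dirt"] * 2
y_pred = ["asphalt"] * 10  # a classifier that never predicts the minority class

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
scores = per_class_f1(y_true, y_pred)
macro = macro_f1(scores)
```

In this toy case accuracy is 0.8, yet the F1-score for the minority class is 0, which pulls the macro average down to roughly 0.44. That gap is why gains on minority classes are reported separately from headline accuracy.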

The vision-only subset was designed to assess robustness under adverse illumination and heterogeneous capture setups, while the synthetic subset enabled the study of out-of-distribution generalization in challenging scenarios. The results indicate that the system effectively integrates visual cues with vibration data from inertial sensors, reducing ambiguity in fine-grained scenarios and enhancing robustness. The evaluation suggests that the framework maintains accuracy even under significant environmental variability, such as severe dust or combined night-rain conditions. The ROAD dataset provides the first systematic basis for evaluating multimodal RSC in realistic and heterogeneous environments, offering long, continuous driving sequences with synchronized camera and IMU data.
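Synchronized streams make it possible to pair every camera frame with the inertial samples recorded just before it. The article does not describe how ROAD does this, so the following is only one plausible sketch, assuming sorted integer timestamps in milliseconds, a 100 Hz IMU, and a one-second look-back window. The function name and all values are hypothetical.

```python
from bisect import bisect_right


def imu_window_for_frame(frame_ts_ms, imu_ts_ms, imu_values, window_ms=1000):
    """Return the IMU samples with timestamps in (frame_ts - window, frame_ts].

    imu_ts_ms must be sorted in ascending order and aligned with imu_values.
    """
    start = bisect_right(imu_ts_ms, frame_ts_ms - window_ms)
    end = bisect_right(imu_ts_ms, frame_ts_ms)
    return imu_values[start:end]


# Hypothetical 3 s recording: one IMU sample every 10 ms (100 Hz).
imu_ts_ms = list(range(0, 3000, 10))
imu_values = list(range(len(imu_ts_ms)))  # stand-in for accelerometer readings

# IMU context for a camera frame captured at t = 2000 ms.
window = imu_window_for_frame(2000, imu_ts_ms, imu_values)
```

Here `window` holds the 100 samples recorded during the second leading up to the frame. Using binary search keeps the lookup cheap even on long, continuous driving sequences.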

Scientists recorded substantial performance degradation in existing methods when tested on ROAD, highlighting the need for more robust algorithms. Analysis indicates that IMU cues primarily enhance robustness and temporal stability, particularly during ambiguous surface transitions, rather than acting as the primary driver of accuracy. This work establishes a new benchmark and framework for advancing road surface perception in intelligent vehicle systems.
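One simple way to put a number on temporal stability is the fraction of consecutive predictions that change label. This is an illustrative metric chosen for this sketch, not necessarily the one used in the paper, and both prediction sequences below are made up.

```python
def flip_rate(labels):
    """Fraction of consecutive prediction pairs whose label differs."""
    if len(labels) < 2:
        return 0.0
    flips = sum(1 for a, b in zip(labels, labels[1:]) if a != b)
    return flips / (len(labels) - 1)


# Hypothetical per-window predictions across one asphalt-to-dirt transition.
flickering = ["asphalt"] * 4 + ["dirt", "asphalt", "dirt"] + ["dirt"] * 4
stable = ["asphalt"] * 5 + ["dirt"] * 6

flickering_rate = flip_rate(flickering)  # three label changes over ten pairs
stable_rate = flip_rate(stable)          # one label change over ten pairs
```

Both sequences cover the same single surface change, but the flickering one switches label three times around the transition. A lower flip rate on the same route would be consistent with the stabilising role attributed to IMU cues above.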

Cross-attention improves road surface classification performance

Scientists have developed a new multimodal framework for classifying road surfaces using both camera and inertial measurement unit (IMU) data under realistic driving conditions. The framework relies on bidirectional cross-attention and adaptive gating, which allow the system to selectively utilise information from each sensor, improving resilience to changes in environmental conditions. The framework was evaluated using a newly introduced dataset, ROAD, which comprises real-world multimodal recordings, a large vision-only subset, and a synthetic subset. The authors acknowledge that the synthetic data, while valuable for studying out-of-distribution generalisation, may not fully capture the complexity of real-world scenarios. Future work could focus on further refining the synthetic data generation process to better reflect real-world variability and exploring the application of this framework to other environment-aware predictive maintenance systems.

👉 More information

🗞 A New Dataset and Framework for Robust Road Surface Classification via Camera-IMU Fusion

🧠 ArXiv: https://arxiv.org/abs/2601.20847