Multimodal large language models increasingly rely on visual understanding to perform complex tasks, yet current training methods often prioritize text and treat visual input as secondary information. Penghao Wu, Yushan Zhang from Linköping University, and Haiwen Diao from Nanyang Technological University, along with colleagues, address this limitation by introducing Visual Jigsaw, a post-training framework that directly strengthens a model’s ability to interpret visual data. The team formulates a self-supervised task in which the model must restore the original order of shuffled image patches or video clips, or rank marked points in a 3D scene by depth, learning to order visual information without external annotations or generative components. This approach delivers substantial improvements in fine-grained perception, temporal reasoning, and 3D spatial understanding, demonstrating the potential of vision-centric training to enhance multimodal AI systems.

Visual Jigsaw for Multimodal Learning

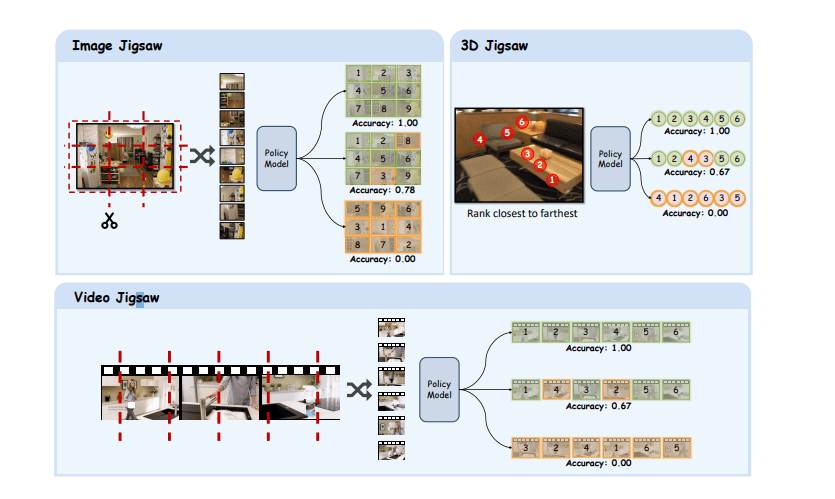

This appendix details experiments with Visual Jigsaw, a self-supervised post-training technique designed to improve multimodal large language models (MLLMs), with the goal of strengthening their visual perception and reasoning. It supplements the main paper with further detail on the experimental setup and results. At the core of the research, MLLMs are trained to solve jigsaw-style ordering puzzles built from images, videos, and 3D scenes, which requires them to understand visual relationships and spatial arrangements.

The appendix includes visual examples of these puzzles for each modality, illustrating the specific tasks the models are trained on, along with qualitative examples of the model’s improved performance on various tasks after Visual Jigsaw training. It also lists the exact prompts used during training and evaluation, which supports reproducibility. These prompts instruct the model to write out its reasoning before giving a final answer, which makes its outputs easier to inspect. The appendix also describes how the method is applied to each type of visual data.
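Because the prompts ask for reasoning first and a final answer second, an evaluation script has to pull the answer out of free-form text before it can be scored. The sketch below assumes the final answer is wrapped in `<answer>...</answer>` tags and contains a list of integer labels; those tag names and the `extract_order` helper are hypothetical, so the actual prompt wording should be checked in the paper’s appendix.

```python
import re
from typing import List, Optional

# Assumed answer format: <answer>3, 1, 2</answer>. The tag names are hypothetical.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def extract_order(response: str) -> Optional[List[int]]:
    """Return the integers inside the last <answer> block, or None if there is none."""
    blocks = ANSWER_RE.findall(response)
    if not blocks:
        return None
    # Take the last block so any tags quoted earlier in the reasoning are ignored.
    numbers = re.findall(r"\d+", blocks[-1])
    return [int(n) for n in numbers] if numbers else None


reply = "<think>The sky tile must be on top.</think><answer>3, 1, 2</answer>"
print(extract_order(reply))  # [3, 1, 2]
```

Returning `None` for a missing or empty answer lets a downstream scorer treat malformed outputs separately from wrong orderings.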

For images, the model reconstructs images from shuffled tiles. For videos, it reorders shuffled video clips into a coherent sequence. For 3D data, it orders marked points by their distance from the camera. In every case the task reduces to predicting an ordering, so the same formulation applies across all three modalities.
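For the 3D variant, the ground-truth order can be read directly off depth values. The sketch below assumes an RGB-D-style per-pixel depth map and a handful of marked pixel locations labelled 1..K in the order they are presented; it returns the labels sorted from nearest to farthest. The function name, the nearest-first convention, and the input layout are illustrative assumptions rather than the paper’s exact setup.

```python
from typing import List, Tuple

import numpy as np


def depth_order_target(depth: np.ndarray, points: List[Tuple[int, int]]) -> List[int]:
    """Return 1-based point labels ordered from nearest to farthest.

    depth:  H x W array of per-pixel distances from the camera.
    points: (row, col) pixel positions; the point at list index k carries label k + 1.
    """
    depths = np.array([depth[r, c] for r, c in points])
    # A stable sort keeps the lower label first if two depths tie.
    order = np.argsort(depths, kind="stable")
    return [int(i) + 1 for i in order]


depth_map = np.array([[1.0, 3.0],
                      [2.0, 0.5]])
marked = [(0, 1), (1, 0), (1, 1)]  # depths 3.0, 2.0, 0.5
print(depth_order_target(depth_map, marked))  # [3, 2, 1]
```

A practical pipeline would probably also require the marked points to differ in depth by some margin so that the correct order is unambiguous; that filtering step is left out of this sketch.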

Visual Reasoning via Jigsaw Reconstruction

The researchers developed Visual Jigsaw, a post-training framework that enhances visual understanding in multimodal large language models (MLLMs). Because existing post-training methods tend to focus on text, they designed a self-supervised task that directly strengthens an MLLM’s ability to interpret visual information. Visual inputs (images, videos, and 3D data) are partitioned into segments whose natural order is then shuffled. The MLLM is tasked with reconstructing the correct order, which forces it to reason about visual relationships rather than rely on textual cues.
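The partition-shuffle-reconstruct recipe does not depend on the modality, so it can be sketched once over any list of segments. The example below applies it to video: it splits a frame list into equal-length clips, shuffles them, and records the target answer. The answer convention assumed here (for each original position, the 1-based label of the shuffled clip that belongs there) and the helper names `split_into_clips` and `make_ordering_task` are illustrative, not taken from the paper.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_into_clips(frames: Sequence[T], num_clips: int) -> List[List[T]]:
    """Split frames into num_clips equal clips, dropping any leftover frames at the end."""
    size = len(frames) // num_clips
    return [list(frames[k * size:(k + 1) * size]) for k in range(num_clips)]


def make_ordering_task(segments: Sequence[T], seed: int = 0) -> Tuple[List[T], List[int]]:
    """Shuffle segments and return (shuffled, target).

    target[i] is the 1-based label of the shuffled segment that belongs at original position i.
    """
    rng = random.Random(seed)
    perm = list(range(len(segments)))
    rng.shuffle(perm)  # perm[j] = original index of the segment shown at position j
    shuffled = [segments[p] for p in perm]
    target = [0] * len(perm)
    for j, p in enumerate(perm):
        target[p] = j + 1  # original position p is filled by shuffled label j + 1
    return shuffled, target


frames = list(range(12))  # stand-ins for decoded video frames
clips = split_into_clips(frames, num_clips=4)
shuffled, target = make_ordering_task(clips, seed=1)
# Following the target labels restores the original chronological order.
assert [shuffled[label - 1] for label in target] == clips
```

Because the target is computed from the shuffle itself, every generated puzzle comes with an exact answer key at no labelling cost.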

For images, the team divided each image into non-overlapping patches, shuffled them, and trained the model to predict the original arrangement. This forces the MLLM to analyze visual features and spatial relationships to reassemble the image correctly. The concept extends to video, where the model must recover the chronological order of shuffled temporal clips, improving temporal reasoning. For 3D data, the model is given points with different depth values and must recover their correct depth order. The team trained the model with reinforcement learning with verifiable rewards (RLVR): because the correct order is known from how each puzzle was constructed, the reward can be computed automatically, without manual annotations.
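The image variant can be made concrete with NumPy: cut the image into a grid of non-overlapping patches, permute them, and keep the inverse permutation as the answer. The 3x3 grid, the raster-order answer convention, and the helper names below are assumptions chosen for illustration; the paper’s grid sizes and answer format may differ.

```python
from typing import List, Tuple

import numpy as np


def image_to_patches(img: np.ndarray, rows: int, cols: int) -> List[np.ndarray]:
    """Cut img into rows x cols non-overlapping patches in raster order (remainder pixels are cropped)."""
    ph, pw = img.shape[0] // rows, img.shape[1] // cols
    return [img[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
            for r in range(rows) for c in range(cols)]


def shuffle_patches(patches: List[np.ndarray],
                    rng: np.random.Generator) -> Tuple[List[np.ndarray], List[int]]:
    """Return (shuffled patches, target), where target[i] is the 1-based shuffled label for raster slot i."""
    perm = rng.permutation(len(patches))  # perm[j] = raster index of the patch shown at position j
    shuffled = [patches[p] for p in perm]
    target = (np.argsort(perm) + 1).tolist()  # inverse permutation, made 1-based
    return shuffled, target


def reassemble(patches: List[np.ndarray], rows: int, cols: int) -> np.ndarray:
    """Stitch raster-ordered patches back into one image."""
    return np.vstack([np.hstack(patches[r * cols:(r + 1) * cols]) for r in range(rows)])


image = np.arange(6 * 6 * 3).reshape(6, 6, 3)  # toy 6x6 RGB image
patches = image_to_patches(image, rows=3, cols=3)
shuffled, target = shuffle_patches(patches, np.random.default_rng(0))
restored = reassemble([shuffled[label - 1] for label in target], rows=3, cols=3)
assert np.array_equal(restored, image)
```

Storing the inverse permutation (via `np.argsort`) means the answer reads in the image’s own raster order, which is a natural thing to ask a model to output.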

Experiments show that the approach significantly improves performance in fine-grained perception, temporal reasoning, and 3D spatial understanding. The trained model also reaches high accuracy on the image jigsaw task itself, with similar results for the video and 3D variants. The method provides a lightweight, verifiable objective that strengthens visual perception without altering the MLLM architecture or introducing additional generative modules.
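Because every puzzle carries its own answer key, the reward can be checked mechanically. The function below is one plausible shape for such a verifiable reward, not the paper’s definition: full reward for an exact match, a small partial credit (`partial_weight`, set to 0.2 here purely as an assumption) scaled by the fraction of correctly placed items for a valid but imperfect permutation, and zero for malformed answers.

```python
from typing import List, Optional


def jigsaw_reward(pred: Optional[List[int]], target: List[int],
                  partial_weight: float = 0.2) -> float:
    """Hypothetical verifiable reward for an ordering answer."""
    if pred is None or sorted(pred) != sorted(target):
        return 0.0  # missing answer, or not a permutation of the expected labels
    if pred == target:
        return 1.0  # exact reconstruction
    correct = sum(p == t for p, t in zip(pred, target))
    return partial_weight * correct / len(target)


print(jigsaw_reward([2, 1, 3], [2, 1, 3]))  # 1.0
print(jigsaw_reward([1, 1, 3], [2, 1, 3]))  # 0.0
```

Keeping any partial credit well below the exact-match reward is meant to discourage a policy from settling for near-misses; whether and how the paper grants partial credit should be checked against the source.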

Visual Jigsaw Improves Multimodal Understanding Significantly

Training models to reconstruct shuffled visual inputs produced substantial gains in fine-grained perception, temporal reasoning, and 3D spatial understanding. The method adds no visual generative components and derives its supervisory signal automatically from the construction of each puzzle, so no manual annotations are needed. Experiments show that the framework consistently improves performance across a diverse suite of vision-centric benchmarks covering image and video understanding.

On image benchmarks, the method improved results on fine-grained perception and spatial understanding, on visual grounding, and on compositional understanding, indicating a more detailed grasp of visual scenes. On video benchmarks, it consistently improved performance across the benchmarks and frame settings tested. The authors attribute these gains to what the jigsaw tasks demand: to solve them, the model must capture local patch details, infer the global spatial layout, and reason about relations between patches, which are the same skills that fine-grained, spatial, and temporal understanding rely on. Taken together, the results suggest that Visual Jigsaw is a promising route to more capable and versatile MLLMs.

Conclusions and Outlook

Visual Jigsaw is a self-supervised method for enhancing the visual understanding of multimodal large language models. It addresses a limitation of current post-training approaches, which often prioritize text-based reasoning while underusing visual information. Visual Jigsaw frames visual understanding as an ordering problem: visual inputs are partitioned and shuffled, and the model must reconstruct the correct sequence. Because the answer is a short sequence of indices written as text, the approach needs no pixel-level visual reconstruction and works with existing text-output MLLMs without architectural changes.

Experiments across images, videos, and 3D data show that Visual Jigsaw consistently improves fine-grained perception, temporal reasoning, and 3D spatial understanding. Importantly, these gains are achieved without compromising the model’s existing reasoning abilities. The authors note that this work focuses on self-supervised learning and that future research could combine the approach with other learning paradigms. They highlight perception-focused tasks as a complement to existing post-training methods for building more robust and capable multimodal models.

👉 More information

🗞 Visual Jigsaw Post-Training Improves MLLMs

🧠 ArXiv: https://arxiv.org/abs/2509.25190