Accurate depth perception is crucial for the reliable operation of unmanned systems, and researchers continually seek ways to achieve it with efficient, low-cost technologies. Chang Liu, Juan Li, and Sheng Zhang of the Beijing Institute of Technology, with colleagues, tackle monocular depth estimation on embedded systems with a new model, BoRe-Depth. With just 8.7 million parameters, it produces more accurate depth estimates and sharper object boundaries than existing lightweight models. BoRe-Depth adaptively fuses depth features and integrates semantic knowledge into its encoder. It runs at 50.7 frames per second on an NVIDIA Jetson Orin, a substantial advance for resource-constrained robotic applications.

Semantic Guidance Improves Monocular Depth Estimation

Scientists are advancing monocular depth estimation, the task of recovering distance from a single image, with a focus on the accuracy and efficiency needed in real-world applications such as robotics and autonomous driving. This work incorporates semantic information (knowledge of which objects are present in a scene) to refine depth estimates, and pays particular attention to object boundaries. The research also investigates efficient network designs and transformers as ways to achieve both accuracy and speed. The method was evaluated on standard datasets such as NYU Depth Dataset V2, the KITTI Dataset, and SIL3D. Performance was measured with Absolute Relative Difference (AbsRel), Root Mean Squared Error (RMSE), δ1 threshold accuracy, and the Structural Similarity Index (SSIM).
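The three error metrics named above have standard definitions in the depth estimation literature. The sketch below computes them in plain Python over flattened depth maps. It assumes that pixels with a ground-truth depth of zero are invalid and that δ1 uses the usual 1.25 threshold. The function name `depth_metrics` is illustrative and is not taken from the BoRe-Depth code. SSIM is omitted because it requires windowed image statistics.

```python
import math


def depth_metrics(pred, gt, eps=1e-8):
    """Compute AbsRel, RMSE and delta1 over pixels with valid ground truth (gt > 0).

    pred, gt: flattened depth maps (sequences of floats, same length).
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    n = len(pairs)
    # Absolute relative difference: mean of |pred - gt| / gt
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    # Root mean squared error in the depth unit (e.g. metres)
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # delta1: fraction of pixels where max(pred/gt, gt/pred) < 1.25
    delta1 = sum(1 for p, g in pairs if max(p / g, g / max(p, eps)) < 1.25) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


# The last pixel has gt = 0, so it is ignored.
m = depth_metrics([1.0, 2.0, 3.0, 5.0], [1.0, 2.5, 3.0, 0.0])
print(m)  # abs_rel ≈ 0.067, rmse ≈ 0.289, delta1 ≈ 0.667
```

Note that a ratio of exactly 1.25 (here 2.5 / 2.0) does not count toward δ1 because the comparison is strict.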

Enhanced Detail and Boundary Refinement Training

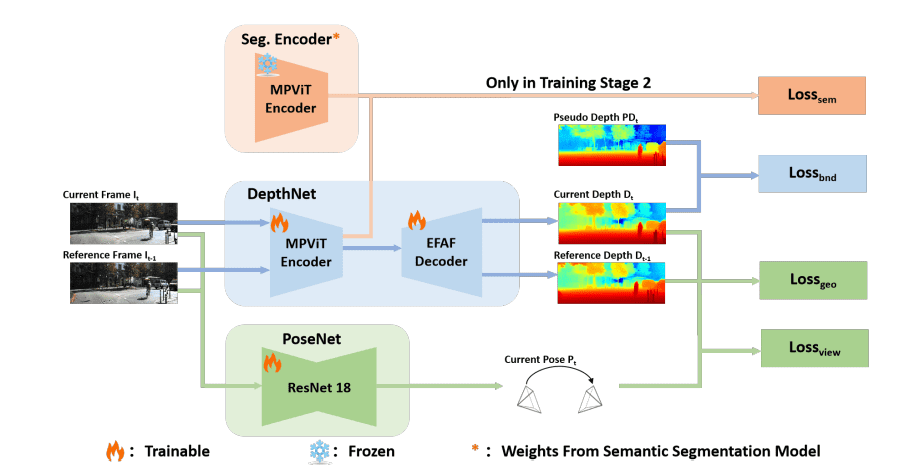

Researchers have developed BoRe-Depth, a model for accurate monocular depth estimation on embedded systems. It addresses a common weakness of existing lightweight approaches, which often produce blurred object boundaries. The team designed an Enhanced Feature Adaptive Fusion Module (EFAF) to improve detail representation, drawing on techniques that combine and adaptively weight multi-level features. Simple upsampling discards information, whereas EFAF makes fuller use of the encoder's features to recover global detail. To further sharpen boundaries, the team used a two-stage training strategy. A coarse model is first trained with conventional methods. A semantic information loss is then introduced that prioritizes boundary regions and improves overall accuracy.
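The article does not describe EFAF's internals beyond the adaptive fusion of multi-level features. The sketch below illustrates only the general idea. It upsamples single-channel feature maps from several levels to a common resolution, then blends them with softmax-normalised per-level weights that a network would normally learn. The per-level scalar weights, the nearest-neighbour upsampling, and all function names are assumptions. The actual module may use convolutions and spatially varying attention instead.

```python
import math


def upsample_nearest(fmap, out_h, out_w):
    """Nearest-neighbour upsampling of a 2-D feature map given as a list of rows."""
    in_h, in_w = len(fmap), len(fmap[0])
    return [[fmap[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def adaptive_fuse(feature_maps, logits):
    """Hypothetical adaptive fusion: resize every level to the finest resolution,
    then take a weighted sum with softmax-normalised weights (one per level)."""
    out_h = max(len(f) for f in feature_maps)
    out_w = max(len(f[0]) for f in feature_maps)
    weights = softmax(logits)
    resized = [upsample_nearest(f, out_h, out_w) for f in feature_maps]
    return [[sum(w * r[i][j] for w, r in zip(weights, resized))
             for j in range(out_w)] for i in range(out_h)]


fine = [[0.0] * 4 for _ in range(4)]    # 4x4 map from a shallow level
coarse = [[2.0, 4.0], [6.0, 8.0]]       # 2x2 map from a deeper level
fused = adaptive_fuse([fine, coarse], [0.0, 0.0])  # equal logits -> weights 0.5, 0.5
print(fused[0][0], fused[3][3])
```

Learning the logits lets training decide how much each level contributes. A plain upsample-and-add, by contrast, fixes those contributions in advance.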

The semantic information loss measures the difference between features from a pre-trained semantic segmentation encoder and those from the depth estimation encoder. This transfers semantic knowledge into the depth model and improves its ability to recognize objects. The team deployed BoRe-Depth on an NVIDIA Jetson Orin platform, where it runs at 50.7 frames per second with only 8.7 million parameters. This shows that the model is light enough for real-time embedded use while maintaining accuracy. Extensive tests on multiple challenging datasets show that BoRe-Depth significantly outperforms previous lightweight models in both accuracy and boundary definition. The team has made the code publicly available to support further research.
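Below is a minimal sketch of such a feature-alignment loss. It assumes both encoders output feature maps with matching spatial size and channel count. It also assumes the difference is measured as a mean squared error, since the article does not specify the distance. The semantic encoder is treated as frozen, so in a real training loop only the depth encoder would receive gradients. The optional per-position `weights` argument is hypothetical and shows only how boundary positions could be emphasised.

```python
def semantic_feature_loss(depth_feats, sem_feats, weights=None):
    """Mean squared difference between depth-encoder and (frozen) semantic-encoder features.

    depth_feats, sem_feats: lists of per-position feature vectors with identical shapes.
    weights: optional per-position weights (hypothetical, e.g. larger at object boundaries).
    """
    if len(depth_feats) != len(sem_feats):
        raise ValueError("feature maps must have the same number of positions")
    if weights is None:
        weights = [1.0] * len(depth_feats)
    total, norm = 0.0, 0.0
    for d, s, w in zip(depth_feats, sem_feats, weights):
        if len(d) != len(s):
            raise ValueError("channel dimensions must match")
        # Per-position MSE across channels
        sq = sum((a - b) ** 2 for a, b in zip(d, s)) / len(d)
        total += w * sq
        norm += w
    return total / norm


depth = [[1.0, 2.0], [3.0, 4.0]]  # two positions, two channels each
sem = [[1.0, 2.0], [3.0, 6.0]]
print(semantic_feature_loss(depth, sem))                 # unweighted
print(semantic_feature_loss(depth, sem, [1.0, 3.0]))     # second position up-weighted
```

If the two encoders have different channel counts, a learned projection such as a 1x1 convolution would normally map the depth features into the semantic feature space first. That step is also an assumption here.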

BoRe-Depth Achieves Accurate Boundary Detail Estimation

Scientists have developed BoRe-Depth, a monocular depth estimation model designed for efficient operation on embedded systems. With only 8.7 million parameters, it can be deployed on resource-constrained platforms without sacrificing performance. Central to the design is the Enhanced Feature Adaptive Fusion Module (EFAF), which adaptively fuses depth features to better represent boundary details. Experiments show that EFAF significantly improves depth estimation quality, particularly around object edges.
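The reported parameter count and frame rate translate into concrete resource budgets. The arithmetic below assumes weights stored at 4, 2 or 1 bytes per parameter, because the article does not state the deployed precision. It counts weights only, not activations or runtime overhead. The 50.7 FPS figure is the Jetson Orin throughput reported for BoRe-Depth.

```python
def weight_memory_mb(num_params, bytes_per_param):
    """Approximate storage for model weights in megabytes (1 MB = 1e6 bytes)."""
    return num_params * bytes_per_param / 1e6


def frame_time_ms(fps):
    """Per-frame time implied by a throughput in frames per second."""
    return 1000.0 / fps


PARAMS = 8.7e6  # parameter count reported for BoRe-Depth
for name, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
    print(f"{name}: {weight_memory_mb(PARAMS, nbytes):.1f} MB")
print(f"50.7 FPS -> {frame_time_ms(50.7):.1f} ms per frame")
```

The script prints 34.8 MB, 17.4 MB and 8.7 MB for the three precisions, and about 19.7 ms per frame.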

To further refine boundary accuracy, the team used a two-stage training strategy. A semantic information loss is added in the second stage, steering the model toward boundary regions and improving its ability to recognize objects. Deployed on an NVIDIA Jetson Orin, BoRe-Depth runs at 50.7 frames per second (FPS), which is fast enough for real-time use. This makes accurate, efficient 3D perception practical for unmanned systems and other embedded applications.
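The two-stage strategy changes the training objective partway through training. Below is a minimal sketch, assuming the semantic term is added to the stage-1 depth loss with a scalar weight. `sem_weight=0.1` and the epoch split are placeholders, not values from the paper, and the function names are hypothetical.

```python
def stage_for_epoch(epoch, stage1_epochs):
    """Stage 1 (coarse training) for the first stage1_epochs epochs, stage 2 afterwards."""
    return 1 if epoch < stage1_epochs else 2


def total_loss(stage, depth_loss, semantic_loss, sem_weight=0.1):
    """Combine losses for a two-stage schedule.

    Stage 1: conventional depth loss only.
    Stage 2: depth loss plus a weighted semantic information loss.
    """
    if stage == 1:
        return depth_loss
    if stage == 2:
        return depth_loss + sem_weight * semantic_loss
    raise ValueError("stage must be 1 or 2")


# Illustrative loop with made-up loss values and a hypothetical 3 + 2 epoch split.
for epoch in range(5):
    stage = stage_for_epoch(epoch, stage1_epochs=3)
    print(epoch, stage, total_loss(stage, depth_loss=0.5, semantic_loss=2.0))
```

Starting from a coarse model means the semantic term refines an already reasonable depth predictor rather than competing with the depth loss from random initialisation.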

Real-Time Depth Estimation with Semantic Refinement

Researchers have developed BoRe-Depth, a method for real-time monocular depth estimation that delivers high-quality results on embedded systems. The team addressed the poor accuracy and blurred object boundaries common in existing low-cost depth estimation techniques. BoRe-Depth uses a large depth estimation model to generate accurate depth maps, which then serve as training targets in a self-supervised learning process. The method rests on two components: the Enhanced Feature Adaptive Fusion Module, which improves boundary detail representation, and the integration of semantic knowledge into the model's encoder.
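The article does not say how the large model's depth maps are turned into a training signal. Large pretrained depth models often predict depth only up to an unknown scale and shift. One common option, assumed here, aligns the student's prediction to the teacher map by least squares before taking an L1 error, as in the scale-and-shift-invariant losses used to train MiDaS. The function names are hypothetical.

```python
def align_scale_shift(pred, target):
    """Least-squares scale s and shift t minimising sum((s * p + t - g)^2)."""
    n = len(pred)
    sp, sg = sum(pred), sum(target)
    spp = sum(p * p for p in pred)
    spg = sum(p * g for p, g in zip(pred, target))
    denom = n * spp - sp * sp
    if denom == 0:  # constant prediction: fall back to matching the target mean
        return 0.0, sg / n
    s = (n * spg - sp * sg) / denom
    t = (sg - s * sp) / n
    return s, t


def pseudo_label_l1(pred, teacher_depth):
    """Mean absolute error between the aligned student prediction and the teacher map."""
    s, t = align_scale_shift(pred, teacher_depth)
    return sum(abs(s * p + t - g) for p, g in zip(pred, teacher_depth)) / len(pred)


# Teacher = 2 * student + 1, so alignment recovers (2.0, 1.0) and the loss is zero.
print(align_scale_shift([1.0, 2.0, 3.0], [3.0, 5.0, 7.0]))
print(pseudo_label_l1([1.0, 2.0, 3.0], [3.0, 5.0, 7.0]))
```

Aligning before comparing prevents the student from being penalised for a global scale or offset that the teacher itself cannot pin down.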

This semantic integration refines depth estimation by supplying rich information about object shape, allowing the model to improve progressively on its initial coarse estimates. The model was validated on multiple datasets covering indoor and outdoor scenes as well as static and dynamic environments. On these it significantly outperforms existing lightweight monocular depth estimation techniques. Detailed ablation studies show that each component contributes to the overall performance. Future work may focus on making this training pipeline more robust or on exploring alternative self-supervised learning strategies.

👉 More information

🗞 BoRe-Depth: Self-supervised Monocular Depth Estimation with Boundary Refinement for Embedded Systems

🧠 ArXiv: https://arxiv.org/abs/2511.04388