Large language models excel at complex reasoning, but their substantial computational demands hinder widespread practical application. Antonios Valkanas from McGill University, Soumyasundar Pal from Huawei Montréal, and Pavel Rumiantsev, also from McGill University, with colleagues, address this challenge by introducing C3PO, a novel framework for optimising cascaded language models. This research presents a self-supervised method that intelligently distributes queries across models of varying computational cost, ensuring efficient reasoning without relying on labelled training data. By focusing on minimising potential performance loss compared to using the most powerful model alone, C3PO guarantees both cost control and reliable generalisation, achieving state-of-the-art results on challenging reasoning benchmarks and paving the way for scalable deployment of large language models.

C3PO Performance Across Diverse Reasoning Benchmarks

The paper reports experimental results alongside a completed preparation checklist. Experiments compare C3PO against existing approaches on datasets including AQuA, GSM8K, and BigBench Temporal Sequences, grouping results into categories by accuracy and cost-effectiveness. C3PO's results frequently fall into the best-performing category. Key observations are the emphasis on cost-effectiveness, strong performance across multiple datasets, and a commitment to transparency and reproducibility.

Self-Supervised Cascade Optimization for Language Models

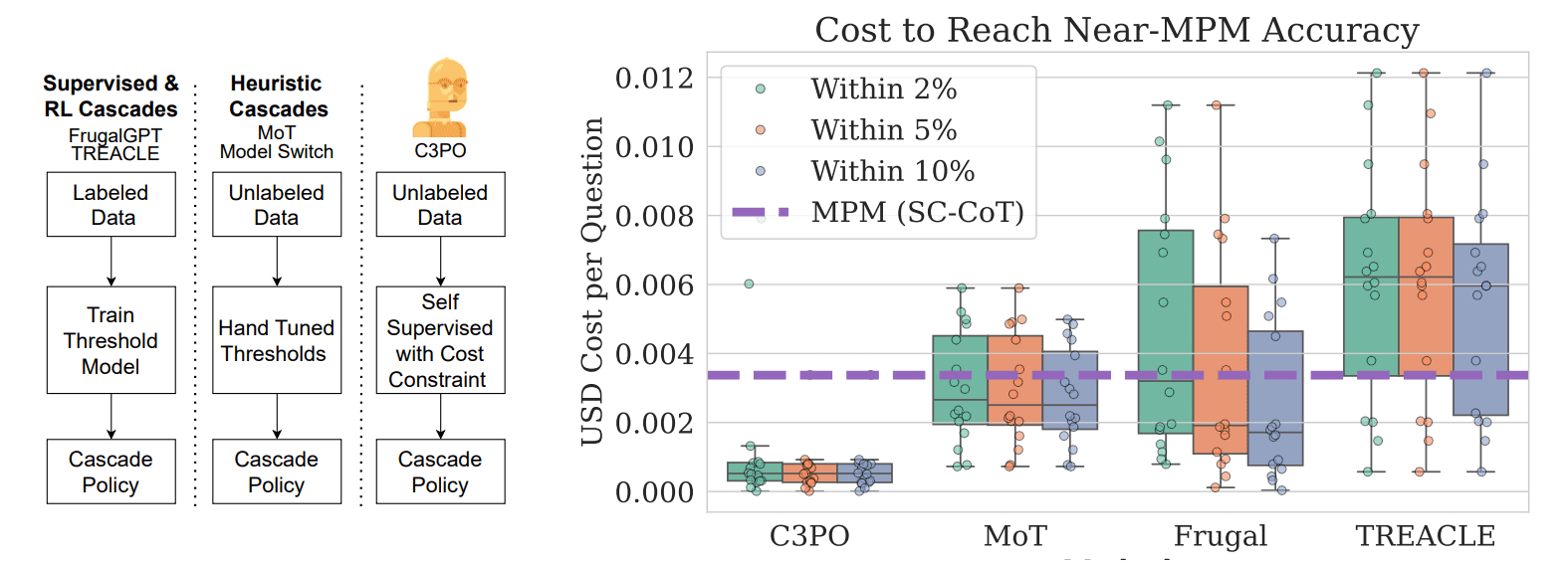

This study introduces Cost Controlled Cascaded Prediction Optimization (C3PO), a framework for optimizing cascades of large language models (LLMs) without requiring labeled data. Because powerful LLMs carry substantial inference costs, the authors designed a system in which smaller, less expensive models handle simpler queries and only the most challenging cases are escalated to larger models. C3PO operates in a self-supervised manner: it learns when to exit the cascade by analyzing consistency between a smaller model and the most powerful model (MPM) in the system, minimizing regret with respect to the MPM. Experiments used a cascade of LLAMA models on diverse reasoning benchmarks, including GSM8K, MATH-500, BigBench-Hard, and AIME. C3PO achieves state-of-the-art performance, surpassing existing cascading baselines in both accuracy and cost-efficiency, and often needs less than 20% of the MPM's cost to stay within a 2%, 5%, or 10% accuracy margin of it.
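The escalation logic described above can be illustrated with a minimal sketch. This is not the authors' implementation: the model interface (a callable returning an answer and a confidence score), the per-model costs, and the fixed thresholds are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface (an assumption, not from the paper):
# takes a prompt and returns (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def run_cascade(
    prompt: str,
    models: List[Model],
    costs: List[float],
    thresholds: List[float],
) -> Tuple[str, float, int]:
    """Query models from cheapest to most expensive and exit early when confident.

    `thresholds` holds one exit threshold per non-final model, so it has
    len(models) - 1 entries. The last model (the MPM) always answers.
    Returns (answer, total cost paid, index of the model that answered).
    """
    total_cost = 0.0
    for i, (model, cost) in enumerate(zip(models, costs)):
        answer, confidence = model(prompt)
        total_cost += cost  # every model that is queried is paid for
        is_last = i == len(models) - 1
        if is_last or confidence >= thresholds[i]:
            return answer, total_cost, i
    raise ValueError("models must be a non-empty list")
```

Note that an escalated query pays for every model it passed through, which is why the exit thresholds determine the overall cost of the cascade.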

Cascaded LLMs Optimised with Self-Supervised Exit Policy

C3PO optimizes cascaded large language models (LLMs) to reduce inference costs while keeping accuracy close to that of the most powerful model. Its self-supervised approach eliminates the need for the expensive labeled datasets typically required to train LLM cascades, which makes it easy to adapt to new tasks and domains. C3PO learns to exit the cascade efficiently, stopping inference at cheaper models, by exploiting consistency between a small model and the most powerful model in the cascade. Experiments demonstrate state-of-the-art performance across a diverse set of reasoning benchmarks, including GSM8K, MATH-500, BigBench-Hard, and AIME. C3PO surpasses existing cascading approaches in both accuracy and cost-efficiency, requiring less than 20% of the cost of the most powerful model to achieve an accuracy gap of at most 2%, 5%, or 10%. The research also provides theoretical guarantees: conformal cost bounds that control inference cost, and PAC-Bayesian generalization bounds certifying that the learned cascade decision rules generalize. It is data-efficient as well, learning from a small “self-supervision” pool of unlabeled prompts.
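To make the self-supervision idea concrete, the sketch below picks an exit threshold for one small model using only agreement with the most powerful model on unlabeled prompts. It is a simplified stand-in under stated assumptions, not the optimization used in the paper: the function name, the use of a scalar confidence score, and the `max_disagreement` budget are all hypothetical.

```python
from typing import Sequence


def fit_exit_threshold(
    confidences: Sequence[float],
    agrees_with_mpm: Sequence[bool],
    max_disagreement: float,
) -> float:
    """Return the smallest confidence threshold that keeps disagreement low.

    confidences[i]     : small model's confidence on unlabeled prompt i
    agrees_with_mpm[i] : whether its answer matched the most powerful model
    max_disagreement   : tolerated disagreement rate among early exits

    No ground-truth labels are used; the MPM's answers act as the target.
    """
    for t in sorted(set(confidences)):
        # Prompts that would exit at the small model under threshold t.
        exited = [a for c, a in zip(confidences, agrees_with_mpm) if c >= t]
        disagreement = 1.0 - sum(exited) / len(exited)
        if disagreement <= max_disagreement:
            return t
    return float("inf")  # no threshold qualifies: always escalate
```

A lower threshold lets more prompts exit early and so reduces cost, which is why the sketch returns the smallest threshold that satisfies the disagreement budget.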

Cascaded LLMs Optimised Without Labelled Data

Scientists have developed C3PO, a new framework for optimizing cascaded large language models, addressing the challenge of high inference costs that limit real-world deployment. This system intelligently manages a cascade of models, using smaller, cheaper models for simple queries and escalating only the most difficult cases to more powerful ones, achieving this without requiring labeled training data. The research team demonstrates both theoretical guarantees for cost control and generalization accuracy, alongside strong empirical results on challenging reasoning benchmarks including GSM8K, MATH-500, and BigBench-Hard, consistently outperforming existing cascading methods. The system exhibits robustness when applied to different reasoning tasks than those used during training, demonstrating its adaptability.
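As a rough illustration of how a conformal-style guarantee on cost can work, the sketch below applies the standard split-conformal quantile to per-prompt costs observed on a held-out calibration set. This is the textbook construction, assumed here for illustration; the paper's own cost bound may be built differently, and the function name is hypothetical.

```python
import math
from typing import Sequence


def conformal_cost_bound(calibration_costs: Sequence[float], alpha: float) -> float:
    """Upper bound on the cost of a new prompt, valid with probability >= 1 - alpha.

    Uses the ceil((n + 1) * (1 - alpha))-th smallest calibration cost. The
    guarantee assumes the new prompt is exchangeable with the calibration
    prompts (for example, drawn i.i.d. from the same distribution).
    """
    n = len(calibration_costs)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: only a trivial bound holds.
        return float("inf")
    return sorted(calibration_costs)[k - 1]
```

With few calibration prompts and a small `alpha`, the function returns infinity, reflecting that a tight high-probability bound needs enough calibration data.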

👉 More information

🗞 C3PO: Optimized Large Language Model Cascades with Probabilistic Cost Constraints for Reasoning

🧠 ArXiv: https://arxiv.org/abs/2511.07396