Microsoft researchers published "Phi-4-Mini-Reasoning: Exploring the Limits of Small Reasoning Language Models in Math", detailing how a compact 3.8B-parameter model outperformed larger models on math reasoning tasks through a systematic training approach that leverages high-quality chain-of-thought data.

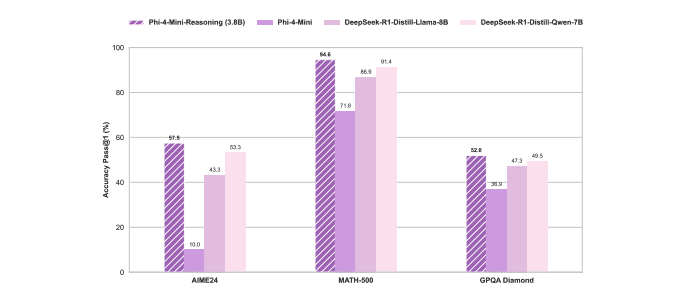

The study presents a four-step training method for enhancing reasoning in small language models (SLMs). The approach consists of mid-training on synthetic long chain-of-thought (CoT) data, supervised fine-tuning, Rollout DPO, and reinforcement learning with verifiable rewards. Applied to Phi-4-Mini, the recipe produced a model that outperforms larger models such as DeepSeek-R1-Distill-Qwen-7B and DeepSeek-R1-Distill-Llama-8B on math tasks. The results demonstrate that high-quality CoT data and a systematic training recipe can unlock strong reasoning capabilities in resource-constrained SLMs.
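To make the last step more concrete, here is a minimal sketch of what a "verifiable reward" for math can look like: the reward is computed by checking the model's final answer against a known reference rather than by a learned judge. The function names and the convention that the final answer appears inside `\boxed{...}` are assumptions for illustration, not details taken from the paper.

```python
import re
from fractions import Fraction

def extract_boxed(text):
    """Return the content of the last \\boxed{...} in text, or None.

    Limitation of this sketch: nested braces such as
    \\boxed{\\frac{1}{2}} are not matched.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1].strip() if matches else None

def verifiable_reward(completion, reference):
    """Return 1.0 if the final boxed answer matches the reference, else 0.0."""
    answer = extract_boxed(completion)
    if answer is None:
        return 0.0  # no checkable final answer, so no reward
    try:
        # Compare numerically so that "0.5" and "1/2" count as equal.
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        # Non-numeric answers fall back to exact string comparison.
        return 1.0 if answer == reference.strip() else 0.0
```

A real pipeline would need a far more robust answer parser and equivalence check; the point here is only that the reward signal comes from an automatic check.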

In recent years, large language models (LLMs) have emerged as a cornerstone of artificial intelligence, fundamentally altering how we interact with technology. These models, distinguished by their ability to process vast amounts of text data, have undergone significant advancements that enhance their performance, adaptability, and applicability across various domains. From refining conversational AI to solving complex mathematical problems, LLMs are at the forefront of technological innovation.

A pivotal advancement in LLMs is the integration of reinforcement learning (RL), particularly through methods like Reinforcement Learning from Human Feedback (RLHF). This technique involves training models based on human evaluations, where models are rewarded for producing responses that align with user preferences. For instance, platforms like ChatGPT leverage RLHF to refine their outputs iteratively, ensuring they meet user expectations more effectively.
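One common way to turn human evaluations into a training signal is to fit a reward model on pairs of responses, where annotators marked one response as preferred. The sketch below shows the pairwise logistic loss often used for this purpose; it is an illustration of the general idea, not a description of how any particular product is trained, and the function name is hypothetical.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise logistic loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response well above the rejected one, and large when it does the opposite.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

Minimizing this loss over many labeled pairs pushes the reward model to agree with human rankings; the language model is then optimized against that reward.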

The algorithms used to generate responses from LLMs have also seen notable improvements. Techniques such as beam search and temperature control are employed to enhance the quality and relevance of generated text. Beam search keeps several candidate sequences in play at once and retains only the highest-scoring ones at each step, while temperature control rescales the token probabilities before sampling: lower temperatures make output more predictable, and higher temperatures make it more varied. These methods contribute to more accurate and contextually appropriate outputs.
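Both decoding techniques can be sketched in a few lines. The code below is a toy illustration under stated assumptions: `toy_model` is a hypothetical two-token "language model" defined by a lookup table, and the function names are illustrative rather than drawn from any library.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits, temperature=1.0, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def beam_search(next_log_probs, num_steps, beam_width=2):
    """Keep the beam_width best partial sequences at every step.

    next_log_probs(prefix) must return a list of log-probabilities,
    one per candidate next token, given the tuple of tokens so far.
    Returns (sequence, total_log_prob) pairs, best first.
    """
    beams = [((), 0.0)]
    for _ in range(num_steps):
        candidates = []
        for prefix, score in beams:
            for token, log_prob in enumerate(next_log_probs(prefix)):
                candidates.append((prefix + (token,), score + log_prob))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams

def toy_model(prefix):
    """Hypothetical model: next-token probabilities depend on the prefix."""
    table = {
        (): [0.6, 0.4],
        (0,): [0.5, 0.5],
        (1,): [0.9, 0.1],
    }
    return [math.log(p) for p in table[prefix]]
```

In this toy setup, `beam_search(toy_model, 2, beam_width=1)` behaves greedily: it commits to token 0 (probability 0.6) and ends with a sequence of probability 0.3. With `beam_width=2` it also keeps token 1 alive and finds the sequence `(1, 0)`, whose probability is 0.36. That difference is the reason for tracking more than one candidate.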

Architectural changes within LLMs have been crucial in improving their efficiency and effectiveness. By modifying how information flows through the model, researchers have enhanced its ability to handle complex tasks, from natural language understanding to generation. These architectural tweaks ensure that models perform better and operate more efficiently, making them accessible for a wider range of applications.

One area where LLMs have shown remarkable progress is in mathematical reasoning. Innovations like MetaMath bootstrap training data by rewriting mathematical questions from multiple perspectives, while approaches such as LIMO show that a small set of carefully curated examples can be enough to elicit strong reasoning. Additionally, frameworks like MAmmoTH focus on hybrid instruction tuning, allowing models to handle mathematical tasks more effectively by combining chain-of-thought and program-of-thought rationales.

The development of open-source solutions, such as DAPO and SRPO, has broadened access to the reinforcement learning methods behind advanced LLMs. These projects make large-scale reinforcement learning recipes available for use across various domains, fostering collaboration and innovation within the AI community. By making these techniques accessible, they encourage a broader range of applications and improvements.

The landscape of large language models is continually evolving, driven by advancements in reinforcement learning, decoding algorithms, architectural design, mathematical reasoning, and open-source initiatives. These innovations contribute to developing more responsive, accurate, and versatile AI systems. As research continues, LLMs are poised to play an even greater role in shaping the future of technology and human interaction.

👉 More information

🗞 Phi-4-Mini-Reasoning: Exploring the Limits of Small Reasoning Language Models in Math

🧠 DOI: https://doi.org/10.48550/arXiv.2504.21233