Stochastic Gradient Descent (SGD), a cornerstone of modern machine learning, powers many of the algorithms behind large-scale data analysis, yet its behavior in complex, high-dimensional settings remains poorly understood. Jiaqi Li, Zhipeng Lou from the University of California, San Diego, Johannes Schmidt-Hieber from the University of Twente, and Wei Biao Wu now present rigorous statistical guarantees for SGD and its averaged variant in precisely these high-dimensional regimes. The team does this by adapting tools from the analysis of high-dimensional time series: they treat the sequence of SGD iterates as a dynamical system and establish its stability. The approach proves that the algorithm settles into a stable long-run behavior, and it also provides a framework for analyzing a wide range of other high-dimensional learning methods, a significant advance in the theoretical understanding of machine learning.

NeurIPS Checklist Assessment Confirms Theoretical Work

This assessment addresses every item of the NeurIPS checklist and gives a justification for each response. For each point it states whether the item applies to this theoretical work, explaining, for example, why items concerning data collection or human subjects do not. Its overall strengths are:

- Completeness: every item on the checklist receives a considered response.
- Accuracy: the answers are consistent with the description of the work as a theoretical study without data collection or human subjects.
- Justification: each answer includes a clear explanation.
- Understanding of nuance: the assessment correctly identifies which points are not applicable to the research.

The assessment also addresses potential impacts and explains that safeguards are not applicable because the work is purely theoretical. Overall, it is a well-executed self-assessment that shows a strong grasp of the NeurIPS ethical guidelines and their application to this research, and the team is well prepared for submission.

High-Dimensional Online Learning via Autoregressive Analysis

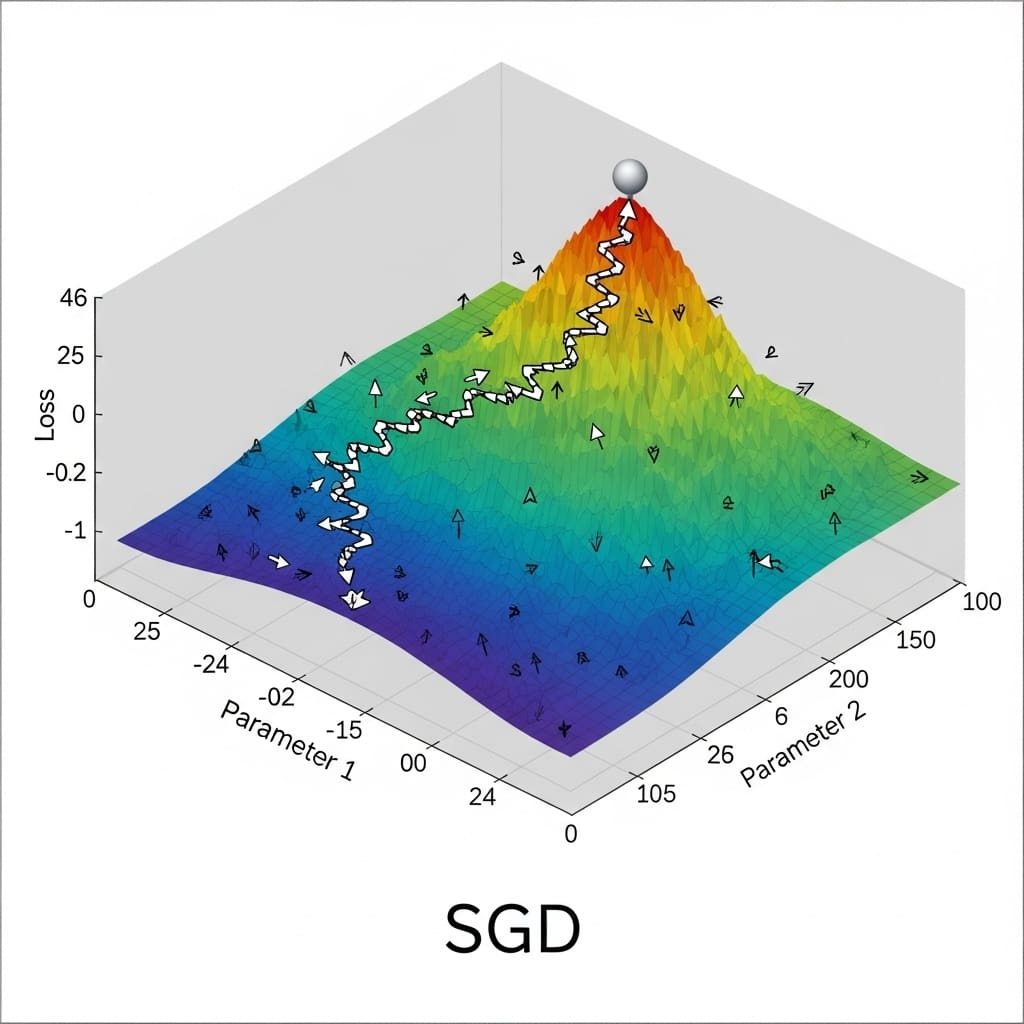

This work introduces a new approach to analyzing Stochastic Gradient Descent (SGD) and its averaged variant (ASGD) in high-dimensional learning, addressing the challenges posed by constant learning rates. The researchers transfer tools from high-dimensional time series analysis to online learning, which yields rigorous statistical guarantees in a regime where little was previously understood. The study views SGD as a nonlinear autoregressive process, so that existing coupling techniques can be applied to prove geometric-moment contraction of the high-dimensional SGD iterates; this establishes asymptotic stationarity regardless of the initial point. The authors also derive a sharp high-dimensional moment inequality that gives explicit, non-asymptotic bounds on the q-th moment of the (A)SGD error in general ℓ^s-norms for any q ≥ 2.
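To make the autoregressive view concrete, here is a minimal sketch, assuming a toy least-squares model with Gaussian features; the model, dimension, learning rate, and function names such as `coupled_distances` are illustrative choices, not taken from the paper. One SGD step is a random map θ_k = F(θ_{k-1}, ξ_k) driven by the data point ξ_k. The coupling idea is to run two copies from different starting points on the same data stream: if their distance shrinks geometrically, the chain forgets its initialization, which is the intuition behind geometric-moment contraction.

```python
import math
import random


def sgd_step(theta, x, y, lr):
    """One constant-learning-rate SGD step for the squared loss 0.5*(y - x.theta)^2.

    This is a random (here affine) autoregressive map theta_k = F(theta_{k-1}, xi_k)
    whose innovation is the data point xi_k = (x_k, y_k).
    """
    residual = y - sum(xi * ti for xi, ti in zip(x, theta))
    return [ti + lr * residual * xi for ti, xi in zip(theta, x)]


def coupled_distances(d=20, lr=0.02, steps=300, seed=0):
    """Run two SGD chains from different starts, fed the SAME data stream.

    Returns the Euclidean distance between the two chains after every step.
    """
    rng = random.Random(seed)
    theta_star = [1.0] * d                               # hypothetical true parameter
    chain_a = [0.0] * d                                  # start at the origin
    chain_b = [rng.gauss(0.0, 10.0) for _ in range(d)]   # far-away random start
    dists = []
    for _ in range(steps):
        # Shared innovation: Gaussian features and a noisy linear response.
        x = [rng.gauss(0.0, 1.0) for _ in range(d)]
        y = sum(xi * ti for xi, ti in zip(x, theta_star)) + rng.gauss(0.0, 0.5)
        chain_a = sgd_step(chain_a, x, y, lr)
        chain_b = sgd_step(chain_b, x, y, lr)
        dists.append(math.sqrt(sum((a - b) ** 2 for a, b in zip(chain_a, chain_b))))
    return dists


if __name__ == "__main__":
    dists = coupled_distances()
    for k in (0, 49, 99, 199, 299):
        print(f"step {k + 1:>3}: distance between chains = {dists[k]:.4f}")
```

For this toy model the expected squared distance between the chains contracts by the factor 1 − 2α + α²(d + 2) per step, which is below 1 whenever α < 2/(d + 2); with d = 20 and α = 0.02 the factor is about 0.97, so the printed distances decay roughly geometrically.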

Because the moment bound holds in general ℓ^s-norms, it extends to the max-norm (ℓ^∞) by choosing s ≈ log d, where d is the dimension; the max-norm is frequently adopted in high-dimensional sparse and structured estimation. The team also characterizes the algorithmic complexity, that is, the number of iterations needed to reach a specified error level. Beyond average-case performance, the research delivers high-probability concentration bounds for high-dimensional ASGD with constant learning rates, a departure from existing theory, which has focused on decaying learning rates and low dimensions. Using a dependence-adjusted functional dependence measure, the authors develop a tight Fuk-Nagaev-type inequality that controls the number of iterations needed to reach a target accuracy with high confidence. This inequality complements the moment and complexity results for large-step stochastic optimization and deepens the theoretical understanding of constant learning-rate SGD and ASGD, which could inform the design of more efficient and robust large-scale machine learning methods.
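The reason s ≈ log d suffices is an elementary norm comparison: for any x in R^d, ‖x‖_∞ ≤ ‖x‖_s ≤ d^(1/s)‖x‖_∞, and with s = log d the factor d^(1/s) equals e. An ℓ^s bound with s = log d is therefore an ℓ^∞ bound up to a constant factor. The short check below (the function names are hypothetical) confirms this sandwich numerically for random Gaussian vectors.

```python
import math
import random


def lp_norm(x, s):
    """l_s norm (sum |x_i|^s)^(1/s), computed after scaling by max|x_i| to avoid overflow."""
    m = max(abs(v) for v in x)
    if m == 0.0:
        return 0.0
    return m * sum((abs(v) / m) ** s for v in x) ** (1.0 / s)


def max_norm(x):
    """l_infinity (max) norm."""
    return max(abs(v) for v in x)


def check_log_d_choice(d, seed=0):
    """Return (s, ||x||_s / ||x||_inf) with s = log(d) for a random Gaussian x in R^d.

    By the norm sandwich above, the ratio always lies in [1, e].
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(d)]
    s = math.log(d)
    return s, lp_norm(x, s) / max_norm(x)


if __name__ == "__main__":
    for d in (10, 1_000, 100_000):
        s, ratio = check_log_d_choice(d)
        print(f"d={d:>7}  s=log d={s:5.2f}  ||x||_s/||x||_inf={ratio:.3f}")
```

The ratio stays bounded by e however large d grows, which is why a moment bound that is explicit in s transfers to the max-norm at the cost of only a constant.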

Stationary Stochastic Gradient Descent in High Dimensions

This work advances the theoretical understanding of Stochastic Gradient Descent (SGD) and its averaged variant (ASGD) in high-dimensional learning, specifically under constant learning rates. The researchers establish the asymptotic stationarity of the SGD iterates, regardless of the initial conditions, by adapting coupling techniques from high-dimensional nonlinear time series analysis. This provides theoretical guarantees for fixed, large learning rates, a common practice in large-scale machine learning. The team derives a sharp high-dimensional moment inequality that gives explicit, non-asymptotic bounds on the q-th moment of the (A)SGD error for any q ≥ 2, in arbitrary ℓ^s-norms.
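As an illustration of what a q-th moment bound measures, the sketch below estimates (E‖θ_k − θ*‖_s^q)^(1/q) by Monte Carlo for the last constant-learning-rate SGD iterate and for its running average (ASGD). It assumes a toy least-squares model with Gaussian features; the dimension, learning rate, noise level, and helper names are hypothetical, and the paper's bounds are analytic rather than simulated.

```python
import random


def run_sgd_asgd(theta_star, lr, steps, rng, noise=0.5):
    """Constant-learning-rate SGD on the squared loss 0.5*(y - x.theta)^2.

    Returns the error vectors (estimate - theta_star) of the last SGD iterate
    and of the ASGD running average of theta_1, ..., theta_k.
    """
    d = len(theta_star)
    theta = [0.0] * d
    avg = [0.0] * d
    for k in range(1, steps + 1):
        x = [rng.gauss(0.0, 1.0) for _ in range(d)]
        y = sum(a * b for a, b in zip(x, theta_star)) + rng.gauss(0.0, noise)
        resid = y - sum(a * b for a, b in zip(x, theta))
        theta = [t + lr * resid * a for t, a in zip(theta, x)]
        # Incremental mean: avg_k = avg_{k-1} + (theta_k - avg_{k-1}) / k
        avg = [m + (t - m) / k for m, t in zip(avg, theta)]
    err_sgd = [t - ts for t, ts in zip(theta, theta_star)]
    err_asgd = [m - ts for m, ts in zip(avg, theta_star)]
    return err_sgd, err_asgd


def moment_estimate(errors, q, s):
    """Monte Carlo estimate of (E ||e||_s^q)^(1/q) over a list of error vectors."""
    vals = [sum(abs(v) ** s for v in e) ** (q / s) for e in errors]
    return (sum(vals) / len(vals)) ** (1.0 / q)


def compare(d=10, lr=0.05, steps=1000, reps=40, q=4, s=2.0, seed=1):
    """Return the estimated q-th moment (in the l_s norm) for SGD and for ASGD."""
    rng = random.Random(seed)
    theta_star = [1.0] * d              # hypothetical ground truth
    sgd_errs, asgd_errs = [], []
    for _ in range(reps):
        e_sgd, e_asgd = run_sgd_asgd(theta_star, lr, steps, rng)
        sgd_errs.append(e_sgd)
        asgd_errs.append(e_asgd)
    return moment_estimate(sgd_errs, q, s), moment_estimate(asgd_errs, q, s)


if __name__ == "__main__":
    m_sgd, m_asgd = compare()
    print(f"SGD  (E||e||_2^4)^(1/4) ~ {m_sgd:.3f}")
    print(f"ASGD (E||e||_2^4)^(1/4) ~ {m_asgd:.3f}")
```

With a constant learning rate the last iterate keeps fluctuating at a level set by the step size, while averaging damps these fluctuations, so the ASGD estimate is typically noticeably smaller. Passing `s=math.log(d)` (after `import math`) gives a proxy for the max-norm error.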

This generalized moment convergence describes the algorithms' behavior more completely than a mean-squared-error analysis, which corresponds to the special case q = 2 in the ℓ^2-norm. It also extends to the max-norm by choosing s of order log d, the logarithm of the dimension, which is important for modern sparse and structured estimation problems. Beyond average-case performance, the study delivers the first high-probability concentration bounds for ASGD in high-dimensional settings with constant learning rates. Through a tight Fuk-Nagaev-type inequality, the researchers bound the number of iterations k needed to guarantee a target error level with high confidence. This tail bound complements the moment and complexity characterizations of large-step stochastic optimization and marks a substantial step forward in the theoretical foundations of high-dimensional learning.
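The paper's inequality is stated for the dependent ASGD iterates, and its exact form is not reproduced here. For intuition about how a Fuk-Nagaev-type bound turns into an iteration count, the classical version for independent, mean-zero summands X_1, ..., X_k with E|X_i|^q = μ_q (q > 2) and E X_i² = σ² combines a polynomial term driven by the q-th moment with a Gaussian-type exponential term; C_1 and C_2 are constants depending only on q. Requiring each term to be at most δ/2 gives a number of iterations k that guarantees accuracy ε with probability at least 1 − δ:

```latex
% Classical Fuk--Nagaev inequality applied to the average of k independent summands
\mathbb{P}\Big(\Big|\tfrac{1}{k}\sum_{i=1}^{k} X_i\Big| \ge \varepsilon\Big)
  \le \frac{C_1\, k\, \mu_q}{(k\varepsilon)^q}
  + 2\exp\!\Big(-\frac{C_2\, k\,\varepsilon^2}{\sigma^2}\Big)

% Each term is at most \delta/2 as soon as
k \;\ge\; \max\left\{ \Big(\frac{2C_1\,\mu_q}{\delta\,\varepsilon^q}\Big)^{1/(q-1)},\;
  \frac{\sigma^2 \log(4/\delta)}{C_2\,\varepsilon^2} \right\}
\quad\Longrightarrow\quad
\mathbb{P}\Big(\Big|\tfrac{1}{k}\sum_{i=1}^{k} X_i\Big| \ge \varepsilon\Big) \le \delta
```

As δ → 0 the polynomial requirement, which grows like δ^(−1/(q−1)), eventually dominates the logarithmic one, so the moment order q governs the complexity at very high confidence levels.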

SGD Convergence and Stationarity in High Dimensions

This work advances the theoretical understanding of Stochastic Gradient Descent (SGD) and its averaged variant (ASGD) applied to high-dimensional learning problems. The researchers establish rigorous statistical guarantees for both algorithms with constant learning rates, a setting that is difficult to analyze in high dimensions. By adapting techniques from high-dimensional time series analysis, the team demonstrates the asymptotic stationarity of SGD: with a constant learning rate the iterates keep fluctuating rather than converging to a single point, but their distribution settles into a stationary regime, regardless of the starting point. The study also derives non-asymptotic bounds on the SGD and ASGD error in the norms commonly used in high-dimensional sparse models, quantifying how close the iterates get to the optimal solution after a given number of iterations. A key result is a high-probability tail bound for ASGD, which controls the probability that its error exceeds a given level. The researchers highlight possible extensions of the framework to more complex, non-convex optimization problems, offering insights into the stability and reliability of large-scale learning algorithms.

👉 More information

🗞 Statistical Guarantees for High-Dimensional Stochastic Gradient Descent

🧠 ArXiv: https://arxiv.org/abs/2510.12013