Creating realistic, dynamic hand-object interactions remains a major hurdle even for advanced computer graphics systems, which often rely on simplified object models and pre-programmed movements. Zisu Li, Hengye Lyu from HKUST (Guangzhou), Jiaxin Shi from XMax.AI Ltd., and colleagues address this challenge with SpriteHand, a framework that generates realistic videos of hands interacting with a diverse range of objects. The team’s autoregressive video generation system synthesizes these interactions in real time: it takes a static object image and a video of hand movements as input and produces output at approximately 18 frames per second. The approach moves beyond the limitations of traditional methods and offers a pathway to more natural and versatile interactions in virtual and augmented reality, robotics, and other applications that require realistic physical simulation.

Real-time Hand Interaction with Generated Virtual Objects

The researchers envision a future in which users view the world through a camera, whether on a head-mounted display or a mobile device. Their framework, SpriteHand, integrates virtual objects into the live scene and makes them respond dynamically to the user’s hand gestures in the captured environment. Modeling and synthesizing complex hand-object interactions has long been difficult, even for state-of-the-art physics engines, which often struggle with realistic contact, deformation, and dynamic responses. SpriteHand addresses these limitations by using deep learning to generate plausible hand-object interactions in real time, without relying on explicit physical simulation. The team aims to handle a wide range of object geometries, hand poses, and interaction dynamics, offering a more flexible and computationally efficient alternative to traditional methods and a route to more immersive, interactive augmented reality experiences.

Fast Autoregressive Diffusion for Video Generation

Recent advances in video generation have relied heavily on diffusion models, but achieving real-time performance remains a key challenge. Researchers are actively exploring new techniques to accelerate these models without sacrificing visual quality, including distillation methods and novel architectural designs. Attention mechanisms and transformer networks are increasingly employed to capture long-range dependencies in video sequences.
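To make the autoregressive idea in this section's heading concrete, the following is a minimal Python sketch of a streaming loop: each output frame is produced from previously generated frames plus the current control frame, so frames can be emitted one at a time as input arrives. Everything here is illustrative and assumed rather than taken from the paper: `stream_frames`, `toy_next_frame`, and `context_len` are hypothetical names, frames are plain lists of floats, and the bounded history window is an assumption, not a documented property of SpriteHand.

```python
from collections import deque
from typing import Callable, Iterable, Iterator

Frame = list[float]  # toy stand-in for an image; a real system would use tensors


def stream_frames(
    first_frame: Frame,
    control_frames: Iterable[Frame],
    next_frame_fn: Callable[[list[Frame], Frame], Frame],
    context_len: int = 4,  # assumed bounded history; not stated in the article
) -> Iterator[Frame]:
    """Yield one generated frame per incoming control frame."""
    history: deque[Frame] = deque([first_frame], maxlen=context_len)
    for control in control_frames:
        # The generator sees only past outputs and the current control frame,
        # never future frames, which is what allows streaming output.
        frame = next_frame_fn(list(history), control)
        history.append(frame)
        yield frame


def toy_next_frame(history: list[Frame], control: Frame) -> Frame:
    """Hypothetical stand-in for the model: blend last output with control."""
    last = history[-1]
    return [(a + b) / 2 for a, b in zip(last, control)]


if __name__ == "__main__":
    controls = [[1.0, 1.0], [1.0, 1.0]]
    for frame in stream_frames([0.0, 0.0], controls, toy_next_frame):
        print(frame)  # [0.5, 0.5] then [0.75, 0.75]
```

In a real system `next_frame_fn` would be the learned video model. The point of the sketch is only the data flow: outputs feed back in as context, and nothing depends on frames that have not yet been captured.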

Real-time Hand-Object Interaction Video Synthesis

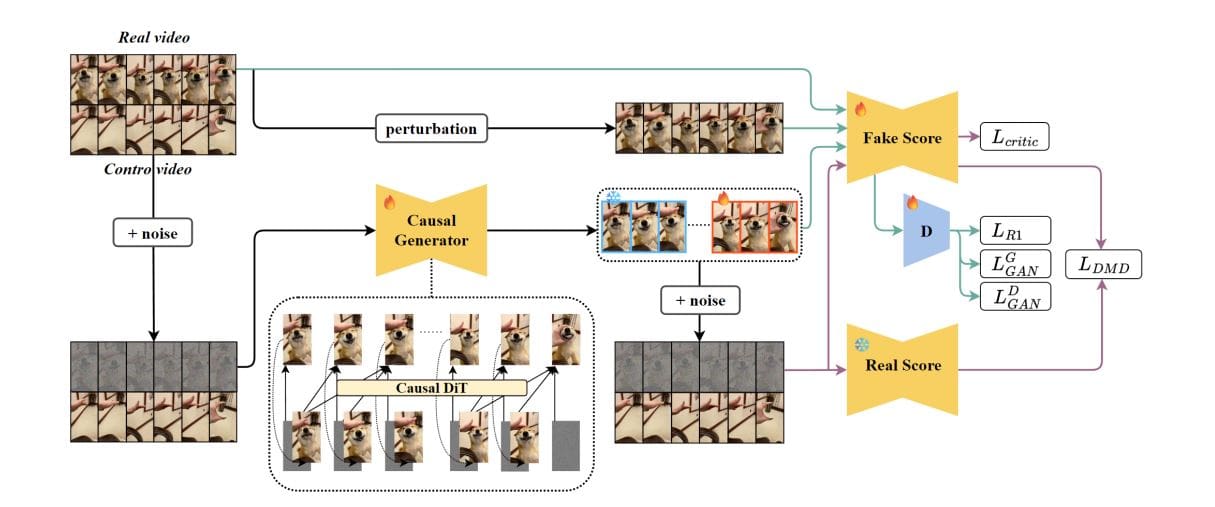

Researchers have developed SpriteHand, a framework that synthesizes realistic hand-object interaction videos in real time. The work targets the modeling of complex interactions between hands and objects, a task that has been difficult even for advanced physics engines. SpriteHand generates video at approximately 18 frames per second at a resolution of 640×368 pixels, with a latency of around 150 milliseconds on a single RTX 5090 GPU, and supports continuous output for more than one minute. At its core is a bidirectional diffusion transformer, initially trained on large-scale video datasets and then adapted for hand-object interaction. The model encodes two inputs, an initial frame containing the hand and object and a control video depicting the hand motion without the object, and synthesizes the corresponding interaction video. The researchers then converted this model into a causal variant that restricts attention to past frames, which enables real-time autoregressive synthesis while maintaining temporal consistency.
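Restricting attention to past frames is commonly expressed as a frame-level causal mask. The sketch below, in plain Python, builds such a mask under the assumption that each frame is represented by a fixed number of tokens and that tokens may attend to every token in their own frame and in earlier frames. The function name `frame_causal_mask` and both parameters are hypothetical; the article does not describe how SpriteHand implements its mask.

```python
def frame_causal_mask(num_frames: int, tokens_per_frame: int) -> list[list[bool]]:
    """Return mask[q][k] == True when query token q may attend to key token k.

    Tokens are laid out frame by frame. A query may see all tokens of its own
    frame and of earlier frames, but none from later frames.
    """
    total = num_frames * tokens_per_frame
    mask: list[list[bool]] = []
    for q in range(total):
        q_frame = q // tokens_per_frame  # frame index of the query token
        row = [(k // tokens_per_frame) <= q_frame for k in range(total)]
        mask.append(row)
    return mask


if __name__ == "__main__":
    # Print the mask for 3 frames of 2 tokens each: "x" = allowed, "." = blocked.
    for row in frame_causal_mask(num_frames=3, tokens_per_frame=2):
        print("".join("x" if allowed else "." for allowed in row))
```

A bidirectional model corresponds to a mask that is `True` everywhere. The causal variant zeroes out the upper-right blocks, so a frame can be generated before any later frame exists.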

👉 More information

🗞 SpriteHand: Real-Time Versatile Hand-Object Interaction with Autoregressive Video Generation

🧠 ArXiv: https://arxiv.org/abs/2512.01960