Researchers developed an Energy-Oriented Computing Architecture Simulator (EOCAS) to optimise hardware for Spiking Neural Networks (SNNs), a type of artificial intelligence inspired by the human brain. An implementation using TSMC-28nm technology demonstrated reduced energy consumption compared with existing Deep Neural Network and SNN architectures.

Neuromorphic computing, an approach to artificial intelligence inspired by the biological brain, holds considerable promise for energy-efficient machine learning. A key component of this field is the Spiking Neural Network (SNN), which processes information using discrete ‘spikes’ of electrical activity, mirroring neuronal communication. Despite the theoretical benefits of SNNs, a lack of systematic evaluation tools hinders hardware development and optimisation. Yunhao Ma, Wanyi Jia, Yanyu Lin, Wenjie Lin, Xueke Zhu, Huihui Zhou, and Fengwei An address this challenge in their paper, ‘Energy-Oriented Computing Architecture Simulator for SNN Training’. They present a simulator, EOCAS, designed to identify optimal hardware architectures for SNN training. They also demonstrate a low-energy implementation written in Verilog HDL and evaluated with TSMC-28nm technology, which compares favourably with existing Deep Neural Network and Spiking Neural Network designs.

Neuromorphic Computing Advances with Energy-Focused Hardware Design

Neuromorphic computing, inspired by the biological brain, is gaining traction as a potentially more energy-efficient alternative to conventional artificial neural networks (ANNs). Unlike ANNs, which process continuous values, neuromorphic systems employ spiking neural networks (SNNs) that communicate via discrete pulses, mimicking neuronal action potentials. Realising this potential, however, requires dedicated hardware and systematic evaluation methodologies, two areas where development currently lags.
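
To make the contrast with continuous-valued ANNs concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook spiking model. The article does not say which neuron model the paper uses, so the function name, the `threshold` and `leak` values, and the hard reset are all illustrative assumptions.

```python
def lif_simulate(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    inputs: continuous input current, one value per time step.
    Returns a list of 0/1 spikes, one per time step.
    All parameter values are illustrative, not taken from the paper.
    """
    membrane = 0.0
    spikes = []
    for current in inputs:
        # Leaky integration: the old potential decays, new input is added.
        membrane = leak * membrane + current
        if membrane >= threshold:
            spikes.append(1)   # emit a discrete spike
            membrane = 0.0     # hard reset after firing
        else:
            spikes.append(0)   # stay silent this step
    return spikes


spike_train = lif_simulate([0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.9, 0.2])
print(spike_train)  # [0, 0, 0, 1, 0, 0, 0, 1]
```

The output is a sequence of zeros and ones rather than a real-valued activation, and most entries are zero. That sparsity is what dedicated SNN hardware tries to exploit.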

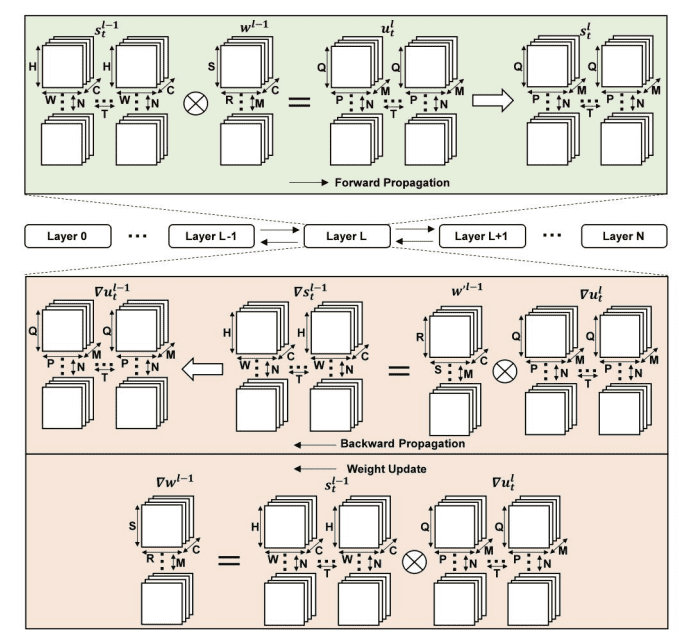

Researchers are addressing this gap by focusing on the inherent sparsity of spike signals (neurons do not fire constantly) and exploring diverse hardware design representations. They have developed the Energy-Oriented Computing Architecture Simulator (EOCAS), a tool designed to assess energy consumption patterns and identify optimal architectures for SNN training, moving beyond simply accelerating existing networks.
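
Why sparsity matters for energy can be shown with a rough first-order estimate. The sketch below is not EOCAS and does not reproduce its energy model. It only counts forward-pass synaptic operations for one fully connected layer, ignoring memory traffic and the backward pass needed for training. The per-operation energies (`e_mac_pj`, `e_ac_pj`) are arbitrary placeholder values, not TSMC-28nm figures.

```python
def dense_layer_energy_pj(n_in, n_out, e_mac_pj):
    """Energy of a dense ANN layer: one multiply-accumulate per weight."""
    return n_in * n_out * e_mac_pj


def spiking_layer_energy_pj(n_in, n_out, timesteps, spike_rate, e_ac_pj):
    """Energy of a spiking layer: one accumulate per weight per input spike.

    spike_rate is the assumed average probability that an input neuron
    fires at a given time step (lower means sparser activity).
    """
    expected_spikes = n_in * timesteps * spike_rate
    return expected_spikes * n_out * e_ac_pj


# Placeholder energies in picojoules, chosen only for illustration.
E_MAC_PJ = 4.0
E_AC_PJ = 1.0

dense = dense_layer_energy_pj(n_in=100, n_out=10, e_mac_pj=E_MAC_PJ)
for rate in (0.05, 0.1, 0.5):
    sparse = spiking_layer_energy_pj(100, 10, timesteps=4,
                                     spike_rate=rate, e_ac_pj=E_AC_PJ)
    print(f"spike rate {rate:.2f}: SNN/ANN energy ratio = {sparse / dense:.2f}")
```

Under these assumptions the spiking layer only wins when the firing rate is low enough to offset the extra time steps, which is one reason a systematic simulator is useful for comparing candidate architectures.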

EOCAS facilitates the design of hardware specifically tailored to the characteristics of spiking computation, a crucial step towards sustainable artificial intelligence systems. According to the authors, comparative analysis shows that the architecture guided by EOCAS outperforms both state-of-the-art deep neural network (DNN) accelerators and existing SNN hardware implementations across various performance metrics.

The team implemented their power-optimised architecture using Verilog HDL – a hardware description language – and validated its performance with the TSMC-28nm technology library. This resulted in significant energy savings compared to established DNN accelerators, such as Eyeriss, and other SNN hardware. This research highlights the benefits of exploiting sparsity and adopting a holistic hardware-algorithm co-design approach.

The methodology employed – combining simulation, hardware description, and industry-standard verification tools – provides a robust and replicable framework for future research. Researchers are investigating the impact of different sparsity levels and encoding schemes on energy consumption, and extending the simulator to model more complex neural network topologies, including those employed in large language models, to address emerging computational demands.
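
To show how the choice of encoding scheme changes spike counts, and so the activity that hardware must process, the sketch below compares two generic schemes: Bernoulli rate coding and single-spike latency coding. These are textbook examples picked for illustration. The article does not state which encodings the researchers are studying, and the function names and parameters here are hypothetical.

```python
import random


def rate_encode(intensity, timesteps, rng):
    """Bernoulli rate coding: spike at each step with probability `intensity`."""
    return [1 if rng.random() < intensity else 0 for _ in range(timesteps)]


def latency_encode(intensity, timesteps):
    """Latency coding: exactly one spike, earlier for stronger inputs."""
    clamped = min(max(intensity, 0.0), 1.0)
    spike_time = int(round((1.0 - clamped) * (timesteps - 1)))
    return [1 if t == spike_time else 0 for t in range(timesteps)]


rng = random.Random(0)  # fixed seed so the run is repeatable
pixel = 0.75            # normalised input intensity in [0, 1]
timesteps = 16

rate_spikes = rate_encode(pixel, timesteps, rng)
latency_spikes = latency_encode(pixel, timesteps)

print("rate-coded spike count:   ", sum(rate_spikes))
print("latency-coded spike count:", sum(latency_spikes))
```

Rate coding produces roughly `intensity * timesteps` spikes per input, while latency coding always produces one, so the two schemes present very different sparsity levels to the same hardware.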

Further work will focus on practical validation through fabrication and testing of the designed hardware, confirming the simulation results and identifying any discrepancies between modelled and real-world performance. The potential for dynamic reconfiguration of the hardware architecture to adapt to varying workloads is also being explored, with the aim of unlocking further energy savings and enhancing overall system efficiency.

👉 More information

🗞 Energy-Oriented Computing Architecture Simulator for SNN Training

🧠 DOI: https://doi.org/10.48550/arXiv.2505.24137