Spiking Neural Networks (SNNs) represent a fundamentally new approach to artificial intelligence, offering the potential for dramatically improved power efficiency and real-time processing, particularly for applications at the edge and within the Internet of Things. However, realizing this potential requires overcoming significant challenges in designing optimal network architectures, a task complicated by the interplay between software models and underlying hardware constraints. Kama Svoboda and Tosiron Adegbija, both from The University of Arizona, lead a comprehensive survey of the emerging field of Spiking Neural Network architecture search, or SNNaS, examining current methods and highlighting the crucial role of co-designing hardware and software. The survey gives an overview of the field, charts a course for future research, and argues that a holistic approach can unlock the full capabilities of these biologically inspired networks.

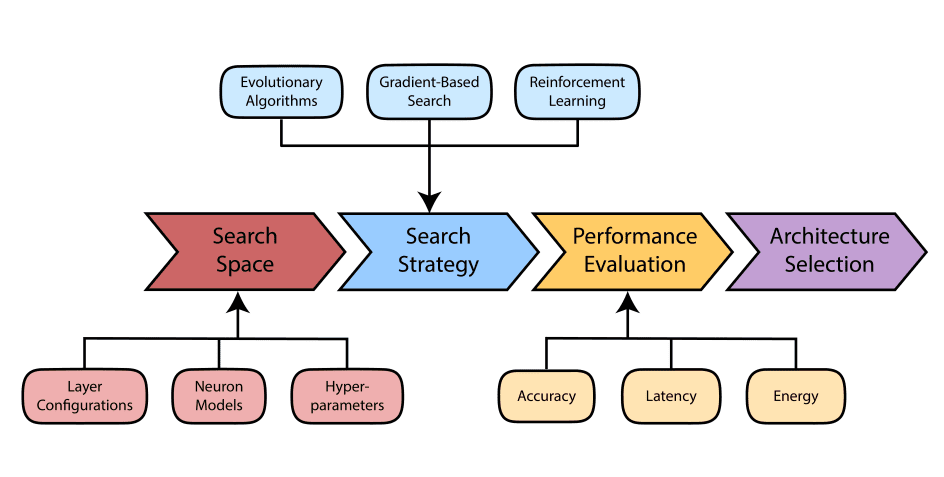

Researchers are developing a diverse range of algorithms for discovering well-performing network architectures across tasks. The main families of neural architecture search (NAS) methods are Reinforcement Learning, Evolutionary Algorithms, and Gradient-Based NAS. These are complemented by One-Shot NAS, which trains a single large “supernet” and searches among its sub-networks, and Zero-Shot NAS, which predicts network performance without training. Researchers also investigate Bandit-Based NAS, which uses bandit algorithms to explore candidate architectures efficiently.

Beyond these core methods, researchers are exploring approaches such as NAS without labels, which leverages unlabeled data, and curriculum learning, which gradually increases the difficulty of the search. Encouraging diversity among candidate architectures and using block-wise NAS, where optimal building blocks are identified and then composed, further expand the toolkit. Because NAS is computationally expensive, researchers are also developing methods for estimating network performance without full training, often by using proxy tasks or by predicting network weights.

Recognizing the potential of SNNs for efficient, real-time processing in edge and IoT applications, the survey sets out their operational principles and their key distinctions from traditional artificial neural networks. The core of the study is a systematic exploration of SNNaS methodologies, covering the design of search spaces, the implementation of optimization algorithms, and the development of acceleration strategies.
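To make the three ingredients named above (search space, optimization algorithm, performance estimation) concrete, here is a minimal sketch of an evolutionary search in Python. Everything in it is an illustrative assumption rather than a method from the survey: the `SEARCH_SPACE` options, the function names, and especially `proxy_score`, which is a toy stand-in for a real performance estimator and does not model actual SNN accuracy.

```python
import random

# Hypothetical search space: each key is a design choice, each list its options.
SEARCH_SPACE = {
    "num_layers": [2, 3, 4, 5],
    "width": [32, 64, 128],
    "time_steps": [4, 8, 16],
    "neuron": ["lif", "izhikevich"],
}


def sample(rng):
    """Draw one random architecture from the search space."""
    return {key: rng.choice(options) for key, options in SEARCH_SPACE.items()}


def mutate(arch, rng):
    """Copy an architecture and re-draw one randomly chosen design choice."""
    child = dict(arch)
    key = rng.choice(list(SEARCH_SPACE))
    child[key] = rng.choice(SEARCH_SPACE[key])
    return child


def proxy_score(arch):
    """Toy stand-in for a performance estimator (NOT a real accuracy model).

    Rewards capacity and penalizes a rough count of operations over time,
    loosely mimicking an accuracy-versus-cost trade-off.
    """
    capacity = arch["num_layers"] * arch["width"]
    ops = capacity * arch["time_steps"]
    return capacity ** 0.5 - 0.002 * ops


def evolve(generations=20, population_size=8, seed=0):
    """Keep the better half each generation and refill with mutated copies."""
    rng = random.Random(seed)
    population = [sample(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=proxy_score, reverse=True)
        parents = population[: population_size // 2]
        children = [
            mutate(rng.choice(parents), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=proxy_score)


if __name__ == "__main__":
    best = evolve()
    print(best, proxy_score(best))
```

Because the better half of the population always survives, the best score never decreases from one generation to the next. In a real SNNaS pipeline the proxy would be replaced by training, a supernet evaluation, or a zero-shot estimator.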

The survey pays close attention to performance estimation techniques, with particular emphasis on integrating hardware constraints into the search process. It examines several neuron models, including the Leaky Integrate-and-Fire (LIF) model, the Hodgkin-Huxley model, and the Izhikevich model, each offering a different trade-off between computational efficiency and biological plausibility. It also covers spike encoding schemes, which have a significant impact on architecture performance: rate-based encoding, and temporal encoding methods such as time-to-first-spike and phase coding. Because SNNs are inspired by biological neurons, they offer advantages in power efficiency and real-time processing that suit edge and IoT applications. However, designing optimal SNN architectures is difficult because of their complexity and the interplay between hardware limitations and network models. The work surveys the state of the art in SNN-specific NAS approaches and draws out directions for future research.
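As a rough illustration of the LIF model and of the difference between rate-based and time-to-first-spike encoding, the following Python sketch simulates a single discrete-time LIF neuron. The parameter names and values (`weight`, `beta`, `threshold`), the Bernoulli form of rate coding, the linear value-to-latency mapping, and the reset-to-zero rule are common simplifications assumed here, not details taken from the survey.

```python
import random


def rate_encode(value, num_steps, rng):
    """Rate coding (Bernoulli variant): spike with probability `value` per step.

    `value` is assumed to be normalized to the range 0..1.
    """
    return [1 if rng.random() < value else 0 for _ in range(num_steps)]


def ttfs_encode(value, num_steps):
    """Time-to-first-spike coding: one spike, earlier for larger values.

    A value of 0 produces no spike at all.
    """
    spikes = [0] * num_steps
    if value > 0:
        spikes[round((1.0 - value) * (num_steps - 1))] = 1
    return spikes


def lif_run(input_spikes, weight=0.5, beta=0.9, threshold=1.0):
    """Discrete-time LIF neuron.

    The membrane potential leaks by factor `beta` each step, integrates
    `weight` per input spike, and resets to 0 after emitting a spike.
    """
    v = 0.0
    output = []
    for s in input_spikes:
        v = beta * v + weight * s
        if v >= threshold:
            output.append(1)
            v = 0.0
        else:
            output.append(0)
    return output


if __name__ == "__main__":
    rng = random.Random(0)
    print(lif_run(rate_encode(0.8, 20, rng)))  # many input spikes
    print(lif_run(ttfs_encode(0.8, 20)))       # a single, early input spike
```

The two encodings drive the same neuron very differently: rate coding delivers many spikes over the time window, while time-to-first-spike delivers exactly one, which is one reason the choice of encoding interacts with architecture choices such as the number of time steps.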

The team highlights that the gradient-based optimization methods commonly used for artificial neural networks cannot be applied directly to SNNs, because the spike function is discrete and therefore non-differentiable. Researchers are also developing performance metrics beyond standard accuracy, including spike timing precision, firing rates, and temporal dynamics, which are needed to evaluate spiking behavior. The work details how NAS methods must account for neuromorphic hardware constraints, such as limited precision and asynchronous computation, to produce architectures compatible with these platforms, and how they draw on search spaces ranging from global searches to sequential designs and cell-based approaches. The survey covers hardware-aware design strategies, explicit consideration of temporal dynamics, and techniques such as surrogate gradients and Bayesian optimization.

Current SNNaS methods often prioritize finding a single, universal architecture rather than tailoring designs to specific hardware platforms. Closing this gap is crucial for scaling spiking neural networks to real-world applications. The authors call for future research to prioritize device-targeted solutions and to explore co-optimization strategies in order to realize the full potential of neuromorphic computing.
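The surrogate-gradient idea can be sketched in a few lines of Python: the forward pass keeps the hard threshold, while the backward pass substitutes a smooth approximation of its derivative. The fast-sigmoid surrogate used here is one common choice and is an assumption of this sketch, as are the function names, the `slope` parameter, and the single-weight squared-error setup.

```python
def spike_forward(v, threshold=1.0):
    """Hard threshold used in the forward pass.

    Its true derivative is zero everywhere except at the threshold.
    """
    return 1.0 if v >= threshold else 0.0


def spike_surrogate_grad(v, threshold=1.0, slope=5.0):
    """Fast-sigmoid surrogate derivative: 1 / (1 + slope * |v - threshold|)^2.

    Used only in the backward pass in place of the true derivative.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


def train_step(w, x, target, lr=1.0):
    """One gradient step for a single-weight neuron with loss 0.5 * (spike - target)^2."""
    v = w * x
    spike = spike_forward(v)
    # Chain rule, with the surrogate standing in for d(spike)/d(v).
    grad_w = (spike - target) * spike_surrogate_grad(v) * x
    return w - lr * grad_w


if __name__ == "__main__":
    w = 0.5
    for _ in range(5):
        w = train_step(w, x=1.0, target=1.0)
    print(w)
```

With the true derivative the weight would never move, since the gradient is zero whenever the neuron is below threshold. The surrogate gives a nonzero signal that pushes the weight toward producing the target spike.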

👉 More information

🗞 Spiking Neural Network Architecture Search: A Survey

🧠 ArXiv: https://arxiv.org/abs/2510.14235