Convolutional neural networks (CNNs), the engines behind many modern image-processing applications, are complex systems that still lack a unifying mechanical explanation. Liam Frija-Altrac and Matthew Toews of École de Technologie Supérieure, Montreal, address this gap with a framework that interprets convolutional filtering through classical mechanics, drawing on the concepts of energy and momentum from special relativity. The team decomposes convolutional kernels into components that behave like potential and kinetic energy. They show how image content diffuses and shifts as it passes through the network, and they relate the speed of this shift directly to how energy is distributed within the filter. The authors describe this as the first work to connect the inner workings of generic CNNs to the energy and momentum principles of physics.

The authors consider kernels decomposed into orthogonal even and odd components. Even components cause image content to diffuse isotropically while preserving the center of mass, analogous to rest or potential energy with zero net momentum. Odd components cause directional displacement of the center of mass, mirroring kinetic energy with non-zero momentum. The speed of information displacement is linearly related to the ratio of odd to total kernel energy. Even and odd properties are analysed in the spectral domain via the discrete cosine transform (DCT). This analysis shows that small convolutional filters are dominated by low-frequency bases, namely the DC (constant) component and gradients.
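The even/odd split can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code:

- The helper names (`even_odd_split`, `energy`, `odd_energy_ratio`) are hypothetical.
- Kernels are plain 1-D lists.
- The reflection k[n] → k[−n] is taken about the kernel's centre. For 2-D kernels the same idea uses a 180° rotation.

Because the two parts are orthogonal, their energies add up to the kernel's total energy.

```python
def even_odd_split(kernel):
    """Split a 1-D kernel into even (symmetric) and odd (antisymmetric) parts
    by reflecting it about its centre tap (or centre point, for even lengths)."""
    reflected = kernel[::-1]
    even = [(a + b) / 2 for a, b in zip(kernel, reflected)]
    odd = [(a - b) / 2 for a, b in zip(kernel, reflected)]
    return even, odd


def energy(v):
    """Kernel energy as the sum of squared weights (squared L2 norm)."""
    return sum(x * x for x in v)


def odd_energy_ratio(kernel):
    """Fraction of the kernel's energy carried by its odd part (0 = pure even, 1 = pure odd)."""
    even, odd = even_odd_split(kernel)
    return energy(odd) / (energy(even) + energy(odd))


k = [0.25, 1.0, 0.75]  # hypothetical example kernel
even, odd = even_odd_split(k)
print(even)  # [0.5, 1.0, 0.5]
print(odd)   # [-0.25, 0.0, 0.25]
print(energy(k), energy(even) + energy(odd))  # 1.625 1.625
print(odd_energy_ratio(k))
```

A pure box filter such as [1, 1, 1] gives a ratio of 0, and a pure central difference [-1, 0, 1] gives 1. Mixtures fall in between.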

Convolutional Kernels and Activation Function Effects

The experiments investigate how combinations of convolutional kernels and activation functions shape a signal under repeated convolution and activation. The focus is on how these choices affect the diffusion, velocity, and center-of-mass movement of a test pattern. The research uses DC kernels, which have constant weights, and Gradient kernels, which respond to directional changes. Each is combined with ReLU and Modulus (absolute value) activation functions. The key control parameter is the mixing ratio (β), which weights the DC and Gradient kernels. The measured quantities are σx (diffusion) and μx (center of mass), tracked as a function of the time step t, i.e. the number of convolution layers applied.
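A minimal 1-D sketch of this experimental loop follows. It rests on several assumptions not taken from the paper:

- a 3-tap DC kernel [1, 1, 1] and a 3-tap gradient kernel [-1, 0, 1];
- a hypothetical mixing rule (1 − β)·DC + β·Gradient;
- a unit impulse as the test pattern;
- zero padding.

The names `mixed_kernel`, `run`, and the other helpers are illustrative. The sketch tracks μx and σx against the layer index t for ReLU and Modulus. The paper works with 2×2 and 3×3 kernels in 2-D, so numbers from this simplified setup need not match its results.

```python
import math


def correlate_same(signal, kernel):
    """Zero-padded 1-D cross-correlation (what a conv layer computes); output has the input's length."""
    r = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - r
            if 0 <= idx < n:
                acc += w * signal[idx]
        out.append(acc)
    return out


ACTIVATIONS = {
    "relu": lambda v: max(v, 0.0),
    "modulus": abs,  # |x|
}


def mixed_kernel(beta):
    """Hypothetical parameterization: (1 - beta) * DC + beta * Gradient, 3 taps."""
    dc = [1.0, 1.0, 1.0]
    grad = [-1.0, 0.0, 1.0]
    return [(1 - beta) * d + beta * g for d, g in zip(dc, grad)]


def moments(signal):
    """Centre of mass mu_x and spread sigma_x, treating a non-negative signal as a mass distribution."""
    mass = sum(signal)
    mu = sum(x * v for x, v in enumerate(signal)) / mass
    var = sum((x - mu) ** 2 * v for x, v in enumerate(signal)) / mass
    return mu, math.sqrt(var)


def run(beta, activation="relu", steps=40, length=201):
    """Apply conv + activation `steps` times to a centred impulse; return (t, mu_x, sigma_x) per step."""
    kernel = mixed_kernel(beta)
    act = ACTIVATIONS[activation]
    x = [0.0] * length
    x[length // 2] = 1.0
    history = []
    for t in range(1, steps + 1):
        x = [act(v) for v in correlate_same(x, kernel)]
        total = sum(x)
        if total == 0.0:
            break  # the signal was rectified away entirely
        x = [v / total for v in x]  # rescale only; mu_x and sigma_x are scale-invariant
        history.append((t, *moments(x)))
    return history


if __name__ == "__main__":
    length = 201
    start = length // 2
    for beta in (0.0, 0.25, 0.5, 0.75, 1.0):
        for name in ("relu", "modulus"):
            t, mu, sigma = run(beta, name, steps=40, length=length)[-1]
            drift = (mu - start) / t  # signed px per layer; sign follows the correlation convention
            print(f"beta={beta:.2f} {name:<8} drift={drift:+.3f} px/layer sigma_x={sigma:.2f}")
```

Two edge cases are easy to check by hand:

- With β = 0 the kernel is a pure box filter, so μx stays put and σx² grows by 2/3 per layer.
- With β = 1 and ReLU, the impulse moves exactly one pixel per layer toward lower indices without spreading.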

The results show that DC kernels promote diffusion and stability, while Gradient kernels induce directional movement. The mixing ratio β controls the speed of movement, and the Modulus activation moves the center of mass faster than ReLU. The 3×3 kernels produce more pronounced diffusion and movement than 2×2 kernels. Together, these experiments show how the choice of convolutional kernels and activation functions governs diffusion, velocity, and displacement within a neural network, which is central to understanding how CNNs process data.

Kernels as Energy and Momentum Operators

This work connects elementary mechanics with convolutional filtering in neural networks, taking inspiration from special relativity. The researchers show that kernels can be understood in terms of energy and momentum, two fundamental quantities in physics. They decompose kernels into orthogonal even and odd components. Even components induce isotropic diffusion, analogous to potential energy, while odd components cause directional displacement, analogous to kinetic energy. Experiments with one-dimensional filters, specifically sum and gradient operators, reveal distinct transformations after convolution and ReLU activation.

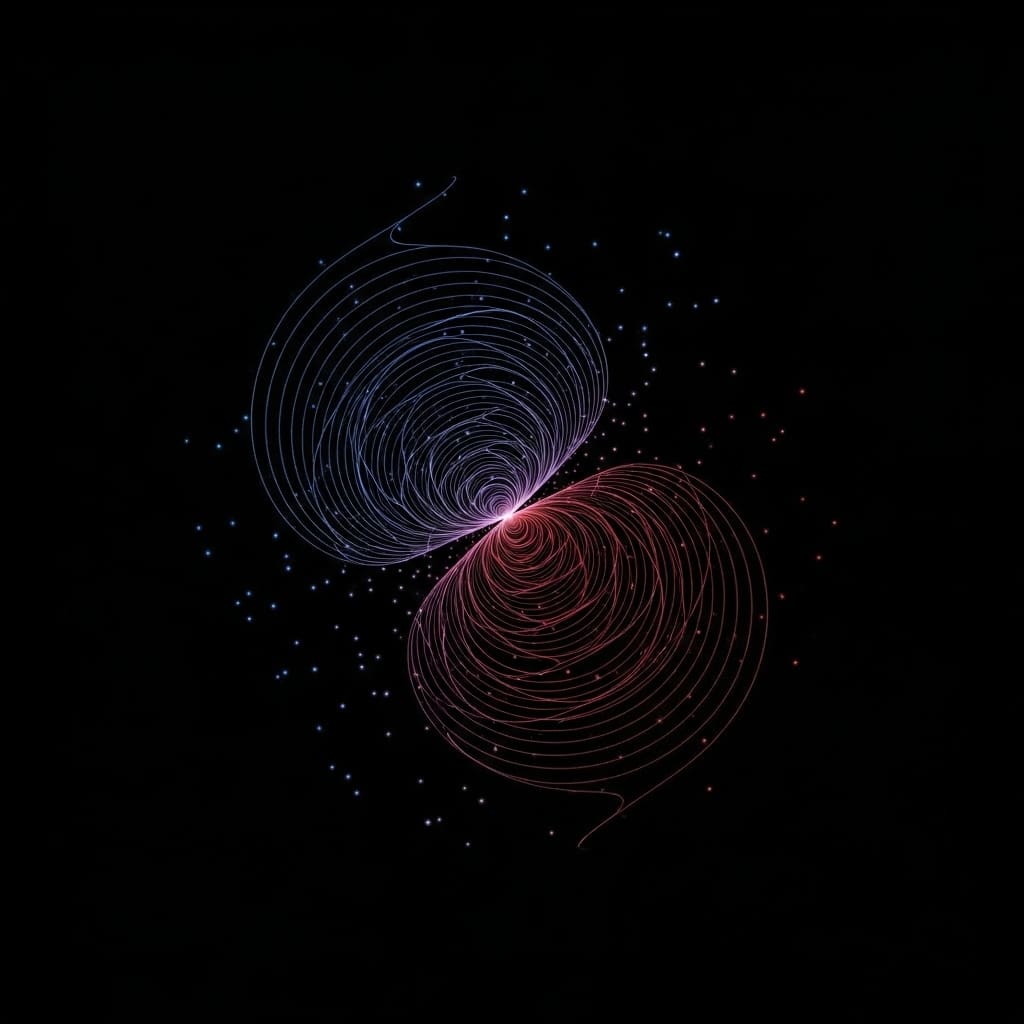

After normalizing activations to unit length, the team found that under repeated sum filtering the signal approached a normal (Gaussian) profile with standard deviation σ = √t after t layers, confirming that the process is approximately Gaussian diffusion. Applying gradient operators instead induced directional shifting, with information propagating at a constant speed of 0.5 pixels per layer. The team further showed that any filter can be decomposed into orthogonal even and odd components. This allows filters to be visualized in a 3D space and provides a new framework for analyzing convolutional filter behavior.
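The diffusion measurement can be reproduced in a simplified form. This sketch assumes the two-tap sum kernel [1, 1] applied to a single impulse in 1-D, with full (growing) convolution and the unit-length normalization described above. The helper names are hypothetical.

For this kernel the profile after t layers is exactly binomial with variance t/4, so σ grows as √t with a constant of 1/2. The constant depends on the kernel and on how position is measured. The sketch therefore checks the √t scaling and the approach to a Gaussian shape, rather than the paper's exact σ.

```python
import math


def conv_full(signal, kernel):
    """Full 1-D convolution; the output grows by len(kernel) - 1 samples per layer."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, w in enumerate(kernel):
            out[i + j] += s * w
    return out


def unit_length(v):
    """Rescale to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def diffuse(t, kernel=(1.0, 1.0)):
    """Apply t layers of sum filtering + ReLU to an impulse (ReLU is a no-op here: all values stay >= 0)."""
    x = [1.0]
    for _ in range(t):
        x = unit_length([max(v, 0.0) for v in conv_full(x, kernel)])
    return x


def sigma(profile):
    """Standard deviation of a non-negative profile treated as a mass distribution."""
    mass = sum(profile)
    mu = sum(i * p for i, p in enumerate(profile)) / mass
    return math.sqrt(sum((i - mu) ** 2 * p for i, p in enumerate(profile)) / mass)


def gaussian_gap(profile):
    """Largest pointwise gap between the mass-normalized profile and a Gaussian with matching mean and sigma."""
    mass = sum(profile)
    p = [v / mass for v in profile]
    mu = sum(i * q for i, q in enumerate(p))
    s = sigma(profile)
    return max(
        abs(q - math.exp(-((i - mu) ** 2) / (2 * s * s)) / (s * math.sqrt(2 * math.pi)))
        for i, q in enumerate(p)
    )


for t in (4, 16, 64):
    x = diffuse(t)
    print(t, sigma(x), sigma(x) / math.sqrt(t), gaussian_gap(x))
```

The ratio σ/√t stays fixed as t grows, while the gap to the fitted Gaussian shrinks. This is the behaviour the central limit theorem predicts for repeated averaging.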

Convolutional Networks Mimic Classical Mechanics

This research establishes a framework, termed elementary information mechanics, for understanding how convolutional neural networks process information. The team shows that the action of convolutional filters can be modelled with principles from classical mechanics, specifically energy and momentum. Decomposing filters into even and odd components reveals that even components cause isotropic diffusion while odd components induce directional displacement, mirroring the behaviour of potential and kinetic energy. The study highlights two findings. First, the speed of this displacement is directly proportional to the ratio of odd to total kernel energy. Second, ReLU activation produces behaviour closely resembling a Lorentz transform from relativistic physics.
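One way to read the energy-momentum analogy is given below. It is offered as an interpretation, not as the paper's own derivation. The even and odd parts of a kernel are orthogonal, so the kernel's energy splits into two squared terms. The relativistic energy-momentum relation splits E² in the same way, into a rest-mass term and a momentum term. The reflection k[−n] about the kernel's centre and the symbol ρ for the odd-energy ratio are notation introduced here.

$$
k = k_e + k_o, \qquad k_e[n] = \tfrac{1}{2}\bigl(k[n] + k[-n]\bigr), \qquad k_o[n] = \tfrac{1}{2}\bigl(k[n] - k[-n]\bigr)
$$

$$
\langle k_e, k_o \rangle = \sum_n k_e[n]\,k_o[n] = 0
\quad\Longrightarrow\quad
\|k\|^2 = \|k_e\|^2 + \|k_o\|^2
\qquad\text{cf.}\qquad
E^2 = (mc^2)^2 + (pc)^2
$$

$$
v \;\propto\; \rho = \frac{\|k_o\|^2}{\|k_e\|^2 + \|k_o\|^2}
$$

In this reading the even energy plays the role of the rest-mass term and the odd energy the role of the momentum term. The last line restates the summary's claim that displacement speed is proportional to the odd-energy ratio.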

Analysis with the discrete cosine transform shows that these fundamental modes of propagation correspond to the low-frequency components that dominate small filters, a pattern consistently observed in trained CNNs. The authors present this as the first demonstration of a link between information processing in CNNs and the energy-momentum relation, a key concept in modern physics. They acknowledge that their analysis focuses primarily on small 3×3 filters, and that higher-order frequency components could represent additional mechanical aspects in larger filters. Future research will apply the framework to other areas of deep learning, including optimization, initialization, and domain adaptation, and will extend it to architectures beyond CNNs. The team suggests that the approach may ultimately link the relationship between energy and mass in the physical world to information propagation within neural networks.
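The DCT view of a small filter can be sketched with an explicit orthonormal DCT-II. The helper names are hypothetical. In practice a library routine such as SciPy's `scipy.fft.dctn(kernel, norm="ortho")` would do the same job.

For a 3-tap basis, the rows are proportional to:

- [1, 1, 1], the DC term (even);
- [1, 0, −1], a gradient (odd);
- [1, −2, 1], a second difference (even).

More generally, reflecting a kernel multiplies DCT-II coefficient u by (−1)^u, or by (−1)^(u+v) in 2-D under a 180° rotation. The even/odd split therefore separates even- from odd-indexed coefficients. The "low-frequency fraction" below, which counts the DC and the two first-order coefficients, is an illustrative measure chosen here rather than the paper's metric.

```python
import math


def dct_basis(n):
    """Orthonormal DCT-II basis: row u is the length-n cosine of frequency u."""
    rows = []
    for u in range(n):
        scale = math.sqrt((1.0 if u == 0 else 2.0) / n)
        rows.append([scale * math.cos(math.pi * (2 * x + 1) * u / (2 * n)) for x in range(n)])
    return rows


def dct2(kernel):
    """2-D orthonormal DCT-II of a square kernel: C[u][v] = sum over y, x of b_u[y] * b_v[x] * K[y][x]."""
    n = len(kernel)
    b = dct_basis(n)
    return [
        [sum(b[u][y] * b[v][x] * kernel[y][x] for y in range(n) for x in range(n)) for v in range(n)]
        for u in range(n)
    ]


def low_frequency_fraction(kernel):
    """Share of energy in the DC coefficient and the two first-order (gradient-like) coefficients."""
    c = dct2(kernel)
    total = sum(val * val for row in c for val in row)
    return (c[0][0] ** 2 + c[0][1] ** 2 + c[1][0] ** 2) / total


# Rows are proportional to [1, 1, 1], [1, 0, -1] and [1, -2, 1].
for row in dct_basis(3):
    print([round(v, 3) for v in row])

sobel_x = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # a familiar hand-designed gradient filter
print(low_frequency_fraction(sobel_x))  # ≈ 0.889 (8/9), almost all of it in c[0][1]
```

Because the basis is orthonormal, the DCT coefficients carry exactly the kernel's energy (Parseval). This lets the fraction be read directly as a share of filter energy.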

👉 More information

🗞 The Mechanics of CNN Filtering with Rectification

🧠 arXiv: https://arxiv.org/abs/2512.24338