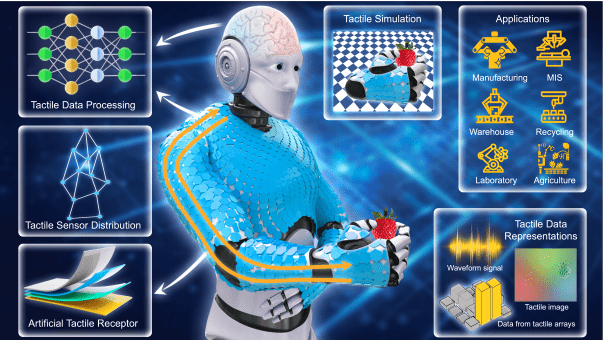

Giving robots a sense of touch, akin to human perception, remains a key challenge in robotics research, yet is crucial for safe and effective interaction with the world around us. Shan Luo, Nathan F. Lepora, and Wenzhen Yuan, all IEEE members, lead a comprehensive outlook on the current state of tactile robotics, identifying key hurdles and potential breakthroughs in this rapidly evolving field. The team, alongside Kaspar Althoefer, Gordon Cheng, and Ravinder Dahiya, demonstrate how advances in tactile sensing, utilising technologies from piezoresistive sensors to optical systems, are now being supported by powerful simulation tools and integrated with other sensing modalities like vision. This holistic approach, they argue, is essential to unlock the transformative potential of tactile robotics across diverse sectors, including manufacturing, healthcare, recycling, and agriculture, paving the way for robots that can truly ‘feel’ their environment.

Tactile Sensing Foundations and Emerging Trends

This overview spans tactile sensing and active touch, from fundamental principles to cutting-edge applications. The research explores how robots can “feel” their environment, mirroring the human sense of touch. Core themes include sensor technologies, such as those responding to pressure, vibration, and light, and signal processing techniques for interpreting raw data. Biomimicry, inspired by the structure and function of human skin and mechanoreceptors, plays a significant role in sensor design. Active touch concerns how robots explore objects: they employ exploratory procedures such as lateral motion and in-hand manipulation, and use active inference to reduce uncertainty about their surroundings.
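The idea of choosing a touch so as to reduce uncertainty can be illustrated with a toy Bayesian sketch. Everything here is an assumption made for illustration: the two objects, the two exploratory actions, and the likelihood values are hypothetical, and the greedy "pick the action with the lowest expected entropy" rule is a simplified stand-in for active inference, not the method of the paper.

```python
import math

# Hypothetical P(observation == "rough" | action, object).
# Names and numbers are illustrative assumptions, not taken from the paper.
LIKELIHOOD = {
    "press":  {"sponge": 0.5, "brick": 0.5},  # pressing says little about roughness
    "stroke": {"sponge": 0.2, "brick": 0.9},  # lateral motion is informative
}


def likelihood(action, obj, observed_rough):
    """Probability of the observation given the action and the object."""
    p_rough = LIKELIHOOD[action][obj]
    return p_rough if observed_rough else 1.0 - p_rough


def entropy(belief):
    """Shannon entropy (bits) of a belief over object identities."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)


def update(belief, action, observed_rough):
    """Bayes update of the belief after one tactile observation."""
    unnormalised = {
        obj: prior * likelihood(action, obj, observed_rough)
        for obj, prior in belief.items()
    }
    total = sum(unnormalised.values())
    return {obj: p / total for obj, p in unnormalised.items()}


def expected_entropy(belief, action):
    """Entropy expected after taking `action`, averaged over both outcomes."""
    result = 0.0
    for observed_rough in (True, False):
        p_obs = sum(
            prior * likelihood(action, obj, observed_rough)
            for obj, prior in belief.items()
        )
        if p_obs > 0:
            result += p_obs * entropy(update(belief, action, observed_rough))
    return result


def choose_action(belief):
    """Greedy choice: the action expected to leave the least uncertainty."""
    return min(LIKELIHOOD, key=lambda action: expected_entropy(belief, action))


belief = {"sponge": 0.5, "brick": 0.5}
action = choose_action(belief)
belief = update(belief, action, observed_rough=True)
```

With these made-up numbers the sketch prefers stroking over pressing, because pressing yields the same observation probabilities for both objects and so cannot change the belief.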

Perception and object recognition are key areas of development, enabling robots to identify shapes, textures, and materials through touch. This capability extends to manipulation and grasping, improving grasp stability, detecting slip, and controlling force application. Applications are diverse, ranging from industrial automation and healthcare to agriculture and human-robot interaction. Current trends highlight the integration of deep learning with tactile data and other sensory inputs, such as vision and audio, to create a more complete understanding of the environment. Researchers are also drawing inspiration from biology to design more sophisticated sensors and algorithms.
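As a rough illustration of the slip detection and force control mentioned above, the sketch below uses the textbook Coulomb friction model: contact is predicted to slip once the tangential force exceeds the friction coefficient times the normal force. This is a deliberately simple stand-in; real tactile systems often infer slip from vibration or shear patterns instead, and the function names, friction coefficient, and safety factor are all hypothetical.

```python
def slip_margin(normal_force, tangential_force, friction_coeff):
    """Return mu * F_n - |F_t| in newtons.

    A negative value means the Coulomb friction model predicts slip.
    """
    return friction_coeff * normal_force - abs(tangential_force)


def required_grip_force(tangential_force, friction_coeff, safety_factor=1.5):
    """Normal force needed to hold a given tangential load.

    The safety factor (an assumed value) keeps the grasp away from the
    slip boundary rather than exactly on it.
    """
    return safety_factor * abs(tangential_force) / friction_coeff


# Example: a 1 N tangential load on a surface with an assumed mu of 0.5.
current_margin = slip_margin(normal_force=1.0, tangential_force=1.0, friction_coeff=0.5)
if current_margin < 0:
    target_force = required_grip_force(tangential_force=1.0, friction_coeff=0.5)
```

In a controller, the margin would be recomputed from each new tactile reading and the grip force raised towards the target whenever the margin shrinks.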

Active inference and embodied intelligence offer promising frameworks for building robots that actively explore and learn from their experiences. Advances in haptic rendering are crucial for immersive virtual and augmented reality experiences. Combining tactile sensing with soft robotics allows for more delicate and adaptable manipulation, while integrating it with other sensors creates robust and versatile sensing systems. Notable researchers are leading the way in biomimetic tactile sensing, exploration strategies, and theoretical frameworks for embodied intelligence.

Simulating Touch for Robotic Tactile Sensing

Researchers are actively developing robotic touch, or tactile sensing, to enable robots to interact more effectively with the world and with humans. This field draws inspiration from biological systems, aiming to give robots a sense of touch analogous to our own. A diverse range of sensor technologies is being explored, each offering distinct strengths in detecting physical contact and object properties. A key innovation lies in the use of simulation tools to generate large-scale tactile datasets. These virtual environments allow researchers to test and refine sensor designs and algorithms without the limitations of physical experimentation, accelerating the development process.

Furthermore, these simulations provide a controlled environment for training algorithms to interpret complex tactile data, such as force, texture, and contact location. This approach moves beyond simply detecting touch to understanding the properties of objects and surfaces. Recent work revisits fundamental areas such as sensor types and distributed sensing while incorporating these new computational tools. Researchers are also focusing on benchmarking tactile systems, establishing standardised methods for evaluating performance and comparing different technologies. This emphasis on rigorous evaluation is crucial for driving progress and ensuring that tactile sensors meet the demands of real-world applications. The ultimate goal is to create robots with multi-modal sensing capabilities, combining touch with vision and other senses for a more robust and nuanced understanding of their surroundings.

Realistic Tactile Sensing via Advanced Simulation

Researchers are making significant strides in equipping robots with a sense of touch comparable to humans, a crucial step towards safe and effective interaction with the world and with people. This progress relies heavily on tactile sensors, which detect physical contact and translate it into electronic signals, but creating realistic and reliable tactile sensing systems presents considerable challenges. A key innovation lies in the development of sophisticated simulation tools that allow researchers to design, test, and refine these sensors and the algorithms that interpret their data. Current tactile sensors often employ soft, deformable materials to mimic the sensitivity of human skin.

However, accurately modelling the complex interplay between contact forces, material deformation, and the resulting sensor readings is computationally demanding. Researchers are tackling this challenge with both physics-based and data-driven simulation methods. Physics-based approaches build detailed physical models to replicate how the sensor responds to force and pressure. An increasingly popular approach leverages the power of machine learning. By training models on large datasets of sensor readings, researchers can predict how a sensor will behave in new situations.
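The contrast between the two simulation styles can be sketched in a few lines. As the physics-based side, the example uses the classical Hertz formula for a rigid sphere pressed into an elastic half-space, F = (4/3) E* sqrt(R) d^(3/2). As the data-driven side, it fits a power law to sampled force readings by least squares in log space. Both are stand-ins chosen for brevity: the radius and modulus values are assumed, the "measurements" are generated from the physics model rather than a real sensor, and practical data-driven simulators typically use far richer models such as neural networks.

```python
import math


def hertz_force(indentation_m, radius_m=0.005, eff_modulus_pa=1.0e5):
    """Physics-based model: Hertzian contact force in newtons.

    The default radius and effective modulus are assumed values
    for a small indenter on a soft elastomer.
    """
    return (4.0 / 3.0) * eff_modulus_pa * math.sqrt(radius_m) * indentation_m ** 1.5


def fit_power_law(xs, ys):
    """Data-driven surrogate: fit y = scale * x**exponent.

    Uses ordinary least squares on (log x, log y).
    """
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    variance = sum((a - mean_x) ** 2 for a in log_x)
    exponent = covariance / variance
    scale = math.exp(mean_y - exponent * mean_x)
    return scale, exponent


# Stand-in "sensor readings": indentations from 0.2 mm to 2 mm.
depths = [0.0002 * k for k in range(1, 11)]
forces = [hertz_force(d) for d in depths]

scale, exponent = fit_power_law(depths, forces)


def surrogate_force(indentation_m):
    """Predict force at a new indentation using only the fitted model."""
    return scale * indentation_m ** exponent
```

Because the training data here is noise-free and follows an exact power law, the fitted exponent comes out at about 1.5; with real sensor data the surrogate would only approximate the underlying physics.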

Techniques like Generative Adversarial Networks (GANs) are used to generate realistic tactile data, bridging the gap between simulated environments and real-world performance. These data-driven methods are particularly effective at modelling complex sensor behaviour and can significantly reduce the computational burden compared to purely physics-based simulations. The integration of tactile simulation with robot simulation engines, such as GraspIt!, Gazebo, and MuJoCo, is proving particularly fruitful. By combining these tools, researchers can create virtual environments where robots can “feel” and interact with objects, allowing for extensive testing and refinement of tactile sensing systems.

Furthermore, geometry-based methods, which use depth cameras to capture the shape of objects at the point of contact, are enhancing the fidelity of these simulations. These advances in tactile simulation are not merely academic exercises. They are paving the way for robots that can perform delicate tasks in manufacturing, provide more effective assistance in healthcare, and even improve recycling and agricultural processes. By enabling robots to “feel” their environment, researchers are bringing us closer to a future where robots and humans can collaborate safely and seamlessly.

Tactile Robotics Advances and Remaining Challenges

Tactile robotics has made considerable progress, yielding diverse applications across manufacturing, healthcare, agriculture, and human-robot interaction. Recent developments demonstrate enhanced capabilities in areas such as precision manipulation, safe interaction with dynamic environments, and improved navigation for legged robots. Multi-modal contact processing, utilising full-body tactile sensing, now enables robots to achieve compliance, control forces, and avoid collisions effectively, a substantial step towards more sophisticated and interactive robotic systems.

Current research focuses on managing the increasing volume of tactile data and integrating diverse sensor types, with emerging issues relating to distributed energy, computing, and communication hardware in large-scale tactile networks. The authors acknowledge that their review does not cover all relevant topics, specifically mentioning the complexities of distinguishing self-caused tactile sensations from external stimuli and the question of optimal sensor coverage across a robot’s body. Future work will likely need to address these issues to further refine tactile sensing capabilities and broaden the scope of robotic applications.

👉 More information

🗞 Tactile Robotics: An Outlook

🧠 ArXiv: https://arxiv.org/abs/2508.11261