Achieving high-fidelity quantum states is a critical challenge in the development of quantum technologies, including communication, sensing, and computation. Junpei Oba and Hsin-Pin Lo, working with colleagues at Toyota Central R&D Labs., Inc. and NTT Basic Research Laboratories, present a new framework for pinpointing the specific causes of errors that degrade these states. The standard way of assessing quantum state quality, quantum state tomography, captures the combined effect of many error sources in a single result, obscuring their individual contributions. The team's approach combines simulation and optimisation: it models potential error sources and then refines the model parameters until the simulated state accurately reproduces the experimental data. Applied to time-bin entangled photon pair experiments, the model accounts for 86% of the observed error, offering a pathway to improving quantum state quality across various quantum platforms.

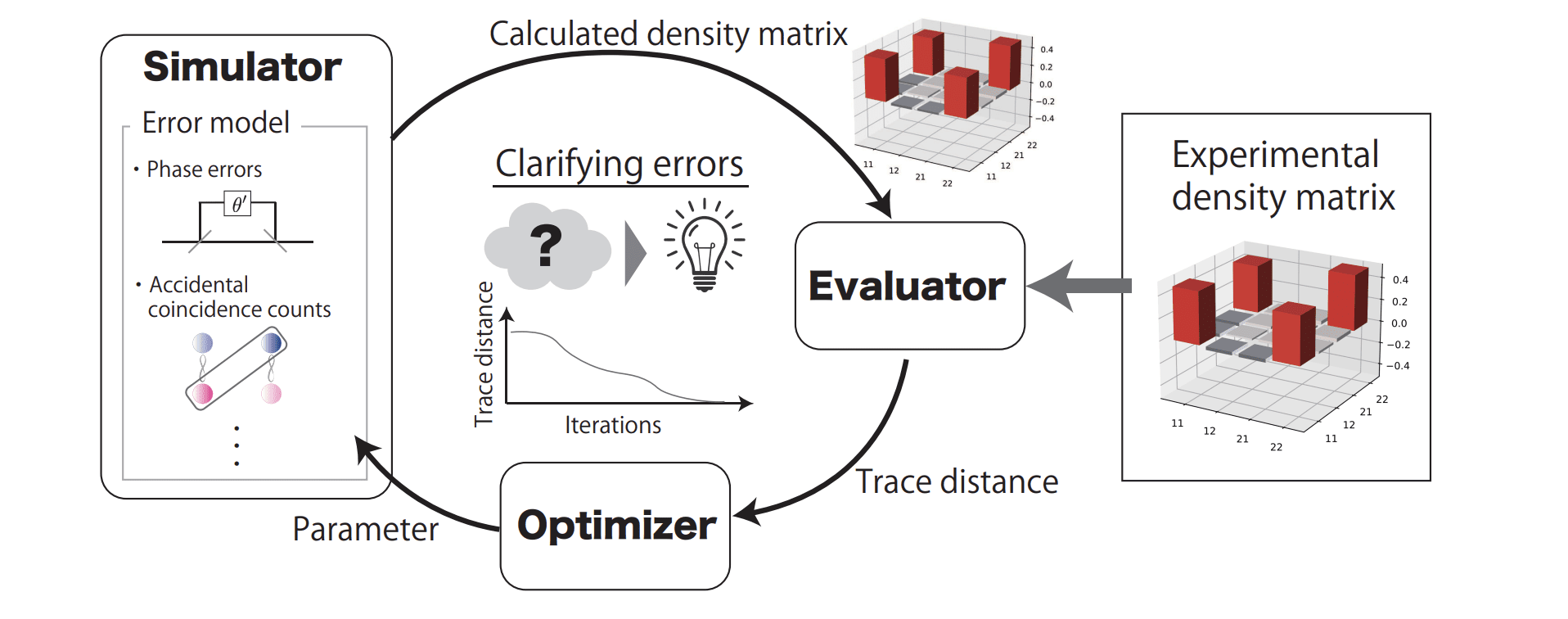

Quantum information processing encompasses areas such as quantum communication, quantum sensing, and quantum computing. In practice, the quality of quantum states degrades due to various error sources, and quantum state tomography serves as a standard diagnostic tool. However, quantum state tomography often aggregates multiple error sources within a single measurement, making individual identification challenging. To address this problem, researchers propose an automated method for quantifying error sources by combining simulation and parameter optimisation to reproduce the experimental quantum state. The focus of this work is the experimental generation of time-bin entangled photon pairs, for which relevant error sources are modelled and simulated.
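The aggregation problem can be illustrated with a toy model. The sketch below assumes an ideal time-bin Bell state (|EE> + |LL>)/sqrt(2), with E and L the early and late time bins, and two hypothetical error mechanisms: dephasing, which scales the coherence terms by a factor v, and white noise with weight p. The model, the parameter names, and the helper functions are illustrative assumptions, not the error model used in the paper. With suitable values, both mechanisms produce the same fidelity of 0.9 to the ideal state, so that single figure of merit cannot say which error is present. The full density matrices do differ, but once several mechanisms act at the same time, separating their contributions from the reconstructed matrix is exactly the inverse problem the framework addresses.

```python
import numpy as np

# Basis order |EE>, |EL>, |LE>, |LL> (E = early, L = late time bin).
PHI = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)  # (|EE> + |LL>)/sqrt(2)


def noisy_state(v: float, p: float) -> np.ndarray:
    """Hypothetical two-parameter error model (illustration only).

    v: factor scaling the |EE><LL| coherence terms (dephasing).
    p: weight of white noise (the maximally mixed state I/4).
    """
    rho = np.zeros((4, 4), dtype=complex)
    rho[0, 0] = rho[3, 3] = 0.5
    rho[0, 3] = rho[3, 0] = 0.5 * v
    return (1 - p) * rho + p * np.eye(4) / 4


def fidelity_to_ideal(rho: np.ndarray) -> float:
    # For a pure target state the fidelity is <PHI| rho |PHI>.
    return float(np.real(PHI.conj() @ rho @ PHI))


# One state with only dephasing, one with only white noise.
rho_dephased = noisy_state(v=0.8, p=0.0)
rho_white = noisy_state(v=1.0, p=2 / 15)

print(round(fidelity_to_ideal(rho_dephased), 6))  # 0.9
print(round(fidelity_to_ideal(rho_white), 6))     # 0.9
print(np.allclose(rho_dephased, rho_white))       # False
```

Analytically, the fidelity in this toy model is (1 - p)(1 + v)/2 + p/4, so many (v, p) pairs lie on the same fidelity contour.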

Entangled Photons and Quantum Key Distribution

This research area centres on the creation, manipulation, and distribution of quantum states, such as entangled photons, for applications in quantum communication, computation, and sensing. Much of the related work details experimental setups, particularly those that generate entangled photon pairs by spontaneous four-wave mixing. Quantum key distribution (QKD), which uses quantum principles for secure communication, is a recurring application. Both polarisation entanglement and time-bin entanglement, in which the entanglement is encoded in the photons' arrival times, are explored. Quantum states are inherently fragile, and imperfections in experimental setups introduce errors that limit the performance of quantum devices. Diagnosing these errors has traditionally relied on quantum state tomography, which gives an overall picture of a state's quality but struggles to isolate the individual contributing factors. The new approach combines computer simulation with parameter optimisation to reverse-engineer the errors present in an experimentally measured quantum state.
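To make the time-bin encoding concrete, the sketch below assumes the ideal state (|EE> + e^{i phi}|LL>)/sqrt(2), where E and L are the early and late arrival-time bins, and treats an unwanted relative phase between the bins as a hypothetical error source (for example, drift in an interferometer). The basis ordering, the choice of error, and the function names are illustrative assumptions. For pure states the fidelity works out to (1 + cos(offset))/2, so small phase offsets cost little while larger ones quickly add up.

```python
import numpy as np


def time_bin_state(phi: float) -> np.ndarray:
    """(|EE> + e^{i phi}|LL>)/sqrt(2) in the basis |EE>, |EL>, |LE>, |LL>."""
    psi = np.zeros(4, dtype=complex)
    psi[0] = 1 / np.sqrt(2)
    psi[3] = np.exp(1j * phi) / np.sqrt(2)
    return psi


def pure_fidelity(psi: np.ndarray, chi: np.ndarray) -> float:
    # |<psi|chi>|^2; np.vdot conjugates its first argument.
    return float(abs(np.vdot(psi, chi)) ** 2)


target = time_bin_state(0.0)
for offset in (0.0, 0.1, 0.3):
    # Hypothetical error source: an unwanted relative phase between time bins.
    f = pure_fidelity(target, time_bin_state(offset))
    # Analytically, f = (1 + cos(offset)) / 2.
    print(f"offset {offset:.1f} rad -> fidelity {f:.4f}")
```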

By modelling likely error sources, such as imperfections in optical components and detector limitations, the researchers built a simulated quantum state with adjustable parameters. They then refined the simulation by automatically tuning these parameters until the simulated state closely matched the experimentally measured one, with agreement quantified by the trace distance, a standard measure of how distinguishable two quantum states are. The optimisation reduced the trace distance between the simulated and experimental states from 0.177 to 0.024, a relative reduction of about 86%, indicating that the model explains 86% of the errors.
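Trace distance has a simple closed form: T(rho, sigma) = (1/2) ||rho - sigma||_1, and because rho - sigma is Hermitian this equals half the sum of the absolute values of its eigenvalues. The minimal sketch below computes it with NumPy and then reproduces the 86% figure, assuming that figure is the relative reduction (0.177 - 0.024)/0.177, which the reported numbers are consistent with. The single-qubit test states are illustrative only.

```python
import numpy as np


def trace_distance(rho: np.ndarray, sigma: np.ndarray) -> float:
    """T(rho, sigma) = 1/2 * ||rho - sigma||_1.

    rho - sigma is Hermitian, so its trace norm is the sum of the absolute
    values of its (real) eigenvalues.
    """
    eigvals = np.linalg.eigvalsh(rho - sigma)
    return float(0.5 * np.sum(np.abs(eigvals)))


# Sanity checks on single-qubit states.
zero = np.array([[1, 0], [0, 0]], dtype=complex)  # |0><0|
one = np.array([[0, 0], [0, 1]], dtype=complex)   # |1><1|
mixed = np.eye(2, dtype=complex) / 2               # maximally mixed state
print(trace_distance(zero, one))    # 1.0 (orthogonal states)
print(trace_distance(zero, mixed))  # 0.5

# Fraction of the deviation accounted for, from the reported values.
t_before, t_after = 0.177, 0.024
explained = 1 - t_after / t_before
print(f"{explained:.0%}")  # 86%
```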

Importantly, reducing, in the experiment, the error sources predicted by the simulation led to a corresponding improvement in the quality of the actual quantum state, consistent with the model's diagnosis. The method is not tied to photon-based systems, so it is expected to apply to a wide range of quantum platforms. By accounting for roughly 86% of the observed deviation and pointing to the specific factors that limit state quality, this kind of model-based characterisation lets researchers target individual error sources rather than treating state degradation as a single aggregate number, which is crucial for improving the fidelity of quantum operations.
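The fitting step can be sketched as a search over error parameters that minimises the trace distance between the simulated and measured density matrices. This is a toy under stated assumptions: the two-parameter model (dephasing v, white noise p), the grid search standing in for the authors' optimiser, and the synthetic "experimental" state are all hypothetical. A small phase error is deliberately left out of the fitted model, so, as in a real experiment, a residual distance remains after fitting.

```python
import itertools

import numpy as np


def model_state(v: float, p: float, phi: float = 0.0) -> np.ndarray:
    """Hypothetical error model around (|EE> + e^{i phi}|LL>)/sqrt(2).

    Basis order |EE>, |EL>, |LE>, |LL>. v scales the coherence terms
    (dephasing), p mixes in white noise, phi is a relative phase.
    """
    rho = np.zeros((4, 4), dtype=complex)
    rho[0, 0] = rho[3, 3] = 0.5
    rho[3, 0] = 0.5 * v * np.exp(1j * phi)
    rho[0, 3] = np.conj(rho[3, 0])
    return (1 - p) * rho + p * np.eye(4) / 4


def trace_distance(rho: np.ndarray, sigma: np.ndarray) -> float:
    # Half the sum of |eigenvalues| of the Hermitian difference.
    return float(0.5 * np.sum(np.abs(np.linalg.eigvalsh(rho - sigma))))


# Synthetic stand-in for a tomographically reconstructed state: the model
# plus a small phase error (phi = 0.1) that the fit below does not include.
rho_exp = model_state(v=0.85, p=0.06, phi=0.1)

# Baseline: the error-free simulation compared with the "experiment".
t_ideal = trace_distance(model_state(v=1.0, p=0.0), rho_exp)

# Exhaustive grid search over the two modelled parameters (a simple
# stand-in for whatever optimiser is used in practice).
grid = np.linspace(0.0, 1.0, 101)
best_t, best_v, best_p = min(
    (trace_distance(model_state(v, p), rho_exp), v, p)
    for v, p in itertools.product(grid, grid)
)

print(f"error-free model: T = {t_ideal:.3f}")
print(f"fitted model:     T = {best_t:.3f} (v = {best_v:.2f}, p = {best_p:.2f})")
print(f"fraction of deviation explained: {1 - best_t / t_ideal:.0%}")
```

A grid keeps the sketch dependency-free but scales poorly; with more error parameters, a gradient-free optimiser such as Nelder-Mead would be a more practical choice.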

👉 More information

🗞 Model-based framework for automated quantification of error sources in quantum state tomography

🧠 arXiv: https://arxiv.org/abs/2508.05538