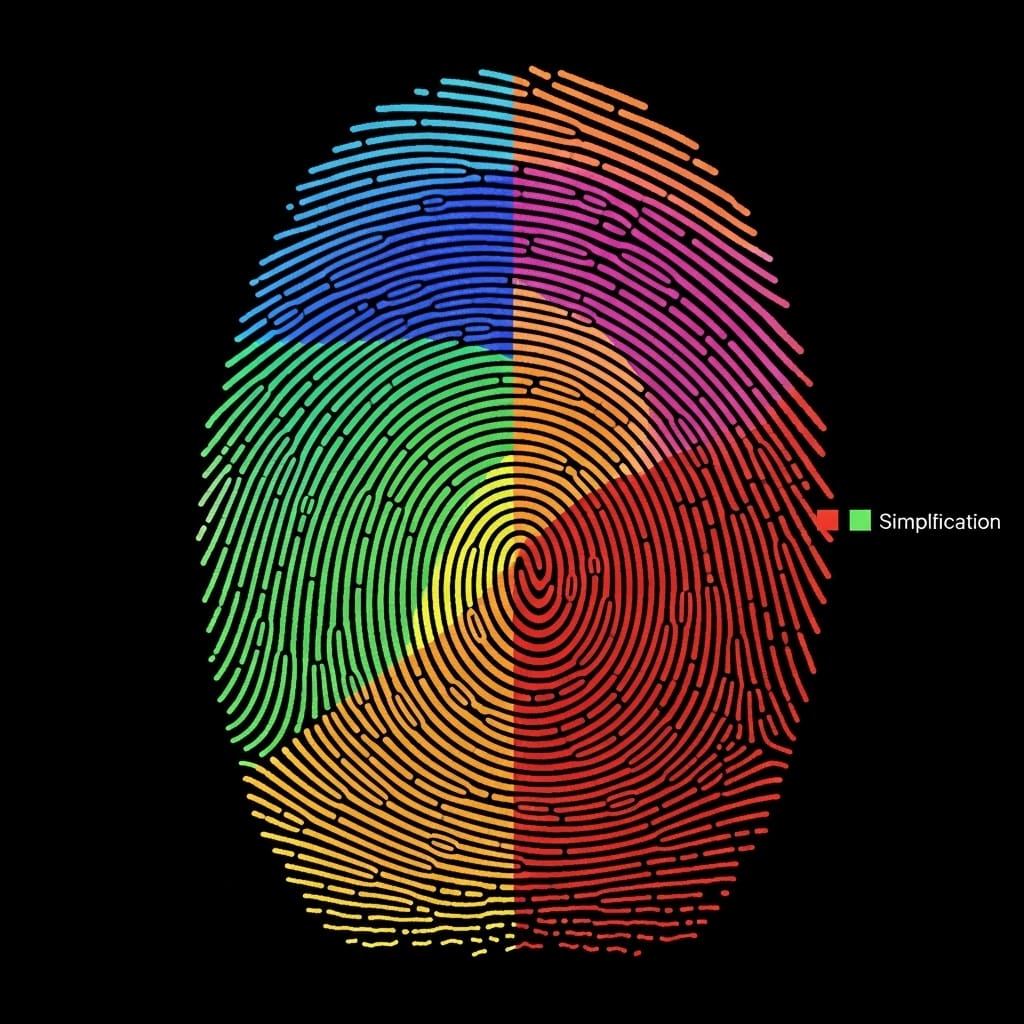

Researchers are tackling the challenge of understanding how Large Language Models simplify text, a crucial step towards building truly adaptive systems. Lars Klöser, Mika Elias Beele, and Bodo Kraft, all from Aachen University of Applied Sciences, present a new diagnostic toolkit called the Simplification Profiler, which creates detailed ‘fingerprints’ of a model’s simplification behaviour. Rather than only measuring simplification quality, the approach focuses on identifying a model’s unique characteristics, which is particularly important for languages where training data is limited. By demonstrating that these fingerprints can distinguish between different model configurations with F1-scores of up to 71.9%, the team provides developers with a granular and actionable method for building more effective text simplification tools.

The research team achieved this by moving away from reliance on human-rated datasets and instead focusing on measuring a model’s unique behavioural signature through aggregated simplifications. This innovative approach is particularly vital for languages like German, where data scarcity exacerbates the challenge of creating flexible models adaptable to diverse target groups rather than a single simplification style.

The core of the Simplification Profiler lies in its ability to create a multidimensional fingerprint based on linguistically grounded properties. These are divided into universal quality criteria (linguistic correctness, factual accuracy, and coherence) and context-dependent parameters (such as linguistic level, content scope, terminology use, and text length). The researchers operationalized this concept with a meta-evaluation that tests the descriptive power of the fingerprints, bypassing the need for extensive human annotation. The test assessed whether a simple linear classifier could reliably identify different model configurations based solely on their generated simplifications, which would confirm that the metrics are sensitive to specific model characteristics. Experiments show the Profiler can distinguish between high-level behavioural variations stemming from different prompting strategies and fine-grained changes resulting from prompt engineering, including the impact of few-shot examples.

The complete feature set achieved classification F1-scores up to 71.9 %, a substantial improvement of over 48 percentage points compared to simpler baseline methods. This demonstrates the Profiler’s granular, actionable analysis capabilities, offering developers a powerful tool to build more effective and truly adaptive text simplification systems. The team’s approach is compositional, leveraging robust, well-established tools for specific aspects like Natural Language Inference models, grammar checkers, and readability indices, rather than building a single monolithic evaluation model. This allows the Profiler to benefit from existing expert knowledge and broad training data, potentially enhancing the generalizability and reliability of individual measurements.
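The compositional idea can be illustrated with a minimal Python sketch: several independent metric functions are each applied to every (original, simplification) pair, and their averages form the fingerprint. Everything here is an assumption for illustration. The two metrics are deliberately crude placeholders standing in for the NLI models, grammar checkers, and readability indices mentioned above, and the names `fingerprint`, `length_ratio`, and `mean_word_length` are hypothetical, not part of the authors' toolkit.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# A metric maps an (original, simplification) pair to a single number.
Metric = Callable[[str, str], float]


def length_ratio(original: str, simplified: str) -> float:
    """Placeholder metric: word count of the simplification relative to the original."""
    return len(simplified.split()) / max(len(original.split()), 1)


def mean_word_length(original: str, simplified: str) -> float:
    """Placeholder metric: a crude stand-in for a real readability index."""
    words = simplified.split()
    return mean(len(w) for w in words) if words else 0.0


def fingerprint(pairs: List[Tuple[str, str]], metrics: Dict[str, Metric]) -> Dict[str, float]:
    """Average each metric over all pairs to obtain one value per dimension."""
    return {name: mean(fn(o, s) for o, s in pairs) for name, fn in metrics.items()}


# Toy data, invented for this example.
pairs = [("a b c d", "a b"), ("one two three four", "one two three")]
fp = fingerprint(pairs, {"length_ratio": length_ratio, "mean_word_length": mean_word_length})
print(fp)
```

Because each dimension is computed by a separate function, a single metric can be swapped for a stronger tool without touching the rest of the fingerprint.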

The research establishes that a multi-dimensional analysis of specific text properties provides an informative and nuanced fingerprint of automatic text simplification (ATS) system performance, enabling targeted development and iterative improvements to LLMs. Experiments revealed that the Profiler can reliably distinguish between high-level behavioural variations in prompting strategies and fine-grained changes resulting from few-shot examples. This evaluation paradigm is particularly vital for languages where data scarcity presents a significant challenge.

The team meticulously generated a diverse testbed of simplified texts using the German Wikipedia as a primary data source, employing the Gemma model family with 1B, 4B, and 12B parameters. They created 18 distinct simplification configurations by combining two core prompting strategies, plain prompts and property-oriented prompts, alongside variations with and without few-shot examples. Measurements confirm that the toolkit can detect nuanced impacts of in-context learning, demonstrating its sensitivity to subtle changes in model behaviour. This methodology allows for a clear isolation and measurement of the distinct effects of different models and prompting strategies.

Results demonstrate the Profiler’s ability to quantify the tension between simplifying linguistic form and preserving semantic content. The toolkit’s metrics are organised into three key areas, with semantic fidelity assessed using two NLI-based metrics. Specifically, the Content Correctness (COR) metric calculates the aggregated absence of contradictions between original sentences and their simplifications, expressed as a percentage: $SC_{Cor} = \frac{1}{|T_O|} \sum_{s_i^{T_O} \in T_O} \bigl(1 - P(\text{contradiction})\bigr) \cdot 100$, where $T_O$ is the set of original sentences and $P(\text{contradiction})$ is the contradiction probability the NLI model assigns to sentence $s_i^{T_O}$ given the simplification.
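As a worked illustration of the Content Correctness score, the following Python sketch averages the absence of contradiction over the original sentences and scales it to a percentage. It assumes the per-sentence contradiction probabilities have already been produced by some NLI model. The function name `content_correctness` and the example probabilities are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def content_correctness(contradiction_probs: Sequence[float]) -> float:
    """Mean of (1 - P(contradiction)) over all original sentences, as a percentage."""
    if not contradiction_probs:
        raise ValueError("need at least one sentence-level probability")
    total = sum(1.0 - p for p in contradiction_probs)
    return 100.0 * total / len(contradiction_probs)


# Invented NLI outputs: P(contradiction) for three original sentences.
probs = [0.0, 0.5, 0.25]
score = content_correctness(probs)
print(score)  # 75.0
```

A score of 100 would mean the NLI model finds no contradiction for any original sentence. Lower values indicate that the simplification conflicts with parts of the source.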

The results indicate that the toolkit’s granular, actionable analysis can help developers build more effective and adaptive text simplification systems. The Simplification Profiler offers a multi-dimensional analysis of specific text properties, providing an informative fingerprint of system performance. This allows for targeted development, as the toolkit is sensitive enough to detect the fingerprints of different development choices, including variations in models and prompts. This confirms that the Profiler’s metrics are sensitive to specific model characteristics and provide a nuanced understanding of performance. The authors acknowledge that the evaluated dimensions (linguistic correctness, adequacy, and complexity) are not exhaustive. Further research should incorporate metrics for pragmatic qualities like tone, register, user engagement, and trust, tailoring the Profiler to specific simplification goals. Future work will also involve conducting human correlation studies and expanding the toolkit to new languages. This development represents a key step towards building more adaptive and responsible text simplification systems, enabling developers to make targeted adjustments and understand the trade-offs between readability, content coverage, and linguistic correctness.

👉 More information

🗞 Profiling German Text Simplification with Interpretable Model-Fingerprints

🧠 ArXiv: https://arxiv.org/abs/2601.13050