Researchers are tackling the challenge of creating truly personalised visual content with a new evaluation framework called the Visual Personalization Turing Test (VPTT). Rameen Abdal from Snap Research, James Burgess from Stanford University, Sergey Tulyakov from Snap Research, Kuan-Chieh Jackson Wang from Snap Research, and colleagues present a paradigm that moves beyond replicating a person’s style and instead asks whether generated content feels as if that individual created it. The work introduces VPTT-Bench, a 10k-persona benchmark, alongside a new metric, the VPTT Score, which is calibrated against both human and large language model judgements. Together they offer a scalable and privacy-safe approach to assessing perceptual indistinguishability in personalised generative models.

The VPTT assesses whether a model’s generated content (images, videos, or 3D assets) is perceptually indistinguishable from content a specific person might plausibly create or share.

This approach reframes personalization as simulating a personal perspective rather than merely reproducing appearance. To operationalize the VPTT, the researchers present the VPTT Framework, which integrates three components: VPTT-Bench, a 10,000-persona benchmark; VPRAG, a visual retrieval-augmented generator; and the VPTT Score, a text-only metric.

VPTT-Bench addresses the inaccessibility of real-world user data by constructing a large-scale benchmark of synthetic personas represented through “deferred renderings”: structured, attribute-rich descriptions that preserve privacy. The team reports a strong correlation between human evaluations, calibrated Visual Language Model (VLM) judgements, and the automated VPTT Score, which supports the score’s reliability as a perceptual proxy.

Experiments indicate that VPRAG achieves the best balance between alignment and originality in personalized generation among the methods compared. The system uses hierarchical semantic retrieval to interpret a user’s style from their visual history and apply it to new content, offering a scalable and privacy-safe foundation for personalized generative AI.

The research establishes a new evaluation framework and, through an analysis of approximately 120,000 evaluations, supports the VPTT Score as a reliable proxy for human perception of personalization. The work could open possibilities for monetizing generative AI, building trustworthy systems, and creating personally resonant experiences. Under the VPTT, a model succeeds when its output is indistinguishable from content a person might plausibly create or share.

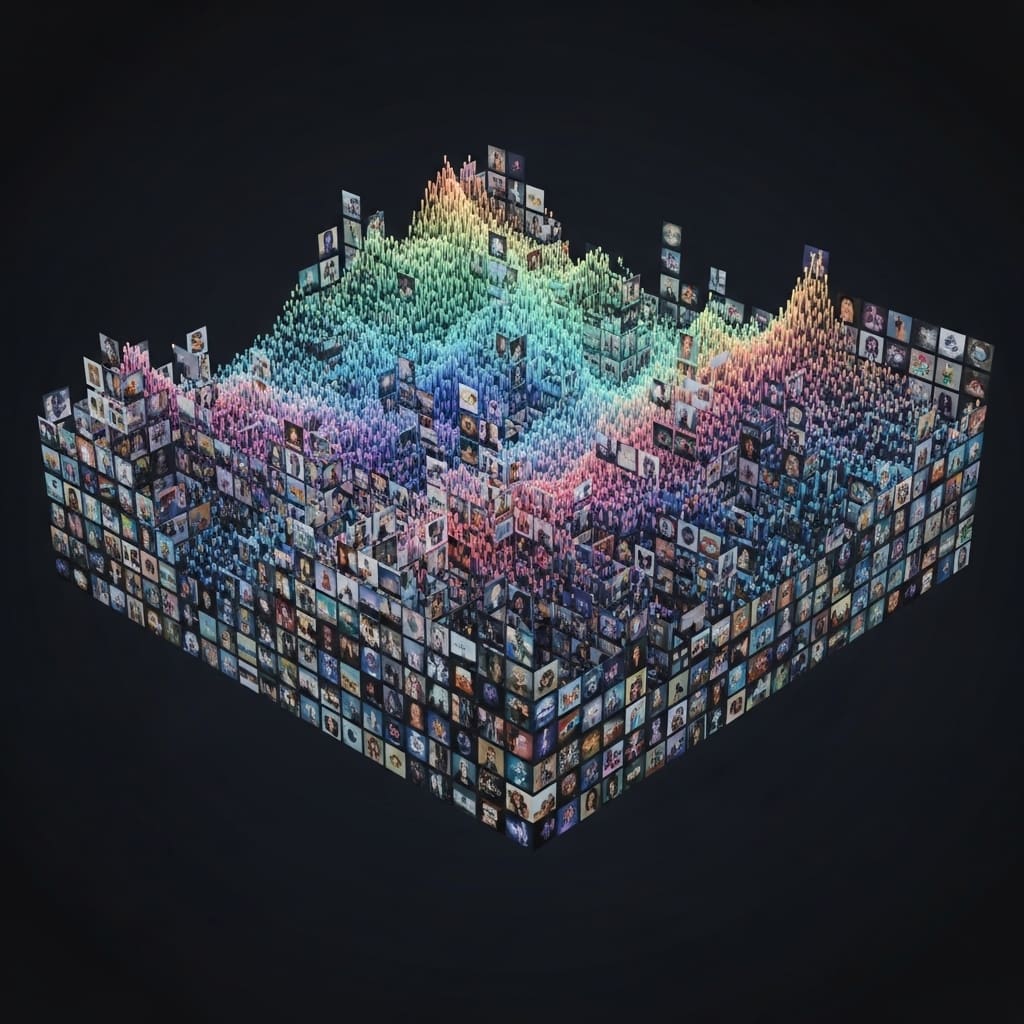

VPTT-Bench was constructed with a deferred rendering pipeline that begins by sampling personas, together with their associated demographic data, from PersonaHub.

Visual and scenario elements (lighting, materials, environment, actions, foreground, and background) were then extracted and composed into structured captions using a large language model. This process generated 30 corresponding visual assets per persona, creating a privacy-safe and semantically grounded dataset for evaluating contextual personalization.
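As a rough illustration, a single deferred rendering can be pictured as a small structured record. The sketch below is an assumption, not the benchmark's actual schema: the class names, field names, and the fixed caption template are hypothetical, and the article says the real captions are composed by a large language model rather than by a template.

```python
from dataclasses import dataclass, field
from typing import List

# Figure stated in the article: 30 visual assets per persona.
ASSETS_PER_PERSONA = 30


@dataclass
class DeferredRendering:
    """One asset described by attributes instead of pixels (hypothetical schema)."""
    lighting: str
    materials: str
    environment: str
    actions: str
    foreground: str
    background: str

    def to_caption(self) -> str:
        # A fixed template stands in for the LLM-composed caption.
        return (
            f"{self.foreground}, {self.actions}, in {self.environment}; "
            f"background: {self.background}; lighting: {self.lighting}; "
            f"materials: {self.materials}"
        )


@dataclass
class Persona:
    """A synthetic persona and its deferred renderings (hypothetical schema)."""
    persona_id: str
    description: str
    assets: List[DeferredRendering] = field(default_factory=list)


if __name__ == "__main__":
    # Invented example values, for illustration only.
    asset = DeferredRendering(
        lighting="soft morning light",
        materials="worn oak and linen",
        environment="a small home studio",
        actions="sketching at a desk",
        foreground="a ceramicist",
        background="shelves of unglazed pots",
    )
    print(asset.to_caption())
```

Because the record holds only text attributes, it can be stored, compared, and scored without any image being rendered, which is what makes the representation privacy-safe and cheap to evaluate at scale.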

Researchers rendered approximately 1,000 synthetic personas to build a rich visual library for this purpose. They then built VPRAG, a system for visual personalization that avoids costly retraining by conditioning generation on a persona’s existing assets. VPRAG employs hierarchical semantic retrieval, with an optional learnable feedback mechanism, to compose a personalized prompt enriched with the persona’s own stylistic elements.
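The retrieve-then-compose idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not VPRAG itself: the two-level ranking (whole assets first, then individual attributes), the token-overlap similarity used in place of learned embeddings, and all function names are hypothetical, and the optional learnable feedback mechanism is omitted.

```python
import re
from typing import Dict, List, Tuple


def tokens(text: str) -> set:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity; a stand-in for an embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def retrieve(query: str, history: List[Dict[str, str]],
             top_assets: int = 2, top_attrs: int = 2) -> List[Tuple[str, str]]:
    """Return the (attribute, value) pairs most related to the query."""
    # Level 1 (coarse): rank whole assets against the query.
    ranked = sorted(
        history,
        key=lambda asset: jaccard(query, " ".join(asset.values())),
        reverse=True,
    )[:top_assets]
    # Level 2 (fine): rank individual attributes within the kept assets.
    candidates = [
        (jaccard(query, value), name, value)
        for asset in ranked
        for name, value in asset.items()
    ]
    candidates.sort(key=lambda c: c[0], reverse=True)
    return [(name, value) for _, name, value in candidates[:top_attrs]]


def compose_prompt(query: str, history: List[Dict[str, str]], **kwargs) -> str:
    """Append retrieved style cues to the user's request."""
    cues = retrieve(query, history, **kwargs)
    if not cues:
        return query
    styled = "; ".join(f"{name}: {value}" for name, value in cues)
    return f"{query}. Personal style cues: {styled}"
```

The point of the design is that personalization happens entirely in the prompt, so the underlying generator needs no fine-tuning for each persona.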

Evaluation was two-fold. At the text level, the VPTT Score serves as an automatic proxy for the full VPTT. At the visual level, the VPTT itself was validated by a human study and extended with calibrated Visual Language Model (VLM) judges. The three levels of evaluation (VPTT Score, VLM, and human) correlated strongly with one another.
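One way to check this kind of agreement is to score the same set of generated items with each evaluator and compute pairwise correlations. The sketch below assumes Pearson correlation and hypothetical function names; the article does not say which statistic the authors used.

```python
import math
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(vx * vy)


def pairwise_agreement(
    scores: Dict[str, List[float]]
) -> Dict[Tuple[str, str], float]:
    """Correlation for every unordered pair of evaluators.

    `scores` maps an evaluator name (for example a human panel, a VLM judge,
    or the text-level metric) to its scores over the same ordered items.
    """
    names = list(scores)
    return {
        (a, b): pearson(scores[a], scores[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }
```

If the text-level metric is a good proxy, its correlation with the human scores should be high and comparable to the correlation between the VLM judge and the human scores.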

A large-scale deferred rendering analysis, comprising approximately 120,000 evaluations using the VPTT Score, found that VPRAG’s structured design achieves the best trade-off between output alignment and novelty.

The team measured strong agreement across all three evaluation methods (human raters, calibrated VLM judges, and the VPTT Score), which supports the VPTT Score as a reflection of human perception of visual authenticity.

VPTT-Bench comprises approximately 10,000 synthetic personas represented through “deferred renderings”: structured, attribute-rich intermediates that defer visual realization. This approach enables privacy-safe research at scale without requiring access to real-world user data.

Additionally, the team rendered around 1,000 synthetic personas to create a rich visual library for training and evaluation.

The core idea is to move beyond replicating identity and instead focus on plausibility, establishing a benchmark for truly personalized generative models.

VPRAG consistently outperformed baseline models in balancing originality with contextual grounding, offering a scalable and privacy-preserving approach to personalized generation across different models. The authors acknowledge two limitations: VPTT-Bench is synthetic, and persona creation relies on a single family of generator models. Future research will focus on incorporating real-user data through opt-in and federated learning methods to bridge the gap between simulated and genuine personalization while maintaining user privacy.

👉 More information

🗞 Visual Personalization Turing Test

🧠 ArXiv: https://arxiv.org/abs/2601.22680