Improving image and video generation relies heavily on reward models, yet how to scale these models effectively has remained poorly understood. Jie Wu, Yu Gao, and Zilyu Ye, along with Ming Li, Liang Li, and Hanzhong Guo, present RewardDance, a new framework that tackles fundamental limitations of current reward-model approaches. The researchers reformulate the reward signal as a probability, essentially asking a vision-language model whether a generated image is better than a reference, and show that this lets reward models scale to as many as 26 billion parameters. The approach improves the quality and diversity of generated images and videos, and it also counters the critical problem of “reward hacking”, where models exploit flaws in the reward system rather than genuinely improving quality, paving the way for more reliable and creative generative AI.

Current reward models are limited by their architectures and input formats, which hinder both effective scaling and alignment with vision-language models (VLMs). Critically, optimisation with Reinforcement Learning from Human Feedback (RLHF) is plagued by “reward hacking,” in which models exploit flaws in the reward signal without improving true quality. To address these challenges, the researchers introduce RewardDance, a scalable reward modelling framework built on a generative reward paradigm. It reformulates the reward score as the model’s probability of predicting a “yes” token, that is, of judging that the generated image outperforms a reference image.

Latent Diffusion Models and Image Generation

Latent Diffusion Models form the foundation for many recent advances in image generation. Score-Based Generative Modeling offers an alternative approach, while Hierarchical Text-Conditional Image Generation with CLIP Latents guides image creation using textual descriptions. SDXL is an improved latent diffusion model capable of generating high-resolution images, and ByteEdit provides a technique for generative image editing. Qwen-Image is a large-scale image generation model, while Emu3 generates images through next-token prediction, and related research shows that autoregressive models can compete with diffusion models in image generation.

RewardDance Improves Image Generation with Probabilistic Rewards

The research team introduces RewardDance, a new framework designed to significantly improve image and video generation through better reward signals. Existing reward models are limited by their architectures and poorly aligned with VLMs, which hinders their ability to scale effectively. RewardDance overcomes these challenges by reformulating the reward score as a probability, specifically the likelihood of a “yes” token indicating that the generated image is better than a reference image. This approach intrinsically aligns the reward objective with the architecture of VLMs, unlocking improvements in both scaling and contextual understanding.
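A minimal sketch of the core idea, assuming the reward is read off as the softmax probability of a “yes” token at the position where the VLM answers the comparison question. The toy vocabulary, the logit values, and the function name `yes_probability` are hypothetical; a real VLM would supply logits over its full vocabulary, and the paper's exact normalization may differ.

```python
import math


def yes_probability(logits: dict[str, float], yes_token: str = "yes") -> float:
    """Return the softmax probability of `yes_token` given next-token logits.

    `logits` maps each token of a (hypothetical, toy) vocabulary to its raw
    score at the position where the VLM answers the comparison question.
    """
    # Subtract the largest logit before exponentiating for numerical stability.
    max_logit = max(logits.values())
    exp_scores = {tok: math.exp(v - max_logit) for tok, v in logits.items()}
    total = sum(exp_scores.values())
    return exp_scores[yes_token] / total


# Hypothetical logits a VLM judge might emit after a question such as
# "Is image A better than reference image B? Answer yes or no."
toy_logits = {"yes": 2.0, "no": 0.5, "maybe": -1.0}
reward = yes_probability(toy_logits)
print(f"reward = {reward:.3f}")  # prints "reward = 0.786"
```

Because the score comes from the next-token head the VLM already has, this formulation needs no separate regression head, which is one way to read the article's point about aligning the reward objective with the VLM architecture.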

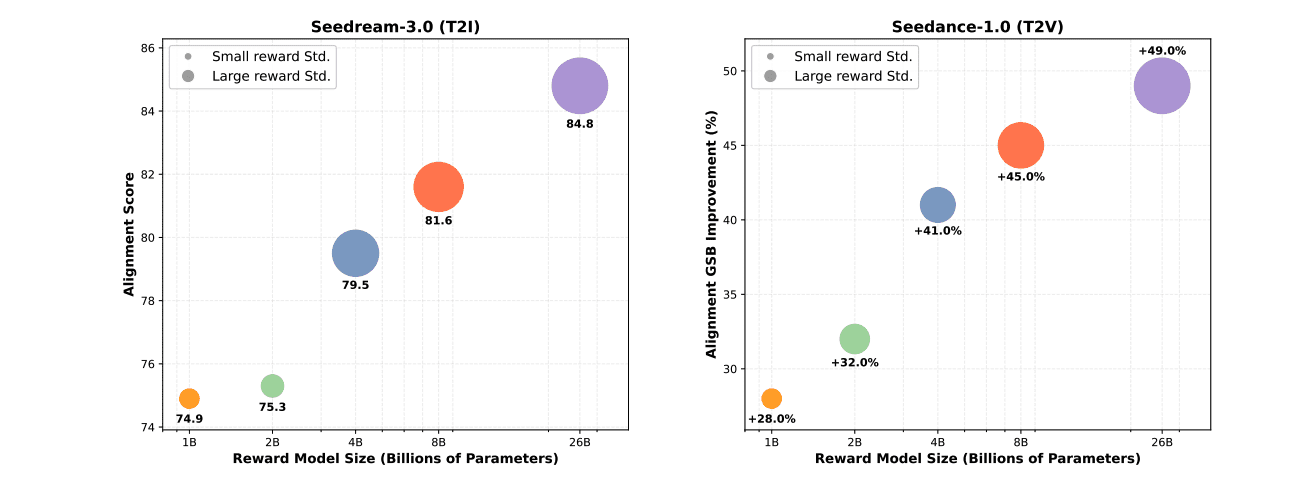

Experiments demonstrate that RewardDance enables the successful scaling of reward models up to 26 billion parameters, a substantial increase over existing methods. This scaling translates directly into better performance: for example, increasing the reward model size from 1 billion to 26 billion parameters raises alignment scores from 67.0 to 73.6 when the reward model is used to fine-tune FLUX.1-dev with reinforcement learning.

Even more dramatic gains were observed with the Seedream-3.0 model, where scores increased from 74.1 to 84.8 with the largest reward model. These results consistently show that larger VLM-based reward models are more effective at capturing human preferences and guiding diffusion models toward higher-quality outputs.
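To put the quoted numbers in proportion, a quick calculation of absolute and relative gains. The score pairs are taken from the article as stated (for Seedream-3.0 the article gives only the lower score and the score with the largest reward model); the `gains` helper is a hypothetical name used for illustration.

```python
# Score pairs quoted in the article: (lower score, score with the 26B reward model).
results = {
    "FLUX.1-dev": (67.0, 73.6),
    "Seedream-3.0": (74.1, 84.8),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in points, relative gain in percent)."""
    absolute = after - before
    relative = 100.0 * absolute / before
    return absolute, relative


for model, (low, high) in results.items():
    abs_gain, rel_gain = gains(low, high)
    print(f"{model}: +{abs_gain:.1f} points ({rel_gain:.1f}% relative)")
# FLUX.1-dev: +6.6 points (9.9% relative)
# Seedream-3.0: +10.7 points (14.4% relative)
```

The Seedream-3.0 gain is larger in both absolute and relative terms, which is what the article's “even more dramatic” refers to.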

The team also addressed the problem of reward hacking. Even at the largest scale of 26 billion parameters, RewardDance maintains high reward variance during fine-tuning, indicating resistance to hacking and an ability to generate diverse, high-quality outputs. The framework also incorporates an inference-time scaling technique, “Search over Paths,” which prunes unpromising generation paths so that only the most promising trajectories are pursued, further enhancing performance. Evaluations on the Bench-240 and SeedVideoBench-1.0 benchmarks confirm these improvements across both image and video generation tasks, demonstrating the versatility and effectiveness of the RewardDance framework.
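The article says only that Search over Paths prunes unpromising generation paths at inference time. The sketch below is one hypothetical, simplified reading of that idea: a beam-style search that advances several sampling trajectories in parallel, scores the intermediate states with a reward function at fixed checkpoints, and keeps only the best `keep` paths. The toy step and reward functions stand in for a diffusion sampler and a RewardDance model; all names and the pruning schedule are assumptions, not the paper's algorithm.

```python
import random
from typing import Callable, List

State = List[float]  # hypothetical stand-in for a partially denoised latent


def search_over_paths(
    init_states: List[State],
    step_fn: Callable[[State, int], State],
    reward_fn: Callable[[State], float],
    num_steps: int,
    prune_every: int,
    keep: int,
) -> State:
    """Advance several trajectories, pruning to the `keep` best at checkpoints."""
    paths = list(init_states)
    for t in range(num_steps):
        paths = [step_fn(s, t) for s in paths]
        # At each checkpoint, score the partial paths and drop unpromising ones.
        if (t + 1) % prune_every == 0 and len(paths) > keep:
            paths.sort(key=reward_fn, reverse=True)
            paths = paths[:keep]
    # Return the highest-scoring surviving trajectory.
    return max(paths, key=reward_fn)


rng = random.Random(0)
target = 1.0


def toy_step(state: State, t: int) -> State:
    # Hypothetical sampling step: drift toward the target plus Gaussian noise.
    x = state[0]
    return [x + 0.3 * (target - x) + rng.gauss(0.0, 0.1)]


def toy_reward(state: State) -> float:
    # Hypothetical reward: states closer to the target score higher.
    return -abs(state[0] - target)


starts = [[rng.uniform(-2.0, 2.0)] for _ in range(8)]
best = search_over_paths(starts, toy_step, toy_reward, num_steps=10, prune_every=3, keep=2)
print(best)
```

Pruning early saves compute, since discarded trajectories are never advanced further; the trade-off is that a path that looks weak at an intermediate checkpoint cannot recover later.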

Reward Prediction as Token Generation Enables Scaling

This work addresses a critical limitation of visual reward models: existing methods struggle to scale effectively and to align with the underlying architecture of VLMs. The researchers introduce RewardDance, a novel framework that reformulates reward prediction as a token generation task. By expressing the reward as the probability of a “yes” token, RewardDance aligns intrinsically with the autoregressive mechanism of VLMs, enabling scaling along two axes: model size, from 1 billion to 26 billion parameters, and context richness, by incorporating task instructions, reference examples, and chain-of-thought reasoning. Extensive experiments across text-to-image, text-to-video, and image-to-video generation show that scaling reward models along these dimensions consistently improves the quality of the reward signal and, consequently, the quality of the generated content.
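To illustrate what “context richness” can mean in practice, here is a minimal sketch of assembling the judge input from a task instruction, optional in-context examples, the prompt, the candidate and reference images, and an optional chain-of-thought request. The `RewardQuery` fields, the `<image:...>` placeholder tags, and the wording of every line are hypothetical; the paper's actual prompt format is not given in the article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RewardQuery:
    prompt: str           # text prompt the generator was given
    candidate_image: str  # hypothetical path/ID of the generated image
    reference_image: str  # hypothetical path/ID of the reference image
    task_instruction: str = "Judge which image better follows the prompt."
    examples: List[str] = field(default_factory=list)  # optional in-context examples
    use_cot: bool = False  # request step-by-step reasoning before the verdict


def build_judge_input(q: RewardQuery) -> str:
    """Assemble a text prompt for a VLM judge; richer context adds more lines."""
    parts = [q.task_instruction]
    for i, ex in enumerate(q.examples, start=1):
        parts.append(f"Example {i}: {ex}")
    parts.append(f"Prompt: {q.prompt}")
    parts.append(f"Image A (candidate): <image:{q.candidate_image}>")
    parts.append(f"Image B (reference): <image:{q.reference_image}>")
    if q.use_cot:
        parts.append("Explain your reasoning step by step, then answer.")
    # The reward would be the probability of "yes" in the answer to this question.
    parts.append("Is Image A better than Image B? Answer yes or no.")
    return "\n".join(parts)


query = RewardQuery(
    prompt="a red cube on a wooden table",
    candidate_image="gen_001.png",
    reference_image="ref_001.png",
    use_cot=True,
)
print(build_judge_input(query))
```

When reasoning is requested, the yes/no probability would have to be read after the generated reasoning rather than immediately after the question; exactly where it is read is a design choice this sketch leaves open.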

Importantly, the team tackled the issue of reward hacking: their scaled models maintained high reward variance during training, indicating robustness and the ability to produce diverse, high-quality outputs. The authors note that future work could extend this approach to other multimodal tasks, such as audio-to-video generation, and integrate more complex reasoning capabilities into the reward models. This research establishes scalability as a foundational principle for visual reward models, paving the way for more powerful and robust reward modeling in visual generation.
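The article treats sustained reward variance as evidence against reward hacking: if a generator collapses onto outputs that exploit the reward model, the rewards within a batch tend to bunch together. A minimal monitoring sketch, assuming per-batch reward lists are logged during RL fine-tuning; the function names, the `floor` threshold, and the example values are hypothetical, and low variance is only a heuristic warning sign, not proof of hacking.

```python
import statistics
from typing import List


def reward_variance(rewards: List[float]) -> float:
    """Population variance of the rewards within one batch of generations."""
    return statistics.pvariance(rewards)


def flag_possible_hacking(history: List[List[float]], floor: float) -> List[int]:
    """Return the training steps whose batch reward variance fell below `floor`."""
    return [step for step, batch in enumerate(history) if reward_variance(batch) < floor]


# Hypothetical logged rewards: two healthy batches, then one that has collapsed
# onto near-identical, near-maximal scores.
history = [
    [0.2, 0.6, 0.9, 0.4],
    [0.3, 0.7, 0.8, 0.5],
    [0.97, 0.98, 0.97, 0.98],
]
print(flag_possible_hacking(history, floor=0.001))  # prints [2]
```

In practice such a check would sit alongside qualitative review of samples, since a batch can also show low variance simply because the prompts in it are easy.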

👉 More information

🗞 RewardDance: Reward Scaling in Visual Generation

🧠 ArXiv: https://arxiv.org/abs/2509.08826