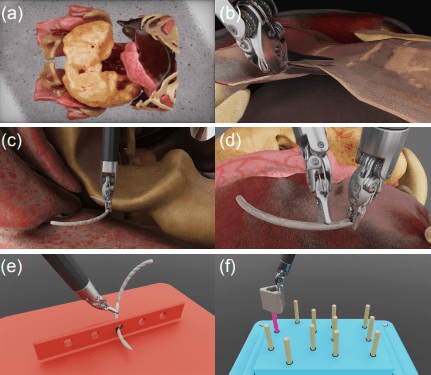

On April 21, 2025, researchers introduced SuFIA-BC, a framework that uses photorealistic digital twins to generate high-quality synthetic data for training visuomotor policies in surgical robotics. The work targets key challenges in behavior cloning by building realistic simulated surgical environments in which robots can learn autonomous skills.

Behavior cloning for surgery is difficult because of complex environments, scarce data, and calibration issues. SuFIA-BC is a visual behavior cloning framework for surgical autonomy that is integrated with photorealistic digital twins used to generate synthetic data. The evaluations show that current state-of-the-art models struggle with contact-rich surgical tasks, which points to the need for customized perception pipelines, control architectures, and larger-scale datasets tailored to surgical demands.
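To make the idea of behavior cloning concrete, here is a minimal sketch that treats it as plain supervised learning: a policy is fitted to pairs of observations and expert actions. Everything in it is an illustrative assumption, not the SuFIA-BC method: the one-dimensional "expert" rule, the linear policy, and the function names `collect_demonstrations`, `fit_policy`, and `policy` are all hypothetical stand-ins for the image-based policies and surgical demonstrations the paper works with.

```python
import random


def collect_demonstrations(n, seed=0):
    """Simulate a toy expert (assumption): action = 2.0 * observation + 1.0."""
    rng = random.Random(seed)
    observations = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    actions = [2.0 * o + 1.0 for o in observations]
    return observations, actions


def fit_policy(observations, actions):
    """Behavior cloning as regression: least-squares fit of a linear policy."""
    n = len(observations)
    mean_o = sum(observations) / n
    mean_a = sum(actions) / n
    cov = sum((o - mean_o) * (a - mean_a) for o, a in zip(observations, actions))
    var = sum((o - mean_o) ** 2 for o in observations)
    w = cov / var
    b = mean_a - w * mean_o
    return w, b


def policy(obs, w, b):
    """The cloned policy: map an observation to a predicted action."""
    return w * obs + b


obs, act = collect_demonstrations(200)
w, b = fit_policy(obs, act)
```

With noise-free demonstrations the fitted policy recovers the expert's rule almost exactly. Real surgical tasks are far harder: observations are camera images or point clouds, actions are multi-dimensional, and demonstrations are scarce, which is the gap synthetic data is meant to narrow.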

In recent years, surgical training has undergone a significant transformation with the integration of synthetic data and artificial intelligence (AI). Traditionally dependent on costly and limited physical models or cadavers, the medical community is now turning to digital solutions that offer scalability and accessibility.

Synthetic data, computer-generated data mimicking real-world scenarios, is at the forefront of this revolution. By creating virtual patient models, surgeons can practice on diverse and controlled scenarios without the constraints of physical resources. This approach reduces costs and enhances accessibility, making high-quality training more widely available.

Advanced AI models have further enhanced surgical simulations. PointNet, a deep learning architecture that operates directly on point cloud data, helps process the three-dimensional geometry that realistic surgical settings depend on. Denoising diffusion probabilistic models (DDPMs), a class of generative models, contribute by generating high-quality, realistic surgical scenarios that are valuable for training.
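The property that makes PointNet suitable for point clouds is that its output does not depend on the order in which points are listed. The sketch below illustrates that core idea only, under simplifying assumptions: a single shared linear layer with ReLU stands in for the network's per-point layers, the weights are random rather than learned, and the names `shared_point_features` and `global_feature` are hypothetical. It is not the full PointNet architecture.

```python
import random


def shared_point_features(point, weights):
    """Apply the same linear layer + ReLU to a single (x, y, z) point."""
    return [max(0.0, sum(w * c for w, c in zip(row, point))) for row in weights]


def global_feature(points, weights):
    """Max-pool each feature channel over all points (a symmetric function)."""
    per_point = [shared_point_features(p, weights) for p in points]
    return [max(channel) for channel in zip(*per_point)]


rng = random.Random(0)
# Assumption: 4 feature channels with random (untrained) weights.
weights = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(4)]
# A toy cloud of 50 random 3D points.
cloud = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(50)]

# The same points in a different order.
shuffled = cloud[:]
rng.shuffle(shuffled)
```

Because every point passes through the same function and the maximum is taken per channel, `global_feature(cloud, weights)` and `global_feature(shuffled, weights)` are identical, so a scene descriptor built this way is unaffected by how a sensor or simulator happens to order its points.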

While these technologies show promise, their integration into existing training systems is still evolving. They currently complement traditional methods rather than replace them entirely. Key challenges include ensuring the accuracy of synthetic data and covering a wide range of surgical cases to match real-world complexities.

The adoption of synthetic data and AI in surgical training could democratize access to high-quality education, particularly in regions with limited resources. This could lead to better-prepared surgeons and, by reducing errors, potentially to better patient outcomes. These models enhance training, but they may not yet replicate every nuance of real-world surgery.

Looking ahead, research is focusing on integrating haptic feedback for a more realistic touch sensation and expanding the variety of surgical procedures covered. The goal is to create adaptable AI models capable of real-time scenario adjustments during simulations.

Synthetic data and AI point to a promising future for surgical training, offering significant benefits even as challenges remain. As the technology evolves, it has the potential to reshape healthcare education and patient care globally.

👉 More information

🗞 SuFIA-BC: Generating High Quality Demonstration Data for Visuomotor Policy Learning in Surgical Subtasks

🧠 DOI: https://doi.org/10.48550/arXiv.2504.14857