Configuration optimisation presents a major challenge in machine learning, demanding careful coordination of numerous factors including model architecture, training strategies, and hyperparameters. Researchers Yuxing Lu from Peking University, Yucheng Hu from Southeast University, and Nan Sun from Huazhong University of Science and Technology, alongside colleagues, now present a new approach that moves beyond purely numerical methods by incorporating natural language feedback. Their work introduces Language-Guided Tuning, a framework which uses a multi-agent system to intelligently refine configurations through reasoning, employing textual gradients as qualitative signals to understand training dynamics and the relationships between different settings. This innovative method demonstrates substantial performance gains across a range of datasets, while also offering a level of interpretability often lacking in automated optimisation techniques, potentially streamlining the development of more effective machine learning models.

LLMs as Intelligent AutoML Pipeline Designers

This research explores a new approach to Automated Machine Learning (AutoML), leveraging Large Language Models (LLMs) not just for hyperparameter tuning, but as intelligent agents capable of designing entire machine learning pipelines. The team demonstrates that LLMs can learn how to optimize over time, understanding the optimization process itself through a combination of techniques including reflective evolution and textual gradients. Prompt engineering plays a crucial role in guiding the LLM’s behaviour and ensuring useful optimization suggestions. Evaluations on datasets including MNIST, CIFAR-10, CIFAR-100, wine quality data, and potable water data, as well as standard AutoML and Neural Architecture Search benchmarks, demonstrate that the LLM-based optimizers achieve state-of-the-art performance, improving optimization efficiency and generalizing well to new datasets and tasks. The LLMs learn from past experiences and adapt their strategies, showcasing the potential to significantly advance AutoML and make machine learning more accessible.

Language Models Guide Machine Learning Configuration

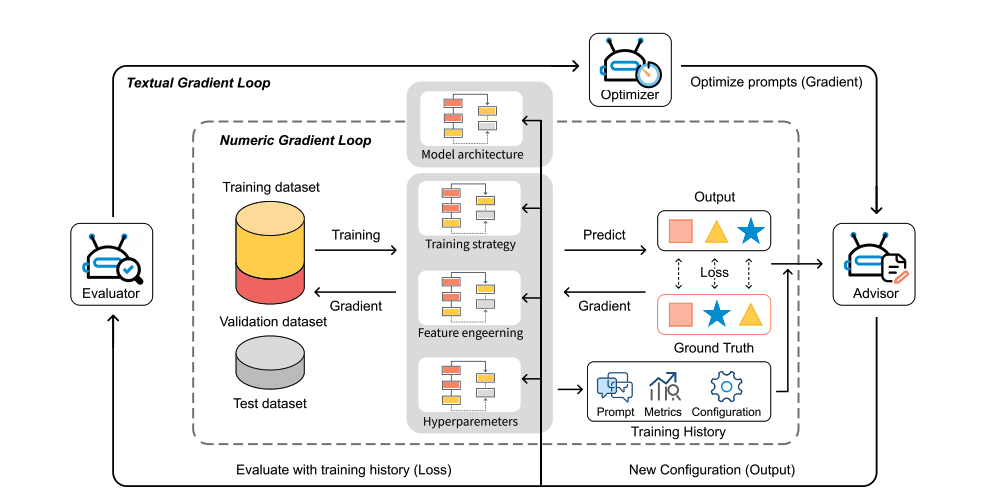

Researchers have developed Language-Guided Tuning (LGT), a novel methodology designed to overcome limitations in current machine learning configuration optimization. Traditional methods often treat model architecture, training strategies, and hyperparameters in isolation, hindering performance, while automated approaches struggle with adaptability. LGT addresses these challenges by employing a multi-agent system powered by LLMs, enabling coordinated reasoning across multiple dimensions. At the heart of LGT is a collaborative framework consisting of three specialized agents: an Advisor proposing changes, an Evaluator assessing impact, and an Optimizer refining prompts, creating a self-improving feedback loop. This innovative approach integrates textual feedback alongside numerical optimization signals, allowing LGT to reason about the underlying causes of performance issues and achieve substantial improvements in accuracy and error reduction across six diverse datasets.
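The feedback loop between the three agents can be pictured with a small sketch. This is an illustration only, not the authors' implementation: the LLM calls are replaced by deterministic stand-in functions, and every name here (`Config`, `LoopState`, `evaluator`, `advisor`, `optimizer`, `run_round`, the loss thresholds) is a hypothetical choice made for the example.

```python
from dataclasses import dataclass, field, replace

BASE_PROMPT = "Suggest one configuration change."  # hypothetical starting prompt


@dataclass(frozen=True)
class Config:
    # Hypothetical configuration with one training and one architecture knob.
    learning_rate: float = 0.1
    hidden_units: int = 32


@dataclass
class LoopState:
    config: Config
    advisor_prompt: str = BASE_PROMPT
    history: list = field(default_factory=list)


def evaluator(train_loss, val_loss):
    """Stand-in for the Evaluator agent: turns metrics into textual feedback."""
    if val_loss > train_loss * 1.5:
        return "Validation loss is far above training loss; the model may be overfitting."
    if train_loss > 1.0:
        return "Training loss is still high; the model may be underfitting."
    return "Training looks stable."


def advisor(prompt, feedback, config):
    """Stand-in for the Advisor agent: proposes a new configuration.

    A real agent would send `prompt` and `feedback` to an LLM; this stub
    only inspects keywords in the feedback.
    """
    if "overfitting" in feedback:
        return replace(config, hidden_units=max(8, config.hidden_units // 2))
    if "underfitting" in feedback:
        return replace(config, hidden_units=config.hidden_units * 2)
    return config


def optimizer(feedback):
    """Stand-in for the Optimizer agent: refines the Advisor's prompt."""
    return f"{BASE_PROMPT} Latest feedback: {feedback}"


def run_round(state, train_loss, val_loss):
    """One pass of the loop: evaluate, advise, then refine the prompt."""
    feedback = evaluator(train_loss, val_loss)
    new_config = advisor(state.advisor_prompt, feedback, state.config)
    state.history.append((state.config, new_config, feedback))
    state.config = new_config
    state.advisor_prompt = optimizer(feedback)
    return state
```

Calling `run_round(LoopState(Config()), train_loss=0.2, val_loss=0.9)` would flag the gap between the two losses, shrink `hidden_units`, and carry the feedback text into the next round's prompt. Keeping the textual feedback in `history` next to each configuration change is what lets the loop explain itself afterwards.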

Textual Gradients Guide Machine Learning Configuration

A new framework, Language-Guided Tuning (LGT), significantly improves the process of configuring machine learning models. Traditional optimization methods often treat aspects like model architecture and training strategies as independent variables, limiting their effectiveness, while automated approaches struggle to adapt. LGT overcomes these limitations by employing a multi-agent system that intelligently optimizes configurations through natural language reasoning. The core of LGT lies in its use of textual gradients, which provide semantic understanding of how training is progressing and how different configuration choices interact.

This allows LGT to coordinate three specialized agents: an Advisor proposing changes, an Evaluator assessing progress, and an Optimizer refining decisions, creating a self-improving feedback loop. Across six diverse datasets, LGT consistently outperformed established optimization methods, with gains of up to 23.3% in classification accuracy and reductions of up to 49.3% in regression error. Beyond performance, LGT offers transparent reasoning for every configuration change, fostering trust and understanding.
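One way to picture "transparent reasoning for every configuration change" is a log that stores each change together with the natural language rationale behind it. The sketch below is a hypothetical illustration, not the format used in the paper: `ChangeRecord`, `diff_configs`, and `format_record` are names invented for this example, and configurations are assumed to be plain dictionaries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeRecord:
    # One configuration change plus the textual reason given for it.
    epoch: int
    parameter: str
    old_value: object
    new_value: object
    rationale: str


def diff_configs(epoch, old, new, rationale):
    """Return one record per key whose value differs between old and new."""
    return [
        ChangeRecord(epoch, key, old.get(key), new[key], rationale)
        for key in sorted(new)
        if old.get(key) != new[key]
    ]


def format_record(record):
    """Render a record as a single human-readable log line."""
    return (
        f"[epoch {record.epoch}] {record.parameter}: "
        f"{record.old_value} -> {record.new_value} ({record.rationale})"
    )
```

For instance, comparing `{"lr": 0.1, "dropout": 0.0}` with `{"lr": 0.05, "dropout": 0.0}` at epoch 3 would yield a single record for `lr`, leaving the unchanged `dropout` out of the log.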

Language Guidance Boosts Machine Learning Optimisation

This paper introduces Language-Guided Tuning (LGT), a novel framework designed to improve the optimisation of machine learning configurations. LGT employs a multi-agent system, comprising an Advisor, Evaluator, and Optimizer, that leverages textual gradients to understand the relationships between different configuration settings. This approach moves beyond traditional methods that typically treat each setting independently, allowing for a more coordinated and intelligent search for optimal values. Results demonstrate that LGT achieves substantial performance gains across six diverse datasets, with improvements of up to 23.3% in accuracy and 49.3% reduction in error compared to existing techniques like Neural Architecture Search and Bayesian Optimisation. The framework also exhibits dynamic convergence, adapting at the epoch level, and provides enhanced interpretability through natural language reasoning. Future work could explore methods to mitigate limitations related to Large Language Models and extend the framework to a wider range of machine learning tasks.

👉 More information

🗞 Language-Guided Tuning: Enhancing Numeric Optimization with Textual Feedback

🧠 ArXiv: https://arxiv.org/abs/2508.15757