For decades, computer vision researchers have worked to build machines capable of fully understanding the visual world, but progress has often been fragmented across specialised tasks. Andrei-Ştefan Bulzan and Cosmin Cernăzan-Glăvan, from the Department of Computer and Information Technology at Universitatea Politehnică Timişoara, present a comprehensive survey charting the evolution and increasing convergence of these vision subdomains. Their work introduces the concept of Open World Detection (OWD), a unifying framework designed to encompass class-agnostic and broadly applicable detection methods. The survey highlights how techniques ranging from saliency detection to out-of-distribution analysis are converging, paving the way for more versatile and robust perception systems that can identify anything, anywhere, and ultimately reach a more complete understanding of visual scenes.

Multimodal Benchmarks and Datasets for LLMs

The survey covers datasets, models, and techniques for large language models (LLMs) applied to multimodal tasks. Key datasets include ADE20K, Places, the SUN Database, COCO, and ImageNet, which provide extensive visual data for training and evaluation, alongside newer resources such as MMMU and List Items One By One that are designed specifically for multimodal LLMs. Several model architectures are central to this work, including Vision Transformers and multimodal LLMs, with GPT-4 and GPT-4V serving as important baselines for comparison. Specific techniques under investigation include FILIP, which focuses on fine-grained image-language pre-training, and Ferret, which is designed for comprehensive visual grounding.

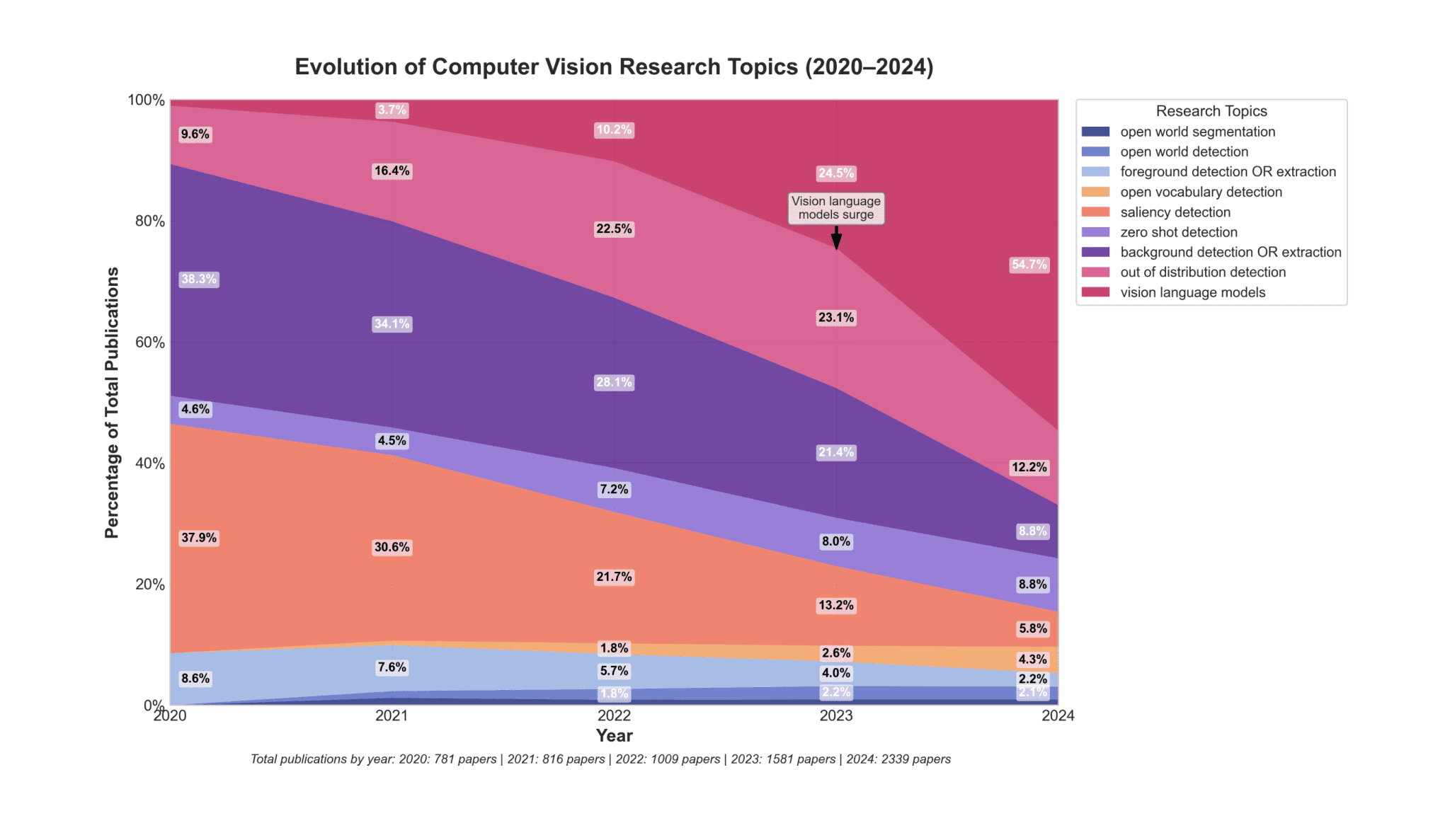

Object detection frameworks such as You Only Look Once (YOLO) and Mask R-CNN are frequently employed, alongside attention mechanisms and contrastive learning, which is particularly valuable for zero-shot detection. Researchers are especially interested in open-vocabulary detection and segmentation, which let systems identify objects beyond a predefined set of categories. Techniques such as Region-of-Interest prompting, Set-of-Mark Prompting, and Attention Prompting guide LLMs in understanding visual information. Innovations like Specificity-Preserving RGB-D Saliency Detection and Quadrangle Attention further refine these capabilities, while Generalized Decoding aims to improve overall performance.

The research focuses on core tasks including object detection, semantic segmentation, scene understanding, visual grounding, and out-of-distribution (OOD) detection. Saliency detection, visual question answering, image captioning, and reasoning are also key areas of investigation, and they increasingly use RGB-D data, which combines standard images with depth information.

The survey traces the historical development of foundational vision subdomains, such as saliency detection and foreground/background separation, before arriving at the core of OWD and its reliance on Vision-Language Models (VLMs) to interpret visual information. The authors explore the overlap between these subdomains and show how advances in one area often benefit others. They highlight the crucial role of large-scale datasets and large multimodal models in enabling systems to perform multiple vision tasks simultaneously, which points to a clear trend towards holistic approaches and more generalized, adaptable models.
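To make the contrastive, open-vocabulary matching mentioned above more concrete, here is a minimal sketch in Python. It assumes that an image region and a set of free-text labels have already been embedded into a shared vector space by some vision-language encoder; the toy vectors, the function names `cosine_similarity` and `classify_region`, and the `min_similarity` threshold are all hypothetical and are not taken from the survey.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def classify_region(
    region_embedding: List[float],
    text_embeddings: Dict[str, List[float]],
    min_similarity: float = 0.5,  # assumed threshold, chosen for illustration
) -> Tuple[str, Dict[str, float]]:
    """Pick the text label whose embedding is closest to the region embedding.

    If no label is similar enough, the region is reported as "unknown",
    which is one simple way to avoid forcing a known class onto a novel object.
    """
    scores = {
        label: cosine_similarity(region_embedding, embedding)
        for label, embedding in text_embeddings.items()
    }
    best_label = max(scores, key=scores.get)
    if scores[best_label] < min_similarity:
        return "unknown", scores
    return best_label, scores


# Toy embeddings standing in for the output of a real text encoder.
labels = {"cat": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0]}

label_a, _ = classify_region([0.9, 0.1, 0.0], labels)
label_b, _ = classify_region([0.0, 0.0, 1.0], labels)
print(label_a, label_b)
```

Because the label set is just a dictionary of text embeddings, new categories can be added at inference time without retraining, which is the property that makes this style of matching attractive for open-vocabulary settings.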

To provide a complete overview, the authors review the datasets and benchmarks essential for training and evaluating OWD systems, mapping the landscape of available data and identifying key resources. They also delineate the major eras of visual detection, highlighting paradigm shifts and the evolution of methodologies. This historical perspective gives a structured view of the current state of the field and informs future research directions, articulating a vision for a unified domain of perception in which the boundaries between different vision tasks blur. The framework aims at systems that perceive the visual world in a more comprehensive and adaptable manner, moving beyond the limitations of specialized algorithms.

The historical development of vision subdomains, from saliency detection to foreground/background separation, reveals a growing trend towards holistic perception. Early work in saliency detection focused on identifying the single most salient object in a scene, but more recent methods, such as PiCANet, highlight multiple objects at varying levels of importance.

These methods utilize pixel-wise contextual attention mechanisms and pyramid attention structures to achieve more comprehensive scene understanding. Generalized Co-Salient Object Detection allows for the detection of common salient objects across images, even with noise and uncertainty, demonstrating progress towards handling real-world complexity. The field increasingly views saliency detection as a component of broader object detection, integrating it into comprehensive vision-language models. Foreground/background separation, a foundational technique for surveillance and object tracking, also aligns with the goals of OWD by focusing on detecting all objects within a scene.

Initial methods, like the Running Gaussian Average proposed in 1997, modeled each pixel as a single Gaussian distribution but struggled with complex backgrounds. Later innovations, such as the Temporal Median Filter, improved robustness by using a buffer of recent frames, though this required significant memory. The Mixture of Gaussians (MoG) model, introduced in 1999, addressed dynamic backgrounds by modeling each pixel as a mixture of Gaussian distributions, which allowed it to adapt to changing scenes.

The work highlights how foundational areas like saliency detection, foreground/background separation, and zero-shot detection contribute to building systems capable of generalizing to previously unseen objects and scenarios. It shows that progress in large multimodal models, particularly those integrating vision and language, is enabling more effective detection by combining semantic understanding with flexible representation. The authors conclude that OWD is poised to become increasingly important for applications demanding robustness and adaptability to changing environments.
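The two simplest background models described above, the running Gaussian average and the temporal median filter, can be sketched in a few lines of Python. This is an illustrative sketch only: frames are flat lists of grayscale values, and the class name, the function name, the learning rate `alpha`, the threshold `k`, and the initial variance are assumptions chosen for the example, not values from the survey or the original papers.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass
class RunningGaussianBackground:
    """Per-pixel single-Gaussian background model (illustrative sketch)."""

    mean: List[float]
    var: List[float]
    alpha: float = 0.05  # learning rate (assumed value)
    k: float = 2.5       # foreground threshold in standard deviations (assumed)

    @classmethod
    def from_first_frame(cls, frame: List[float], initial_var: float = 25.0):
        """Initialise the model from the first frame with a fixed variance."""
        return cls(mean=[float(p) for p in frame], var=[initial_var] * len(frame))

    def apply(self, frame: List[float]) -> List[bool]:
        """Return a foreground mask (True = foreground), then update the model."""
        mask = []
        for i, pixel in enumerate(frame):
            diff = pixel - self.mean[i]
            # Foreground if the pixel lies more than k standard deviations
            # from the current mean, i.e. diff^2 > k^2 * variance.
            mask.append(diff * diff > (self.k ** 2) * self.var[i])
            # Exponential running update of mean and variance for every pixel.
            self.mean[i] += self.alpha * diff
            self.var[i] = (1 - self.alpha) * self.var[i] + self.alpha * diff * diff
        return mask


def temporal_median_background(frames: List[List[float]]) -> List[float]:
    """Estimate the background as the per-pixel median over a frame buffer."""
    return [statistics.median(pixel_history) for pixel_history in zip(*frames)]


model = RunningGaussianBackground.from_first_frame([10, 10, 10, 10])
mask = model.apply([10, 11, 10, 200])  # the last pixel changes abruptly
background = temporal_median_background([[1, 5], [2, 9], [3, 7]])
```

The contrast in memory use is visible in the code: the running Gaussian model stores only a mean and a variance per pixel, while the median filter has to keep the whole buffer of recent frames. A Mixture of Gaussians model would extend the first approach by keeping several weighted Gaussians per pixel instead of one.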

While acknowledging promising directions, the survey also points to ongoing challenges in areas such as improving spatial localization, incorporating 3D information, and scaling models efficiently for resource-constrained settings. Future work, they suggest, should continue to explore both the fundamental mechanisms of visual perception and the holistic, multimodal paradigms that are reshaping the scope of computer vision. The review is based on existing literature and did not involve the generation or analysis of new datasets.

👉 More information

🗞 Towards Open World Detection: A Survey

🧠 ArXiv: https://arxiv.org/abs/2508.16527