The increasing need for data privacy presents significant challenges for collaborative machine learning, particularly when dealing with sensitive information distributed across multiple devices. Atit Pokharel, Ratun Rahman, Shaba Shaon, and colleagues from The University of Alabama in Huntsville address this issue by investigating a new approach to secure federated quantum learning. Their research explores how inherent noise within current quantum computers can be strategically employed to protect model information during training, offering a mechanism for differential privacy without requiring the exchange of raw data. This innovative method tunes noise levels to achieve specific privacy targets, balancing security with the need for accurate results, and demonstrates a robust defence against adversarial attacks, paving the way for reliable and secure quantum computing applications.

Noise Empowers Privacy in Quantum Federated Learning

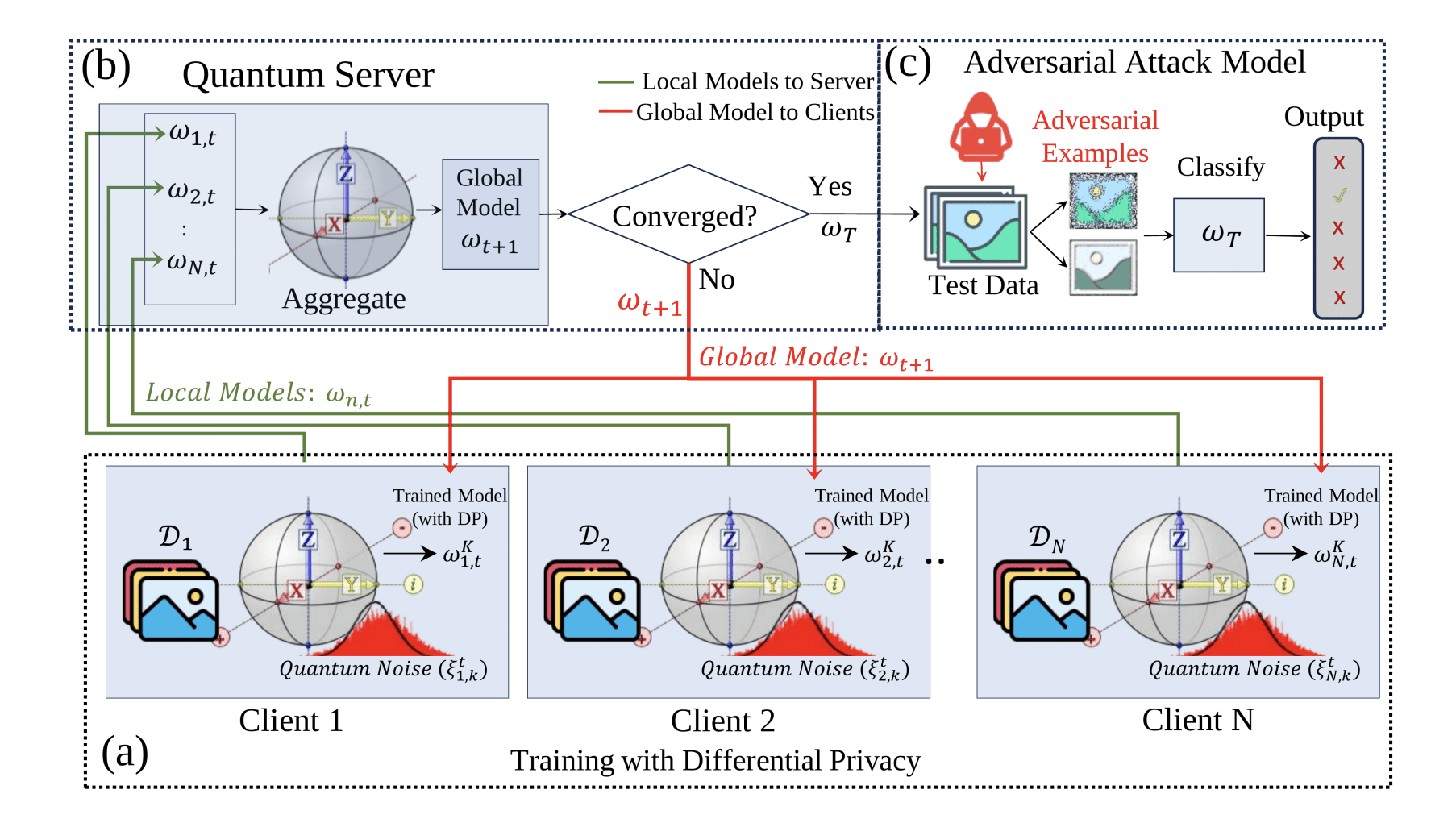

Researchers have developed a novel framework for quantum federated learning (QFL) that leverages inherent noise within near-term quantum devices to enhance data privacy and model security. This approach, termed differentially-private QFL (DP-QFL), capitalizes on the randomness already present in noisy intermediate-scale quantum (NISQ) devices, using it as a mechanism for differential privacy without requiring additional artificial noise. Federated learning, a distributed machine learning technique, allows model training across multiple devices holding local data samples, circumventing the need to centralise sensitive information. QFL extends this paradigm to the quantum realm, potentially unlocking advantages in computational power and model expressiveness, but it introduces new privacy challenges due to the nature of quantum information exchange. The team demonstrates that the level of noise can be tuned by adjusting two parameters: the number of measurement shots and the strength of depolarizing channels, which are quantum operations that introduce errors. Tuning these parameters lets them reach a desired privacy level while working within the limitations of current quantum hardware. This framework addresses a critical vulnerability in QFL systems, where shared model updates can be exploited by adversaries to infer sensitive information or compromise model performance.
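To make the two tuning knobs concrete, here is a minimal Python sketch of how the shot count and the depolarizing strength affect a single measured value. It is an illustration under stated assumptions, not the authors' implementation: it models one qubit measured in the Pauli-Z basis, and the function names `noisy_expectation` and `estimator_std` are hypothetical. It relies only on the textbook facts that a depolarizing channel with probability `p` scales the expectation value by `(1 - p)`, and that an estimate from `n` shots has variance `(1 - mean^2) / n`.

```python
import numpy as np


def noisy_expectation(true_z, shots, depol_p, rng):
    """Estimate <Z> for one qubit from a finite number of shots.

    true_z  : ideal expectation value in [-1, 1] (assumed known here)
    shots   : number of measurement repetitions
    depol_p : depolarizing probability in [0, 1]
    rng     : numpy random Generator
    """
    # Depolarizing channel: rho -> (1 - p) * rho + p * I / 2,
    # which shrinks <Z> toward zero by a factor (1 - p).
    damped = (1.0 - depol_p) * true_z
    # Each shot yields +1 with this probability, otherwise -1.
    p_plus = (1.0 + damped) / 2.0
    plus_count = rng.binomial(shots, p_plus)
    # Sample mean of the +1 / -1 outcomes.
    return (2.0 * plus_count - shots) / shots


def estimator_std(true_z, shots, depol_p):
    """Standard deviation of the shot-based estimate above."""
    damped = (1.0 - depol_p) * true_z
    return np.sqrt((1.0 - damped**2) / shots)


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    for shots in (100, 1000, 10000):
        for depol_p in (0.0, 0.2):
            est = noisy_expectation(0.6, shots, depol_p, rng)
            std = estimator_std(0.6, shots, depol_p)
            print(f"shots={shots:6d} p={depol_p:.1f} "
                  f"estimate={est:+.3f} std={std:.4f}")
```

In this toy model, fewer shots widen the spread of the value a client would share, while a stronger depolarizing channel both pulls the value toward zero and increases its randomness. How such noise maps onto a formal privacy guarantee is the subject of the paper and is not derived here.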

The team implemented a quantum-based adversarial attack, specifically a targeted attack designed to induce misclassification, to simulate realistic threat scenarios, and then evaluated the resilience of DP-QFL against it. Results demonstrate a tunable trade-off between privacy and robustness, allowing for optimization based on specific security requirements and hardware constraints. Higher privacy levels generally correspond to a slight reduction in model accuracy, a common characteristic of privacy-preserving techniques. Extensive simulations assessed training performance under varying privacy budgets, each achieved through a different level of quantum noise and quantified by the epsilon parameter of differential privacy, a measure of privacy loss. The simulations used established datasets such as the MNIST handwritten digit dataset and the Fashion-MNIST dataset for comparison. By harnessing existing noise characteristics, the team avoids the need for complex and resource-intensive privacy-preserving techniques often employed in classical machine learning, such as adding Gaussian noise or employing secure multi-party computation. The findings have significant implications for reliable quantum computing applications, paving the way for secure and collaborative training of quantum models in a distributed network of devices, particularly in sectors like healthcare and finance where data privacy is paramount.
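For readers unfamiliar with the epsilon parameter, the standard definition of differential privacy is given below for reference. This is the general textbook form, stated as an assumption about the setting: the summary above does not say whether the paper uses the pure variant or the approximate variant with an additional parameter $\delta$, so $\delta$ is included here for generality. A randomized mechanism $\mathcal{M}$ is $(\varepsilon, \delta)$-differentially private if, for all pairs of neighbouring datasets $D$ and $D'$ that differ in a single record and for every set of outputs $S$,

$$
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta .
$$

A smaller $\varepsilon$ means the outputs on $D$ and $D'$ are harder to tell apart, which corresponds to a stronger privacy guarantee; setting $\delta = 0$ recovers pure $\varepsilon$-differential privacy.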


👉 More information

🗞 Differentially Private Federated Quantum Learning via Quantum Noise

🧠 ArXiv: https://arxiv.org/abs/2508.20310