Predicting continuous properties accurately remains a key challenge in numerous scientific and engineering fields, yet deep-learning methods often struggle when labelled data is limited. Kevin Tirta Wijaya from the Max Planck Institute for Informatics, alongside Michael Sun and Minghao Guo from MIT, and colleagues, present a new approach to refine regression models using readily available expert knowledge in the form of pairwise rankings. Their method, called RankRefine, improves predictions by intelligently combining the output of an existing regression model with estimates derived from ranking comparisons, crucially without requiring any further training of the original model. This technique achieves significant gains, demonstrated by up to a 10% reduction in error when predicting molecular properties using just a small number of comparisons sourced from a general-purpose language model, offering a practical and broadly applicable solution for enhancing regression accuracy in data-scarce scenarios.

This research introduces RankRefine, a model-agnostic and easily integrated post-hoc method that improves regression accuracy by incorporating expert knowledge derived from pairwise rankings. Given a query item and a small reference set with known properties, RankRefine combines the output of an existing regression model with a rank-based estimate using inverse-variance weighting, crucially without requiring any model retraining. When applied to molecular property prediction, RankRefine achieves up to a 10% relative reduction in mean absolute error, utilising only 20 pairwise comparisons obtained from a general-purpose large language model without any finetuning. This demonstrates that rankings provided by either human experts or general-purpose large language models are sufficient for impactful improvement.

Rank Refinement with Pairwise Expert Knowledge

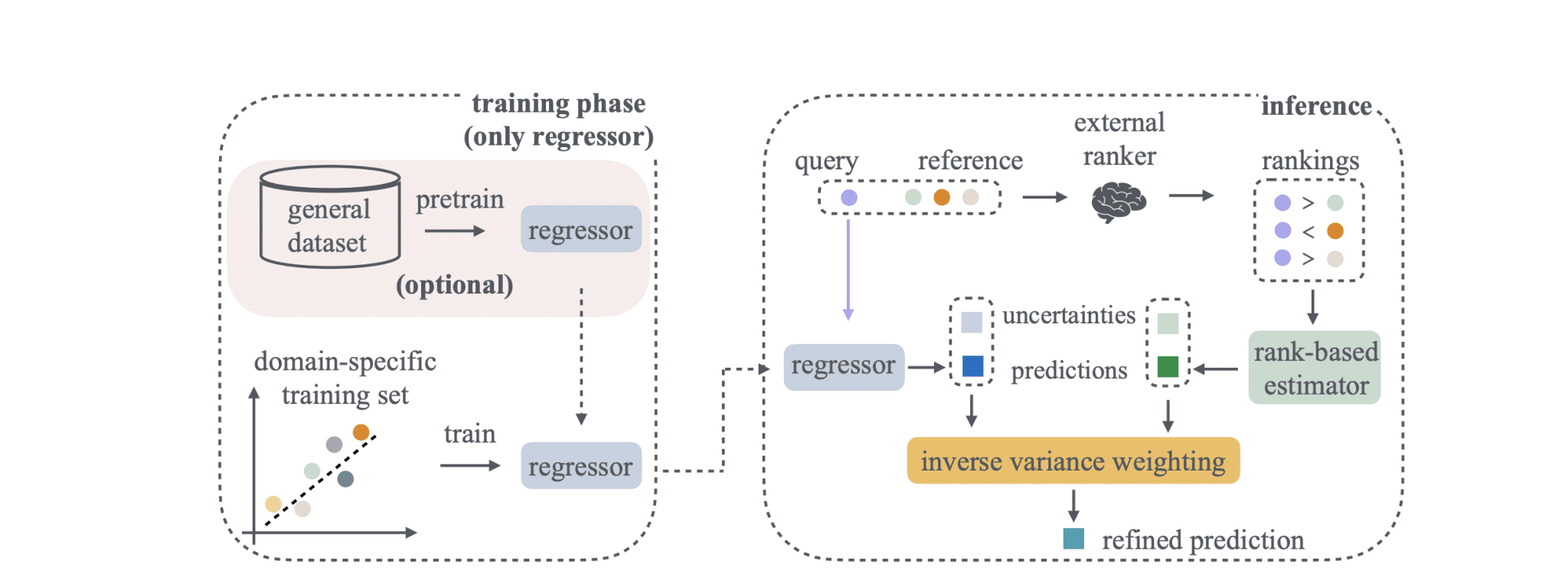

RankRefine is a post-hoc method that improves regression predictions by incorporating expert knowledge expressed as pairwise rankings, addressing the shortage of labeled data in many scientific fields. It combines the output of a base regression model with estimates derived from ranking comparisons and requires no retraining of the original model, so it applies across diverse tasks. Given a query item and a small set of labeled references, a ranker determines the relative order between the query and each reference; these comparisons define a negative log-likelihood that, when minimized, yields a rank-based property estimate. This rank-based estimate is then fused with the base regressor’s prediction using inverse-variance weighting, which gives more weight to the more reliable estimate, producing a refined prediction without altering the underlying regression model.
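The rank-based step can be illustrated with a minimal sketch. It assumes a logistic (Bradley-Terry-style) model for the probability that the query outranks a reference; that model, the `scale` parameter, and the function name `rank_based_estimate` are illustrative assumptions and are not taken from the paper.

```python
import math


def rank_based_estimate(ref_values, query_is_higher, scale=1.0, iters=60):
    """Estimate the query's property value from pairwise comparisons.

    Assumed model (hypothetical, for illustration only):
        P(query > ref_i) = sigmoid((y - ref_i) / scale)
    The estimate is the y that minimizes the resulting negative
    log-likelihood over all comparisons.
    """

    def sigmoid(x):
        # tanh form avoids overflow for large |x|
        return 0.5 * (1.0 + math.tanh(0.5 * x))

    def nll_gradient(y):
        # d/dy of the negative log-likelihood: sum of (p_i - t_i) / scale
        g = 0.0
        for ref, higher in zip(ref_values, query_is_higher):
            p = sigmoid((y - ref) / scale)
            g += (p - (1.0 if higher else 0.0)) / scale
        return g

    # The negative log-likelihood is convex in y, so its gradient is
    # monotone and a bisection search on the gradient's sign finds the minimum.
    lo = min(ref_values) - 10.0 * scale
    hi = max(ref_values) + 10.0 * scale
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if nll_gradient(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# Example: the ranker says the query is above 1.0 and 2.0, below 3.0 and 4.0.
estimate = rank_based_estimate([1.0, 2.0, 3.0, 4.0], [True, True, False, False])
```

In this symmetric example the estimate falls midway between the references the query outranks and those it does not. The search interval is bounded, so a query ranked above (or below) every reference still receives a finite estimate.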

Because the method works independently of the specific regression model used, it suits low-data, few-shot, and meta-learning scenarios where labeled data is scarce. In the reported experiments, RankRefine achieves up to a 10% relative reduction in mean absolute error using only 20 pairwise comparisons obtained from a general-purpose large language model, which keeps its computational demands small. The researchers validated RankRefine on both synthetic and real-world benchmarks, including multiple molecular property prediction tasks, and observed consistent improvements in predictive performance. Their analysis shows a strong correlation between ranking quality and the size of the improvement, underscoring the potential of large language models and other expert-informed rankers as complements to data-scarce regression models. The researchers provide source code to support further exploration and application of the method.

RankRefine Boosts Prediction Accuracy with Pairwise Comparisons

RankRefine improves the accuracy of property prediction even when limited training data is available. The technique refines regression models by incorporating expert knowledge conveyed through pairwise rankings, using comparisons between items rather than relying solely on absolute values. At its core, RankRefine combines the prediction of a base regression model with a rank-based estimate, weighting each in inverse proportion to its variance. Experiments confirm that any informative ranker with finite variance lowers the expected error after this fusion, as predicted by the theoretical bounds.
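The standard inverse-variance weighting rule makes the variance argument concrete. The notation below is an assumption introduced for illustration: $\hat{y}_{\text{reg}}$ and $\hat{y}_{\text{rank}}$ denote the regressor's and the rank-based estimates, with variances $\sigma^2_{\text{reg}}$ and $\sigma^2_{\text{rank}}$, and the two estimates are assumed to be unbiased and independent.

$$
\hat{y}_{\text{fused}} = \frac{\sigma^2_{\text{rank}}\,\hat{y}_{\text{reg}} + \sigma^2_{\text{reg}}\,\hat{y}_{\text{rank}}}{\sigma^2_{\text{reg}} + \sigma^2_{\text{rank}}},
\qquad
\sigma^2_{\text{fused}} = \frac{\sigma^2_{\text{reg}}\,\sigma^2_{\text{rank}}}{\sigma^2_{\text{reg}} + \sigma^2_{\text{rank}}}
$$

Since $\sigma^2_{\text{rank}} / (\sigma^2_{\text{reg}} + \sigma^2_{\text{rank}}) < 1$ whenever $\sigma^2_{\text{reg}} > 0$ and $\sigma^2_{\text{rank}}$ is finite, it follows under these assumptions that $\sigma^2_{\text{fused}} < \sigma^2_{\text{reg}}$. As $\sigma^2_{\text{rank}} \to \infty$, the fused estimate reduces to the regressor's prediction.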

To validate this, the team ran simulations with idealized predictions; the observed improvements matched the prescribed target values, confirming the correctness of the fusion rule under its assumptions. Across nine molecular datasets and three tabular regression tasks, RankRefine consistently lowered prediction errors, even when the ranker achieved only 55% accuracy and only 10 reference comparisons were used. Increasing the number of comparisons to 20 generally yielded the best results, with diminishing returns beyond that point. The method also generalizes beyond molecular property prediction, delivering substantial improvements in areas such as crop yield prediction, student performance assessment, and international education cost estimation.
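A toy simulation in the same spirit can be sketched as follows. It is not the authors' experiment: the Gaussian noise model, the noise levels, and the names `fuse` and `simulate` are assumptions chosen for illustration, and the rank-based estimate is replaced by a noisy stand-in rather than one derived from actual comparisons.

```python
import random


def fuse(y_reg, var_reg, y_rank, var_rank):
    """Inverse-variance weighted fusion of two estimates of the same value."""
    w_reg = 1.0 / var_reg
    w_rank = 1.0 / var_rank
    fused = (w_reg * y_reg + w_rank * y_rank) / (w_reg + w_rank)
    fused_var = 1.0 / (w_reg + w_rank)
    return fused, fused_var


def simulate(n=20000, sd_reg=1.0, sd_rank=2.0, seed=0):
    """Compare mean absolute error before and after fusion.

    Both estimates are simulated as the true value plus independent,
    zero-mean Gaussian noise (an assumption of this sketch).
    """
    rng = random.Random(seed)
    abs_err_reg = 0.0
    abs_err_fused = 0.0
    for _ in range(n):
        y_true = rng.uniform(-5.0, 5.0)
        y_reg = y_true + rng.gauss(0.0, sd_reg)
        y_rank = y_true + rng.gauss(0.0, sd_rank)
        fused, _ = fuse(y_reg, sd_reg ** 2, y_rank, sd_rank ** 2)
        abs_err_reg += abs(y_reg - y_true)
        abs_err_fused += abs(fused - y_true)
    return abs_err_reg / n, abs_err_fused / n


mae_reg, mae_fused = simulate()
print(f"MAE regressor only: {mae_reg:.3f}, MAE after fusion: {mae_fused:.3f}")
```

Even though the simulated rank-based estimate is twice as noisy as the regressor here, the fused error comes out lower, which is the qualitative behavior the variance argument predicts when both estimates are unbiased and independent.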

Rankings Boost Regression with Limited Data

RankRefine, a new post-hoc method, improves the accuracy of regression models, particularly when limited data is available. It incorporates expert knowledge in the form of pairwise rankings, which simply indicate which of two items has the higher or lower value of a property, and combines this information with the predictions of an existing regression model. This fusion, achieved through inverse-variance weighting, lowers the expected error whenever the ranking information is reasonably reliable. Experiments across diverse datasets, including molecular property prediction and tabular data, show consistent improvements with RankRefine, even when ranking accuracy is modest. Notably, the method also worked with rankings generated by a large language model, achieving measurable reductions in prediction error for properties related to drug absorption, distribution, metabolism, and excretion.

👉 More information

🗞 Post Hoc Regression Refinement via Pairwise Rankings

🧠 ArXiv: https://arxiv.org/abs/2508.16495