Knowledge distillation compresses large, complex machine learning models by training a smaller model to reproduce their behavior, enabling deployment on devices with limited computing power. Chen-Yu Liu from Quantinuum, Kuan-Cheng Chen from Imperial College London, and Keisuke Murota from Quantinuum, alongside Samuel Yen-Chi Chen from Brookhaven National Laboratory and Enrico Rinaldi from Quantinuum, present a new approach to this technique called Quantum Relational Knowledge Distillation. The team extends existing relational knowledge distillation methods with principles from quantum mechanics, mapping data features into a quantum Hilbert space in which the relationships between data points are compared. This lets the distillation process capture more nuanced connections between data points, and it consistently improves the performance of smaller “student” models across a range of vision and language tasks. Crucially, deployment remains fully classical: the resulting models run on standard hardware, and quantum resources are used only during training. The authors present this as the first demonstration that quantum techniques can enhance knowledge distillation without requiring quantum computers at deployment time.

Quantum Kernels Distill Compact Machine Learning Models

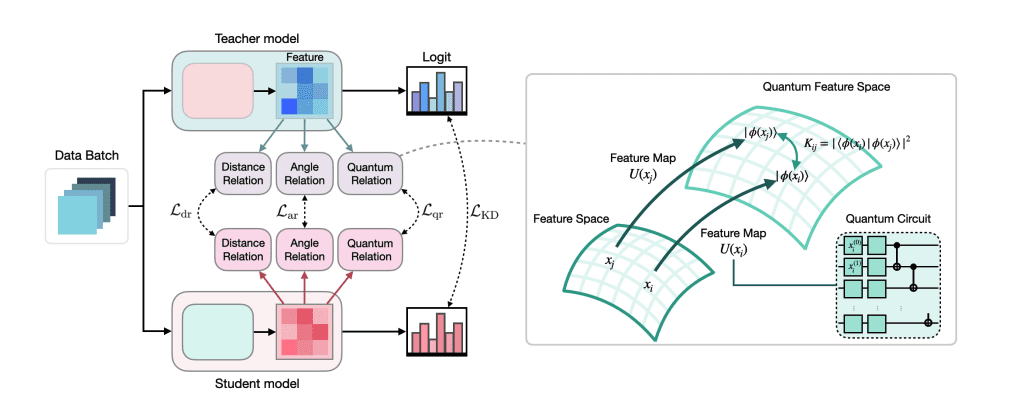

Researchers have developed Quantum Relational Knowledge Distillation (QRKD), a technique for compressing large machine learning models so they can run efficiently on devices with limited computing resources. QRKD begins by mapping data features into a quantum Hilbert space. This is a high-dimensional space in which the connections between data points can be represented more richly. The mapping relies on quantum kernels, which are functions that measure the similarity between two data points, typically as the overlap of their quantum states in that space.
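The following minimal NumPy sketch makes the idea of a quantum kernel concrete by simulating one on a classical computer. It assumes a simple angle encoding, with one qubit per feature and each qubit rotated by RY(x_i). It also assumes the common fidelity kernel k(x, y) = |⟨φ(x)|φ(y)⟩|². The encoding circuit and the function names are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def angle_encode(x):
    """Assumed encoding: map a real feature vector x (one feature per qubit)
    to the product state prod_i RY(x_i)|0>, where
    RY(x_i)|0> = cos(x_i/2)|0> + sin(x_i/2)|1>."""
    state = np.array([1.0])
    for xi in x:
        qubit = np.array([np.cos(xi / 2), np.sin(xi / 2)])
        state = np.kron(state, qubit)  # Hilbert-space dimension doubles per qubit
    return state

def quantum_kernel(x, y):
    """Fidelity kernel k(x, y) = |<phi(x)|phi(y)>|^2 (noiseless simulation)."""
    return float(np.abs(np.vdot(angle_encode(x), angle_encode(y))) ** 2)

def gram_matrix(X):
    """Pairwise kernel matrix for a batch of feature vectors (rows of X)."""
    n = len(X)
    K = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            K[i, j] = quantum_kernel(X[i], X[j])
    return K

# Four features -> four qubits -> a 16-dimensional statevector per data point.
X = np.array([[0.1, 0.5, 1.0, 2.0],
              [0.1, 0.5, 1.0, 2.0],   # duplicate of row 0: kernel value 1
              [1.5, 0.2, 0.3, 0.0]])
K = gram_matrix(X)
print(K.shape)  # (3, 3)
```

This encoding produces unentangled product states, so the kernel reduces to the closed form ∏ cos²((x_i − y_i)/2). Practical quantum kernels usually add entangling gates, and that is where classical simulation becomes expensive.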

By matching these relationships, the technique guides a smaller “student” model to mimic the behavior of a larger, more complex “teacher” model more closely. Crucially, the student remains entirely classical, so it can be deployed on standard hardware without any quantum computing resources. The authors report performance improvements on image recognition with datasets such as MNIST and CIFAR-10, and on natural language processing with models such as GPT-2. In these tests, QRKD consistently outperforms standard relational knowledge distillation, the classical baseline that also transfers relational information between models.
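The classical baseline can be sketched for comparison. Below is a minimal NumPy version of the distance-wise loss from relational knowledge distillation (Park et al., 2019). Pairwise distances within a batch are normalized by their mean, and a Huber loss pulls the student's normalized distances toward the teacher's. This sketch omits RKD's angle-wise term, and the function names are illustrative.

```python
import numpy as np

def pairwise_distances(F):
    # Euclidean distance between every pair of rows in a (batch, dim) array.
    diff = F[:, None, :] - F[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def normalized_distances(F):
    # Off-diagonal distances divided by their mean, so only relative
    # structure within the batch matters.
    D = pairwise_distances(F)
    off_diag = ~np.eye(len(F), dtype=bool)
    d = D[off_diag]
    return d / d.mean()

def huber(a, b, delta=1.0):
    # Smooth-L1 / Huber penalty averaged over all pairs.
    d = np.abs(a - b)
    return np.where(d < delta, 0.5 * d ** 2 / delta, d - 0.5 * delta).mean()

def rkd_distance_loss(teacher_feats, student_feats):
    return float(huber(normalized_distances(teacher_feats),
                       normalized_distances(student_feats)))

rng = np.random.default_rng(0)
t = rng.normal(size=(8, 64))  # teacher embeddings (width 64)
s = rng.normal(size=(8, 16))  # student embeddings (width 16)
print(rkd_distance_loss(t, s))
print(rkd_distance_loss(t, 3.0 * t))  # near zero: rescaling features preserves relative distances
```

The loss compares only relative distances. As a result, teacher and student may have different embedding widths, and rescaling a model's features leaves the loss unchanged.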

Incorporating this quantum relational information lets the student learn more effectively from the teacher. A key practical advantage is compatibility with existing machine learning workflows: QRKD fits into current knowledge distillation pipelines as an additional training signal, with minimal architectural changes, which makes it a practical option for model compression. The technique also addresses a limitation of some quantum kernel methods, which can lose expressiveness in complex scenarios. It does so by using kernels to capture meaningful relationships between data points rather than relying solely on global data representations.
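A generic distillation objective can illustrate the point about minimal architectural changes. This sketch is an assumption and is not taken from the paper. The loss combines three terms: hard-label cross-entropy, Hinton-style temperature-scaled KL divergence, and a pluggable relational term. Switching relational methods means passing a different `relational_loss` function, and the networks themselves are unchanged. The weights `alpha`, `beta` and `temperature`, and the stand-in `cosine_gram_loss`, are hypothetical.

```python
import numpy as np

def log_softmax(z):
    # Numerically stable log-softmax over the class axis.
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def kd_objective(student_logits, teacher_logits, labels,
                 student_feats, teacher_feats, relational_loss,
                 temperature=4.0, alpha=0.5, beta=1.0):
    # 1) Hard-label cross-entropy against the ground-truth labels.
    ce = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    # 2) Soft-label KL(teacher || student) at temperature T, scaled by T^2.
    log_pt = log_softmax(teacher_logits / temperature)
    log_ps = log_softmax(student_logits / temperature)
    kl = (np.exp(log_pt) * (log_pt - log_ps)).sum(axis=1).mean()
    # 3) Pluggable relational term on intermediate features: a relational
    #    method only changes this function, not the networks.
    rel = relational_loss(teacher_feats, student_feats)
    return (1 - alpha) * ce + alpha * temperature ** 2 * kl + beta * rel

def cosine_gram_loss(teacher_feats, student_feats):
    # Hypothetical stand-in relational term: match the batch's pairwise
    # cosine-similarity matrices (works even when feature widths differ).
    tn = teacher_feats / np.linalg.norm(teacher_feats, axis=1, keepdims=True)
    sn = student_feats / np.linalg.norm(student_feats, axis=1, keepdims=True)
    return float(((tn @ tn.T - sn @ sn.T) ** 2).mean())

rng = np.random.default_rng(0)
batch, classes = 8, 10
labels = rng.integers(0, classes, size=batch)
loss = kd_objective(
    student_logits=rng.normal(size=(batch, classes)),
    teacher_logits=rng.normal(size=(batch, classes)),
    labels=labels,
    student_feats=rng.normal(size=(batch, 16)),
    teacher_feats=rng.normal(size=(batch, 64)),
    relational_loss=cosine_gram_loss,
)
print(loss)
```

In a QRKD-style setup, `relational_loss` would be replaced by a quantum-kernel comparison. The rest of the training loop stays the same.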

This allows more effective knowledge transfer and better performance from the compressed student model. It also highlights the potential of combining quantum principles with classical machine learning to build more efficient and powerful artificial intelligence systems.

Concretely, QRKD embeds features into a quantum Hilbert space and aligns the resulting kernel values. The student therefore learns to reproduce the teacher's pairwise similarity structure as well as its outputs. Compared with conventional relational knowledge distillation, this yields consistent gains on both vision and language tasks, including image classification with CNNs and text generation with transformer-based architectures. Importantly, QRKD keeps a fully classical inference pipeline. Teacher and student both remain classical neural networks, and quantum resources are needed only during training, to supply relational supervision.

The current implementation relies on idealized quantum simulations, and the authors acknowledge that hardware noise and sampling variance could limit future deployments. Future research directions include extending QRKD to noisy quantum processors and designing robust quantum circuits or measurement schemes to mitigate these effects. This could broaden quantum-enhanced knowledge distillation to a wider range of vision and language domains.
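The kernel-alignment step described above can be sketched end to end in NumPy. This is a minimal illustration under stated assumptions and is not the authors' implementation. Teacher and student features are mapped to `n_qubits` rotation angles by hypothetical fixed projections (`proj_t`, `proj_s`). The angles are encoded as product states in a noiseless statevector simulation. The student is then penalized by the mean squared difference between the two fidelity-kernel matrices. The actual feature maps, projections and loss used in QRKD may differ.

```python
import numpy as np

def angle_encode_batch(thetas):
    # Assumed encoding: each row of `thetas` becomes prod_i RY(theta_i)|0>.
    states = []
    for row in thetas:
        state = np.array([1.0])
        for angle in row:
            state = np.kron(state, np.array([np.cos(angle / 2), np.sin(angle / 2)]))
        states.append(state)
    return np.stack(states)  # shape (batch, 2**n_qubits)

def quantum_gram(thetas):
    # Fidelity-kernel matrix K[i, j] = |<phi(x_i)|phi(x_j)>|^2.
    S = angle_encode_batch(thetas)
    return np.abs(S @ S.conj().T) ** 2

def qrkd_kernel_loss(teacher_feats, student_feats, proj_t, proj_s):
    # Hypothetical projections map each model's features to n_qubits values,
    # squashed into (-pi, pi) so they can serve as rotation angles.
    K_t = quantum_gram(np.pi * np.tanh(teacher_feats @ proj_t))
    K_s = quantum_gram(np.pi * np.tanh(student_feats @ proj_s))
    # Align the student's kernel values with the teacher's (mean squared error).
    return float(((K_t - K_s) ** 2).mean())

rng = np.random.default_rng(1)
n_qubits, batch = 4, 8
teacher_feats = rng.normal(size=(batch, 64))
student_feats = rng.normal(size=(batch, 16))
proj_t = rng.normal(size=(64, n_qubits)) / np.sqrt(64)
proj_s = rng.normal(size=(16, n_qubits)) / np.sqrt(16)
print(qrkd_kernel_loss(teacher_feats, student_feats, proj_t, proj_s))
```

In a real training loop, this would be written in an automatic-differentiation framework so that gradients flow back into the student. The NumPy version only shows the forward computation. Only the training loss touches the simulated quantum states, and the student network used at inference is unchanged.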

👉 More information

🗞 Quantum Relational Knowledge Distillation

🧠 ArXiv: https://arxiv.org/abs/2508.13054