Deep learning is increasingly moving to edge devices, which demands efficient hardware capable of running complex models with limited power and resources. Vineet Kumar, Ajay Kumar M, and Yike Li, from University College Dublin, along with colleagues, present a new System-on-Chip (SoC) architecture designed to accelerate deep learning inference at the edge. Their work combines the open-source NVIDIA Deep Learning Accelerator (NVDLA) with a compact 32-bit RISC-V processor and, crucially, runs without an operating system by generating bare-metal code. This approach improves both execution speed and storage efficiency, paving the way for more powerful and responsive edge computing applications, as demonstrated through inference tests with LeNet-5, ResNet-18, and ResNet-50.

RISC-V SoC Integrates NVDLA for Edge AI

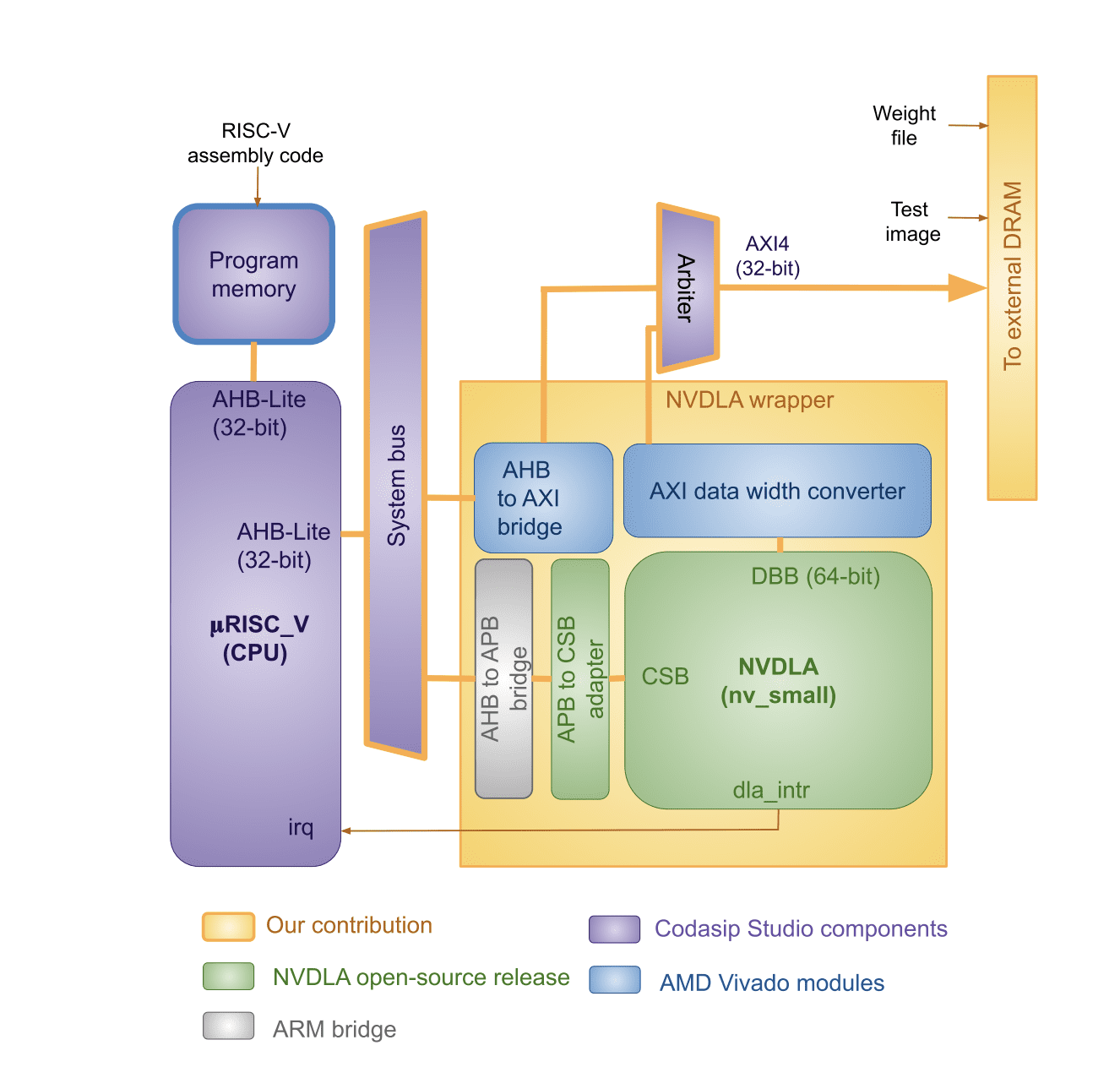

Researchers designed and implemented a System-on-Chip (SoC) that combines the NVIDIA Deep Learning Accelerator (NVDLA) with a RISC-V processor, creating a lightweight, standalone deep learning inference engine. Implemented on an FPGA, the system runs without a full operating system, which is valuable for edge AI applications that demand low latency, low power consumption, and minimal resource usage. The system demonstrates a practical approach to deploying deep learning models on resource-constrained devices. At its core is the NVDLA, an open-source deep learning accelerator, controlled by a processor built on RISC-V, an open-standard instruction set architecture that offers flexibility and room for customisation.

The design was implemented on an AMD (Xilinx) ZCU102 FPGA board, which provides hardware acceleration and customisation capabilities. It operates independently of a Linux kernel, simplifying the software stack and reducing overhead. External DDR4 memory stores the weights and input images, increasing the system's capacity, while AXI interconnects manage communication between components and bridge the differences between their clock frequencies. The design was first validated through behavioural simulation using standard NVDLA test traces and then implemented on the FPGA. The team also measured FPGA resource utilisation, confirming that the design is feasible on this device.
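
To make the idea of storing weights and input images in external DDR4 concrete, here is a minimal Python sketch of how a bare-metal flow might assign each buffer a fixed, aligned address before generating code. The base address 0x8000_0000, the 32-byte alignment, and the buffer names are hypothetical placeholders, not values from the paper.

```python
from dataclasses import dataclass


@dataclass
class Buffer:
    name: str
    size: int  # size in bytes


def align_up(x: int, align: int) -> int:
    """Round x up to the next multiple of align (align must be a power of two)."""
    return (x + align - 1) & ~(align - 1)


def plan_layout(buffers: list[Buffer], base: int = 0x8000_0000, align: int = 32) -> dict[str, tuple[int, int]]:
    """Place buffers back to back from `base`; return {name: (address, size)}.

    The base address and alignment are hypothetical defaults for illustration.
    """
    layout = {}
    cursor = align_up(base, align)
    for buf in buffers:
        layout[buf.name] = (cursor, buf.size)
        cursor = align_up(cursor + buf.size, align)  # next buffer starts aligned
    return layout


if __name__ == "__main__":
    # Hypothetical buffer names and sizes.
    bufs = [Buffer("conv1_weights", 1000), Buffer("input_image", 784), Buffer("output", 40)]
    for name, (addr, size) in plan_layout(bufs).items():
        print(f"{name:14s} 0x{addr:08X} {size} bytes")
```

Because the addresses are fixed at generation time, the emitted program can refer to them as constants with no dynamic allocator, which is one reason a bare-metal flow can stay small.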

Execution-time measurements for LeNet-5, ResNet-18, and ResNet-50 at 100 MHz show performance improvements over previous work. Future work includes expanding model support, generating INT8 calibration tables for NVDLA, and integrating the ONNC compiler to simplify deployment from ONNX models. This work is highly relevant to the growing field of edge AI, where low-latency inference is critical for real-time applications. The use of open-source hardware promotes innovation and collaboration, while the FPGA implementation allows for customisation and optimisation. The simplified software stack and hardware acceleration are also expected to reduce power consumption, a key concern for edge AI deployments.
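
INT8 calibration tables, mentioned above as future work, record a scale factor per tensor so that floating-point activations can be mapped onto 8-bit integers. The sketch below shows one common scheme, symmetric max-abs calibration; the paper does not say which scheme it will adopt, and NVDLA's actual calibration-table file format is not modelled here.

```python
def int8_scale(values: list[float]) -> float:
    """Symmetric per-tensor scale: map the largest |value| onto 127."""
    amax = max((abs(v) for v in values), default=0.0)
    return amax / 127.0 if amax > 0 else 1.0


def quantize(values: list[float], scale: float) -> list[int]:
    """Round to nearest (Python's round() breaks ties to even) and clamp to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate real values from INT8 codes."""
    return [x * scale for x in q]


if __name__ == "__main__":
    activations = [63.4, -127.0, 0.0, 10.6]  # toy calibration data (hypothetical)
    s = int8_scale(activations)
    q = quantize(activations, s)
    print(s, q, dequantize(q, s))
```

In practice the maximum would be taken over activations from a representative calibration dataset, and many toolchains use percentile or entropy-based clipping instead of the raw maximum to reduce the influence of outliers.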

Bare-Metal Acceleration of Deep Learning Models

Researchers engineered a novel System-on-Chip (SoC) architecture to accelerate deep learning models for edge computing, tightly coupling the open-source NVIDIA Deep Learning Accelerator (NVDLA) with a 32-bit, 4-stage pipelined RISC-V core called uRISC_V. The design prioritises efficiency: its toolflow generates bare-metal application code in assembly, bypassing the complexity and overhead of an operating system. The team developed a complete software generation workflow that transforms trained neural network models into both RISC-V assembly code and corresponding weight files, enabling the NVDLA hardware to be configured directly. This streamlined approach delivers faster execution and reduced storage requirements, making it well suited to resource-constrained edge devices.
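
The paper's generator itself is not reproduced in this article, but its core step, turning a list of accelerator register writes into RISC-V assembly, can be sketched. The Python below emits RV32I `lui`/`addi`/`sw` sequences for (address, value) pairs; the register addresses in the example are hypothetical, and the use of `t0` and `t1` as scratch registers is an assumption.

```python
def split_imm32(value: int) -> tuple[int, int]:
    """Split a 32-bit constant into (hi20, lo12) for a lui + addi/sw pair.

    The CPU sign-extends the 12-bit immediate, so the upper part is rounded
    up by 0x800 whenever bit 11 of the value is set.
    """
    value &= 0xFFFFFFFF
    upper = (value + 0x800) >> 12
    return upper & 0xFFFFF, value - (upper << 12)  # lo12 lies in [-2048, 2047]


def emit_reg_writes(writes: list[tuple[int, int]]) -> str:
    """Emit RV32I assembly that stores each (address, value) pair with sw.

    t0 holds the upper address bits and t1 the value; both are scratch registers.
    """
    lines = []
    for addr, value in writes:
        a_hi, a_lo = split_imm32(addr)
        v_hi, v_lo = split_imm32(value)
        lines += [
            f"    lui  t0, 0x{a_hi:05x}",  # upper 20 bits of the register address
            f"    lui  t1, 0x{v_hi:05x}",  # upper 20 bits of the value
            f"    addi t1, t1, {v_lo}",    # add the signed low 12 bits of the value
            f"    sw   t1, {a_lo}(t0)",    # store to the memory-mapped register
        ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical register addresses and values, for illustration only.
    print(emit_reg_writes([(0x1000_000C, 0x1), (0x1000_5004, 0x8000_0FFF)]))
```

The `+ 0x800` rounding is the detail most often missed: `addi` and `sw` sign-extend their 12-bit immediates, so the upper 20 bits must be bumped whenever the low 12 bits would otherwise read as negative.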

The team also developed an automated methodology to generate configuration files for arbitrary Caffe-based neural networks, addressing a significant gap in existing documentation and tools. The process extracts the weights and generates the necessary configuration, which is then converted into RISC-V assembly code that controls the NVDLA hardware directly. This eliminates the need for a Linux kernel and the associated driver stack, significantly reducing performance and storage overhead. The system was implemented on an AMD ZCU102 FPGA board, integrating the NVDLA with the Codasip uRISC_V core, and validated using established models such as LeNet-5, ResNet-18, and ResNet-50.
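
The article does not describe the weight-file format. As a hedged illustration, the sketch below packs signed INT8 weights four per 32-bit little-endian word and prints them as hex, a simple format for loading weights into memory. The real NVDLA weight layout also requires reordering weights into the accelerator's expected channel grouping, which this sketch does not attempt; the function name is hypothetical.

```python
import struct


def pack_int8_words(weights: list[int]) -> list[str]:
    """Pack signed INT8 weights four per 32-bit little-endian word, as hex strings.

    Hypothetical format: the tail is zero-padded to a whole word, and no
    NVDLA-specific reordering is applied.
    """
    padded = list(weights) + [0] * (-len(weights) % 4)
    raw = struct.pack(f"<{len(padded)}b", *padded)  # raises struct.error outside [-128, 127]
    words = struct.unpack(f"<{len(padded) // 4}I", raw)
    return [f"{w:08x}" for w in words]


if __name__ == "__main__":
    # Toy weights (hypothetical), e.g. a few entries of a quantised kernel.
    for word in pack_int8_words([1, -1, 2, 3, 4]):
        print(word)
```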

Experiments show that the implemented system performs inference with LeNet-5 in 4.8 ms, ResNet-18 in 16.2 ms, and ResNet-50 in 1.1 s, all at a system clock frequency of 100 MHz. The authors report a substantial efficiency improvement over existing Linux-kernel-based implementations, and the flow supports both the small and full configurations of the NVDLA. These results show that complex deep learning models can be executed directly on the hardware, paving the way for more efficient and responsive edge computing solutions.
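
The reported latencies translate directly into clock cycles, since cycles = latency × clock frequency. At 100 MHz each cycle lasts 10 ns, so the short Python snippet below converts the article's figures into cycle counts and back-to-back inference rates, assuming one inference at a time with no overlap between them.

```python
# Reported latencies at the 100 MHz system clock (figures from the article).
CLOCK_HZ = 100_000_000

LATENCY_S = {
    "LeNet-5": 4.8e-3,
    "ResNet-18": 16.2e-3,
    "ResNet-50": 1.1,
}


def cycles(latency_s: float, clock_hz: int = CLOCK_HZ) -> int:
    """Clock cycles elapsed during one inference: latency x frequency."""
    return round(latency_s * clock_hz)


def inferences_per_second(latency_s: float) -> float:
    """Throughput if inferences run back to back with no overlap (an assumption)."""
    return 1.0 / latency_s


if __name__ == "__main__":
    for model, t in LATENCY_S.items():
        print(f"{model:10s} {cycles(t):>12,d} cycles  {inferences_per_second(t):8.2f} inf/s")
```

This gives roughly 480,000 cycles for LeNet-5, 1.62 million for ResNet-18, and 110 million for ResNet-50, or about 208, 62, and 0.9 inferences per second respectively.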

RISC-V and NVDLA Accelerate Edge Deep Learning

Researchers have developed a novel System-on-Chip (SoC) architecture that significantly accelerates deep learning models for edge computing applications, achieving substantial performance gains through a combination of hardware and software optimisation. The team tightly coupled an open-source Deep Learning Accelerator, NVDLA, with a 32-bit RISC-V processor core, named uRISC_V, to create a highly efficient processing unit. A key innovation lies in the development of a toolflow that generates bare-metal application code, bypassing the complexities and overheads associated with traditional operating systems. This streamlined architecture and software flow deliver improvements in both execution speed and storage efficiency, making it ideally suited for resource-constrained edge devices.

Experiments conducted on an AMD ZCU102 FPGA board, using the NVDLA-small configuration, demonstrate the effectiveness of this approach on several benchmark models. LeNet-5 completed inference in just 4.8 milliseconds at a system clock frequency of 100 MHz. More complex models were also accelerated, with ResNet-18 completing inference in 16.2 milliseconds and the far more demanding ResNet-50 in 1.1 seconds under the same conditions. These results represent a substantial improvement over existing solutions that either incur operating-system overhead or exist only as simulation-based implementations.

The researchers also developed an automated methodology for generating configuration files for arbitrary Caffe-based neural networks and converting these files into RISC-V assembly code that configures the NVDLA hardware directly. This eliminates the need for complex driver stacks and gives precise control over the accelerator, supporting both the small and full NVDLA configurations. Unlike prior work that often assumed unrealistic operating frequencies, this implementation runs at 100 MHz on an FPGA, demonstrating a practical, deployable solution for accelerating deep learning at the edge. The combination of a tightly coupled architecture, bare-metal execution, and automated configuration generation delivers an efficient platform for a wide range of edge computing applications.
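
Without an operating system there is no driver to handle the accelerator's completion interrupt, so one simple option for a bare-metal program is to poll a status register until a done bit appears. The sketch below emits such a loop in RV32I assembly; the status-register address, the mask, and the label name are hypothetical, and the paper may signal completion differently.

```python
def split_imm32(value: int) -> tuple[int, int]:
    """Split a 32-bit constant into (hi20, lo12); lo12 is sign-extended by the CPU."""
    value &= 0xFFFFFFFF
    upper = (value + 0x800) >> 12
    return upper & 0xFFFFF, value - (upper << 12)


def emit_poll_until_set(status_addr: int, mask: int, label: str = "wait_done") -> str:
    """Emit RV32I assembly that spins until (mem[status_addr] & mask) != 0.

    status_addr, mask, and label are hypothetical; t0, t1, t2 are scratch registers.
    """
    a_hi, a_lo = split_imm32(status_addr)
    m_hi, m_lo = split_imm32(mask)
    return "\n".join([
        f"    lui  t0, 0x{a_hi:05x}",  # upper bits of the status register address
        f"    lui  t2, 0x{m_hi:05x}",  # build the completion mask in t2
        f"    addi t2, t2, {m_lo}",
        f"{label}:",
        f"    lw   t1, {a_lo}(t0)",    # read the status register
        f"    and  t1, t1, t2",        # keep only the bits of interest
        f"    beqz t1, {label}",       # loop while no completion bit is set
    ])


if __name__ == "__main__":
    # Hypothetical status register at 0x1000_000C with the done flag in bit 0.
    print(emit_poll_until_set(0x1000_000C, 0x1))
```

A production version would typically add a timeout counter and acknowledge the completion flag afterwards, details that depend on the accelerator's register semantics.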

👉 More information

🗞 Bare-Metal RISC-V + NVDLA SoC for Efficient Deep Learning Inference

🧠 ArXiv: https://arxiv.org/abs/2508.16095