Researchers are tackling the critical challenge of ensuring safety in reinforcement learning (RL) as agents transition to new, unseen environments. Tingting Ni and Maryam Kamgarpour, both from EPFL, along with colleagues, present a novel algorithm that improves both the safety of test-time learning and the speed at which near-optimal policies are found. This work addresses a key limitation of current RL methods, namely their potential to violate safety constraints in real-world applications like robotics and healthcare, by offering provable guarantees on policy safety and sample complexity. Their findings show that a near-optimal policy can be learned with a provably tight sample complexity, representing a substantial advance in constrained RL and paving the way for safer, more efficient agents.

Provably safe and efficient meta reinforcement learning

The research addresses a critical gap in the field: ensuring the safety of policies deployed in real-world applications, such as robotics and healthcare, while minimising the number of interactions needed to learn effectively. The reduction in sample complexity is most pronounced when the task distribution is concentrated or the set of tasks is small. Their model-free approach estimates policy gradients directly, avoiding the need to estimate system dynamics; earlier iterations incurred a higher dependence on ε, with a sample complexity of $O(\varepsilon^{-6})$. The new work improves on this and reduces the number of real-world interactions, which matters most in constrained settings where violations of safety constraints can be costly or dangerous. The team supports the approach with both theoretical analysis and empirical evaluation, which could allow reinforcement learning agents to be deployed in safety-critical applications with greater confidence and reduced risk.

Constrained meta-reinforcement learning for safe policy refinement

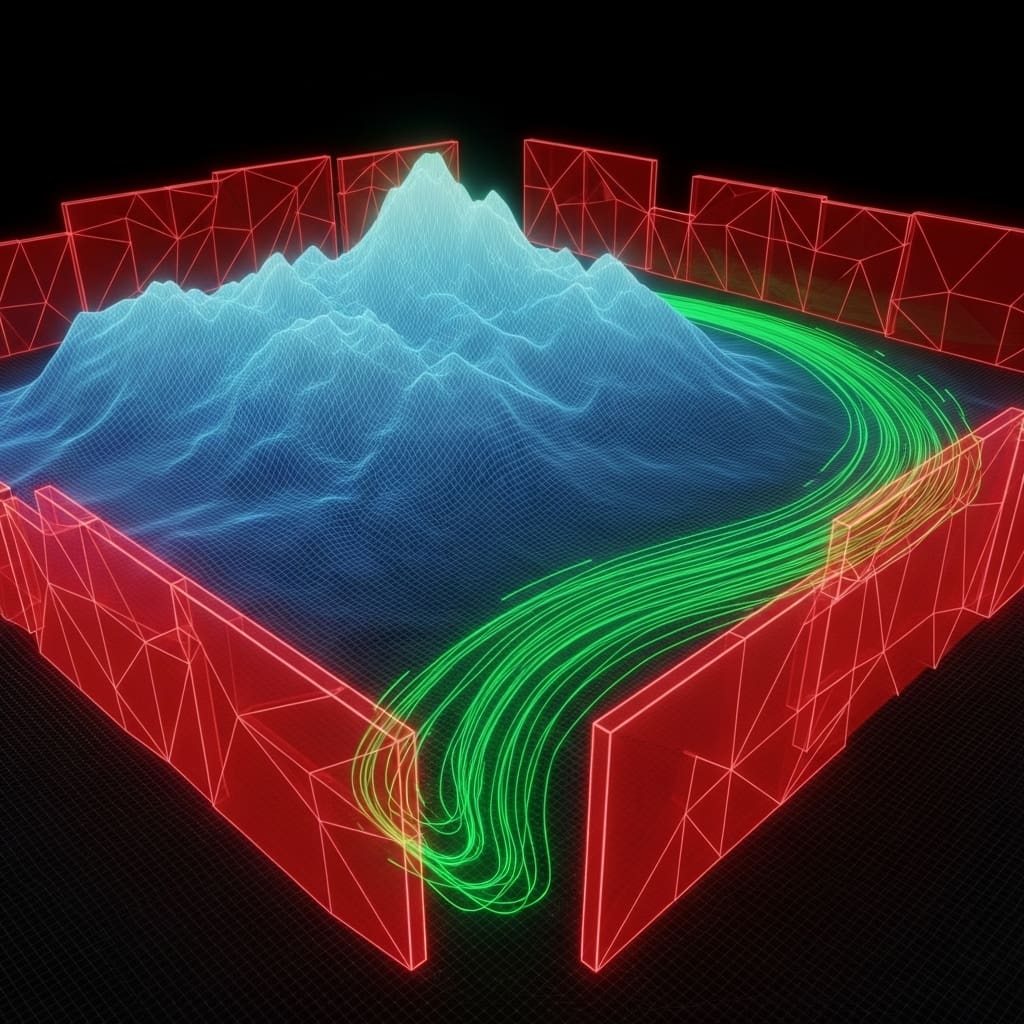

Researchers employed a simulate-then-test paradigm, using inexpensive data collected in simulation to inform policy development before deployment in the real world. Tasks are modelled as constrained Markov decision processes (CMDPs), which integrate safety constraints directly into the learning process, with a focus on safe exploration to prevent costly or dangerous constraint violations during testing. Model-free approaches, by contrast, exhibit a worse dependence on ε, with a sample complexity of $O(\varepsilon^{-6})$. During training, a meta policy was learned to maximise expected reward while satisfying safety constraints, either in expectation or uniformly across tasks. Empirical validation was conducted in a gridworld environment, where test tasks were sampled from a truncated Gaussian distribution, showing better learning efficiency and safer exploration than baseline methods.
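As a rough formalisation of the training objective described above, the meta policy can be read as the solution of a constrained problem over the task distribution. The notation is an assumption made here for illustration and is not taken from the paper: $\mathcal{D}$ is the task distribution, $V_r^{M}(\pi)$ and $V_c^{M}(\pi)$ are the expected reward and constraint values of policy $\pi$ in task $M$, and a constraint counts as satisfied when its value is non-negative.

$$
\max_{\pi}\ \mathbb{E}_{M \sim \mathcal{D}}\big[V_r^{M}(\pi)\big]
\quad \text{subject to} \quad
\begin{cases}
\mathbb{E}_{M \sim \mathcal{D}}\big[V_c^{M}(\pi)\big] \ge 0 & \text{(in expectation)} \\[4pt]
V_c^{M}(\pi) \ge 0 \ \text{ for all tasks } M & \text{(uniformly across tasks)}
\end{cases}
$$

Only one of the two constraint variants applies at a time; the uniform variant is the stricter of the two, since it implies the expectation variant.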

Safe meta-learning with guaranteed feasibility and efficiency

This work addresses a critical gap in constrained reinforcement learning, where ensuring safety during testing while minimising sample complexity remains a significant challenge. The algorithm learns a policy set that approximates the optimal policy for any given task, alongside a single policy guaranteed to be feasible across all tasks within a defined family. The approach relies on three oracles, drawing on recent advances in constrained and meta reinforcement learning to make learning efficient. Performance is measured by test-time reward regret, which quantifies the cumulative sub-optimality of the deployed policies.

The study introduces the concept of a mixture policy, defined as a probability-weighted combination of multiple policies and implemented by sampling an index once and executing the corresponding policy for all timesteps. Sublinear reward regret implies that this mixture policy converges towards the optimal policy, which directly characterises the sample complexity. The algorithm also ensures that each test policy remains feasible, preventing constraint violations in real-world applications. Finally, the authors establish a matching lower bound, showing that the achieved sample complexity is tight.
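The mixture policy and its link to regret can be illustrated with a minimal Python sketch. All function names and the toy numbers are hypothetical and chosen for illustration; this is not the paper's code. The sketch relies on one fact: because the index is drawn once per episode, the value of a mixture is the probability-weighted average of its components' values, so the sub-optimality of the uniform mixture over K deployed policies equals the cumulative regret divided by K.

```python
import math
import random


def sample_mixture_policy(policies, weights, rng=random):
    """Draw one index according to `weights` and return (index, policy)."""
    idx = rng.choices(range(len(policies)), weights=weights, k=1)[0]
    return idx, policies[idx]


def run_episode(policy, states):
    """Execute the same sampled policy at every timestep (no re-sampling)."""
    return [policy(s) for s in states]


def mixture_value(values, weights):
    """Value of a mixture: weighted average of the component values."""
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, values)) / total


def cumulative_regret(optimal_value, values):
    """Sum of per-episode gaps between the optimal and the deployed value."""
    return sum(optimal_value - v for v in values)


if __name__ == "__main__":
    # Two toy policies (assumed for illustration): always "left" / always "right".
    policies = [lambda s: "left", lambda s: "right"]
    idx, policy = sample_mixture_policy(policies, [0.3, 0.7], random.Random(0))
    print(idx, run_episode(policy, states=[0, 1, 2]))

    # Hypothetical values of four deployed policies; the optimum is 1.0.
    deployed_values = [0.5, 0.7, 0.9, 0.9]
    regret = cumulative_regret(1.0, deployed_values)
    gap = 1.0 - mixture_value(deployed_values, [1, 1, 1, 1])
    # The uniform mixture's gap equals regret / K (up to rounding).
    print(math.isclose(gap, regret / len(deployed_values)))
```

If the regret grows sublinearly in K, the ratio regret / K shrinks to zero, which is the sense in which the mixture approaches the optimal policy.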

The algorithm builds upon the Policy Collection-Elimination framework, modifying it to address the unique challenges of constrained reinforcement learning. Researchers define the distance between two constrained Markov decision processes (CMDPs), $M_i$ and $M_j$, as

$$
d(M_i, M_j) := \max\Big\{ \|r_i - r_j\|_\infty,\ \|c_i - c_j\|_\infty,\ \|\rho_i - \rho_j\|_{TV},\ \max_{(s,a) \in S \times A} \|P_i(\cdot \mid s, a) - P_j(\cdot \mid s, a)\|_{TV} \Big\},
$$

capturing the largest difference across all components of the two CMDPs. If $d(M_i, M_j) \le \varepsilon$, then $M_i$ is considered to be within $B(M_j, \varepsilon)$. This metric is crucial for quantifying the proximity of tasks and ensuring the effectiveness of the learned policies.
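To make the distance concrete, here is a small Python sketch for tabular CMDPs. The dictionary layout, the function names, and the toy tasks are assumptions made for illustration, and the total variation distance is taken as half the L1 distance, which may differ from the paper's normalisation by a constant factor.

```python
def sup_norm(u, v):
    """Largest absolute entry-wise difference between two flat lists."""
    return max(abs(a - b) for a, b in zip(u, v))


def tv_distance(p, q):
    """Total variation distance, assumed here to be half the L1 distance."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def cmdp_distance(m_i, m_j):
    """Maximum gap over rewards, costs, initial distribution and transitions.

    Each CMDP is a dict (hypothetical layout) with keys:
      'r', 'c' : flat lists indexed by (state, action) pair
      'rho'    : initial state distribution
      'P'      : one next-state distribution per (state, action) pair
    """
    transition_gap = max(
        tv_distance(p, q) for p, q in zip(m_i["P"], m_j["P"])
    )
    return max(
        sup_norm(m_i["r"], m_j["r"]),
        sup_norm(m_i["c"], m_j["c"]),
        tv_distance(m_i["rho"], m_j["rho"]),
        transition_gap,
    )


def in_ball(m_i, m_j, eps):
    """True if m_i lies in the ball B(m_j, eps) under cmdp_distance."""
    return cmdp_distance(m_i, m_j) <= eps


# Two toy tasks with 2 states and 2 actions (4 state-action pairs).
task_a = {
    "r": [1.0, 0.0, 0.0, 0.5],
    "c": [0.0, 0.25, 0.0, 0.0],
    "rho": [1.0, 0.0],
    "P": [[0.75, 0.25], [0.5, 0.5], [0.0, 1.0], [1.0, 0.0]],
}
task_b = {
    "r": [1.0, 0.0, 0.0, 0.25],
    "c": [0.0, 0.25, 0.0, 0.0],
    "rho": [0.75, 0.25],
    "P": [[0.75, 0.25], [0.5, 0.5], [0.0, 1.0], [0.5, 0.5]],
}

if __name__ == "__main__":
    print(cmdp_distance(task_a, task_b))
    print(in_ball(task_a, task_b, eps=0.4))
```

In this toy pair the transition term dominates: the rewards and initial distributions differ by 0.25, but one transition row differs by 0.5 in total variation, so the two tasks are 0.5 apart.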

Optimal Safe Policy Refinement with Guarantees

The algorithm operates by iteratively refining policies and verifying updates, converging exponentially fast to a near-optimal solution. This process facilitates a transition from conservative, feasible policies to those with higher rewards, guaranteeing safe exploration throughout the testing phase. However, the authors acknowledge that the regret bound includes a linear term dependent on the number of steps and a parameter from Slater’s condition, typical in constrained reinforcement learning. Future research could focus on mitigating the linear regret term, potentially through improved policy approximation or more efficient estimation of reward and constraint values. The findings are significant as they provide a theoretical foundation for deploying reinforcement learning agents in safety-critical applications, such as robotics and healthcare, where minimising both risk and the amount of training data is crucial. While the current work assumes certain conditions, like Slater’s condition, the established guarantees represent a step towards more reliable and efficient constrained reinforcement learning systems.

👉 More information

🗞 Constrained Meta Reinforcement Learning with Provable Test-Time Safety

🧠 ArXiv: https://arxiv.org/abs/2601.21845