Reinforcement learning increasingly shapes the capabilities of large language models, pushing them beyond simple text generation towards complex reasoning tasks, and a new survey examines this rapidly evolving field. Kaiyan Zhang, Yuxin Zuo, and Bingxiang He, from Tsinghua University, lead a team that details how reinforcement learning transforms language models into reasoning engines that excel particularly in mathematics and coding. The researchers identify key challenges to further progress that extend beyond computational demands to algorithm design, training data, and infrastructure. The review covers foundational components, core problems, training resources, and downstream applications, including advances since the release of DeepSeek-R1, and highlights opportunities and directions for future research on scaling reinforcement learning towards broader reasoning capabilities.

Reinforcement Learning Refines Large Language Model Reasoning

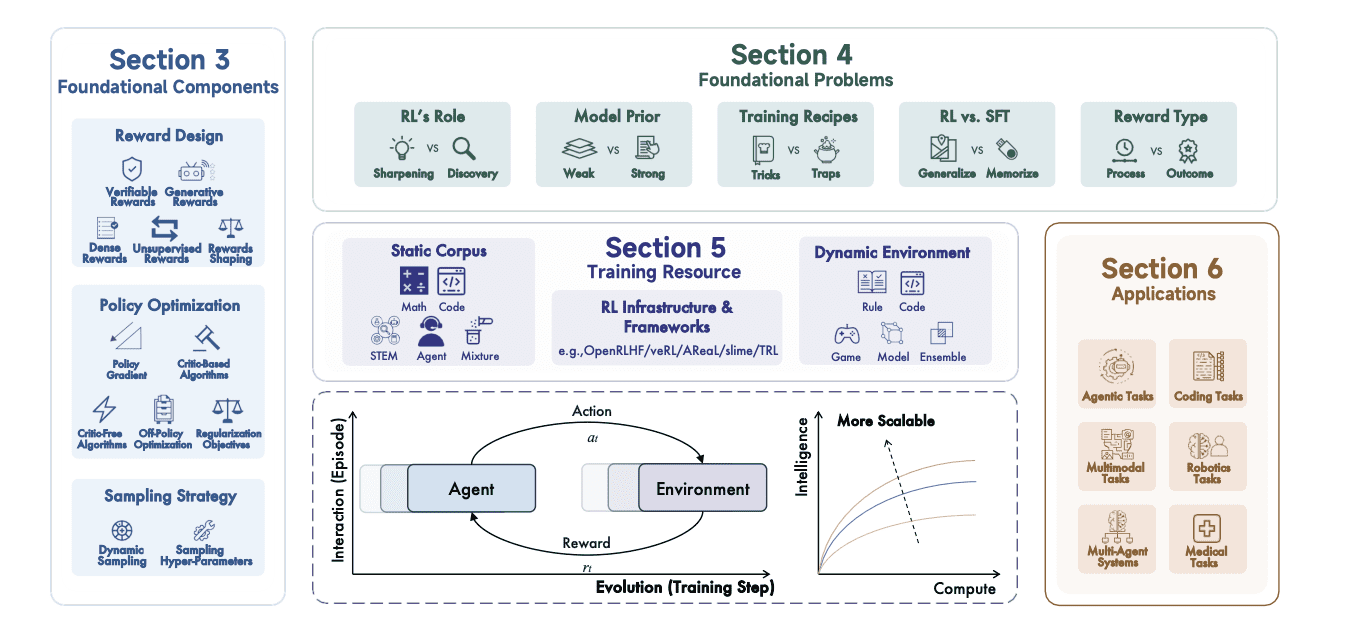

This paper surveys recent advances in reinforcement learning (RL) for refining the reasoning abilities of large language models (LLMs). RL addresses limitations of LLMs in tasks that require sequential decision-making and complex reasoning, where direct supervision is difficult. The research explores how RL techniques can improve performance in areas such as game playing, robotic control, and dialogue systems. It investigates methods for defining reward signals that effectively guide LLM learning, along with strategies for overcoming challenges in exploration and sample efficiency. The survey details various RL algorithms adapted for use with LLMs, including policy gradient methods, value-based methods, and actor-critic architectures. It also examines techniques for the credit assignment problem, in which it is hard to determine which actions contributed to a particular outcome.
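To make the credit assignment problem concrete, here is a minimal Python sketch of discounted reward-to-go, a textbook device used in policy gradient methods to spread a delayed reward back over earlier steps. It is an illustration only, not a method attributed to the survey; the function name `reward_to_go`, the discount factor `gamma`, and the toy episode are all assumptions made for this example.

```python
from typing import List


def reward_to_go(rewards: List[float], gamma: float = 1.0) -> List[float]:
    """Return, for every step, the discounted sum of rewards from that step on.

    Hypothetical helper for illustration: each step is credited with the
    rewards that follow it, discounted by how far away they are.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step can reuse the return of the next step.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


if __name__ == "__main__":
    # Toy episode: only the final step is rewarded (e.g. a correct final answer).
    episode_rewards = [0.0, 0.0, 0.0, 1.0]
    print(reward_to_go(episode_rewards, gamma=0.5))
    # prints [0.125, 0.25, 0.5, 1.0]
```

With a single reward at the end of the episode, earlier steps receive progressively smaller credit, which is one simple (and crude) answer to the question of which actions contributed to the outcome.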

Reinforcement Learning Powers Next-Generation Reasoning Models

Recent work demonstrates the growing impact of reinforcement learning (RL) in advancing the capabilities of large language models (LLMs), transforming them into large reasoning models (LRMs). Researchers have achieved significant milestones, with models like DeepSeek-R1 matching the performance of OpenAI’s o1 series across various benchmarks. These models employ multi-stage training pipelines and, in some cases, are trained without supervised finetuning, an approach termed “Zero RL”. Further proprietary models quickly followed, including Claude-3.7-Sonnet, Gemini 2.0 and 2.5, Seed-Thinking 1.5, and the o3 series, each showcasing increasingly advanced reasoning abilities. OpenAI also released gpt-oss-120b and GPT-5, its most capable AI system to date, which dynamically switches between efficient and deeper reasoning models.

Parallel open-source efforts have expanded the landscape, with QwQ-32B matching R1’s performance and the Qwen3 series, including the Qwen3-235B model, further improving benchmark scores. The Skywork-OR1 suite of models, based on R1, achieved scalable RL training through effective data mixtures and algorithmic innovations. MiniMax-M1 introduced hybrid attention to scale RL efficiently, while models like Llama-Nemotron-Ultra and Magistral 24B balanced accuracy and efficiency.

Improvements in reasoning have extended use cases in coding and agentic scenarios, with the Claude series, including Claude-4.1-Opus, achieving state-of-the-art results on the SWE-bench benchmark. Kimi K2 and models like GLM-4.5 and DeepSeek-V3.1, which are specifically optimized for agentic tasks, demonstrate large-scale agentic training data synthesis and general RL procedures.

Multimodality is a key component, with most frontier models, including the GPT-5, o3, Claude, and Gemini families, natively supporting text, images, video, and audio. Open-source efforts like Kimi 1.5 and QVQ excel in visual reasoning, while Skywork R1V2 balances reasoning and general abilities through hybrid RL. InternVL3 and InternVL3.5 adopted unified native multimodal pretraining and two-stage cascade RL frameworks, achieving improved efficiency and versatility. Recent models like Step3 and GLM-4.5V demonstrate state-of-the-art performance across visual multimodal benchmarks, signifying the continued advancement of reasoning capabilities in AI systems.

Reinforcement Learning Scales Reasoning Capabilities

This review demonstrates the growing importance of reinforcement learning in developing large reasoning models, extending beyond its initial role in aligning language models with human preferences. Recent advances, exemplified by systems like OpenAI o1 and DeepSeek-R1, show that training models with verifiable rewards, such as accuracy in mathematics or successful code execution, effectively enhances reasoning capabilities, including planning, reflection, and self-correction. These models increasingly allocate computational resources during use to evaluate and refine their reasoning processes, revealing a new avenue for improving performance alongside traditional data and parameter scaling. The research highlights that reasoning itself can be explicitly trained and scaled through reinforcement learning, offering a complementary approach to pre-training methods. While significant progress has been made, the authors acknowledge limitations in computational resources and algorithmic design as key challenges for further scaling.
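As a rough illustration of what a verifiable reward can look like, the Python sketch below scores a math response by exact match on its final boxed answer and scores a code response by whether it passes a few test cases. Everything here is an assumption made for the example: the function names `math_reward` and `code_reward`, the `\boxed{...}` answer convention, and the binary 0/1 scoring are not taken from the survey, and a real system would run untrusted code in a sandbox rather than call `exec` directly.

```python
import re
from typing import Any, List, Tuple

# Assumed convention: the model writes its final answer as \boxed{...}.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def math_reward(response: str, reference: str) -> float:
    """Return 1.0 if the last boxed answer matches the reference, else 0.0."""
    matches = BOXED.findall(response)
    if not matches:
        return 0.0  # No checkable final answer was produced.
    predicted = matches[-1].replace(" ", "")
    return 1.0 if predicted == reference.replace(" ", "") else 0.0


def code_reward(source: str, func_name: str,
                cases: List[Tuple[tuple, Any]]) -> float:
    """Return 1.0 if the generated function passes every test case, else 0.0.

    WARNING: exec on untrusted code is unsafe; this is a toy stand-in for
    the sandboxed execution a real training pipeline would need.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
        passed = all(func(*args) == expected for args, expected in cases)
        return 1.0 if passed else 0.0
    except Exception:
        return 0.0  # Syntax errors, missing function, or runtime failures.


if __name__ == "__main__":
    print(math_reward("2 * 21 = 42, so the answer is \\boxed{42}", "42"))
    print(code_reward("def add(a, b):\n    return a + b", "add", [((1, 2), 3)]))
```

The point of such rewards is that they can be checked automatically, so the training signal does not depend on a learned preference model or human labels.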

👉 More information

🗞 A Survey of Reinforcement Learning for Large Reasoning Models

🧠 ArXiv: https://arxiv.org/abs/2509.08827