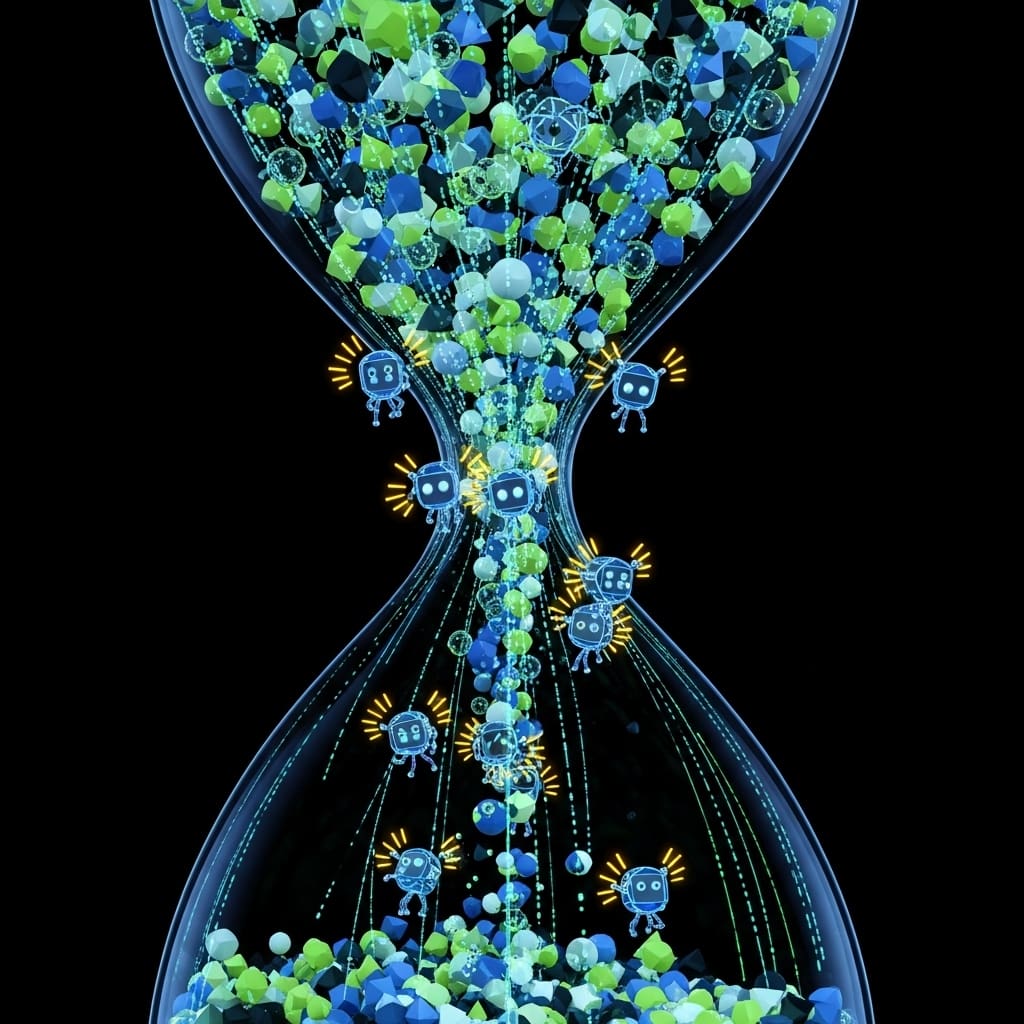

Large Language Models excel at multilingual translation, yet they often produce overly verbose outputs, which creates problems for time-sensitive applications such as subtitling and dubbing. Ziang Cui, Mengran Yu, and Tianjiao Li, alongside colleagues from Bilibili Inc., address this issue with a framework designed to balance translation accuracy against strict time constraints. Their research introduces Sand-Glass, a benchmark for evaluating translation under syllable-level duration limits, and HOMURA, a reinforcement learning framework that manages the trade-off between semantic preservation and temporal feasibility. HOMURA uses a dynamic syllable-ratio reward to control output length, and the authors report that it surpasses existing Large Language Model baselines in precise, linguistically appropriate length control without sacrificing translation quality.

Syllable Timing and Semantic Fidelity in Translation

The verbosity bias of many neural machine translation systems makes them unsuitable for strictly time-constrained tasks such as subtitling and dubbing. Existing prompt-engineering approaches frequently struggle to resolve the conflict between semantic fidelity and rigid temporal feasibility requirements. To address this challenge, the researchers introduce Sand-Glass, a new benchmark designed specifically to evaluate translation performance under syllable-level duration constraints, so that systems can be assessed on whether their output fits a fixed temporal budget as well as on whether it is accurate.
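To make the idea of a syllable-level duration constraint concrete, the sketch below checks an English translation against a syllable budget. The names `count_syllables_en` and `fits_budget`, and the vowel-run heuristic itself, are illustrative assumptions; the summary above does not specify how Sand-Glass counts syllables, and a real system would likely use a pronunciation dictionary or language-specific rules.

```python
import re

def count_syllables_en(text: str) -> int:
    """Rough English syllable count: one syllable per run of vowels in each word.

    This is a heuristic assumption for illustration, not the benchmark's counter.
    """
    total = 0
    for word in re.findall(r"[a-z]+", text.lower()):
        n = len(re.findall(r"[aeiouy]+", word))
        # Treat a trailing silent "e" (as in "time") as non-syllabic,
        # but keep endings such as "-le" ("table") and "-ee" ("agree").
        if n > 1 and word.endswith("e") and not word.endswith(("le", "ee")):
            n -= 1
        total += max(n, 1)  # every word contributes at least one syllable
    return total

def fits_budget(translation: str, max_syllables: int) -> bool:
    """Return True if the translation stays within the syllable budget."""
    return count_syllables_en(translation) <= max_syllables

if __name__ == "__main__":
    line = "hello world"
    print(count_syllables_en(line), fits_budget(line, 3))
```

Counting syllables rather than characters or tokens is what ties the constraint to speaking time, although, as the authors themselves note, syllable count is only a textual approximation of acoustic duration.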

Furthermore, the study proposes HOMURA, a reinforcement learning framework that explicitly optimises the trade-off between semantic preservation and temporal compliance. HOMURA couples a KL-regularised objective function with a novel dynamic syllable-ratio reward mechanism, which controls output length and encourages the model to generate translations that are both semantically accurate and temporally feasible. The reported experiments show HOMURA managing output length while preserving semantic meaning.

Constrained Translation via Reinforcement Learning with HOMURA

The paper presents HOMURA as a reinforcement learning approach to constrained translation that targets high compression while maintaining linguistic quality, translating under a strict syllable limit without degrading the translated text. It achieves this by removing the KL-divergence regularization typically used in Reinforcement Learning from Human Feedback, arguing that in this constrained scenario the KL penalty hinders the model’s ability to make the structural changes needed to meet the syllable target. HOMURA uses Group Relative Policy Optimization (GRPO), a reinforcement learning framework that stabilizes training without relying on KL regularization by employing group-relative normalization and clipping to control policy updates.
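The two stabilizing ingredients named above, group-relative normalization and clipping, can be sketched in a few lines. This is a minimal illustration of the generic GRPO-style computation with a PPO-style clipped surrogate and no KL term; the function names, the `clip_eps` default of 0.2, and the use of sequence-level log-probabilities are assumptions made here, not details taken from the paper.

```python
import math
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of samples drawn for the same prompt.

    Each advantage is (reward - group mean) / (group std + eps), so a sample
    is judged relative to its siblings rather than by a learned value function.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

def clipped_surrogate(logp_new, logp_old, advantage, clip_eps=0.2):
    """PPO-style clipped objective for one sample (to be maximized).

    The probability ratio is clipped to [1 - clip_eps, 1 + clip_eps], which
    bounds how far a single update can move the policy.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)

if __name__ == "__main__":
    rewards = [1.0, 2.0, 3.0]  # hypothetical rewards for three candidate translations
    advantages = group_relative_advantages(rewards)
    print(advantages)
    print(clipped_surrogate(logp_new=1.0, logp_old=0.0, advantage=1.0))
```

With no KL penalty in the objective, the clipping range is the only explicit limit on each policy update in this sketch, which matches the role the text assigns to it.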

A Centered Clipped Objective, a specific loss function within GRPO, ensures stable learning by centering updates and preventing large policy changes. The Syllable-Ratio Reward acts as the primary reward signal: it penalizes translations that exceed the syllable limit and so functions as a form of structural regularization. Results indicate that removing the KL penalty improves performance, yielding higher CERR and BLEU-ρ scores and enabling the model to restructure sentences to meet the syllable limit rather than simply deleting words.
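One plausible shape for a reward that penalizes over-length output is shown below. It is a hypothetical sketch only: the function name, the linear penalty, and the `slope` parameter are assumptions, and the paper's actual dynamic syllable-ratio reward is not specified in this summary. In practice such a term would be combined with a semantic quality signal, which is omitted here.

```python
def syllable_ratio_reward(output_syllables: int, target_syllables: int,
                          slope: float = 2.0) -> float:
    """Hypothetical length reward based on the output/target syllable ratio.

    Returns 1.0 when the output fits the budget, then decays linearly with
    the amount of overshoot and is floored at 0.0.
    """
    if target_syllables <= 0:
        raise ValueError("target_syllables must be positive")
    ratio = output_syllables / target_syllables
    if ratio <= 1.0:
        return 1.0
    return max(0.0, 1.0 - slope * (ratio - 1.0))

if __name__ == "__main__":
    for n in (8, 10, 12, 20):
        print(n, syllable_ratio_reward(n, target_syllables=10))
```

Because the reward depends only on the syllable ratio and not on which words were kept, it leaves the policy free to rephrase or restructure a sentence to fit the budget, which is the behavior the text attributes to the KL-free setup.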

LLMs Exhibit Cross-Lingual Verbosity Bias

The researchers address a key limitation of large language models in time-constrained translation: a systemic cross-lingual verbosity bias. They found that LLMs consistently produce translations significantly longer than the source material, which hinders their use in applications with strict temporal budgets. To quantify this issue, they developed Sand-Glass, a new benchmark that evaluates translation performance under syllable-level duration constraints and incorporates Information Density derived from real-world subtitles. Experiments revealed a pervasive tendency for LLMs to expand translated text, and the diagnostic analysis introduces the Roundtrip Expansion Ratio to isolate model-induced redundancy.

The data show that across the languages tested, including German, English, and Spanish, over 63% of LLM translations had an expansion ratio above 1.0, indicating systemic inflation even though the semantic content stayed the same. The team then introduced HOMURA, a reinforcement learning framework engineered to optimise the trade-off between semantic preservation and temporal compliance and thereby regulate translation length. HOMURA employs a KL-regularized objective coupled with a novel dynamic syllable-ratio reward, enabling precise length control while respecting linguistic density hierarchies. Results show that HOMURA significantly outperforms strong LLM baselines, substantially reducing output length without compromising semantic adequacy and operating near a rate-distortion limit for fidelity compression.
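The "share of translations with a ratio above 1.0" statistic can be illustrated as follows. The definition used here is an assumption: the ratio is taken as the length of a round-trip text (source language to target language and back) divided by the length of the original, so that both texts are in the same language. The summary does not give the paper's exact formula or length unit, so the length function is left pluggable and defaults to character count.

```python
from typing import Callable, Sequence, Tuple

def roundtrip_expansion_ratio(original: str, roundtrip: str,
                              length: Callable[[str], int] = len) -> float:
    """Assumed definition: length of the round-trip text over the original's length."""
    base = length(original)
    if base == 0:
        raise ValueError("original text must be non-empty")
    return length(roundtrip) / base

def share_expanded(pairs: Sequence[Tuple[str, str]],
                   length: Callable[[str], int] = len) -> float:
    """Fraction of (original, roundtrip) pairs whose ratio exceeds 1.0."""
    if not pairs:
        raise ValueError("pairs must be non-empty")
    ratios = [roundtrip_expansion_ratio(o, r, length) for o, r in pairs]
    return sum(r > 1.0 for r in ratios) / len(ratios)

if __name__ == "__main__":
    # Toy strings standing in for real subtitle lines.
    toy_pairs = [("abcd", "abcdef"), ("abcd", "abc"), ("ab", "abc")]
    print(share_expanded(toy_pairs))
```

Comparing a text with its own round-trip keeps language-specific density out of the measurement, which is one way to read the claim that the ratio isolates model-induced redundancy.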

Shorter Translations via Reinforcement Learning and Sand-Glass

This research addresses a significant issue in machine translation: the tendency of large language models to produce overly verbose outputs, which hinders their application in time-sensitive contexts like subtitling and dubbing. To quantify this problem, the authors introduced Sand-Glass, a new benchmark that evaluates translation quality under strict syllable-level duration constraints, grounded in principles of information efficiency. They then developed HOMURA, a reinforcement learning framework designed to balance semantic accuracy with temporal feasibility by controlling output length through a novel reward system. Experimental results show that HOMURA substantially outperforms existing language models in achieving precise length control while maintaining semantic integrity. The study also identified an apparent limit to compression, a ratio of approximately 0.49 for Chinese-to-English translation, suggesting a minimum information density required to convey core meaning. The authors acknowledge that syllable count is only a textual approximation of actual acoustic duration and that current validation covers a limited set of language pairs. They propose future work to investigate how well the observed compression boundary generalizes and to extend the framework to end-to-end speech translation, incorporating both semantic and duration optimisation.

👉 More information

🗞 HOMURA: Taming the Sand-Glass for Time-Constrained LLM Translation via Reinforcement Learning

🧠 ArXiv: https://arxiv.org/abs/2601.10187