The growing size of modern artificial intelligence models makes efficient training and deployment difficult, prompting researchers to explore ways of compressing the key/value memory used by attention without sacrificing performance. Nandan Kumar Jha and Brandon Reagen, both from New York University, along with their colleagues, investigate how a compression technique called multi-head latent attention affects a transformer model’s capacity to learn during pretraining. Their work uses tools from random matrix theory to analyse the internal workings of these models, revealing that bottlenecks emerge in standard attention and in some compressed variants, limiting the model’s capacity and concentrating its learning in narrow subspaces. Importantly, the team demonstrates that one specific implementation, which shares rotary embedding components across attention heads, avoids these limitations, preserving a broader capacity for learning and offering a promising route towards more efficient and powerful artificial intelligence.

Latent Attention Impacts Spectral Dynamics and Learning

Modern large language models (LLMs) continue to grow in scale and capability, but their practical use is increasingly limited by inference latency that stems from the memory demands of the key/value (KV) cache rather than from raw computation. Recent architectures, such as DeepSeek-V2/V3, have introduced Multi-head Latent Attention (MLA), which compresses keys and values into lower-dimensional latent representations before attention is computed. This compression reduces KV cache size while maintaining strong performance. However, how this latent compression affects the internal dynamics of attention, and what it implies for learning and generalization, remains an open question.
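To make the memory argument concrete, the sketch below compares the per-token cache footprint of standard multi-head attention with that of a latent cache. It is a back-of-the-envelope illustration only: the function names and every dimension (12 layers, 12 heads, a 128-dimensional latent, a 32-dimensional shared rotary key, 2 bytes per element) are assumptions chosen for the example, not figures from the paper.

```python
def mha_cache_bytes_per_token(n_layers, n_heads, head_dim, bytes_per_elem=2):
    """Standard attention caches one key and one value vector per head, per layer."""
    return 2 * n_layers * n_heads * head_dim * bytes_per_elem


def latent_cache_bytes_per_token(n_layers, latent_dim, rope_dim, bytes_per_elem=2):
    """A latent cache stores one compressed vector plus one rotary key per layer."""
    return n_layers * (latent_dim + rope_dim) * bytes_per_elem


# Hypothetical configuration, chosen only for illustration.
mha_bytes = mha_cache_bytes_per_token(n_layers=12, n_heads=12, head_dim=64)
mla_bytes = latent_cache_bytes_per_token(n_layers=12, latent_dim=128, rope_dim=32)

print(f"MHA cache per token:    {mha_bytes} bytes")
print(f"Latent cache per token: {mla_bytes} bytes")
print(f"Reduction factor:       {mha_bytes / mla_bytes:.1f}x")
```

Under these assumed sizes the latent cache is roughly an order of magnitude smaller per token, which is the kind of saving that motivates asking what the compression costs in learning capacity.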

Random Matrix Theory (RMT) is a powerful tool for studying neural networks, but its application to the spectral behaviour of attention mechanisms under latent-space compression has been largely unexplored. This limits our understanding of MLA’s inherent biases and potential drawbacks. For example, do problematic spectral patterns, such as rank collapse, persist with MLA? Can latent compression alone prevent the growth of outliers, or are additional design choices necessary? In this work, we investigate how MLA affects the spectrum of attention, focusing on key questions: Where do spectral spikes emerge in MLA? Are they specific to certain layers or attention heads? Is latent compression alone enough to suppress outliers, or does the way rotary embeddings are applied matter? What impact do these spectral spikes have on rank collapse and the effective use of the latent space?

We developed a diagnostic framework based on Random Matrix Theory to analyze the spectrum of attention. The framework computes metrics over the squared singular values of the attention weights, separating the bulk of the spectrum from outlier spikes.
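One common RMT diagnostic of this kind compares the squared singular values of a weight matrix against the upper edge of the Marchenko-Pastur bulk and measures how much energy sits above it. The sketch below is a minimal illustration under stated assumptions, not the paper's implementation: the function name `mp_outlier_energy`, the variance estimate taken from the matrix entries, and the synthetic test matrices are all hypothetical choices made for this example.

```python
import numpy as np


def mp_outlier_energy(W):
    """Return (energy fraction above the Marchenko-Pastur edge, outlier count)."""
    n, m = W.shape
    # Squared singular values, scaled like the eigenvalues of W^T W / n.
    eigs = np.linalg.svd(W, compute_uv=False) ** 2 / n
    # Assumption: estimate the noise variance from the entries themselves.
    sigma2 = W.var()
    # Upper edge of the Marchenko-Pastur bulk for aspect ratio m / n.
    lambda_plus = sigma2 * (1.0 + np.sqrt(m / n)) ** 2
    outliers = eigs[eigs > lambda_plus]
    return float(outliers.sum() / eigs.sum()), int(outliers.size)


rng = np.random.default_rng(0)
noise = rng.standard_normal((512, 128))

# Plant a single strong rank-one direction on top of the noise.
u = rng.standard_normal(512)
v = rng.standard_normal(128)
u /= np.linalg.norm(u)
v /= np.linalg.norm(v)
spiked = noise + 100.0 * np.outer(u, v)

noise_frac, noise_count = mp_outlier_energy(noise)
spiked_frac, spiked_count = mp_outlier_energy(spiked)
print(f"noise:  outlier energy {noise_frac:.3f}, outliers {noise_count}")
print(f"spiked: outlier energy {spiked_frac:.3f}, outliers {spiked_count}")
```

A pure-noise matrix keeps almost all of its energy inside the bulk, while the planted direction shows up as a spike that carries a visible share of the total energy.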

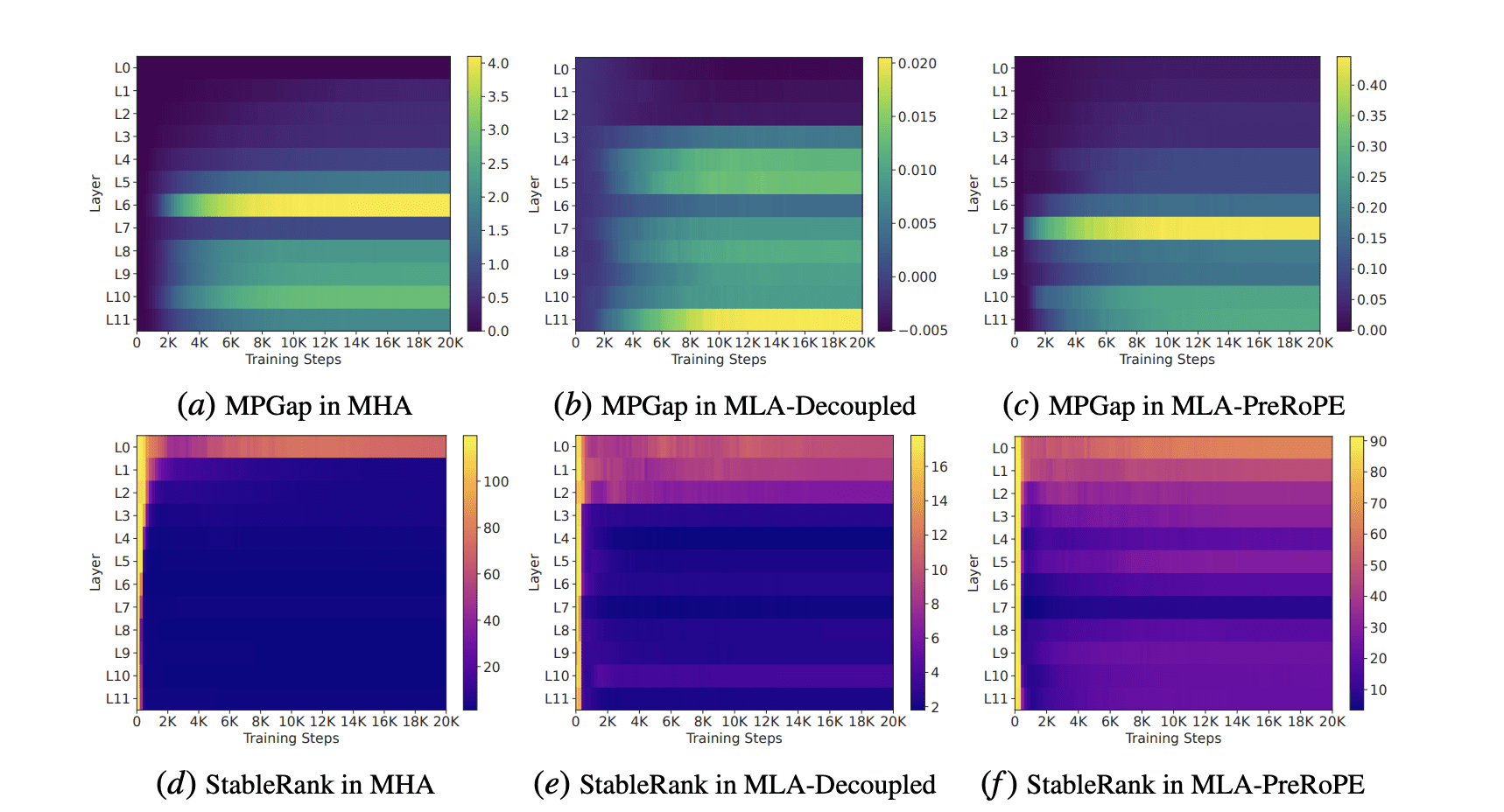

We applied this framework to benchmark classical Multi-Head Attention (MHA) and two MLA variants: MLA-PreRoPE (MLA-Pre), which applies rotary embeddings before compression, and MLA-Decoupled, which shares rotary components across heads. Our analysis reveals that MLA-Decoupled effectively suppresses spectral outliers and retains higher representational capacity, while MLA-PreRoPE remains prone to spikes. We also identified a concentration of spectral spikes in the middle layers of MHA.

Representational Stability Across Transformer Layers Demonstrated

Our results show that MLA methods distribute representational changes more evenly across layers, maintaining higher stable ranks throughout the network’s depth. Stable-rank heatmaps visually confirm this trend. MHA starts with a high rank but collapses after approximately five layers, mirroring the emergence of a spike in the related spectral metrics. MLA-PreRoPE partially recovers in deeper layers, retaining some of its initial rank, but still falls short of MLA-Decoupled. In contrast, MLA-Decoupled consistently sustains over 60% normalized stable rank across all layers and training steps, indicating stable representational capacity.
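For readers unfamiliar with the metric, stable rank is the squared Frobenius norm of a matrix divided by its squared spectral norm, so it falls towards 1 when a single direction dominates. The sketch below is illustrative only: dividing by the smaller matrix dimension to obtain a percentage is an assumption about how the normalization is done, and `normalized_stable_rank` is a hypothetical helper name.

```python
import numpy as np


def normalized_stable_rank(W):
    """Stable rank (||W||_F^2 / ||W||_2^2) divided by the smaller dimension of W."""
    s = np.linalg.svd(W, compute_uv=False)  # singular values, largest first
    stable_rank = (s ** 2).sum() / (s[0] ** 2)
    # Assumption: normalize by the maximum possible rank, min(rows, cols).
    return float(stable_rank / min(W.shape))


# All directions equally strong: the normalized stable rank is maximal.
full = normalized_stable_rank(np.eye(8))

# One direction carries everything: the stable rank collapses to 1 out of 8.
collapsed = normalized_stable_rank(np.outer(np.ones(8), np.ones(8)))

print(f"identity matrix: {full:.3f}")
print(f"rank-one matrix: {collapsed:.3f}")
```

In this reading, a value above 0.6 means the energy is spread over many directions, whereas a collapse corresponds to a few singular values dominating the spectrum.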

Analysis of the outlier energy distribution reveals how MHA, MLA-PreRoPE, and MLA-Decoupled differ in their spectral compression. Both MHA and MLA-PreRoPE exhibit a distribution centered around 0.75, suggesting persistent rank compression. MLA-Decoupled shows a markedly different trend: its distribution is both shifted downward and narrowed, indicating that a substantial portion of the outlier energy has been redistributed into the main body of the spectrum, preserving a broader and more effective rank across layers.

We also investigated how reallocating dimensions between content and rotary embeddings affects the outlier-energy spectrum in MLA-Decoupled. Deviating from a balanced allocation shifts spectral mass toward the outliers, signaling the reappearance of modest spikes. Removing positional encoding entirely has a more severe effect: most of the energy collapses into a few dominant directions, sharply reducing representational diversity. Perplexity comparisons summarize the final performance across MHA and the MLA variants with different RoPE configurations. The balanced Dec-0.50 setting matches MHA, while imbalanced settings increase perplexity. The NoPE variant suffers a large degradation, highlighting the importance of rotary embeddings. Taken together, MLA-Decoupled eliminates the mid-layer spike and sustains more than 60% stable rank across depth, while the ablations indicate that positional encoding is essential for MLA and that a 50:50 content-to-position split is key to avoiding the spectral bottlenecks that impair model quality.

Spectral Robustness via Embedding Sharing

Our results underscore that how rotary embeddings are applied is just as critical as where compression occurs. Sharing rotary components across heads mitigates spectral fragmentation and preserves representational capacity. In conclusion, Random Matrix Theory analysis demonstrates that sharing rotary embeddings across heads eliminates spectral spikes, maintains a stable spectrum, and preserves over 60% stable rank in MLA-Decoupled. In contrast, classical MHA and MLA-PreRoPE remain spike-dominated and lose around 70% of their spectral energy to a few dominant directions.

This work aims to bridge architectural efficiency with spectral interpretability. By combining memory-efficient attention mechanisms with Random Matrix Theory-based diagnostics, it uncovers design insights for building future LLMs that are not only faster but also spectrally robust. These findings are based on a 12-layer LLaMA-130M model trained for 20K steps on 2.2B tokens from the C4 corpus. Larger models, longer training schedules, or additional spectral metrics may reveal new behaviours. Extending the analysis to billion-scale models and correlating spectral properties with downstream quality remain open directions for future work.

👉 More information

🗞 A Random Matrix Theory Perspective on the Learning Dynamics of Multi-head Latent Attention

🧠 DOI: https://doi.org/10.48550/arXiv.2507.09394