Large language models have become indispensable tools across finance, medicine, and creative industries, yet their reasoning remains fragile. When asked to explain a multi‑step deduction, models often produce chain‑of‑thought strings that are verbose, sometimes contradictory, and difficult to audit. The challenge is to harness a model’s raw pattern‑matching power while ensuring that its conclusions are both trustworthy and efficient. A recent line of research proposes to treat reasoning as a combinatorial optimisation problem, leveraging quantum processors to sift through thousands of candidate “reasons” and assemble a coherent answer. This approach promises not only cleaner explanations but also a level of speed and accuracy that classical algorithms struggle to match.

From fragments to optimisation

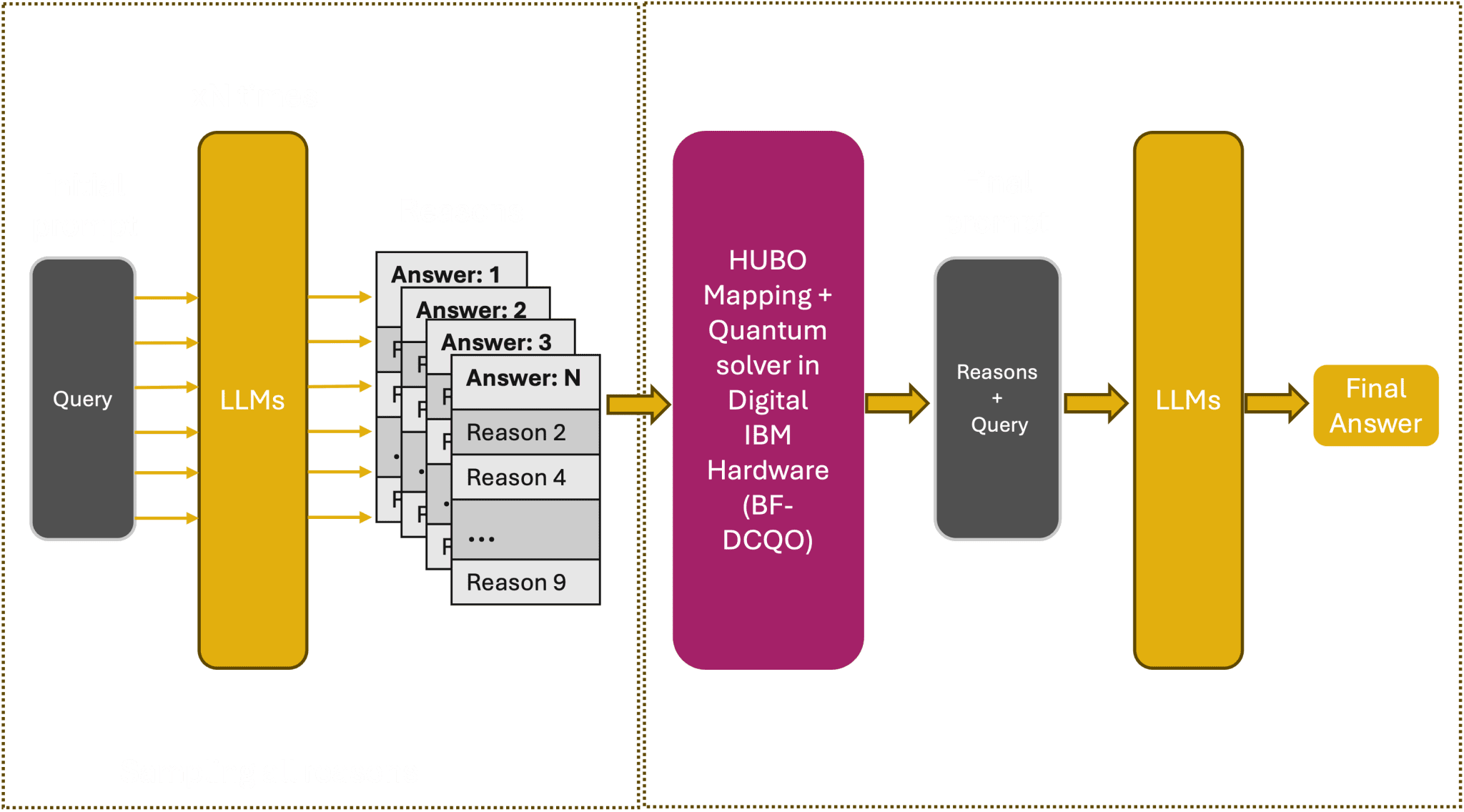

The first step in the pipeline is to generate a pool of candidate explanations. A prompt is sent to a state‑of‑the‑art model such as GPT‑4, and the system extracts every distinct reasoning fragment (a sentence or short clause) from the output. Each fragment is treated as a binary decision variable: it is either selected or discarded. The collection of variables is then encoded into a Higher‑Order Unconstrained Binary Optimization (HUBO) Hamiltonian. Linear terms reward fragments that individually appear important; quadratic terms penalise pairs of fragments that conflict; higher‑order terms enforce coherence among groups of three or more fragments. In practice, a modest set of 120 candidate reasons already allows roughly 7,000 pairwise interactions (120 × 119 / 2 = 7,140) and 280,000 triplet terms. As the number of fragments grows, the Hamiltonian becomes increasingly dense, and classical solvers such as simulated annealing struggle to navigate its flat, degenerate energy landscape.
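The term counts above are upper bounds from simple counting: n fragments admit at most n(n − 1)/2 pairs and n(n − 1)(n − 2)/6 triplets. The sketch below computes those bounds and evaluates the energy of one selection. It assumes a HUBO stored as a dictionary from index tuples to coefficients, with energy minimised; that representation and every coefficient are hypothetical illustrations, not values from the original work.

```python
from math import comb

# Hypothetical representation: {tuple_of_fragment_indices: coefficient}.
# E(x) = sum over terms of coeff * product of x_i, and the solver minimises E,
# so negative coefficients act as rewards and positive ones as penalties.


def hubo_energy(terms: dict, x: list) -> float:
    """Energy of a 0/1 selection x (1 = fragment kept, 0 = discarded)."""
    energy = 0.0
    for idx, coeff in terms.items():
        if all(x[i] for i in idx):  # the product of x_i is 1 only if every x_i is 1
            energy += coeff
    return energy


# Maximum number of quadratic and cubic terms for 120 fragments.
n = 120
print(comb(n, 2), comb(n, 3))  # 7140 280840

# Toy instance with four fragments and made-up coefficients.
toy = {
    (0,): -1.0, (1,): -0.8, (2,): -0.6, (3,): -0.5,  # linear: individual importance
    (0, 1): 1.5,                                     # quadratic: fragments 0 and 1 conflict
    (1, 2, 3): -0.4,                                 # cubic: fragments 1, 2, 3 cohere
}
print(hubo_energy(toy, [1, 0, 1, 1]))  # keep fragments 0, 2 and 3
```

Because the number of possible triplets grows as n³, the dense cubic part quickly dominates the size of the problem.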

The HUBO formulation is far richer than simple majority voting or threshold filtering. By explicitly modelling pairwise consistency and higher‑order relationships, the optimisation steers the final answer towards reasons that are individually relevant, mutually consistent, and diverse. This reduces redundancy, improves interpretability, and aligns the model’s output with human expectations of logical coherence. The key insight is that reasoning can be reframed as a search for the lowest‑energy configuration of a binary system, the class of problem that quantum annealers and gate‑based quantum optimisation algorithms are built to tackle.
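To make “lowest‑energy configuration” concrete, the sketch below finds the ground state of a hypothetical four‑fragment HUBO by checking every possible selection. The coefficients are made up for illustration, and exhaustive search is only a reference method here, not the solver the article describes.

```python
from itertools import product


def hubo_energy(terms, x):
    """E(x) = sum of coeff * prod(x_i) over all terms; lower is better."""
    return sum(c for idx, c in terms.items() if all(x[i] for i in idx))


def brute_force_ground_state(terms, n):
    """Scan all 2**n selections and return the lowest-energy one with its energy."""
    best_x, best_e = None, float("inf")
    for x in product((0, 1), repeat=n):
        e = hubo_energy(terms, x)
        if e < best_e:
            best_x, best_e = x, e
    return best_x, best_e


# Hypothetical toy instance: same shape as a real HUBO, invented numbers.
toy = {
    (0,): -1.0, (1,): -0.8, (2,): -0.6, (3,): -0.5,
    (0, 1): 1.5,      # fragments 0 and 1 conflict
    (1, 2, 3): -0.4,  # fragments 1, 2 and 3 reinforce each other
}
print(brute_force_ground_state(toy, 4))  # selection (0, 1, 1, 1), energy close to -2.3
```

The higher‑order term changes the answer: without the (1, 2, 3) coherence bonus, the best selection would be (1, 0, 1, 1) at −2.1, because fragment 0 is individually the strongest. Exhaustive search is only feasible for toy sizes, since 120 fragments already give 2^120 (about 1.3 × 10^36) possible selections.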

Quantum hardware turns the tide

The bottleneck for classical optimisation is the combinatorial explosion of interactions. Quantum processors, however, can encode the entire Hamiltonian, higher‑order terms included, in the interactions between their qubits and explore candidate selections in superposition rather than one at a time. The new Bias‑Field Digitised Counterdiabatic Quantum Optimisation (BF‑DCQO) algorithm runs on today’s digital (gate‑based) quantum machines, including IBM’s superconducting chips and IonQ’s trapped‑ion devices. Unlike adiabatic methods, which must evolve slowly and therefore need long coherence times, BF‑DCQO adds a carefully engineered counterdiabatic term that suppresses transitions out of the instantaneous ground state, allowing the system to approach its ground state in fewer steps.
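BF‑DCQO itself, with its bias‑field updates and many‑qubit counterdiabatic terms, is not reproduced here. As an illustration of the counterdiabatic idea only, the sketch below sweeps a single qubit from the ground state of −σx to that of −σz in a deliberately short time, digitised into small time steps. For one qubit the counterdiabatic term is known exactly: a σy field of strength (dθ/dt)/2, where θ is the rotation angle of the driving field. The Hamiltonian, sweep time and step count are hypothetical choices.

```python
import numpy as np

# Pauli matrices.
SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)


def step_unitary(hx, hy, hz, dt):
    """exp(-i (hx*SX + hy*SY + hz*SZ) dt), using the closed form for one qubit."""
    norm = np.sqrt(hx**2 + hy**2 + hz**2)
    axis = (hx * SX + hy * SY + hz * SZ) / norm
    return np.cos(norm * dt) * np.eye(2) - 1j * np.sin(norm * dt) * axis


def sweep(total_time, steps, counterdiabatic):
    """Digitised sweep of H(lam) = -(1 - lam) SX - lam SZ, with lam = t / total_time.

    Returns the fidelity of the final state with the ground state of -SZ.
    """
    dt = total_time / steps
    lam_dot = 1.0 / total_time
    psi = np.array([1, 1], dtype=complex) / np.sqrt(2)  # ground state of -SX
    for k in range(steps):
        lam = (k + 0.5) / steps  # sample H at the middle of each time slice
        hx, hy, hz = -(1.0 - lam), 0.0, -lam
        if counterdiabatic:
            # theta = angle of the field (hx, hz); here d theta / d lam = -1 / ((1-lam)^2 + lam^2).
            # The exact one-qubit CD term is (d theta / dt) / 2 along SY.
            hy = 0.5 * lam_dot * (-1.0 / ((1.0 - lam) ** 2 + lam**2))
        psi = step_unitary(hx, hy, hz, dt) @ psi
    ground_final = np.array([1, 0], dtype=complex)  # ground state of -SZ
    return abs(np.vdot(ground_final, psi)) ** 2


print("plain sweep: ", sweep(0.5, 200, counterdiabatic=False))
print("with CD term:", sweep(0.5, 200, counterdiabatic=True))
```

With such a short sweep, the plain evolution leaves the qubit far from the target state, while the counterdiabatic field brings the fidelity close to 1 using the same number of digitised steps. On many qubits the exact term is no longer available in closed form, which is why practical algorithms use approximate counterdiabatic terms.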

On IBM’s current hardware, the team has solved HUBO instances involving 156 candidate reasons, mapping each decision variable to its own physical qubit (so each such instance needs at least 156 qubits). Because problem size is tied to qubit count, forthcoming IBM chips (such as the Nighthawk 2D array and the modular Flamingo architecture) promise to expand the reach of the method dramatically. As the hardware matures, the method should be able to tackle reasoning tasks large enough to strain classical solvers.
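Mapping each decision variable to a qubit typically means rewriting the binary HUBO in terms of spins, because a qubit measured in the computational basis returns an eigenvalue of σz. The sketch below assumes the common convention x_i = (1 − z_i)/2, so that reading |1⟩ (z_i = −1) means “fragment selected”; the function names and toy coefficients are hypothetical. It expands each monomial and checks that both forms give the same energy for every selection.

```python
from collections import defaultdict
from itertools import combinations, product


def hubo_to_spin(terms):
    """Substitute x_i = (1 - z_i) / 2 into sum_S c_S * prod_{i in S} x_i.

    Returns {tuple_of_qubit_indices: coefficient}; the empty tuple is the constant offset.
    """
    spin = defaultdict(float)
    for idx, coeff in terms.items():
        idx = tuple(sorted(idx))
        scale = coeff / 2 ** len(idx)
        # prod (1 - z_i) expands to sum over subsets T of (-1)^|T| * prod_{i in T} z_i
        for r in range(len(idx) + 1):
            for subset in combinations(idx, r):
                spin[subset] += scale * (-1) ** r
    return dict(spin)


def hubo_energy(terms, x):
    return sum(c for idx, c in terms.items() if all(x[i] for i in idx))


def spin_energy(spin_terms, z):
    total = 0.0
    for idx, coeff in spin_terms.items():
        prod = 1
        for i in idx:
            prod *= z[i]
        total += coeff * prod
    return total


toy = {(0,): -1.0, (1,): -0.8, (2,): -0.6, (3,): -0.5, (0, 1): 1.5, (1, 2, 3): -0.4}
spin = hubo_to_spin(toy)

# Sanity check: both forms agree on all 2**4 selections (x_i = 1 <-> z_i = -1).
for x in product((0, 1), repeat=4):
    z = [1 - 2 * xi for xi in x]
    assert abs(hubo_energy(toy, x) - spin_energy(spin, z)) < 1e-9
print(sorted(spin.items(), key=lambda kv: (len(kv[0]), kv[0])))
```

A cubic HUBO term becomes a three‑body Z⊗Z⊗Z interaction, which current hardware cannot apply natively; it has to be compiled into one‑ and two‑qubit gates, which is part of why dense higher‑order instances are demanding even on quantum processors.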

Benchmark tests on the BIG‑Bench Extra Hard suite demonstrate the practical impact. On the DisambiguationQA task, the quantum‑enhanced model achieved 61% accuracy, surpassing reasoning models such as OpenAI’s o3‑high (58.3%) and DeepSeek R1 (50.0%). Similar gains appeared on the Causal Understanding and NYCC tasks, where the quantum‑augmented approach consistently outperformed its purely classical counterparts. These results point to a promising quantum‑advantage trajectory for complex, multi‑step inference.

Toward a new era of reasoning

The convergence of large language models and quantum optimisation heralds the dawn of what some call Quantum Intelligence (QI). In this paradigm, a quantum processor does not replace the neural network; it augments its reasoning layer by solving the combinatorial optimisation that underpins logical coherence. The result is a system that can weigh thousands of candidate explanations against one another, prune inconsistencies, and assemble an answer that is both accurate and explainable.
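The division of labour described above (the language model proposes fragments, an optimiser selects a coherent subset) can be sketched end to end. Everything here is hypothetical: the fragments stand in for LLM output, the coefficients are invented, and classical simulated annealing, the baseline solver mentioned earlier, stands in for the quantum optimiser. It is not BF‑DCQO.

```python
import math
import random

# Hypothetical fragments standing in for sentences extracted from an LLM's output.
FRAGMENTS = [
    "The invoice total exceeds the approval limit.",
    "The invoice total is within the approval limit.",
    "Invoices above the limit need a second signature.",
    "No second signature is on file.",
]

# Hypothetical HUBO coefficients (minimised: negative = reward, positive = penalty).
TERMS = {
    (0,): -1.0, (1,): -0.7, (2,): -0.8, (3,): -0.6,
    (0, 1): 2.0,      # fragments 0 and 1 contradict each other
    (0, 2, 3): -0.5,  # fragments 0, 2 and 3 together form a coherent argument
}


def energy(x):
    return sum(c for idx, c in TERMS.items() if all(x[i] for i in idx))


def simulated_annealing(n, sweeps=500, t_start=2.0, t_end=0.01, seed=0):
    """Classical stand-in for the quantum optimiser: single-bit-flip Metropolis moves."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    e = energy(x)
    best_x, best_e = x[:], e
    for s in range(sweeps):
        temp = t_start * (t_end / t_start) ** (s / (sweeps - 1))  # geometric cooling
        for i in range(n):
            x[i] ^= 1  # propose flipping fragment i in or out
            new_e = energy(x)
            if new_e <= e or rng.random() < math.exp((e - new_e) / temp):
                e = new_e
                if e < best_e:
                    best_x, best_e = x[:], e
            else:
                x[i] ^= 1  # reject: undo the flip
    return best_x, best_e


selection, best = simulated_annealing(len(FRAGMENTS))
print(" ".join(f for f, keep in zip(FRAGMENTS, selection) if keep))
```

On this toy instance the lowest‑energy selection keeps fragments 0, 2 and 3 and drops the contradicting fragment 1, yielding a short, consistent chain of reasoning. Swapping the annealer for a quantum solver would change only the `simulated_annealing` call, which is the sense in which the processor augments rather than replaces the language model.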

The implications extend beyond academic benchmarks. In regulated domains such as finance or healthcare, auditors will demand transparent, verifiable reasoning. A QI‑powered model can supply a concise, logically consistent chain of thought that satisfies both regulatory standards and human scrutiny. In creative fields, writers and designers could use the system to explore alternative narrative paths, with the quantum optimiser selecting the most compelling threads.

The technology is still in its infancy, but the roadmap is clear. As commercial quantum processors grow in qubit count and coherence, the size of the HUBO Hamiltonian that can be handled will increase, enabling the solution of ever more complex reasoning tasks. Eventually, the quantum optimiser could operate in real time, delivering instant, trustworthy explanations for any query. Whether this will reshape our relationship with AI, or simply enhance the tools at our disposal, remains to be seen. What is certain is that the fusion of large language models with quantum optimisation is already reshaping the frontier of artificial reasoning.