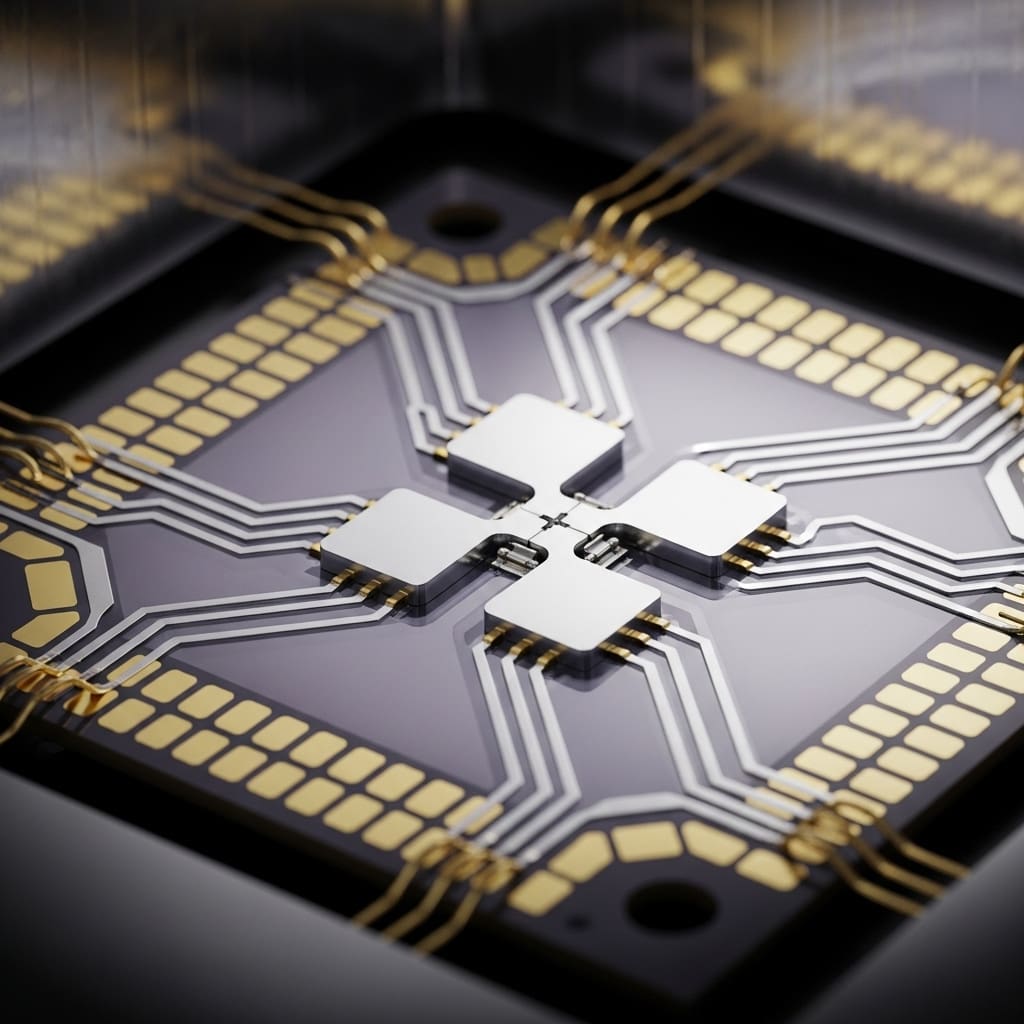

Researchers are increasingly focused on superconducting qubit devices as a leading architecture for scalable quantum computation, owing to their maturity and compatibility with existing semiconductor manufacturing techniques. Hiu Yung Wong from San Jose State University and colleagues present a comprehensive review of these devices, examining the fundamental principles of superconductivity and Josephson junctions that underpin their operation. This work is significant because it not only details the various qubit designs and entanglement gate schemes currently employed, but also addresses the critical challenges hindering progress, such as two-level system defects that limit coherence. The authors also explore strategies for large-scale integration, drawing parallels with the electronic design automation techniques established in conventional semiconductor technology, a step towards more powerful and practical quantum computers.

Superconducting qubits offer a leading route to scalable, fault-tolerant quantum processors

Quantum computing research is increasingly focused on the engineering challenge of building systems with enough qubits for practical computation. A recent review details the current state of superconducting qubit technology, highlighting the critical need for large-scale integration to realise a truly useful quantum computer.

The work comprehensively examines the foundational elements, from qubit design and control to error mitigation strategies, paving the way for more robust and scalable quantum processors. This analysis underscores the progress made in superconducting qubits and identifies key areas for future development.

Superconducting qubit computers represent a leading architecture for large-scale quantum integration due to their compatibility with existing semiconductor manufacturing processes. The study meticulously reviews the essential components of these systems, beginning with the fundamental principles of quantum computing, superconductivity, and Josephson junctions.

It then delves into the five DiVincenzo criteria, the necessary conditions for a functional quantum computer, assessing how superconducting qubits meet these requirements. Various qubit types formed with Josephson junctions are discussed, revealing the trade-offs between design parameters and noise immunity.

Furthermore, the research explores advanced techniques for achieving entanglement gate operations, a major hurdle in building efficient, fault-tolerant quantum computers. Its analysis of readout engineering covers Purcell filters, which suppress qubit energy loss through the readout resonator, and quantum-limited amplifiers, which add close to the minimum noise allowed by quantum mechanics; together, these components improve qubit measurement fidelity.

The study also addresses the main limit on qubit coherence time: two-level system (TLS) defects, microscopic defects in amorphous materials such as surface oxides and tunnel barriers that can absorb energy from a qubit. It reviews their nature and the progress made in mitigating them. Achieving a useful quantum computer necessitates not only fulfilling DiVincenzo's criteria but also implementing large-scale integration with quantum error correction.

Unlike the bits in conventional CMOS logic, qubit states are highly sensitive to noise and errors, demanding robust quantum error correction schemes. Current estimates suggest that one fault-tolerant logical qubit requires between 100 and 1,000 physical qubits, depending on the desired error rate. To tackle complex problems and demonstrate quantum advantage, systems will likely need more than 1,000 logical qubits, which translates to at least 1 million, and potentially up to 100 million, physical qubits.
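The dependence of the physical-qubit overhead on the desired error rate can be sketched with a common surface-code heuristic. The sketch below is not taken from the review: it assumes a logical error rate of roughly A·(p/p_th)^((d+1)/2) for code distance d, an illustrative prefactor A = 0.1, a threshold p_th = 1%, and a rotated surface-code layout using 2d² − 1 physical qubits per logical qubit. The function name is hypothetical.

```python
def surface_code_overhead(p_phys: float, p_target: float,
                          p_th: float = 1e-2, prefactor: float = 0.1,
                          max_distance: int = 101) -> tuple[int, int]:
    """Return (code distance d, physical qubits per logical qubit).

    Assumed heuristic: p_L ~ prefactor * (p_phys / p_th) ** ((d + 1) / 2); the
    smallest odd d meeting p_target is chosen. The qubit count assumes a rotated
    surface code: d*d data qubits plus d*d - 1 measurement qubits = 2*d*d - 1.
    """
    if not 0 < p_phys < p_th:
        raise ValueError("physical error rate must be positive and below threshold")
    for d in range(3, max_distance + 1, 2):
        # (d + 1) // 2 is an exact integer exponent for odd d.
        p_logical = prefactor * (p_phys / p_th) ** ((d + 1) // 2)
        if p_logical <= p_target:
            return d, 2 * d * d - 1
    raise ValueError("target logical error rate not reached within max_distance")


# Illustrative usage: assumed 0.2 % physical error rate, 1,000 logical qubits.
for target in (1e-6, 1e-12):
    d, per_logical = surface_code_overhead(2e-3, target)
    print(f"target {target:g}: d = {d}, {per_logical} per logical qubit, "
          f"{1000 * per_logical:,} physical qubits in total")
```

Under these assumptions, a target logical error rate of 10⁻⁶ needs d = 15 (449 physical qubits per logical qubit), while 10⁻¹² needs d = 31 (1,921). At 1,000 logical qubits the stricter target already approaches 2 million physical qubits, the same order of magnitude as the estimates quoted above.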

The review draws parallels between integrating superconducting qubits at scale and the electronic design automation (EDA) techniques established in semiconductor manufacturing, arguing that applying EDA to superconducting qubit computer design is crucial for achieving the necessary large-scale integration. This work provides a comprehensive overview of the field, bridging theoretical foundations with practical implementation strategies and outlining a clear path towards building powerful, scalable quantum computers.

Superconducting qubit characterisation and high-fidelity gate implementation are crucial for scalable quantum computing

A 72-qubit superconducting processor forms the foundation of this work, enabling detailed investigations into the requirements for scalable quantum computation. Researchers focused on characterizing the performance of superconducting qubits and the technologies necessary to meet DiVincenzo’s criteria for a functional quantum computer.

The study systematically examined qubit types, entanglement gate operations, readout engineering, and defect analysis to determine the physical qubit count needed for effective quantum error correction. Initially, the research explored various superconducting qubit designs incorporating Josephson junctions, assessing trade-offs between design parameters and noise immunity.

The research then examined gate operations within universal gate sets such as {H, T, S, CNOT}. One-qubit gates, implemented with microwave pulses delivered through capacitively coupled drive lines, already exceed 99.9% fidelity, whereas two-qubit entanglement gates remain a critical bottleneck in achieving efficient quantum computing. The team then turned to readout engineering, implementing Purcell filters and quantum-limited amplifiers to enhance signal detection.
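The {H, T, S, CNOT} gate set can be written out explicitly as matrices. The NumPy sketch below is an illustration rather than code from the review: it defines the four gates, and the hypothetical helpers show that H followed by CNOT turns |00⟩ into the entangled Bell state (|00⟩ + |11⟩)/√2, using the fact that a two-qubit pure state is a product state exactly when its 2×2 coefficient matrix has rank 1.

```python
import numpy as np

# One-qubit gates of the {H, T, S, CNOT} set in the computational basis.
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
S = np.array([[1, 0], [0, 1j]], dtype=complex)
T = np.array([[1, 0], [0, np.exp(1j * np.pi / 4)]], dtype=complex)

# CNOT with the first (left) qubit as control; basis order |00>, |01>, |10>, |11>.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)


def bell_state() -> np.ndarray:
    """Start from |00>, apply H to the control qubit, then apply CNOT."""
    ket00 = np.array([1, 0, 0, 0], dtype=complex)
    return CNOT @ np.kron(H, np.eye(2)) @ ket00


def is_entangled(state: np.ndarray) -> bool:
    """A two-qubit pure state is a product state iff its 2x2 coefficient matrix has rank 1."""
    return bool(np.linalg.matrix_rank(state.reshape(2, 2)) > 1)


bell = bell_state()
```

Applying T twice gives S and applying S twice gives Z, which is why only T and the Clifford gates H, S and CNOT are needed for universality.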

To quantify qubit performance, the energy relaxation time T1 and the pure dephasing time (written T2 or Tφ) were determined. The experimentally measured decoherence time T2* combines the two through 1/T2* = 1/(2T1) + 1/Tφ. Because T1 governs bit-flip (energy-relaxation) errors and Tφ governs phase-flip errors, these times are crucial parameters in assessing qubit robustness.
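The relation between the coherence times can be evaluated directly. This sketch takes the pure dephasing time as Tφ (the T2 of the text's notation). Under a simple exponential-decay assumption, it also gives rough idle-error estimates: the relaxation probability 1 − e^(−t/T1), and a phase-flip probability (1 − e^(−t/Tφ))/2. The function names are hypothetical.

```python
import math


def t2_star(t1: float, t_phi: float) -> float:
    """Total decoherence time from 1/T2* = 1/(2*T1) + 1/T_phi (units follow the inputs)."""
    return 1.0 / (1.0 / (2.0 * t1) + 1.0 / t_phi)


def idle_error_probabilities(t: float, t1: float, t_phi: float) -> tuple[float, float]:
    """Rough (bit_flip, phase_flip) probabilities for an idle period of length t.

    Assumes simple exponential decay: an excited qubit relaxes with probability
    1 - exp(-t/T1), and coherence decays as exp(-t/T_phi), equivalent to a
    phase-flip probability of (1 - exp(-t/T_phi)) / 2.
    """
    bit_flip = 1.0 - math.exp(-t / t1)
    phase_flip = 0.5 * (1.0 - math.exp(-t / t_phi))
    return bit_flip, phase_flip


# Illustrative values in microseconds: T1 = 100 us, T_phi = 200 us.
t2s = t2_star(100.0, 200.0)
```

For these values T2* = 100 µs. With no pure dephasing (Tφ → ∞, e.g. `math.inf`) T2* reaches its upper limit of 2T1, which is why T1 caps the achievable coherence.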

The study also detailed active qubit reset, in which the qubit is read out and a NOT (X) gate is applied only if it is found in the excited state. This initialises the qubit to the ground state far faster than passive thermal reset, which must wait several T1 periods for the qubit to relax. Microwave control signals generated by room-temperature electronics were attenuated on their way to the quantum chip, which operates at 10 mK, to suppress thermal noise from the warmer stages; there, they interacted with the qubits, manipulating their state according to the system's Hamiltonian.
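The speed advantage of active reset, and its main limitation, can be sketched with two simple models. The first assumes the excited-state population decays exponentially with T1 towards a perfectly cold ground state. The second assumes the only errors in measure-then-conditional-X reset are readout misassignments. Both models, the numbers, and the function names are illustrative assumptions rather than results from the review.

```python
import math


def passive_reset_time(t1: float, p_initial: float, p_target: float) -> float:
    """Time to wait until P1(t) = p_initial * exp(-t / t1) falls to p_target.

    Assumes pure exponential relaxation to a perfectly cold ground state, i.e. the
    residual thermal excited-state population is ignored. Units follow t1.
    """
    return t1 * math.log(p_initial / p_target)


def active_reset_residual(p1: float, err_1_read_as_0: float,
                          err_0_read_as_1: float) -> float:
    """Excited-state population left after one measure-then-conditional-X cycle.

    A qubit in |1> misread as 0 is left in |1>; a qubit in |0> misread as 1 is
    flipped into |1> by the conditional X. Gate errors and transitions during
    the measurement itself are ignored.
    """
    return p1 * err_1_read_as_0 + (1.0 - p1) * err_0_read_as_1


# Illustrative numbers: T1 = 100 us, start fully excited, aim for 0.1 % residual.
wait_us = passive_reset_time(100.0, 1.0, 1e-3)
residual = active_reset_residual(1.0, 1e-3, 1e-3)
```

Here passive reset takes 100 µs × ln(1000) ≈ 691 µs. Active reset takes roughly one readout plus one gate regardless of T1, but its residual excited population is bounded by the readout error rates rather than by how long one waits.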

Readout signals, which carry only a few photons, were transmitted through a co-planar waveguide to a quantum-limited amplifier and then amplified further at higher-temperature stages. Building on this analysis of the full system, the review estimates that a useful quantum computer will likely require at least one million, and potentially up to 100 million, physical qubits to operate effectively and implement quantum error correction.

Physical qubit scaling for fault-tolerant quantum error correction remains a significant challenge

Researchers are establishing the substantial physical qubit requirements for fault-tolerant quantum computation. Current investigations suggest that a practical quantum computer will necessitate a minimum of one million physical qubits, with the potential need extending to 100 million qubits to effectively implement quantum error correction protocols.

This finding underscores the significant scalability challenges inherent in realising functional quantum processors. The work details the foundational elements of quantum computing, superconductivity, and Josephson junctions, laying the groundwork for understanding the criteria that superconducting qubits must meet to function as viable computational units.

A Josephson junction is formed by a superconductor-insulator-superconductor stack. The Josephson equations relate the supercurrent density through the junction to the phase difference between the superconducting condensates in the two electrodes, and the voltage across the junction to the rate at which that phase difference changes.
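In their standard form, the two Josephson relations are given below. Here I_c is the critical current, φ is the phase difference across the junction, V is the junction voltage, and e is the electron charge; dividing the current by the junction area gives the current-density form J = J_c sin φ. Differentiating the first relation and substituting the second shows why the junction behaves as a nonlinear inductor, with Φ₀ = h/2e the flux quantum:

$$
I = I_c \sin\varphi, \qquad V = \frac{\hbar}{2e}\,\frac{d\varphi}{dt}
$$

$$
\frac{dI}{dt} = I_c \cos\varphi\,\frac{d\varphi}{dt} = \frac{2e\,I_c\cos\varphi}{\hbar}\,V
\;\;\Longrightarrow\;\;
V = L_J\,\frac{dI}{dt}, \qquad L_J = \frac{\hbar}{2e\,I_c\cos\varphi} = \frac{\Phi_0}{2\pi I_c\cos\varphi}
$$

At zero bias (φ = 0) this reduces to L_J = Φ₀/(2π I_c), so a junction with a larger critical current has a smaller inductance.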

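The critical current of a Josephson junction, the largest supercurrent it can carry without developing a voltage, is linked to the junction's room-temperature tunnelling resistance through a defined relationship. This is commonly the Ambegaokar-Baratoff relation I_c R_n = πΔ/(2e), where Δ is the superconducting gap and the room-temperature resistance serves as a proxy for the normal-state resistance R_n. Josephson junction inductance is predominantly kinetic, stemming from current flow through the weak link; it scales inversely with the critical current, which in turn grows with junction area.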
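The link between tunnelling resistance, critical current, and inductance can be made concrete. This sketch assumes the zero-temperature Ambegaokar-Baratoff relation I_c R_n = πΔ/(2e) and treats the measured room-temperature resistance as R_n. It uses an illustrative aluminium gap of Δ ≈ 180 µeV. The function names are hypothetical.

```python
import math

E_CHARGE = 1.602176634e-19          # elementary charge, C
H_PLANCK = 6.62607015e-34           # Planck constant, J s
PHI_0 = H_PLANCK / (2 * E_CHARGE)   # magnetic flux quantum, Wb


def critical_current_from_resistance(r_n: float, gap_ev: float) -> float:
    """Assumed Ambegaokar-Baratoff relation at T = 0: I_c = pi * Delta / (2 e R_n).

    gap_ev is Delta in electronvolts, so Delta / e in volts is numerically gap_ev.
    """
    return math.pi * gap_ev / (2.0 * r_n)


def josephson_inductance(i_c: float) -> float:
    """Zero-bias Josephson inductance L_J = Phi_0 / (2 pi I_c), in henries."""
    return PHI_0 / (2.0 * math.pi * i_c)


def josephson_energy_hz(i_c: float) -> float:
    """Josephson energy E_J = Phi_0 * I_c / (2 pi), returned as E_J / h in Hz."""
    return PHI_0 * i_c / (2.0 * math.pi * H_PLANCK)


# Illustrative junction: R_n = 10 kOhm, aluminium gap of about 180 ueV.
ic = critical_current_from_resistance(10e3, 180e-6)
lj = josephson_inductance(ic)
ej = josephson_energy_hz(ic)
```

For these inputs, I_c ≈ 28 nA, L_J ≈ 11.6 nH, and E_J/h ≈ 14 GHz. Halving the resistance, roughly equivalent to doubling the junction area, doubles I_c and halves L_J.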

A larger critical current requires a larger junction area, which also raises the junction capacitance, so this choice shapes both the inductive and capacitive energies available for qubit design. Fabrication techniques such as the Dolan bridge and Manhattan processes rely on double-angle shadow evaporation with in situ oxidation, though these methods can introduce defects that reduce qubit coherence time.
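The trade-off between junction parameters and qubit properties can be illustrated for a transmon, one common junction-based qubit. This uses the standard approximation f01 ≈ √(8E_J E_C) − E_C with E_C = e²/2C, valid only deep in the transmon regime. The usage values are illustrative assumptions: a total capacitance of 80 fF (in a transmon the junction is shunted by a much larger capacitor) and E_J/h = 15 GHz. The function names are hypothetical.

```python
import math

E_CHARGE = 1.602176634e-19  # elementary charge, C
H_PLANCK = 6.62607015e-34   # Planck constant, J s


def charging_energy_hz(c_total: float) -> float:
    """E_C / h in Hz for total capacitance c_total in farads, with E_C = e^2 / (2 C)."""
    return E_CHARGE ** 2 / (2.0 * c_total * H_PLANCK)


def transmon_f01_hz(ej_hz: float, ec_hz: float) -> float:
    """Approximate transmon 0-1 frequency, f01 ~ sqrt(8 E_J E_C) - E_C (energies in Hz).

    Only meaningful when E_J / E_C is large (several tens or more).
    """
    return math.sqrt(8.0 * ej_hz * ec_hz) - ec_hz


# Illustrative transmon: 80 fF total capacitance, E_J / h = 15 GHz.
ec = charging_energy_hz(80e-15)
f01 = transmon_f01_hz(15e9, ec)
```

These values give E_C/h ≈ 242 MHz, E_J/E_C ≈ 62, and f01 ≈ 5.15 GHz. A larger E_J/E_C improves immunity to charge noise. Reaching it by lowering E_C, however, also shrinks the anharmonicity (approximately −E_C), which limits how fast gates can be driven without leaking to higher levels.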

Alternative approaches, such as utilising a trilayer Nb/Al-AlOx/Nb stack or the overlap junction with SiO2 scaffolding, aim to improve uniformity and mitigate defect formation. These advanced fabrication methods have demonstrated promising coherence times, with one study achieving 57 microseconds.

Current limitations and future directions for scalable superconducting qubits include materials science, control complexity, and error correction schemes

Superconducting qubit computers represent a leading architecture for scalable quantum computation, benefiting from maturity and compatibility with existing semiconductor manufacturing processes. This technology leverages the principles of superposition and entanglement to perform calculations, utilising qubits that, unlike classical bits, can exist in a superposition of the 0 and 1 states.

Key to realising a functional quantum computer is satisfying DiVincenzo’s criteria, encompassing requirements for qubit representation, initialisation, coherence, entanglement, and readout. Significant progress has been made in addressing these criteria, including the development of various qubit designs, entanglement gate operations, and readout engineering techniques.

However, current limitations primarily stem from two-level system defects which reduce qubit coherence times, and the challenges of scaling up these systems. Achieving a useful quantum computer necessitates large-scale integration, prompting exploration of electronic design automation techniques similar to those used in semiconductor manufacturing.

A practical quantum computer will likely require at least one million, and potentially up to 100 million, physical qubits to implement quantum error correction. Researchers acknowledge that qubit coherence remains a primary obstacle, with two-level system defects currently limiting how long quantum information can be stored.

Future work will likely focus on mitigating these defects and improving qubit stability. Further development of electronic design automation tools is also crucial for managing the complexity of large-scale superconducting qubit systems. These advancements are essential steps towards building fault-tolerant quantum computers capable of tackling complex computational problems and unlocking new scientific discoveries.

👉 More information

🗞 Review of Superconducting Qubit Devices and Their Large-Scale Integration

🧠 ArXiv: https://arxiv.org/abs/2602.04831