Researchers from Caltech, NVIDIA and Harvard report that a specific type of quantum circuit, known as a Quantum Perceptron (QP), can approximate continuous functions with high accuracy while using fewer qubits than previously thought. The result is an important step toward reliable and efficient quantum neural networks, and toward more efficient and scalable quantum machine learning applications.

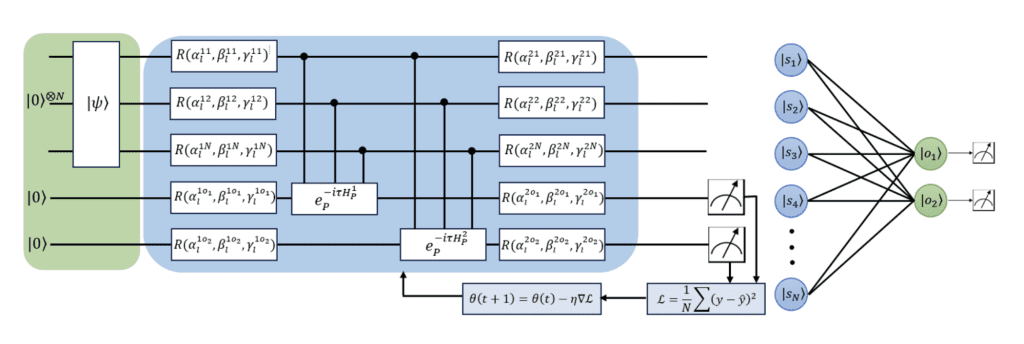

The research team designed the QP architecture, which uses Rydberg atom arrays to implement the perceptron model, a fundamental component of machine learning algorithms. The researchers also showed that the QP can be combined with reservoir computing, a technique inspired by classical random feature networks, to enhance learning. The work has implications for practical quantum machine learning and could eventually benefit areas such as image recognition and natural language processing.
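For readers unfamiliar with the perceptron model mentioned above, here is a minimal sketch of the classical version: a weighted sum of inputs passed through a threshold. It is not the Rydberg-atom implementation from the paper. The function name `perceptron` and the AND-gate weights are illustrative assumptions.

```python
def perceptron(inputs, weights, bias):
    """Classical perceptron: threshold a weighted sum of the inputs."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


# Hypothetical weights chosen so the perceptron computes logical AND
# on two binary inputs (an illustration, not taken from the paper).
AND_WEIGHTS = [1.0, 1.0]
AND_BIAS = -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, perceptron([a, b], AND_WEIGHTS, AND_BIAS))
```

The output is 1 only when both inputs are 1, which is the kind of simple decision rule that larger networks stack and combine.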

The authors build on the foundation laid by Gonon and Jacquier, who demonstrated that parameterized quantum circuits can approximate continuous functions bounded in L1 norm up to an error of order n^{-1/2}, with the number of qubits scaling only logarithmically with n. Specifically, Gonon and Jacquier showed that a quantum neural network with O(ϵ^{-2}) weights and O(⌈log2(ϵ^{-1})⌉) qubits suffices to achieve accuracy ϵ > 0 when approximating functions with integrable Fourier transform.
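To get a feel for these scalings, the sketch below tabulates the weight and qubit counts for a few target accuracies. It assumes the constants hidden by the O(·) notation equal 1, which the source does not state, so the numbers show only the growth rates. The helper name `qnn_resources` is hypothetical.

```python
import math


def qnn_resources(eps: float) -> tuple[int, int]:
    """Return illustrative (weights, qubits) counts for target accuracy eps.

    Assumption: all constants hidden in the O(.) bounds are set to 1.
    """
    if not 0 < eps < 1:
        raise ValueError("eps must lie strictly between 0 and 1")
    weights = math.ceil(eps ** -2)          # weights grow like eps^-2
    qubits = math.ceil(math.log2(1 / eps))  # qubits grow like ceil(log2(1/eps))
    return weights, qubits


# Powers of two keep the floating-point arithmetic exact.
for k in (3, 6, 10):
    eps = 2.0 ** -k
    weights, qubits = qnn_resources(eps)
    print(f"eps = 2^-{k}: about {weights} weights, about {qubits} qubits")
```

Under this assumption, tightening the accuracy from 2^-3 to 2^-10 multiplies the weight count by 2^14 but adds only seven qubits. That asymmetry is what makes the logarithmic qubit scaling attractive.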

The manuscript advances the field of Quantum Machine Learning (QML) by studying Quantum Perceptrons that can be implemented on a quantum processor. The authors show that QPs can approximate classical functions with an error that scales as n^{-1/2}, so the approximation does not suffer from the curse of dimensionality.

The authors also explore the connection between QPs and reservoir computing, drawing parallels with classical random feature networks. This confluence of error bounds in classical and quantum settings strengthens our understanding of quantum neural networks’ computational universality. It also provides a roadmap for their efficient implementation.
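The n^{-1/2} behaviour on the classical side can be seen in a small, purely classical experiment. The sketch below approximates exp(-x²/2) by averaging n random cosine features, using the identity E[cos(wx)] = exp(-x²/2) for w drawn from a standard normal distribution. It is a Monte Carlo illustration with fixed output weights 1/n, not the authors' construction and not a trained reservoir. The function names, grid, and trial count are assumptions chosen for the example.

```python
import math
import random


def random_feature_error(n: int, rng: random.Random) -> float:
    """Worst-case grid error of an n-term random cosine feature average."""
    freqs = [rng.gauss(0.0, 1.0) for _ in range(n)]  # random, untrained features
    worst = 0.0
    for i in range(-30, 31):
        x = i / 10
        approx = sum(math.cos(w * x) for w in freqs) / n  # fixed weights 1/n
        target = math.exp(-x * x / 2)
        worst = max(worst, abs(approx - target))
    return worst


def mean_error(n: int, trials: int = 10, seed: int = 0) -> float:
    """Average the worst-case error over several independent feature draws."""
    rng = random.Random(seed)
    return sum(random_feature_error(n, rng) for _ in range(trials)) / trials


for n in (100, 400, 1600):
    print(n, round(mean_error(n), 4))
```

Each fourfold increase in n should roughly halve the printed error, as expected for an n^{-1/2} rate. The rate itself does not depend on the dimension of the input.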

The manuscript concludes by highlighting the significance of QPs as reliable building blocks for scalable quantum neural networks. The authors propose experimental strategies for encoding QPs on arrays of Rydberg atoms, including single-species and dual-species approaches. They also discuss avenues for future research, including experimental validation, incorporating multiple output qubits, and integrating quantum reservoir computing with QPs.

This work represents a crucial step forward in developing QML models, offering a promising architecture for scalable and efficient quantum neural networks.
