Quantum neural networks (QNNs) have gained attention as a promising approach to achieving a quantum advantage on noisy intermediate-scale quantum (NISQ) computers. However, calculating expectation values in QNNs introduces fundamental finite-sampling noise even on error-free quantum computers. To address this issue, researchers have introduced variance regularization, a technique that reduces the variance of the expectation value during training. If the QNN is properly constructed, this method requires no additional circuit evaluations, and it has been shown to speed up training and lower output noise.

The article highlights the challenges of quantum machine learning (QML), including developing algorithms that can efficiently utilize NISQ computers. Two approaches are followed in QML: using the exponentially large Hilbert space accessible by a quantum computer for the kernel trick, or utilizing short and hardware-efficient circuits.

Variance regularization has been demonstrated to effectively reduce the impact of finite-sampling noise in QNNs. This technique adds a term to the loss function that encourages the model to produce outputs with a lower variance, which can help reduce output noise by an order of magnitude on average. The article also discusses benchmarking variance regularization on various tasks and training QNNs on real quantum devices.

Overall, variance regularization is a powerful technique for reducing the impact of finite-sampling noise in QNNs, making it an important step towards realizing the full potential of quantum machine learning.

Can Quantum Neural Networks Overcome Finite Sampling Noise?

In recent years, quantum neural networks (QNNs) have gained significant attention as a promising approach to achieving a quantum advantage on noisy intermediate-scale quantum (NISQ) computers. QNNs use parameterized quantum circuits with data-dependent inputs and generate outputs by evaluating expectation values. However, calculating these expectation values requires repeated circuit evaluations, which introduces fundamental finite-sampling noise even on error-free quantum computers.
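To make the finite-sampling noise concrete, the following is a minimal sketch, not taken from the publication, that simulates repeated measurements of a single-qubit Pauli-Z observable with NumPy. The function name `sample_expectation_z` and the chosen probability are illustrative assumptions. It compares the observed spread of the estimated expectation value with the standard error sqrt(Var(Z) / shots).

```python
import numpy as np

def sample_expectation_z(p0, shots, rng):
    """Estimate <Z> from `shots` measurements; outcome 0 -> +1, outcome 1 -> -1."""
    outcomes = rng.choice([1.0, -1.0], size=shots, p=[p0, 1.0 - p0])
    return outcomes.mean()

rng = np.random.default_rng(seed=0)
p0 = 0.8                    # assumed probability of measuring |0>, so <Z> = 2*p0 - 1
exact = 2 * p0 - 1
variance = 1 - exact**2     # Var(Z) = <Z^2> - <Z>^2, and Z^2 is the identity

for shots in (100, 10_000):
    # Repeat the whole estimation 500 times to see how much it fluctuates.
    estimates = np.array([sample_expectation_z(p0, shots, rng) for _ in range(500)])
    predicted_std = np.sqrt(variance / shots)
    print(shots, estimates.std(), predicted_std)
```

The spread shrinks only with the square root of the number of shots, so it is present even though the simulated device itself is error-free.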

To address this issue, researchers have introduced variance regularization, a technique for reducing the variance of the expectation value while the quantum model is being trained. This method requires no additional circuit evaluations if the QNN is properly constructed. Empirical findings demonstrate that the reduced variance speeds up training, lowers the output noise, and decreases the number of gradient-circuit evaluations that are needed.
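The article does not spell out what "properly constructed" means. One plausible reading, stated here as an assumption, is that the output observable is diagonal in the measurement basis, so that the same measured bitstrings yield estimates of both ⟨O⟩ and ⟨O²⟩. The sketch below illustrates this for a hypothetical observable O = Σ_j c_j Z_j; the function name and the simulated measurement record are illustrative only.

```python
import numpy as np

def mean_and_variance_from_shots(bitstrings, coeffs):
    """Estimate <O> and Var(O) for O = sum_j coeffs[j] * Z_j from one set of shots.

    bitstrings: integer array of shape (shots, n_qubits) with entries 0 or 1.
    """
    z_values = 1 - 2 * np.asarray(bitstrings)   # bit 0 -> +1, bit 1 -> -1
    o_values = z_values @ np.asarray(coeffs)    # eigenvalue of O for each shot
    mean = o_values.mean()                      # estimate of <O>
    second_moment = (o_values ** 2).mean()      # estimate of <O^2>, same shots
    return mean, second_moment - mean ** 2

# Hypothetical measurement record: 2 qubits, independent bits with P(bit = 1) = 0.1 and 0.3.
rng = np.random.default_rng(seed=1)
record = (rng.random((20_000, 2)) < np.array([0.1, 0.3])).astype(int)
mean, var = mean_and_variance_from_shots(record, coeffs=[0.5, 0.5])
print(mean, var)
```

Because both moments come from one measurement record, the variance estimate adds only classical post-processing, not extra circuit runs.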

The Challenges of Quantum Machine Learning

Quantum machine learning (QML) offers significant flexibility in the design of quantum circuits, allowing for the use of short and hardware-efficient circuits. These circuits may operate more effectively on noisy hardware compared to problem-driven approaches such as quantum optimization or quantum chemistry. However, QML also faces significant challenges, including the need to develop algorithms that can efficiently utilize the capabilities of NISQ computers.

In this young field, two main approaches are followed to develop machine learning algorithms on NISQ hardware. The first approach utilizes the exponentially large Hilbert space accessible by a quantum computer for the so-called kernel trick. Here, the input data is embedded into a high-dimensional space, which enables linear regression or classification within this space. On a quantum computer, this is achieved by using quantum circuits to perform operations in this high-dimensional space. The second approach, the one followed by QNNs, relies on short, hardware-efficient parameterized circuits whose outputs are expectation values.
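As a rough illustration of the kernel trick described above, the sketch below uses a toy feature map that is not from the publication: each input component is encoded by an RY rotation on its own qubit. For this product state the overlap has a closed form, so plain NumPy suffices. All function names and the regularization value are assumptions made for the example.

```python
import numpy as np

def fidelity_kernel(xa, xb):
    """k(x, x') = |<phi(x)|phi(x')>|^2 for |phi(x)> = prod_j RY(x_j)|0>.

    For this product feature map the overlap equals
    prod_j cos^2((x_j - x'_j) / 2), so no simulator is needed here.
    """
    diff = xa[:, None, :] - xb[None, :, :]
    return np.prod(np.cos(diff / 2) ** 2, axis=-1)

def fit_kernel_ridge(x_train, y_train, reg=1e-3):
    """Solve (K + reg * I) alpha = y, i.e. linear regression in the feature space."""
    k = fidelity_kernel(x_train, x_train)
    return np.linalg.solve(k + reg * np.eye(len(x_train)), y_train)

def predict(x_new, x_train, alpha):
    return fidelity_kernel(x_new, x_train) @ alpha

# A single-qubit feature map only spans {1, cos x, sin x},
# so the toy target is deliberately chosen inside that span.
x_train = np.linspace(-1.0, 1.0, 12).reshape(-1, 1)
y_train = np.sin(x_train[:, 0])
alpha = fit_kernel_ridge(x_train, y_train)
print(predict(np.array([[0.3]]), x_train, alpha), np.sin(0.3))
```

On hardware, each kernel entry would itself be estimated from measurements; the closed form is used here only to keep the sketch self-contained.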

The Role of Variance Regularization

Variance regularization reduces the variance of the expectation value while the quantum model is being trained. As noted above, it requires no additional circuit evaluations if the QNN is properly constructed, and the reduced variance speeds up training, lowers the output noise, and decreases the number of gradient-circuit evaluations that are needed.

In this approach, a variance regularization term is added to the loss function during training. This term encourages the model to produce outputs with a lower variance, which reduces the impact of finite-sampling noise. Empirical findings show that this can significantly improve the performance of QNNs on NISQ hardware.
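The article does not give the exact form of the regularized loss. The sketch below assumes a squared-error fit term plus a strength `alpha` times the summed output variance; `alpha`, `regularized_loss`, and the single-qubit `toy_qnn` are hypothetical names chosen for illustration. The toy model has two parameter settings that fit the target equally well but differ in variance, which is the situation the extra term is meant to resolve.

```python
import numpy as np

def regularized_loss(predictions, variances, targets, alpha):
    """Fit term plus alpha times the summed output variance.

    predictions: expectation values f(x_i) of the model output
    variances:   variances <O^2> - <O>^2 of the output at the same x_i
    alpha:       assumed regularization strength (alpha = 0 disables the term)
    """
    fit_term = np.sum((np.asarray(predictions) - np.asarray(targets)) ** 2)
    variance_term = np.sum(variances)
    return fit_term + alpha * variance_term

def toy_qnn(a, theta):
    """Single-qubit toy model: state RY(theta)|0>, observable O = a * Z."""
    mean = a * np.cos(theta)
    variance = a**2 * np.sin(theta) ** 2   # <O^2> - <O>^2 with <O^2> = a^2
    return mean, variance

target = 0.5
# Both settings reproduce the target exactly, but only the second is noise-free.
for a, theta in [(1.0, np.pi / 3), (0.5, 0.0)]:
    mean, var = toy_qnn(a, theta)
    print(a, theta, regularized_loss([mean], [var], [target], alpha=0.1))
```

Without the variance term the two settings are indistinguishable to the optimizer; with it, the low-variance setting has the lower loss.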

Benchmarking Variance Regularization

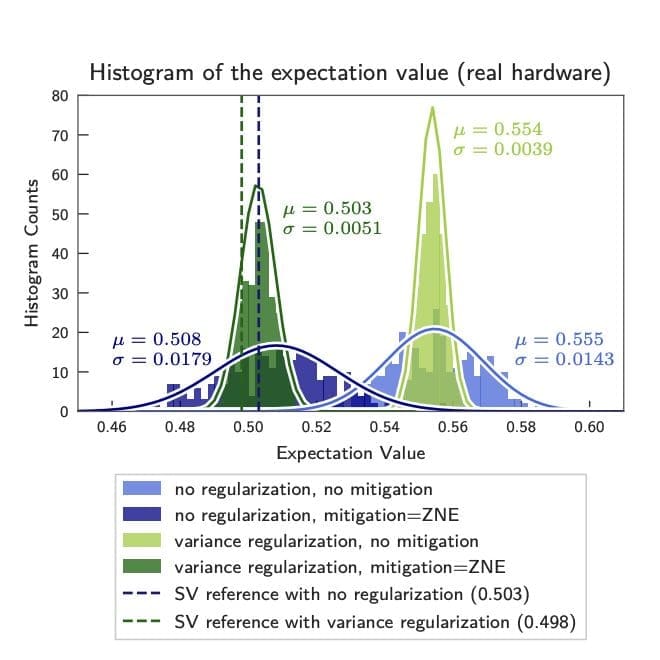

To evaluate the effectiveness of variance regularization, researchers have benchmarked this technique on a range of tasks, including the regression of multiple functions and the potential energy surface of water. In these experiments, the reduced variance has been shown to lower the output noise by an order of magnitude on average.

The impact of variance regularization on the training process has also been evaluated. Empirical findings demonstrate that this technique can significantly speed up the training process, reducing the number of necessary evaluations of gradient circuits. This is particularly important for QNNs, which require a large number of circuit evaluations to produce accurate outputs.

Training QNNs on Real Quantum Devices

In addition to benchmarking variance regularization, researchers have also trained QNNs on real quantum devices. In these experiments, the optimization was feasible only because the reduced variance lowered the number of shots needed for the gradient evaluation.
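The link between variance and shot count can be sketched with the standard-error relation sqrt(variance / shots). The helper below is an illustrative assumption, not the shot-allocation rule used in the publication: it computes how many shots are needed to reach a chosen target precision for a given variance.

```python
import math

def shots_for_precision(variance, target_std):
    """Shots needed so the standard error sqrt(variance / shots) is at most target_std."""
    return math.ceil(variance / target_std**2)

# Hypothetical variances before and after regularization, same target precision.
high = shots_for_precision(variance=0.75, target_std=0.01)
low = shots_for_precision(variance=0.075, target_std=0.01)
print(high, low, high / low)
```

At fixed precision the required shots scale linearly with the variance, so any reduction in variance translates directly into fewer circuit runs per expectation value.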

This approach has significant implications for the development of practical QNNs. By reducing the impact of finite-sampling noise, researchers can develop more accurate and efficient QNNs that are better suited to real-world applications. This is particularly important for tasks such as quantum chemistry and materials science, where high-accuracy models are essential.

In conclusion, variance regularization is a powerful technique for reducing the impact of finite-sampling noise in QNNs. By adding a term to the loss function that encourages the model to produce outputs with a lower variance, researchers can significantly improve the performance of QNNs on NISQ hardware. This approach has significant implications for the development of practical QNNs and is an important step towards realizing the full potential of quantum machine learning.

Publication details: “Reduction of finite sampling noise in quantum neural networks”

Publication Date: 2024-06-25

Authors: David A. Kreplin and M. Roth

Source: Quantum

DOI: https://doi.org/10.22331/q-2024-06-25-1385