The field of quantum machine learning has made significant strides in recent years, with quantum neural networks (QNNs) emerging as a promising approach. However, training QNNs remains a challenging task due to the complex nature of quantum systems.

One way to improve their performance is to exploit symmetry. In this article, we explore Symmetry-Guided Gradient Descent (SGGD), a novel method that incorporates symmetry constraints into the cost function. In two case studies, SGGD has been shown to accelerate training, improve generalization ability, and remove vanishing gradients.

Can Quantum Neural Networks Be Improved with Symmetry?

In recent years, quantum machine learning has emerged as a promising field that extends classical machine learning concepts to quantum systems, where information can be held in superpositions of states. One approach to quantum machine learning is the development of quantum neural networks (QNNs), which can be viewed as quantum generalizations of classical neural networks. QNNs have shown promising results in applications including quantum phase recognition and classical classification tasks.

However, training QNNs remains challenging due to the complex nature of quantum systems. One way to improve QNN performance is to exploit symmetry. In this context, symmetry is an intrinsic property of a learning task: certain transformations of the input, such as translations and rotations of an image in an image classification task, leave the correct label unchanged.

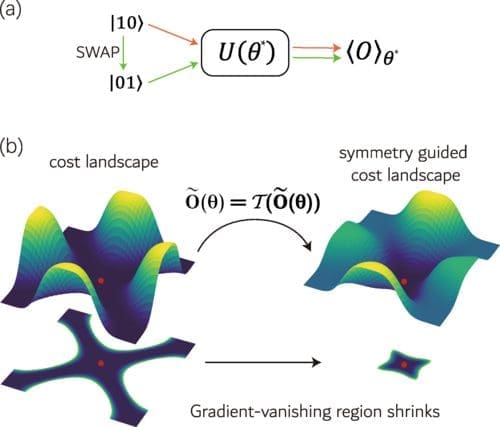

Symmetry constraints can be expressed in a concise mathematical form, which makes it possible to build them directly into the cost function. Doing so shapes the cost landscape in favor of parameter choices that respect the given symmetry. Unlike methods that alter the neural network circuit ansatz to impose symmetry, this method changes only the classical post-processing of gradient descent, which makes it simpler to implement.

The proposed method is called Symmetry-Guided Gradient Descent (SGGD). SGGD has been illustrated in two case studies: entanglement classification of Werner states and a binary classification task in a 2D feature space. The results show that SGGD can accelerate training, improve generalization ability, and remove vanishing gradients, especially when the training data is biased.

How Does Symmetry-Guided Gradient Descent Work?

Symmetry-Guided Gradient Descent (SGGD) is a novel approach to improving the performance of quantum neural networks. It expresses symmetry constraints in a concise mathematical form and incorporates them into the cost function.

The first step in SGGD is to define the symmetry constraints. In image classification, for example, these might be translational and rotational symmetries. Each constraint can be written as an equation stating that the model's output must stay the same when the input is transformed in a given way.
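Written generically, a symmetry constraint looks like the block below. The notation is ours, not the paper's, and the paper's own formulation for quantum circuits may differ: f_θ is the model's prediction for input x, θ are the trainable parameters, and G is a set of transformations T_g that should not change the label. The rotation matrix is just one illustrative choice of T_g for a 2D feature vector.

```latex
% Invariance constraint: transforming the input must not change the model's output
f_{\theta}\bigl(T_g(x)\bigr) = f_{\theta}(x) \qquad \forall\, g \in G,\ \forall\, x

% Example: G = rotations of a 2D feature vector x = (x_1, x_2)^\top by an angle \phi
T_{\phi}(x) = \begin{pmatrix} \cos\phi & -\sin\phi \\ \sin\phi & \cos\phi \end{pmatrix} x
```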

Once the symmetry constraints are defined, they can be incorporated into the cost function using two different approaches. The first approach involves adding a penalty term to the cost function that encourages the model to respect the given symmetry. This penalty term is designed such that it becomes zero when the model’s predictions align with the symmetry constraints.
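The penalty idea can be sketched in a few lines of Python. This is a minimal illustration under assumptions of our own, not the authors' implementation: a classical logistic model (the hypothetical `model`) stands in for the QNN's measured output, the symmetry is a 90-degree rotation of a 2D feature vector (`rotate90`), the penalty is the mean squared change of the output under that transformation (`symmetry_penalty`), and gradients come from central finite differences rather than, for example, the parameter-shift rule used on quantum hardware. All names and the weight `lam` are hypothetical. Note that only the classical cost and its gradient change; the model itself is untouched.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Sample = Tuple[Point, float]  # (feature vector, label in {0, 1})
Transform = Callable[[Point], Point]


def model(theta: Sequence[float], x: Point) -> float:
    """Hypothetical classical stand-in for a QNN's measured output, in (0, 1)."""
    x1, x2 = x
    z = theta[0] + theta[1] * x1 + theta[2] * x2 + theta[3] * (x1 * x1 + x2 * x2)
    return 1.0 / (1.0 + math.exp(-z))


def rotate90(x: Point) -> Point:
    """Symmetry transformation: rotate the 2D feature vector by 90 degrees."""
    x1, x2 = x
    return (-x2, x1)


def task_cost(theta: Sequence[float], data: Sequence[Sample]) -> float:
    """Ordinary cost: mean squared error between outputs and labels."""
    return sum((model(theta, x) - y) ** 2 for x, y in data) / len(data)


def symmetry_penalty(theta: Sequence[float], data: Sequence[Sample],
                     transforms: Sequence[Transform]) -> float:
    """Mean squared change of the output under each transformation.

    It is zero exactly when the model gives the same output for every
    training input and its transformed copy, i.e. when the constraint holds.
    """
    terms = [(model(theta, t(x)) - model(theta, x)) ** 2
             for x, _ in data for t in transforms]
    return sum(terms) / len(terms)


def total_cost(theta, data, transforms, lam: float) -> float:
    """Symmetry-guided cost: task cost plus lam times the symmetry penalty."""
    return task_cost(theta, data) + lam * symmetry_penalty(theta, data, transforms)


def numerical_grad(f: Callable[[List[float]], float], theta: Sequence[float],
                   h: float = 1e-6) -> List[float]:
    """Central finite-difference gradient (a stand-in for parameter-shift gradients)."""
    grad = []
    for i in range(len(theta)):
        up, down = list(theta), list(theta)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def train(theta, data, transforms, lam: float, lr: float = 0.01,
          steps: int = 50) -> List[float]:
    """Plain gradient descent on the symmetry-guided cost."""
    def cost(th: List[float]) -> float:
        return total_cost(th, data, transforms, lam)

    theta = list(theta)
    for _ in range(steps):
        grad = numerical_grad(cost, theta)
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


if __name__ == "__main__":
    # Biased training set: every sample has x1 > 0.
    # Labels follow a rotation-invariant rule: 1 inside the unit circle, 0 outside.
    data = [((0.5, 0.0), 1.0), ((0.3, 0.4), 1.0), ((1.2, 0.5), 0.0), ((1.0, -1.0), 0.0)]
    transforms = [rotate90]
    start = [0.0, 1.0, 0.0, 0.0]  # depends on x1 only, so it breaks the symmetry
    for lam in (0.0, 1.0):
        theta = train(start, data, transforms, lam)
        pen = symmetry_penalty(theta, data, transforms)
        print(f"lam={lam}: theta={[round(t, 3) for t in theta]}, penalty={pen:.4f}")
```

Setting `lam = 0` recovers ordinary gradient descent on the task cost, so the two runs can be compared side by side. For this model the penalty is zero only when `theta[1]` and `theta[2]` vanish, so with `lam > 0` the cost landscape favours the rotation-invariant parameter choices, even though the biased data alone would not force them.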

The second approach acts on the gradient-descent update itself: the optimizer's learning rate is adjusted according to how strongly the symmetry constraints are violated, so that training is steered toward parameter values that respect the given symmetry.

Both approaches have been shown to improve the performance of QNNs, particularly when the training data is biased. In the reported case studies, SGGD also outperformed ordinary gradient descent without symmetry guidance.

Applications of Symmetry-Guided Gradient Descent

Symmetry-Guided Gradient Descent (SGGD) has been illustrated in two case studies: entanglement classification of Werner states and a binary classification task in a 2D feature space. In both cases, SGGD was shown to improve the performance of QNNs.

In the first case study, SGGD was used to classify Werner states according to their entanglement. The results showed that SGGD can accelerate training, improve generalization ability, and remove vanishing gradients, especially when the training data is biased.
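For reference, the textbook two-qubit Werner state is given below. The summary does not say which parametrization or which entanglement classes the paper uses, so this is only the standard definition, not the paper's exact setup. It shows why symmetry is natural for this task: the state, and therefore its entanglement label, is unchanged by any transformation of the form U ⊗ U, so an ideal classifier should be unchanged by it too.

```latex
% Two-qubit Werner state with mixing weight p \in [0, 1]
\rho_W(p) = p\,\lvert\Psi^-\rangle\langle\Psi^-\rvert + (1-p)\,\frac{I}{4},
\qquad \lvert\Psi^-\rangle = \tfrac{1}{\sqrt{2}}\bigl(\lvert 01\rangle - \lvert 10\rangle\bigr)

% Symmetry: invariant under the same unitary applied to both qubits
(U \otimes U)\,\rho_W(p)\,(U \otimes U)^{\dagger} = \rho_W(p)
\qquad \text{for every single-qubit unitary } U

% Entanglement (PPT criterion, necessary and sufficient for two qubits)
\rho_W(p) \text{ is entangled} \iff p > \tfrac{1}{3}
```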

In the second case study, SGGD was used to perform a binary classification task in a 2D feature space. The results showed that SGGD can improve the performance of QNNs by reducing overfitting and improving generalization ability.

These case studies demonstrate the potential of SGGD to improve the performance of QNNs. As the field of quantum machine learning continues to evolve, SGGD could play an important role in developing more effective and efficient QNNs.

Future Directions for Symmetry-Guided Gradient Descent

While SGGD has shown promising results in improving the performance of QNNs, there are several directions that can be explored to further develop this method. One potential direction is to extend SGGD to other types of symmetry, such as permutation symmetry or Lorentz symmetry.

Another direction is to explore the use of SGGD in more complex applications, such as quantum many-body systems or quantum field theory. This could involve developing new algorithms and techniques that incorporate SGGD into existing methods for solving these problems.

Finally, it would be interesting to investigate the connection between SGGD and other approaches to improving the performance of QNNs, such as regularization techniques or ensemble methods. By exploring these connections, we may be able to develop more effective and efficient methods for training QNNs.

Conclusion

Symmetry-Guided Gradient Descent (SGGD) is a novel approach to improving the performance of quantum neural networks. By leveraging symmetry constraints, SGGD can accelerate training, improve generalization ability, and remove vanishing gradients, especially when the training data is biased. The method has been illustrated in two case studies: entanglement classification of Werner states and a binary classification task in a 2D feature space.

Looking ahead, extending SGGD to other types of symmetry and clarifying its connection with other approaches to improving QNNs may lead to even more powerful methods for training quantum neural networks.

Publication details: “Symmetry-guided gradient descent for quantum neural networks”

Publication Date: 2024-08-05

Authors: Kaiming Bian, Shitao Zhang, Fei Meng, Wen Zhang, et al.

Source: Physical Review A

DOI: https://doi.org/10.1103/physreva.110.022406