Quantum machine learning holds immense potential for solving complex computational problems, but realising this promise requires addressing fundamental challenges to ensure reliable performance. Ferhat Ozgur Catak, Jungwon Seo, and Umit Cali, from the University of Stavanger and the University of York, present a comprehensive roadmap for Trustworthy Quantum Machine Learning that tackles the risks introduced by the probabilistic nature of quantum mechanics and the limitations of current quantum hardware. Their framework rests on three pillars: uncertainty quantification, adversarial robustness, and privacy preservation. Together, these aim to deliver calibrated, secure, and dependable quantum AI systems. By formalising quantum-specific trust metrics and validating a unified assessment pipeline on existing quantum classifiers, the researchers reveal crucial correlations between uncertainty and prediction risk, highlight vulnerabilities to different types of attacks, and demonstrate the trade-offs between privacy and performance. The work positions trustworthiness as a core design principle for the future of quantum artificial intelligence.

A central focus is on developing techniques that allow models to learn from decentralized datasets without compromising individual privacy. Traditional machine learning often requires centralized datasets; federated learning addresses this concern by training models across distributed data sources, so that raw data never has to leave its owner. The roadmap also considers the threats such systems face: adversarial attacks affect both classical and quantum systems, while gradient inversion attacks attempt to reconstruct training data from model updates.
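To make the federated idea concrete, here is a minimal sketch of one round of federated averaging, assuming each client holds a parameter vector (for a quantum model, these could be the rotation angles of a variational circuit). The helper names `local_update`, `federated_average`, and `federated_round`, and the user-supplied `grad_fn`, are hypothetical and not taken from the paper.

```python
import numpy as np

def local_update(params, grad_fn, data, lr=0.1, steps=1):
    """Run a few gradient steps on one client's private data.

    grad_fn(params, data) is an assumed user-supplied gradient function;
    the raw data never leaves this function.
    """
    params = np.array(params, dtype=float)
    for _ in range(steps):
        params = params - lr * grad_fn(params, data)
    return params

def federated_average(client_params, client_sizes):
    """Average client parameters, weighted by local dataset size."""
    stacked = np.stack([np.asarray(p, dtype=float) for p in client_params])
    weights = np.asarray(client_sizes, dtype=float)
    return np.average(stacked, axis=0, weights=weights)

def federated_round(global_params, clients, grad_fn, lr=0.1):
    """One round: every client trains locally, the server averages.

    clients is a list of (data, n_samples) pairs.
    """
    updated = [local_update(global_params, grad_fn, data, lr) for data, _ in clients]
    sizes = [n for _, n in clients]
    return federated_average(updated, sizes)
```

Only parameter vectors are shared with the server in this sketch. Those shared updates are exactly what a gradient inversion attack targets, which is why federated learning is usually combined with further protections.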

Furthermore, the research acknowledges potential data leakage from quantum states and the difficulty of training quantum neural networks in the presence of barren plateaus. To address these challenges, the team investigates privacy-enhancing technologies, including federated learning, differential privacy, homomorphic encryption, secure multi-party computation, and secure aggregation. The research also covers quantum-specific considerations, adapting differential privacy to the quantum setting and exploring quantum homomorphic encryption.

On uncertainty quantification, the team reports a strong correlation between predictive uncertainty and prediction correctness. They measured predictive entropy, variation ratio, and standard deviation, and found highly significant differences between correct and incorrect predictions. Misclassified samples exhibit significantly higher entropy than correctly classified samples, which makes it possible to choose an entropy threshold that rejects most errors while retaining the majority of correct predictions.
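The three uncertainty measures named above can be illustrated with a short sketch. It assumes the classifier is evaluated several times per input (for example, repeated stochastic runs of a quantum circuit) and that each run yields class probabilities. The array layout, the function names, and the choice to take the standard deviation over the predicted class are assumptions made for illustration, not the paper's exact definitions.

```python
import numpy as np

def uncertainty_metrics(probs):
    """probs has shape (T, N, C): T stochastic runs, N samples, C classes."""
    probs = np.asarray(probs, dtype=float)
    n_runs, n_samples, n_classes = probs.shape

    # Predictive entropy of the run-averaged class distribution.
    mean_probs = probs.mean(axis=0)
    entropy = -np.sum(mean_probs * np.log(mean_probs + 1e-12), axis=1)

    # Variation ratio: fraction of runs that disagree with the modal vote.
    votes = probs.argmax(axis=2)
    counts = np.stack([(votes == c).sum(axis=0) for c in range(n_classes)], axis=1)
    variation_ratio = 1.0 - counts.max(axis=1) / n_runs

    # Spread of the predicted-class probability across runs.
    pred = mean_probs.argmax(axis=1)
    std = probs[:, np.arange(n_samples), pred].std(axis=0)
    return pred, entropy, variation_ratio, std

def accept_mask(entropy, threshold):
    """True where a prediction is kept; high-entropy predictions are rejected."""
    return np.asarray(entropy) <= threshold
```

In practice, the threshold would be tuned on held-out data to balance the share of errors rejected against the share of correct predictions retained.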

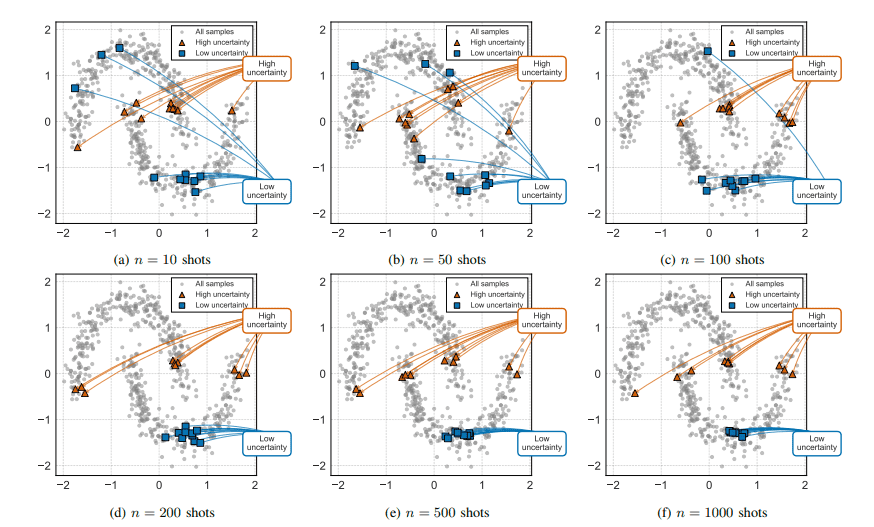

Further analysis of uncertainty localization in feature space demonstrates that high-uncertainty samples consistently cluster in specific regions, while low-uncertainty samples are more dispersed. The team quantified this effect, showing that incorrect predictions exhibit narrower standard deviations compared to correct predictions, validating the hypothesis that well-designed uncertainty metrics should exhibit strong separation between reliable and unreliable predictions. Predictive entropy in particular reliably distinguishes between correct and incorrect classifications, with strong practical significance.

On robustness, the team found that while classical attacks significantly degrade quantum machine learning performance, quantum-state perturbations prove largely ineffective, highlighting a notable asymmetry in vulnerability. On privacy, the work shows that differential privacy techniques enable secure distributed quantum learning with acceptable trade-offs between accuracy and privacy, even under the current limitations of near-term quantum devices.
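The privacy and accuracy trade-off can be sketched with a common differentially private aggregation recipe: clip each client's update to a fixed L2 norm, sum the clipped updates, and add Gaussian noise scaled to that norm. This is a generic illustration rather than the authors' implementation. The names `clip_update` and `private_aggregate` and the parameters `clip_norm` and `noise_multiplier` are assumptions, and translating a noise multiplier into a formal privacy budget requires a privacy accountant that is not shown here.

```python
import numpy as np

def clip_update(update, clip_norm):
    """Scale an update so that its L2 norm is at most clip_norm."""
    update = np.asarray(update, dtype=float)
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / (norm + 1e-12))
    return update * scale

def private_aggregate(updates, clip_norm, noise_multiplier, rng):
    """Average clipped updates after adding Gaussian noise to their sum.

    A larger noise_multiplier gives stronger privacy and a noisier,
    less accurate average.
    """
    clipped = np.stack([clip_update(u, clip_norm) for u in updates])
    total = clipped.sum(axis=0)
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=total.shape)
    return (total + noise) / len(updates)

# Example usage with a seeded generator for reproducibility.
rng = np.random.default_rng(0)
noisy_mean = private_aggregate([[3.0, 4.0], [0.0, 0.5]], 1.0, 1.0, rng)
```

Clipping bounds how much any single client can influence the aggregate, and the noise masks what remains of that influence. Both steps reduce accuracy, which is the trade-off described above.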

While acknowledging that simulated environments cannot fully replicate the complexities of real quantum hardware, the researchers emphasize the measurable and improvable nature of trustworthiness in quantum models. Future work should focus on developing trust metrics that adapt to the dynamic behavior of quantum devices and creating defenses against hardware-level attacks, alongside exploring privacy guarantees rooted in the principles of quantum mechanics itself. This research establishes a strong foundation for building verifiable, privacy-aware intelligence within future hybrid quantum-classical networks.

👉 More information

🗞 Trustworthy Quantum Machine Learning: A Roadmap for Reliability, Robustness, and Security in the NISQ Era

🧠 ArXiv: https://arxiv.org/abs/2511.02602