Building reliable machine learning models becomes much harder when the training data contains errors, a common problem in real-world applications. Neeshu Rathi and Sanjeev Kumar, both from the Department of Mathematics at the Indian Institute of Technology Roorkee, address this issue with a new quantum machine learning approach designed to stay accurate when labels are unreliable. Their research introduces a quantum bagging algorithm. Bagging combines many models trained on resampled copies of the data to improve accuracy and robustness, and this version is unusual because it uses an unsupervised learner, quantum k-means clustering, as each base model. The authors report that the method reduces prediction errors and holds an advantage over supervised techniques when labels are corrupted, which could open new possibilities for machine learning in noisy environments.

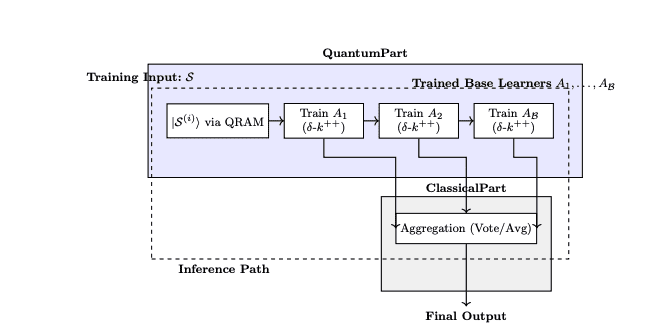

The development of noise-resilient quantum machine learning (QML) algorithms is critical in the noisy intermediate-scale quantum (NISQ) era. This work proposes a quantum bagging framework that uses QMeans clustering as the base learner to reduce prediction variance and improve robustness to label noise. Unlike bagging frameworks built on supervised learners, this method exploits the unsupervised nature of QMeans and combines it with quantum bootstrapping via QRAM-based sampling, with the base learners' predictions aggregated by majority voting. Extensive simulations on noisy classification and regression tasks are used to evaluate the framework's performance.
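To make the pipeline concrete, here is a minimal classical sketch in Python (NumPy only). It is not the authors' implementation, and all function names are hypothetical. Plain Lloyd's k-means stands in for QMeans, NumPy's random generator stands in for QRAM-based bootstrap sampling, and the rule that each cluster takes the majority label of its training members is an assumption about how an unsupervised learner is turned into a classifier.

```python
import numpy as np


def nearest(X, centroids):
    # Index of the closest centroid (squared Euclidean distance) for every row of X.
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)


def kmeans(X, k, rng, n_iter=50):
    # Plain Lloyd's k-means: a classical stand-in for QMeans (assumption).
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        assign = nearest(X, centroids)
        new = np.array([X[assign == j].mean(axis=0) if np.any(assign == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return centroids, nearest(X, centroids)


def fit_base_learner(X, y, k, rng):
    # Cluster without labels, then give each cluster the majority label of its members (assumed rule).
    centroids, assign = kmeans(X, k, rng)
    fallback = np.bincount(y).argmax()
    labels = np.array([np.bincount(y[assign == j]).argmax() if np.any(assign == j) else fallback
                       for j in range(k)])
    return centroids, labels


def bagging_fit(X, y, n_learners=15, k=2, seed=0):
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_learners):
        idx = rng.integers(0, len(X), size=len(X))  # bootstrap: n draws with replacement
        models.append(fit_base_learner(X[idx], y[idx], k, rng))
    return models


def bagging_predict(models, X):
    # Each base learner votes with the label of its nearest centroid; the most common vote wins.
    votes = np.stack([labels[nearest(X, centroids)] for centroids, labels in models])
    return np.array([np.bincount(col).argmax() for col in votes.T])


# Usage: two Gaussian blobs with 20% of the training labels flipped at random.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.5, (50, 2)), rng.normal(3.0, 0.5, (50, 2))])
y = np.array([0] * 50 + [1] * 50)
y_noisy = y.copy()
flip = rng.choice(100, size=20, replace=False)
y_noisy[flip] = 1 - y_noisy[flip]

models = bagging_fit(X, y_noisy)
accuracy = (bagging_predict(models, X) == y).mean()  # scored against the clean labels
print(f"accuracy against clean labels: {accuracy:.2f}")
```

Because the clustering step never reads the labels, the flipped labels only matter when each cluster is named, and a cluster's majority label survives as long as most of its members are labeled correctly.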

Quantum Ensemble Methods for Machine Learning

The paper's background material surveys quantum machine learning (QML), the intersection of quantum computing and machine learning, with a focus on ensemble methods. It outlines the potential benefits of QML and covers quantum algorithms for classification, regression, clustering, and dimensionality reduction, including quantum support vector machines and quantum principal component analysis, with particular emphasis on quantum adaptations of established techniques such as random forests and k-means clustering.

A significant portion examines how ensemble methods such as bagging, boosting, and random forests can be adapted and implemented on quantum computers. The survey also recognizes that near-term quantum computers will likely operate alongside classical computers, which leads to hybrid quantum-classical algorithms.

It also mentions software frameworks such as Qiskit and PennyLane for developing and simulating quantum algorithms. Two further ingredients recur: quantum random access memory (QRAM), which many QML algorithms assume for loading classical data into quantum states, and shadow tomography, which estimates many properties of an unknown quantum state from comparatively few copies of it. The detailed treatment of ensemble methods is a strength of the survey, since these techniques are powerful in classical machine learning and may carry similar benefits into the quantum setting, and the references include recent work in the field.

Quantum Bagging Boosts Machine Learning Robustness

The new quantum bagging framework is designed to make machine learning models more robust, particularly when the training data is noisy or its labels are corrupted. This addresses a critical need in quantum machine learning, where algorithms are vulnerable to the errors present in real-world datasets. The approach follows classical bagging but implements its key steps with quantum subroutines. Its central idea is to use quantum k-means clustering (QMeans) as the base learner in every member of the ensemble.

Supervised base learners fit the training labels directly, so they can overfit to label noise. QMeans, by contrast, groups points by feature similarity without using labels, so corrupted labels cannot distort the clusters it forms. Diversity among the base learners comes from quantum bootstrapping: QRAM-based sampling draws a different training subset for each learner, and the scheme avoids deep quantum circuits, an important consideration for today's noisy intermediate-scale devices. In experiments, the quantum bagging algorithm performs comparably to its classical counterpart based on KMeans when the data is clean. Its advantage appears under label corruption: the quantum approach is more resilient and holds its accuracy even when a substantial fraction of the training labels is wrong.
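The bootstrap step can be illustrated by simulating measurement statistics. The sketch assumes the index register is prepared in a uniform superposition over the n training indices, a hypothetical stand-in for the paper's QRAM-based sampling, whose exact circuit is not described here. By the Born rule each computational-basis measurement then returns index i with probability 1/n, so n independent shots give n indices drawn with replacement, which is exactly a bootstrap sample. Everything runs classically in NumPy, and the function names are illustrative.

```python
import numpy as np


def uniform_index_amplitudes(n):
    # Amplitudes of the state (1/sqrt(n)) * sum_i |i> over n training indices (assumed starting state).
    return np.full(n, 1.0 / np.sqrt(n))


def measure(amplitudes, shots, rng):
    # Simulated computational-basis measurement: outcome i has probability |a_i|^2 (Born rule).
    probs = np.abs(amplitudes) ** 2
    probs = probs / probs.sum()  # guard against floating-point drift
    return rng.choice(len(amplitudes), size=shots, p=probs)


def simulated_quantum_bootstrap(X, y, rng):
    # One shot per draw (state re-prepared each time): n shots give n indices sampled with replacement.
    idx = measure(uniform_index_amplitudes(len(X)), len(X), rng)
    return X[idx], y[idx], idx


rng = np.random.default_rng(0)
n = 10_000
X = rng.normal(size=(n, 3))
y = rng.integers(0, 2, size=n)
Xb, yb, idx = simulated_quantum_bootstrap(X, y, rng)

# Fraction of distinct training points in one bootstrap sample; its expectation is about 1 - 1/e.
unique_fraction = np.unique(idx).size / n
print(f"distinct points in sample: {unique_fraction:.3f}")
```

Each bootstrap sample contains on average about 63.2% of the distinct training points, so different base learners see noticeably different subsets, which is the diversity bagging relies on.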

Evaluations on benchmark datasets, including Breast Cancer, Iris, Wine, and Real Estate, indicate robust and competitive performance. The framework supports both classification and regression, aggregating the base learners' predictions by majority voting and by averaging, respectively. This offers a practical route to more reliable quantum machine learning models for applications in which data integrity is uncertain or compromised. Combining quantum subroutines with a noise-tolerant learning strategy is a step toward applying quantum machine learning to real-world data, and the method's shallow circuits and reliance on unsupervised learning are a pragmatic response to the limitations of current quantum hardware.
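For regression, averaging replaces voting. The article does not say how a clustering model produces a numeric prediction, so the sketch below assumes a hypothetical rule: each base learner predicts the mean target of the bootstrap cluster a query point falls into, and the ensemble averages those predictions. As before, classical Lloyd's k-means stands in for QMeans, and all names are illustrative.

```python
import numpy as np


def nearest(X, centroids):
    # Index of the closest centroid (squared Euclidean distance) for every row of X.
    return ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)


def kmeans(X, k, rng, n_iter=50):
    # Plain Lloyd's k-means: a classical stand-in for QMeans (assumption).
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        assign = nearest(X, centroids)
        new = np.array([X[assign == j].mean(axis=0) if np.any(assign == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return centroids, nearest(X, centroids)


def bagged_cluster_regression(X, t, X_query, n_learners=20, k=4, seed=0):
    rng = np.random.default_rng(seed)
    predictions = []
    for _ in range(n_learners):
        idx = rng.integers(0, len(X), size=len(X))  # bootstrap sample
        Xb, tb = X[idx], t[idx]
        centroids, assign = kmeans(Xb, k, rng)
        # Hypothetical base predictor: mean target of the cluster a query falls into.
        means = np.array([tb[assign == j].mean() if np.any(assign == j) else tb.mean()
                          for j in range(k)])
        predictions.append(means[nearest(X_query, centroids)])
    return np.mean(predictions, axis=0)  # aggregate by averaging


rng = np.random.default_rng(2)
X = rng.uniform(0.0, 10.0, size=(200, 1))
t = X[:, 0] + rng.normal(0.0, 0.5, size=200)  # noisy linear target
X_query = np.linspace(0.5, 9.5, 10).reshape(-1, 1)
pred = bagged_cluster_regression(X, t, X_query)
mse = np.mean((pred - X_query[:, 0]) ** 2)  # error against the noise-free target
print(f"MSE on query points: {mse:.3f}")
```

A single clustering model gives a coarse step function; averaging over bootstrap replicates shifts the step boundaries from one learner to the next, which smooths the ensemble's prediction.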

Quantum Bagging Boosts Noisy Data Learning

This research introduces a quantum bagging framework that uses quantum k-means clustering to make machine learning more robust, particularly on noisy data. The team shows that the approach, which relies on quantum subroutines for bootstrap sampling and similarity estimation, performs comparably to its classical counterpart while being more resilient to errors in data labels. This highlights the potential of unsupervised quantum methods for learning from imperfect information, a common challenge in real-world applications. The method assumes a specific quantum random access memory (QRAM) model, which lets algorithms query data in superposition, and uses the swap test to estimate the overlap between quantum states, from which the similarity between data points is obtained.
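The swap test's output statistic is easy to reproduce in a small state-vector calculation. Applying a Hadamard, a controlled-SWAP, and a second Hadamard to an ancilla alongside states |a> and |b> leaves the ancilla reading 0 with probability P(0) = (1 + |<a|b>|^2) / 2. The sketch below assumes amplitude encoding (x mapped to x / ||x||) as the QRAM output and reconstructs a Euclidean distance from the estimated overlap. That reconstruction is a common approach in quantum k-means proposals, not necessarily the paper's exact procedure, and it assumes non-negative features because the swap test loses the sign of the inner product. The vectors have length 4, so each fits in two qubits.

```python
import numpy as np


def amplitude_encode(x):
    # |x> = x / ||x||: amplitude encoding, assumed to be what a QRAM load prepares.
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x)


def swap_test_p0(a, b):
    # Exact probability of reading 0 on the ancilla after H, controlled-SWAP, H
    # applied to |0>|a>|b>: the ancilla-0 branch is (|a>|b> + |b>|a>) / 2.
    branch0 = (np.kron(a, b) + np.kron(b, a)) / 2
    return float(np.vdot(branch0, branch0).real)


def swap_test_distance(x, y, shots, rng):
    # Estimate ||x - y|| from a finite number of swap-test shots.
    # Assumes non-negative features, so <x, y> >= 0 and the sign lost by |<a|b>|^2 is known.
    a, b = amplitude_encode(x), amplitude_encode(y)
    p0_hat = rng.binomial(shots, swap_test_p0(a, b)) / shots
    overlap = np.sqrt(max(0.0, 2.0 * p0_hat - 1.0))  # |<a|b>| = sqrt(2 P(0) - 1)
    nx, ny = np.linalg.norm(x), np.linalg.norm(y)
    return float(np.sqrt(max(0.0, nx**2 + ny**2 - 2.0 * nx * ny * overlap)))


rng = np.random.default_rng(3)
x = np.array([1.0, 2.0, 0.5, 3.0])
y = np.array([2.0, 1.0, 1.5, 2.5])
estimate = swap_test_distance(x, y, shots=100_000, rng=rng)
exact = np.linalg.norm(x - y)
print(f"swap-test estimate {estimate:.3f} vs exact {exact:.3f}")
```

The shot count controls precision: the standard error of the estimated P(0) shrinks as one over the square root of the number of shots, so each distance costs many repetitions of a shallow circuit rather than one deep one.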

Together, these subroutines support coherent sampling and, through bagging, variance reduction, and the shallow circuits are intended to suit near-term quantum devices. The current work assumes a specific QRAM model and focuses on performance parity with classical methods, and the authors acknowledge that practical realization depends on advances in quantum memory technology. Future research directions include exploring the framework on different quantum hardware configurations and applying it to more complex datasets and machine learning tasks.
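The variance-reduction argument behind bagging can be stated with a standard identity (a textbook result, not a formula taken from the paper). If the ensemble averages $B$ base predictions $\hat f_b(x)$, each with variance $\sigma^2$ and pairwise correlation $\rho$, then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
  = \frac{1}{B^{2}}\Big(B\,\sigma^{2} + B(B-1)\,\rho\,\sigma^{2}\Big)
  = \rho\,\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}.
```

The second term shrinks as $B$ grows, while the first is limited by how correlated the base learners are, which is why diverse bootstrap samples matter. The identity applies directly to averaging (the regression case); majority voting behaves analogously but is not covered by this formula.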

👉 More information

🗞 A Quantum Bagging Algorithm with Unsupervised Base Learners for Label Corrupted Datasets

🧠 arXiv: https://arxiv.org/abs/2509.07040