Federated learning represents a powerful approach to collaborative machine learning, enabling model training without direct data exchange, but classical methods face limitations in computational demands, privacy, and handling diverse datasets. Siva Sai, Abhishek Sawaika, Prabhjot Singh, and Rajkumar Buyya, all from The University of Melbourne, investigate Quantum Federated Learning (QFL) as a potential solution, a hybrid paradigm that leverages quantum computation to overcome these challenges. Their work surveys the emerging field of QFL, detailing architectural elements and classifying existing systems based on factors such as network topology and security mechanisms. By exploring applications in areas like healthcare and wireless networks, the researchers demonstrate how QFL enhances efficiency, security, and performance compared to traditional federated learning, while also outlining key challenges and future research directions for this promising technology.

This emerging area explores how quantum algorithms can enhance machine learning models, particularly in applications like financial fraud detection. The core principle involves training models across multiple decentralized data sources, such as individual banks, without directly exchanging sensitive data, thereby preserving privacy and reducing communication costs. Researchers are investigating how quantum computing can accelerate these processes and improve model accuracy.
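The classical baseline that QFL builds on can be illustrated with a small federated averaging loop. The sketch below is not taken from the surveyed paper: the one-dimensional linear model, the synthetic client datasets, and the function names (`local_update`, `fed_avg`, `run_federated`) are all assumptions chosen for illustration. It shows only the core idea described above: each client trains on its own data and shares model parameters, never the raw records.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """Hypothetical client step: gradient descent on y = w*x + b using local data only."""
    w, b = weights
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in data:
            err = (w * x + b) - y
            gw += 2 * err * x
            gb += 2 * err
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return (w, b)

def fed_avg(client_weights, client_sizes):
    """Server step: average client parameters, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return tuple(
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    )

def make_client_data(n, seed):
    """Synthetic private dataset (an assumption): noiseless samples of y = 2x + 1."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0) for x in xs]

def run_federated(rounds=100):
    """Run several communication rounds; only parameters travel to the server."""
    clients = [make_client_data(n, seed) for seed, n in enumerate([20, 50, 30])]
    global_w = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in clients]
        global_w = fed_avg(updates, [len(d) for d in clients])
    return global_w

if __name__ == "__main__":
    print(run_federated())
```

In a QFL setting, the local update or the model itself would be replaced or augmented by a quantum component, while the aggregation pattern stays broadly the same.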

Key technologies underpinning this progress include quantum computing, which exploits quantum-mechanical phenomena such as superposition and entanglement, and federated learning, a distributed approach to machine learning. Researchers are also exploring techniques like Blind Quantum Computing, which lets a client delegate a computation securely, and Quantum Neural Networks, which leverage quantum principles to enhance neural network performance. Graph Neural Networks, well-suited for analyzing complex financial relationships, are also being integrated into these systems, alongside techniques like differential privacy and homomorphic encryption to further protect privacy. While financial fraud detection is a primary focus, QFL is also being explored in areas like the Internet of Things, healthcare, cybersecurity, and vehicular networks.

This work addresses challenges such as high computational demands, privacy vulnerabilities, and communication inefficiencies, particularly with low-resource clients. The study details how QFL leverages quantum computing’s unique capabilities to enhance both the speed and security of collaborative model training without requiring data to leave individual data silos. The core innovation lies in employing quantum algorithms, specifically the Quantum Approximate Optimization Algorithm (QAOA) and the Variational Quantum Eigensolver (VQE), to tackle complex optimization problems frequently encountered in deep learning.

These algorithms harness quantum parallelism to efficiently explore vast solution spaces, potentially identifying superior minima in high-dimensional loss landscapes. Researchers demonstrate how this approach improves both the accuracy and convergence rates of model training, especially with large-scale datasets. Furthermore, the study explores quantum techniques to address privacy concerns, investigating quantum key distribution, quantum homomorphic encryption, quantum differential privacy, and blind quantum computing as potential security mechanisms. The team also investigates quantum data encoding, quantum feature mapping, and quantum feature selection and dimensionality reduction to improve data representation and model performance.

Researchers classify QFL systems based on architecture, data processing methods, network topology, and security mechanisms, revealing a diverse landscape of approaches. Pure QFL systems enable training of global quantum models, maintaining data privacy through techniques like the Variational Quantum Eigensolver (VQE) and using quantum properties such as superposition, interference, and entanglement to enhance learning efficiency and security. Recent studies demonstrate Pure QFL’s adaptability, with teams introducing entangled slimmable quantum neural networks (esQNNs) that maintain performance under changing conditions, and showcasing a communication-efficient Variational Quantum Algorithm.

Hybrid QFL models combine classical neural network layers with quantum layers, initially processing data with convolutional or fully connected layers to reduce computational load before encoding features into quantum states. These systems employ classical optimization algorithms to train quantum circuits iteratively. Data processing methods fall into three categories: quantum data encoding, quantum feature mapping, and quantum feature selection and dimensionality reduction. Researchers developed systems using quantum encoding to efficiently transform classical data into quantum states, with one study utilizing variational quantum circuits for quantum encoding of textual data.
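The hybrid pattern described above (a classical layer that reduces the input, followed by a quantum layer trained by a classical optimizer) can be sketched with a hand-written single-qubit simulation. Everything here is an illustrative assumption rather than the method of any surveyed system: the fixed classical weights `w`, the angle encoding via an RY rotation, the single trainable parameter `theta`, and the toy dataset. The gradient uses the parameter-shift rule, a standard way to differentiate rotation-gate circuits.

```python
import math

def ry(angle, state):
    """Apply a single-qubit RY(angle) rotation to a real two-amplitude state."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    a, b = state
    return (c * a - s * b, s * a + c * b)

def classical_layer(features, w):
    """Classical pre-processing (assumed fixed here): reduce features to one angle."""
    return sum(wi * xi for wi, xi in zip(w, features))

def quantum_layer(angle, theta):
    """Angle-encode the input on |0>, apply trainable RY(theta), return <Z>."""
    state = ry(angle, (1.0, 0.0))   # data encoding
    state = ry(theta, state)        # variational (trainable) gate
    a, b = state
    return a * a - b * b            # expectation value of Pauli-Z

def param_shift_grad(angle, theta):
    """Parameter-shift rule: d<Z>/d(theta) from two shifted circuit evaluations."""
    plus = quantum_layer(angle, theta + math.pi / 2)
    minus = quantum_layer(angle, theta - math.pi / 2)
    return 0.5 * (plus - minus)

def train(data, w, theta=0.0, lr=0.2, steps=200):
    """Classical gradient descent on mean squared error over the quantum output."""
    for _ in range(steps):
        grad = 0.0
        for features, target in data:
            angle = classical_layer(features, w)
            pred = quantum_layer(angle, theta)
            grad += 2 * (pred - target) * param_shift_grad(angle, theta)
        theta -= lr * grad / len(data)
    return theta

# Toy data (an assumption): targets produced by the same circuit with theta = 0.8.
W = (0.5, 0.5)
FEATURES = [(0.2, 0.4), (1.0, 0.6), (-0.4, 0.2), (0.8, 1.2)]
DATA = [(f, quantum_layer(classical_layer(f, W), 0.8)) for f in FEATURES]

if __name__ == "__main__":
    print(train(DATA, W))
```

In a federated deployment, each client would run a loop like `train` locally and send only `theta` (and any classical weights) to the aggregator.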

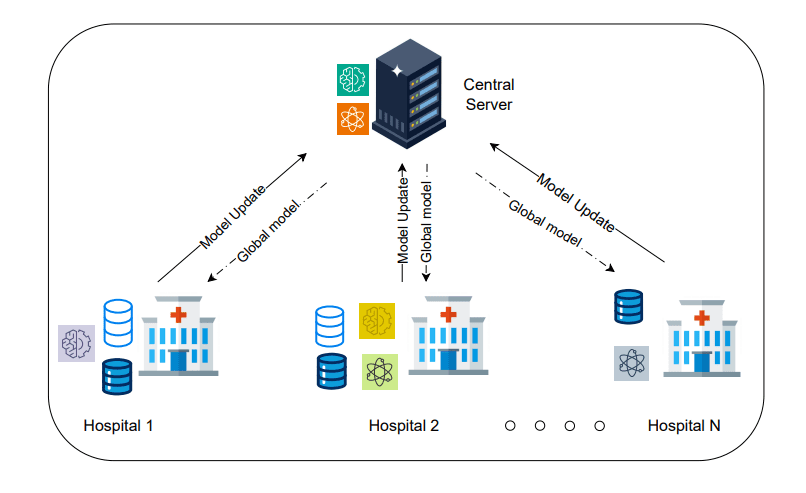

Quantum feature mapping techniques exploit quantum properties to create high-dimensional representations of data. Network topology in QFL systems is categorized as centralized, hierarchical, or decentralized, with the centralized approach being the most common. The team demonstrates how integrating quantum computation into the learning process can improve efficiency, security, and performance, particularly in scenarios with limited resources or non-identical data distributions. A key achievement is the development of a flexible QFL architecture, categorized by its system design, data processing methods, network topology, and security mechanisms, allowing for adaptation to diverse applications. The researchers successfully implemented and tested this framework within the context of Low Earth Orbit (LEO) satellite constellations, showcasing its feasibility in a challenging communication environment.

Through simulations using datasets such as Statlog and EuroSAT, they compared three scheduling modes (sequential, simultaneous, and asynchronous) alongside various security enhancements, including quantum key distribution and quantum teleportation. Results indicate that while fully synchronized approaches may appear faster in ideal conditions, the sat-QFL modes maintain competitive model quality and are better suited to the realities of intermittent connectivity in orbit. The authors acknowledge that current research primarily focuses on classification tasks, and that expanding QFL to areas like time-series analysis, object detection, and optimization represents a crucial next step. They also highlight the need for clearer justification of quantum resource utilization and for further investigation into the system’s variability and the potential for adversarial attacks.

👉 More information

🗞 Quantum Federated Learning: Architectural Elements and Future Directions

🧠 ArXiv: https://arxiv.org/abs/2510.17642