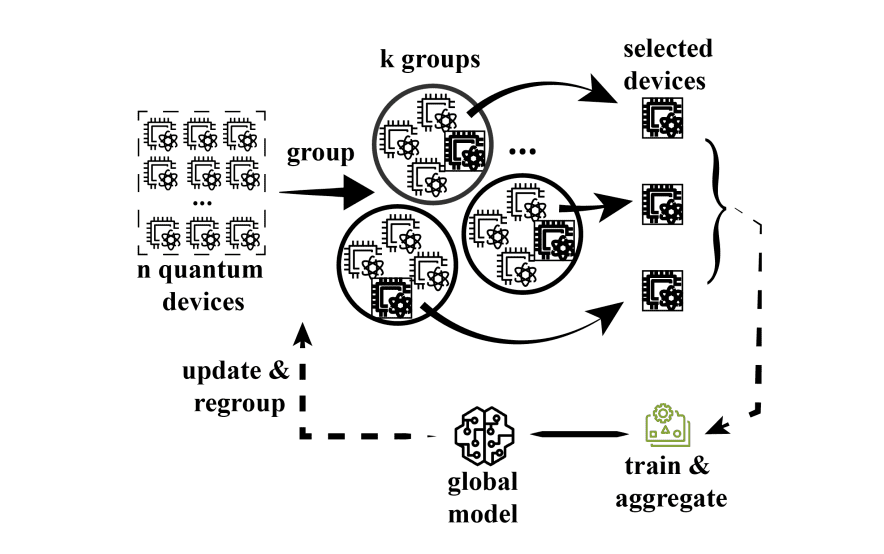

A novel model-driven quantum federated learning algorithm (mdQFL) addresses scalability and data-heterogeneity limitations in quantum federated learning. In the authors’ experiments, mdQFL reduces communication costs by nearly 50% while maintaining or improving model accuracy and local training performance compared to standard quantum federated learning baselines.

The increasing volume of data and the proliferation of connected devices present significant challenges to distributed machine learning techniques such as federated learning (FL). FL enables collaborative model training without direct data exchange, but can suffer from communication bottlenecks and performance degradation due to variations in data distribution across participating devices. Researchers are now exploring the potential of quantum computation to address these limitations. A new study by Gurung et al. introduces a model-driven quantum federated learning (mdQFL) algorithm designed to improve communication efficiency and adaptability in heterogeneous quantum federated learning systems. Their work, titled ‘Communication Efficient Adaptive Model-Driven Quantum Federated Learning’, details a framework that demonstrably reduces communication costs – by almost 50% in their experiments – while maintaining or improving model accuracy compared to existing quantum federated learning approaches.

Model-Driven Quantum Federated Learning for Enhanced Efficiency

Conventional federated learning (FL) systems face challenges when dealing with large datasets, a high number of participating devices, and data that is not independently and identically distributed (non-IID). This research details a model-driven quantum federated learning (mdQFL) algorithm designed to address these limitations and improve both efficiency and accuracy in distributed machine learning.
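To make the non-IID condition concrete, the following minimal sketch contrasts a shuffled (roughly IID) split with a label-skewed split, which is one common form of data heterogeneity. It is illustrative only: the summary above does not state how the paper partitions its data, and the function names `partition_iid`, `partition_label_skew`, and `label_histogram` are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence


def partition_iid(labels: Sequence[int], num_clients: int, seed: int = 0) -> List[List[int]]:
    """Shuffle sample indices and deal them out, so clients tend to see a similar label mix."""
    order = list(range(len(labels)))
    random.Random(seed).shuffle(order)
    return [order[c::num_clients] for c in range(num_clients)]


def partition_label_skew(labels: Sequence[int], num_clients: int) -> List[List[int]]:
    """Sort indices by label and cut contiguous shards, so each client sees few classes.

    Assumption: label skew stands in for non-IID data here; other forms
    (e.g. unequal sample counts per client) are not modelled.
    """
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    shard = -(-len(order) // num_clients)  # ceiling division
    return [order[c * shard:(c + 1) * shard] for c in range(num_clients)]


def label_histogram(labels: Sequence[int], indices: Sequence[int]) -> Dict[int, int]:
    """Count how many samples of each label a client holds."""
    return dict(Counter(labels[i] for i in indices))
```

Comparing `label_histogram` across the clients returned by each function shows the difference: under the label-skewed split, each client's histogram is dominated by a small number of classes, which is the situation in which averaged models tend to degrade.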

The mdQFL algorithm targets training bottlenecks and scalability within quantum federated learning environments. It integrates quantum computing into the FL framework and, in the reported experiments, reduces communication costs by almost 50% while maintaining, and often improving, model accuracy. The framework also improves local model training relative to existing baseline methods, which the authors take as evidence of a more robust and adaptable learning process.

Theoretical analysis underpins the empirical findings. The researchers establish bounds on computational complexity and performance, relying on assumptions of Lipschitz continuity (a condition that bounds how quickly a function’s output can change as its input changes) and employing a trust region approach to optimisation, in which each step is restricted to a region around the current solution where a local approximation of the objective is trusted. Specifically, they define a regret bound for the COBYLA (Constrained Optimization BY Linear Approximation) optimisation algorithm, relating it to the trust region radius. Regret, in this context, measures the gap between the algorithm’s performance and that of the optimal solution. They also derive a convergence rate for the qFedAvg algorithm, a quantum analogue of the widely used Federated Averaging algorithm, of $O(\frac{1}{T})$, where $T$ is the number of iterations. In other words, the bound on the algorithm’s error shrinks in proportion to $1/T$.
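For reference, the aggregation step of classical Federated Averaging, the algorithm that qFedAvg is described as an analogue of, can be sketched as a sample-weighted average of client parameters. This is a minimal sketch of the classical step only, not the paper's quantum variant; the function name `federated_average` and the use of plain Python lists for parameters are assumptions made for illustration.

```python
from typing import List, Sequence


def federated_average(
    client_params: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Return the average of client parameter vectors, weighted by local sample count.

    Assumption: every client reports a parameter vector of the same length
    together with the number of samples it trained on.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need exactly one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_params[0])
    if any(len(params) != dim for params in client_params):
        raise ValueError("all parameter vectors must have the same length")

    averaged = [0.0] * dim
    for params, size in zip(client_params, client_sizes):
        weight = size / total  # clients with more data contribute more
        for i, value in enumerate(params):
            averaged[i] += weight * value
    return averaged
```

For example, `federated_average([[0.0, 2.0], [4.0, 6.0]], [1, 3])` weights the second client three times as heavily as the first. In a full training loop the server would broadcast the averaged vector back to the clients and repeat for $T$ rounds.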

Experimental results, presented through figures and tables, compare mdQFL with existing methods and illustrate its performance gains. The researchers evaluate the algorithm across diverse datasets and configurations to test the robustness and generalisability of the results. The availability of source code and experimental data promotes reproducibility and encourages further research.

An analysis of the algorithm’s computational complexity provides insight into its scalability and its feasibility for deployment in resource-constrained environments. The researchers acknowledge potential challenges and limitations, and outline areas for future investigation. The study concludes by highlighting the potential of quantum federated learning for applications in healthcare, finance, and autonomous systems.

The researchers also examine personalisation of training and model updates, alongside generalisation at test time, within the quantum federated learning (QFL) context. These ideas may carry over to broader federated learning scenarios and could support more robust and adaptable models.

👉 More information

🗞 Communication Efficient Adaptive Model-Driven Quantum Federated Learning

🧠 DOI: https://doi.org/10.48550/arXiv.2506.04548