Quantum computers promise revolutionary computational power, but current devices are prone to errors that limit their potential. Yibin Guo, Yi Fan, and Pei Liu, along with colleagues at their respective institutions, investigate a critical flaw in commonly used error mitigation techniques. Their work shows that methods designed to correct readout errors improve raw results but also absorb state preparation errors into the correction. This produces a systematic deviation that grows exponentially with the number of qubits. The researchers demonstrate that this overlooked effect significantly overestimates the fidelity of large entangled states. It also distorts the outcomes of important algorithms, such as those used for eigensolving and for simulating time evolution. These findings call for a more careful approach to benchmarking and managing state preparation errors in future quantum processors.

Quantum Chemistry Algorithms And Near-Term Implementation

Researchers are developing quantum algorithms and strategies to overcome the limitations of current quantum hardware in quantum chemistry applications. This work covers three areas:

- algorithms for calculating molecular energies, such as the Variational Quantum Eigensolver (VQE);
- ansätze for representing molecular electronic structure, such as Unitary Coupled Cluster (UCC);
- techniques for minimizing computational errors.

Implementing UCC on near-term quantum computers requires approximations such as Trotterization. Researchers reduce the resulting errors through higher-order decompositions and optimized operator ordering. To estimate ideal, noise-free results, scientists employ Zero-Noise Extrapolation (ZNE) and Probabilistic Error Cancellation (PEC). They are also developing methods to correct errors in measuring qubit states and to identify dominant error sources.
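The following single-qubit toy makes the Trotterization error concrete. The Hamiltonian H = aX + bZ is our illustrative choice, not a molecular UCC generator. It is split into its two non-commuting Pauli terms, and each product formula is compared with the exact evolution. The sketch assumes that the Frobenius norm is an adequate error measure. All function names are hypothetical.

Under those assumptions, the first-order product error should shrink roughly as 1/n with the number of steps n. The symmetric second-order (Strang) splitting should shrink roughly as 1/n².

```python
import math

I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Z = [[1, 0], [0, -1]]


def matmul(a, b):
    """Multiply two 2x2 (complex) matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def pauli_exp(theta, p):
    """exp(-i*theta*P) for any 2x2 matrix P with P @ P = I."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * I2[i][j] - 1j * s * p[i][j] for j in range(2)] for i in range(2)]


def exact_evolution(a, b, t):
    """exp(-i*t*(aX + bZ)); H/r squares to I when r = hypot(a, b)."""
    r = math.hypot(a, b)
    h_unit = [[(a * X[i][j] + b * Z[i][j]) / r for j in range(2)] for i in range(2)]
    return pauli_exp(r * t, h_unit)


def trotter(a, b, t, n, order):
    """Approximate exp(-i*t*(aX + bZ)) with n first- or second-order steps."""
    dt = t / n
    if order == 1:
        step = matmul(pauli_exp(a * dt, X), pauli_exp(b * dt, Z))
    elif order == 2:  # symmetric (Strang) splitting: half X, full Z, half X
        half = pauli_exp(a * dt / 2, X)
        step = matmul(matmul(half, pauli_exp(b * dt, Z)), half)
    else:
        raise ValueError("order must be 1 or 2")
    u = I2
    for _ in range(n):
        u = matmul(u, step)
    return u


def frobenius_error(u, v):
    """Frobenius norm of u - v."""
    return math.sqrt(sum(abs(u[i][j] - v[i][j]) ** 2
                         for i in range(2) for j in range(2)))


if __name__ == "__main__":
    a, b, t = 1.0, 0.7, 1.0
    exact = exact_evolution(a, b, t)
    for n in (32, 64, 128):
        e1 = frobenius_error(trotter(a, b, t, n, 1), exact)
        e2 = frobenius_error(trotter(a, b, t, n, 2), exact)
        print(f"n={n:4d}  first-order={e1:.2e}  second-order={e2:.2e}")
```

Doubling n should roughly halve the first-order error and quarter the second-order error. This is the practical payoff of the higher-order decompositions mentioned above.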

These efforts rely on several mathematical tools:

- the Fock-space (second-quantized) description of electrons;
- fermion-to-qubit mappings such as the Jordan-Wigner and Bravyi-Kitaev transformations.

Software packages such as PySCF and Q2Chemistry support this work, and derivative-free optimization algorithms refine the variational parameters. Researchers are also investigating symmetry adaptation, which reduces qubit requirements. By exploiting point group symmetry, they can simplify calculations and develop error-resilient algorithms. In this rapidly evolving field, theoretical advances and robust software tools are crucial for overcoming hardware limitations.
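The Jordan-Wigner mapping can be checked numerically on a few modes. The sketch below assumes one common convention: mode 0 is the leftmost tensor factor, |1⟩ means "occupied", and a_j carries a string of Z operators on all lower-indexed modes. It then verifies the fermionic anticommutation relations {a_i, a_j†} = δ_ij and {a_i, a_j} = 0 with dense matrices.

This is a teaching sketch in plain Python, not the API of PySCF, Q2Chemistry, or any other package, and all names are hypothetical.

```python
I2 = [[1, 0], [0, 1]]
Z = [[1, 0], [0, -1]]
LOWER = [[0, 1], [0, 0]]  # |0><1| = (X + iY)/2: removes one occupation


def kron(a, b):
    """Kronecker product of two dense matrices given as nested lists."""
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]


def matmul(a, b):
    """Dense matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def dagger(a):
    """Conjugate transpose (int and complex both support .conjugate())."""
    return [[a[j][i].conjugate() for j in range(len(a))] for i in range(len(a[0]))]


def jordan_wigner_annihilation(j, n):
    """a_j = Z x ... x Z x LOWER x I x ... x I, with j leading Z factors."""
    op = [[1]]
    for k in range(n):
        factor = Z if k < j else LOWER if k == j else I2
        op = kron(op, factor)
    return op


def anticommutator(a, b):
    """{a, b} = ab + ba."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] + ba[i][j] for j in range(len(ab[0]))] for i in range(len(ab))]


def is_scaled_identity(m, scale):
    """True if m equals scale * I (scale = 0 checks for the zero matrix)."""
    return all(abs(m[i][j] - (scale if i == j else 0)) < 1e-12
               for i in range(len(m)) for j in range(len(m)))


if __name__ == "__main__":
    n = 3
    ops = [jordan_wigner_annihilation(j, n) for j in range(n)]
    ok = all(is_scaled_identity(anticommutator(ops[i], dagger(ops[j])), int(i == j))
             and is_scaled_identity(anticommutator(ops[i], ops[j]), 0)
             for i in range(n) for j in range(n))
    print("canonical anticommutation relations hold:", ok)
```

The Z strings are what make operators on different qubits anticommute rather than commute. They are also why Jordan-Wigner operators can act on O(n) qubits. The Bravyi-Kitaev transformation reduces that weight to O(log n).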

Quantifying Readout Errors in Noisy Quantum Systems

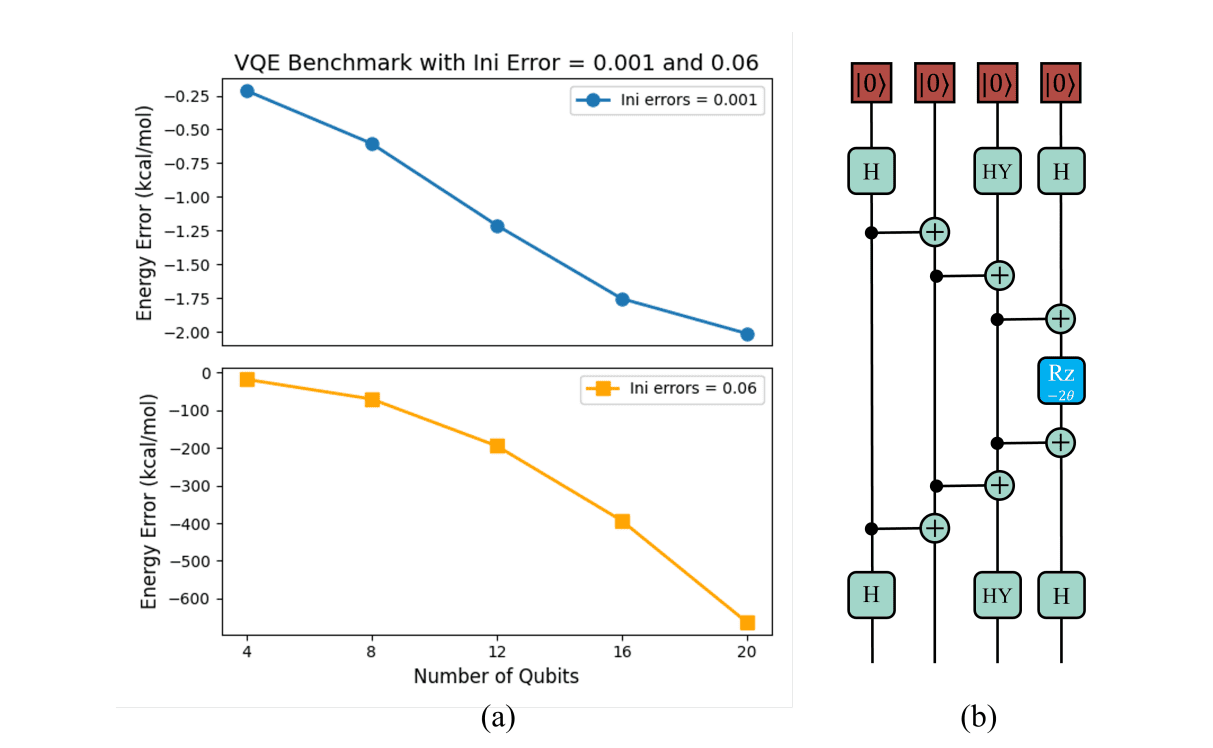

Scientists have developed a rigorous methodology to investigate the impact of errors in noisy quantum devices, with a focus on state preparation errors. They model experimentally measured probability distributions as vectors acted on by a measurement-error matrix. This relates noisy and ideal distributions mathematically and establishes a foundation for quantifying and mitigating readout errors.

The research shows that conventional quantum readout error mitigation (QREM) techniques introduce systematic errors that escalate with qubit number. For the preparation and measurement of large-scale entangled states, the team found that state preparation error leads to a significant overestimation of fidelity, which masks hardware limitations. It also affects prevalent quantum algorithms such as the variational quantum eigensolver (VQE) and quantum time evolution (QTE) methods.

The work analyzes in detail how state preparation and measurement (SPAM) errors affect the accuracy of quantum computation. It derives an upper bound on the acceptable state preparation error rate, a critical constraint for obtaining reliable results as quantum systems grow in size. This framework supports benchmarking and managing state preparation errors, paving the way for more accurate and reliable quantum computations.
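The linear-algebra picture described here (noisy distribution = M × ideal distribution) can be sketched for the common special case where readout errors are assumed independent across qubits. In that case M is a Kronecker product of 2×2 confusion matrices, and its inverse can be applied one qubit at a time. This is one standard QREM variant, not necessarily the exact scheme analyzed in the paper. The function names and error rates are illustrative assumptions, not values from the paper.

```python
def confusion_matrix(p01, p10):
    """Column-stochastic readout matrix, entry [m][b] = P(read m | qubit in b).

    p01 = P(read 1 | 0), p10 = P(read 0 | 1).
    """
    return [[1 - p01, p10], [p01, 1 - p10]]


def inverse_2x2(m):
    """Closed-form inverse of a 2x2 matrix."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]


def apply_on_qubit(vec, mat, k):
    """Apply a 2x2 matrix to qubit k (bit k of the index) of a 2^n vector."""
    out = [0.0] * len(vec)
    for idx, value in enumerate(vec):
        bit = (idx >> k) & 1
        base = idx & ~(1 << k)
        for new_bit in (0, 1):
            out[base | (new_bit << k)] += mat[new_bit][bit] * value
    return out


def apply_tensor_product(vec, mats):
    """Apply mats[0] x mats[1] x ... to vec, one qubit at a time."""
    for k, m in enumerate(mats):
        vec = apply_on_qubit(vec, m, k)
    return vec


def mitigate(noisy, mats):
    """Tensor-product QREM: (M_0 x ... x M_{n-1})^-1 = M_0^-1 x ... x M_{n-1}^-1."""
    return apply_tensor_product(noisy, [inverse_2x2(m) for m in mats])


if __name__ == "__main__":
    mats = [confusion_matrix(0.02, 0.05), confusion_matrix(0.01, 0.04),
            confusion_matrix(0.03, 0.06)]
    ideal = [0.5, 0, 0, 0, 0, 0, 0, 0.5]  # 3-qubit GHZ populations
    noisy = apply_tensor_product(ideal, mats)
    print("noisy:    ", [round(x, 4) for x in noisy])
    print("mitigated:", [round(x, 4) for x in mitigate(noisy, mats)])
```

With exact probabilities and a perfectly characterized M, the inversion recovers the ideal distribution. With finite-shot data, the mitigated vector can contain small negative entries, which practical implementations usually clip or project back onto valid probabilities.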

State Preparation Errors Limit Quantum Fidelity

Conventional readout error mitigation corrects measurement inaccuracies, but the analysis shows that it also folds the initial state preparation error into the correction. This produces systematic errors that grow exponentially with qubit number. When large entangled states are prepared and characterized, this mixture of state preparation and measurement (SPAM) errors biases fidelity upward, and the overestimation scales exponentially with system size. It also skews widely used algorithms such as the variational quantum eigensolver (VQE) and quantum time evolution (QTE) methods.

The deviation compounds because the mitigation matrix is affected by the initialization error of every qubit, so each added qubit contributes to the bias. To quantify how much state preparation error is acceptable, the researchers calculated an upper bound on the initialization error rate that keeps the deviation of outcomes bounded, enabling more reliable results from quantum computers. The findings emphasize the need to carefully benchmark and treat state preparation errors as quantum systems scale up.
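A toy calculation shows how a calibration matrix that silently includes initialization error can compound with qubit number. The assumptions are ours, not the paper's:

- each qubit independently starts in the wrong basis state with probability p_init, so preparing |1⟩ with an X gate fails with the same probability;
- readout errors are independent across qubits;
- the observable is the n-qubit parity Z⊗…⊗Z of the target |0…0⟩.

Calibration then measures R·P instead of the readout matrix R alone. Inverting it divides every n-qubit parity by an extra factor (1 − 2p_init)^n. For this trivial circuit, the calibrated estimate reports a perfect parity of 1 even though the physically prepared state has parity (1 − 2p_init)^n. The initialization error is therefore hidden by a factor that grows exponentially with n. All function names are hypothetical.

```python
def matmul2(a, b):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def inverse_2x2(m):
    """Closed-form inverse of a 2x2 matrix."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]


def flip_matrix(q):
    """Symmetric bit flip with probability q (toy initialization error model)."""
    return [[1 - q, q], [q, 1 - q]]


def apply_on_qubit(vec, mat, k):
    """Apply a 2x2 matrix to qubit k (bit k of the index) of a 2^n vector."""
    out = [0.0] * len(vec)
    for idx, value in enumerate(vec):
        bit = (idx >> k) & 1
        base = idx & ~(1 << k)
        for new_bit in (0, 1):
            out[base | (new_bit << k)] += mat[new_bit][bit] * value
    return out


def apply_all(vec, mat, n):
    """Apply the same 2x2 matrix to every one of the n qubits."""
    for k in range(n):
        vec = apply_on_qubit(vec, mat, k)
    return vec


def parity(vec):
    """<Z x ... x Z>: +1 for even-weight bitstrings, -1 for odd-weight ones."""
    return sum(v * (-1) ** bin(idx).count("1") for idx, v in enumerate(vec))


def parities(n, p_init, readout):
    """Mitigated parity of |0...0> with a readout-only vs. a calibrated inverse."""
    prep = flip_matrix(p_init)
    calibrated = matmul2(readout, prep)  # calibration sees readout AND prep error
    ideal = [0.0] * 2 ** n
    ideal[0] = 1.0  # target state |0...0>
    measured = apply_all(apply_all(ideal, prep, n), readout, n)
    readout_only = parity(apply_all(measured, inverse_2x2(readout), n))
    with_prep = parity(apply_all(measured, inverse_2x2(calibrated), n))
    return readout_only, with_prep


if __name__ == "__main__":
    readout = [[0.98, 0.04], [0.02, 0.96]]  # illustrative readout error rates
    p_init = 0.01
    for n in range(1, 9):
        r, c = parities(n, p_init, readout)
        print(f"n={n}  readout-only={r:.4f}  calibrated={c:.4f}  ratio={c / r:.4f}")
```

In a circuit with entangling gates, the gates transform initialization errors before measurement, so this per-qubit inverse no longer matches them. The paper's treatment of that case is not reproduced here.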

Implications for Scaling Up Quantum Processors

Taken together, the results show that conventional readout error mitigation introduces systematic errors that grow exponentially with the number of qubits. Because the mitigation also absorbs errors from the initial state preparation, it overestimates fidelity, particularly for large-scale entangled states, and can mask genuine gate errors. Applying their analysis to quantum chemistry algorithms, the researchers found significant deviations from ideal outcomes as system size increases. Their upper bound on acceptable state preparation error rates highlights the need for more precise qubit reset techniques as quantum systems grow.

The authors suggest that self-consistent characterization methods may offer a viable solution for near-term quantum applications. They also note that independent work corroborates their finding that state preparation errors can bias estimates of observable expectation values. This reinforces the significance of a previously overlooked source of error in quantum computation.
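An upper bound of this kind can be illustrated with a simple toy model. The model is our assumption, not the paper's derivation. Suppose mitigation inflates an n-qubit estimate by a factor (1 − 2p)^(−n), where p is the per-qubit initialization error. Keeping that inflation at or below 1 + δ then requires

p ≤ [1 − (1 + δ)^(−1/n)] / 2 ≈ ln(1 + δ) / (2n),

so the tolerable error rate shrinks roughly as 1/n. The tolerance δ and the function names are illustrative.

```python
import math


def overcorrection_factor(p, n):
    """Toy-model inflation (1 - 2p)^(-n) of an n-qubit mitigated estimate."""
    return (1 - 2 * p) ** (-n)


def max_init_error(n, delta):
    """Largest per-qubit initialization error p with (1 - 2p)^(-n) <= 1 + delta."""
    return 0.5 * (1 - (1 + delta) ** (-1 / n))


if __name__ == "__main__":
    delta = 0.05  # tolerate at most a 5% inflation (illustrative choice)
    for n in (10, 50, 100, 500):
        p_max = max_init_error(n, delta)
        approx = math.log1p(delta) / (2 * n)  # large-n approximation
        print(f"n={n:4d}  p_max={p_max:.2e}  approx={approx:.2e}")
```

The bound tightens in inverse proportion to qubit count. This 1/n scaling is what would make qubit reset fidelity a scaling bottleneck in such a model.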

👉 More information

🗞 The Exponential Deviation Induced by Quantum Readout Error Mitigation

🧠 ArXiv: https://arxiv.org/abs/2510.08687