Controlling quantum bits with sufficient accuracy remains a major hurdle in building practical quantum computers, and high-fidelity operations are critical for overcoming the limitations of current technology. Mohammad Abedi and Markus Schmitt, both from the Quantum Control group at Forschungszentrum Jülich, present a new method for designing the complex sequences of operations needed to link, or entangle, quantum bits based on electron spins in semiconductor structures. Their work demonstrates that reinforcement learning can discover effective control protocols even when realistic experimental imperfections and noise are taken into account, and, importantly, that it avoids the limitations of more traditional optimisation approaches. The research is a step towards scalable quantum computation, offering a robust and adaptable method for achieving the precise control that complex quantum algorithms require.

Deep Reinforcement Learning for Qubit Control

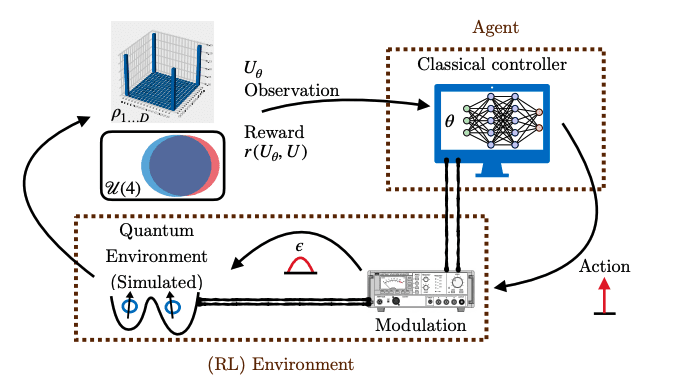

This research details a new approach to controlling quantum systems, employing deep reinforcement learning (DRL) to design optimal control sequences for qubits, the fundamental building blocks of quantum computers. The work focuses on achieving high-fidelity control, crucial for building scalable quantum computers, and addresses the challenges posed by qubit susceptibility to noise. Traditional methods for quantum control often require complex manual tuning and can struggle with noise, so researchers turned to DRL to automate the process and potentially overcome these limitations. The team used a sophisticated DRL algorithm, Soft Actor-Critic, which learns from past experiences and prioritises both reward and exploration.
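The balance between reward and exploration in Soft Actor-Critic is usually written as a maximum-entropy objective. The form below is the standard textbook statement, not a formula quoted from the paper; the symbols (policy $\pi$, reward $r$, temperature $\alpha$, entropy $\mathcal{H}$) are notation introduced here for illustration.

$$
J(\pi) = \sum_{t} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}\Big[\, r(s_t, a_t) + \alpha\, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \Big]
$$

A larger $\alpha$ rewards more random (exploratory) policies, while $\alpha \to 0$ recovers the usual expected-return objective.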

To improve the algorithm’s robustness and ability to generalise, they incorporated techniques like dropout Q-functions and truncated mixture of distributional quantile critics, which help to avoid overestimation bias. The DRL agent learns by interacting with a simulated quantum system, receiving rewards based on the fidelity of the implemented quantum gates. The research focuses on spin qubits, specifically those based on singlet-triplet states in GaAs heterostructures, and incorporates realistic noise models, particularly charge noise, which significantly impacts qubit coherence and gate fidelity. The team developed a software package, qopt, for simulating the quantum system and evaluating the performance of the learned control pulses, utilising the Julia programming language and standard machine learning libraries.
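To illustrate the truncation idea behind the quantile critics, here is a minimal sketch, not the authors' implementation. It assumes each critic outputs a fixed number of quantile estimates of the return; the function name `truncated_mixture` and the parameter `drop_per_critic` are hypothetical.

```python
import numpy as np


def truncated_mixture(quantiles, drop_per_critic):
    """Pool the quantile estimates of all critics and drop the largest ones.

    quantiles: array of shape (n_critics, n_atoms), one row per critic.
    drop_per_critic: number of atoms discarded per critic (assumed parameter).
    Returns the sorted atoms that remain after truncation.
    """
    n_critics, n_atoms = quantiles.shape
    pooled = np.sort(quantiles.reshape(-1))           # mixture of all critics
    n_keep = n_critics * (n_atoms - drop_per_critic)  # discard the top atoms
    return pooled[:n_keep]


# Two toy critics with three quantile atoms each (made-up numbers).
example_quantiles = np.array([[1.0, 2.0, 3.0],
                              [0.0, 5.0, 10.0]])
kept_atoms = truncated_mixture(example_quantiles, drop_per_critic=1)
full_mean = example_quantiles.mean()   # value estimate without truncation
truncated_mean = kept_atoms.mean()     # more conservative estimate
```

Dropping the largest pooled atoms lowers the value estimate used as the learning target, which is how this kind of truncation counteracts overestimation.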

This simulation environment allows the agent to learn without the need for extensive physical experimentation. The results demonstrate the ability to design quantum gates with fidelities exceeding 99.9% using DRL, showcasing a significant improvement over traditional methods. The learned control pulses are also shown to be more robust to noise, offering a pathway towards more reliable quantum computations. This automation of the gate design and calibration process reduces the need for manual tuning, streamlining the development of quantum hardware.
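For readers unfamiliar with how a gate fidelity is quantified, the sketch below computes the average gate fidelity between a target unitary and an implemented one, using the standard relation for unitary operations. It is an illustration only: the article does not state which fidelity measure the authors use as a reward, and the function name is hypothetical.

```python
import numpy as np


def average_gate_fidelity(u_target, u_actual):
    """Average gate fidelity between two unitaries of dimension d.

    Uses F_avg = (d * F_pro + 1) / (d + 1) with the process fidelity
    F_pro = |Tr(U_target^dagger U_actual)|^2 / d^2.
    """
    d = u_target.shape[0]
    overlap = np.trace(u_target.conj().T @ u_actual)
    f_pro = abs(overlap) ** 2 / d ** 2
    return (d * f_pro + 1) / (d + 1)


# CNOT as the target gate, compared with itself and with "doing nothing".
cnot = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)
identity = np.eye(4, dtype=complex)

perfect = average_gate_fidelity(cnot, cnot)       # ideal implementation
idle = average_gate_fidelity(cnot, identity)      # no gate applied at all
```

A fidelity above 99.9% means the implemented operation deviates from the target by less than one part in a thousand on this scale.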

Reinforcement Learning Optimises Qubit Control Sequences

Researchers have developed a novel approach to controlling quantum systems, employing reinforcement learning to design optimal control sequences for qubits. Unlike traditional methods that rely on detailed models of the quantum system and its noise, this technique allows an intelligent agent to learn directly from interactions with a simulated quantum environment. This avoids potential biases introduced by inaccurate models, which is particularly advantageous as building precise models of complex quantum systems is often challenging. Crucially, the team addressed the practical limitations of real quantum hardware by incorporating realistic constraints into the simulation, including the finite speed of control signals and the presence of various noise sources.

This ensures that the learned control protocols are not merely theoretical optima but are also feasible for implementation on actual quantum devices. A key innovation lies in how the agent interacts with the simulated quantum environment; instead of requiring extensive measurements to characterise the system’s state, the researchers designed the system to provide feedback based on the overall performance of the control sequence, reducing the experimental resources needed for training. Furthermore, the team accounted for the inherent disturbance caused by observing a quantum system, a fundamental challenge in quantum control, by carefully designing the feedback mechanism. To bridge the gap between simulation and reality, the researchers incorporated a detailed model of the control hardware into the simulation, accurately capturing the dynamics of the control signals and their impact on the qubits. The agent learns to generate continuous control signals, which are then convolved with the measured impulse response of the hardware, effectively modelling the time-dependent behaviour of the system. This level of realism is crucial for translating the learned control strategies into practical implementations on real quantum devices.
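The convolution step can be sketched in a few lines. The example below is an assumption-laden illustration: in place of the measured impulse response described above, it uses a hypothetical exponential kernel with time constant `tau`, normalised to unit sum so that a constant input is passed through unchanged once the filter has settled.

```python
import numpy as np


def exponential_kernel(n_samples, dt, tau):
    """Hypothetical stand-in for a measured impulse response."""
    t = np.arange(n_samples) * dt
    kernel = np.exp(-t / tau)
    return kernel / kernel.sum()  # unit sum: constant inputs keep their level


def apply_hardware_filter(pulse, kernel):
    """Causal convolution of the ideal pulse with the impulse response."""
    return np.convolve(pulse, kernel)[: len(pulse)]


# An ideal step pulse switched on at t = 0 (zero before, one afterwards).
ideal_pulse = np.ones(200)
kernel = exponential_kernel(n_samples=200, dt=0.1, tau=1.0)
filtered_pulse = apply_hardware_filter(ideal_pulse, kernel)
```

The filtered pulse rises gradually instead of jumping, which is the finite rise time the agent has to compensate for when it chooses its control signals.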

Reinforcement Learning Optimises Qubit Control Pulses

Researchers have achieved high-fidelity control of qubits using a novel approach to optimising the pulses that manipulate them. The team focused on semiconductor-based qubits, specifically those encoded using electron spins in a system of quantum dots, and developed a reinforcement learning (RL) agent to design the optimal control sequences. This agent learns to create the precise pulses needed to entangle qubits, a crucial step for performing complex quantum computations. The research addresses a significant challenge in quantum computing: the inherent noise and imperfections in physical qubits. Traditional methods for optimising control pulses often rely on models of the system, which can introduce inaccuracies and limit performance.

Instead, the team’s RL agent learns directly from the simulated quantum dot environment, allowing it to discover control strategies that are robust to realistic experimental constraints like noise and limited pulse speeds. This avoids the biases inherent in model-based optimisation techniques and can potentially achieve higher fidelity. The team demonstrated the effectiveness of their approach by optimising the creation of a CNOT gate, a fundamental building block for quantum algorithms. The resulting control pulses achieved performance exceeding that of traditional gradient-based methods, even when subjected to various sources of noise, including fluctuations in magnetic fields, charge noise affecting the qubit energy levels, and limitations in the speed of the control hardware.

Specifically, the optimised pulses were designed to minimise the impact of these imperfections, resulting in more reliable and accurate qubit manipulation. Importantly, the researchers incorporated realistic details of the quantum dot system into their simulations, modelling the finite rise time of the control pulses and the effects of a convolution filter. They also accounted for slow fluctuations in magnetic field gradients and detuning voltages, as well as fast, time-correlated charge noise. By accurately modelling these effects, the team ensured that their optimised control pulses would perform well in a real experimental setting.
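The effect of slow fluctuations can be illustrated with a toy Monte Carlo average. The sketch below is not the authors' two-qubit model: it assumes a single qubit rotating about the z axis, with a frequency offset that is constant during each pulse and drawn from a Gaussian distribution between repetitions. All names and parameter values are hypothetical.

```python
import numpy as np


def z_rotation(angle):
    """Single-qubit unitary exp(-i * angle / 2 * sigma_z)."""
    return np.diag([np.exp(-0.5j * angle), np.exp(0.5j * angle)])


def mean_fidelity_quasi_static(omega, duration, sigma, n_samples=2000, seed=0):
    """Average process fidelity under a quasi-static frequency offset.

    Each sample draws one offset that stays fixed for the whole pulse,
    which is the defining feature of slow (quasi-static) noise.
    """
    rng = np.random.default_rng(seed)
    target = z_rotation(omega * duration)
    offsets = rng.normal(0.0, sigma, size=n_samples)
    fidelities = []
    for delta in offsets:
        actual = z_rotation((omega + delta) * duration)
        overlap = np.trace(target.conj().T @ actual)
        fidelities.append(abs(overlap) ** 2 / 4.0)  # d = 2, so d^2 = 4
    return float(np.mean(fidelities))


noiseless = mean_fidelity_quasi_static(omega=1.0, duration=10.0, sigma=0.0)
noisy = mean_fidelity_quasi_static(omega=1.0, duration=10.0, sigma=0.1)
```

Fast, time-correlated noise would additionally require a noise trace that varies within each pulse, which this sketch deliberately leaves out.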

Reinforcement Learning Achieves High Fidelity Quantum Control

This research demonstrates the feasibility of using reinforcement learning to control quantum hardware, specifically semiconductor-based singlet-triplet qubits in a double dot, even under realistic experimental constraints. The team successfully trained an agent to optimise entangling protocols while accounting for factors such as finite rise-time effects and various noise contributions, achieving gate fidelities of 99.9% in certain regimes. Importantly, the approach avoids the model biases inherent in traditional gradient-based methods and, with sufficient measurements, performance is limited only by system and shot noise, not by confusion in the learning process itself.
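Shot noise sets a floor on how precisely any measured quantity can be known. The sketch below is a deliberate simplification and not the process tomography used in the study: it assumes a single outcome probability estimated from repeated binary measurements, with hypothetical function names, and shows how the statistical uncertainty shrinks with the number of shots.

```python
import numpy as np


def estimate_probability(p_true, n_shots, rng):
    """Estimate an outcome probability from n_shots binary measurements."""
    return rng.binomial(n_shots, p_true) / n_shots


def shot_noise_std(p_true, n_shots):
    """Standard deviation of the estimate for a binomial process."""
    return np.sqrt(p_true * (1.0 - p_true) / n_shots)


rng = np.random.default_rng(1)
estimate = estimate_probability(0.999, n_shots=10_000, rng=rng)
std_few = shot_noise_std(0.999, n_shots=1_000)        # few repetitions
std_many = shot_noise_std(0.999, n_shots=1_000_000)   # many repetitions
```

Because the uncertainty falls only as the inverse square root of the number of shots, resolving fidelities near 99.9% requires a large number of repetitions.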

The study highlights the importance of careful agent design, as even small changes to the observed state significantly impacted performance, and demonstrates that the agent can adapt to correct for noise and minimise power consumption. Future research directions include investigating the use of autoregressive neural network structures to enhance agent capabilities when limited information is available, and integrating reinforcement learning into existing quantum control frameworks. The authors acknowledge that performance ultimately remains constrained by system noise and the number of measurements taken during process tomography.

👉 More information

🗞 Reinforcement learning entangling operations on spin qubits

🧠 ArXiv: https://arxiv.org/abs/2508.14761