The promise of quantum computers hinges on their ability to solve problems that are intractable for even the most powerful classical machines, but building these devices remains a formidable engineering challenge. Researchers are now exploring “partially fault-tolerant” architectures, a middle ground that offers improved performance without the full complexity of complete error correction, and a new study published in PRX Quantum reports a significant step forward in this area. By optimizing how calculations are compiled for a novel architecture designed to maximize precision with limited resources, the authors achieved a more than tenfold speedup over naive serial compilation in circuits that simulate the behavior of complex materials. The advance brings practical quantum simulation, and ultimately materials discovery, closer to reality.

STAR Architecture and Optimization

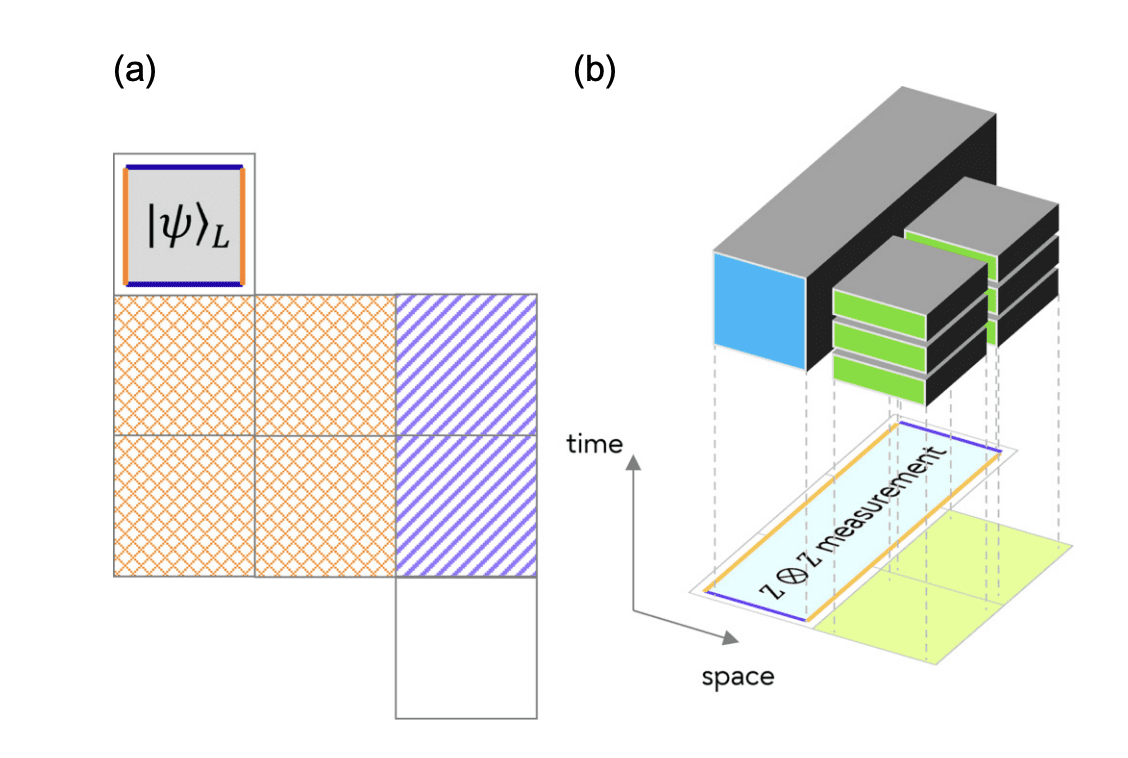

A novel approach to partially fault-tolerant quantum computing centers on the “space-time efficient analog rotation quantum computing architecture,” or STAR architecture, which is designed to minimize resource demands while maximizing the precision of the non-Clifford gates that are crucial for universal computation. However, non-deterministic processes inherent to the architecture, such as repeat-until-success (RUS) protocols and state injection, introduce computational overhead, so circuits must be optimized. The researchers address this by developing efficient compilation methods for simulating the time evolution of the two-dimensional Hubbard model, a promising application for the STAR architecture. The work introduces two techniques, a parallel-injection protocol and adaptive injection-region updating, and integrates them with the fermionic SWAP (fSWAP) technique to reduce time overhead, achieving a more than 10× acceleration over naive serial compilation. The authors estimate that, for devices with a physical error rate of 10⁻⁴, approximately 6.2 × 10⁴ physical qubits would be required to surpass classical computation in estimating the ground-state energy of an 8×8 Hubbard model.
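The cost of a repeat-until-success step, and the reason parallel attempts help, can be pictured with a toy model. The sketch below is not the authors' parallel-injection protocol. It assumes that each injection attempt succeeds independently with probability `p` (the value 0.5 used in the usage note is purely illustrative) and that a round succeeds as soon as any one of `k` parallel attempts succeeds. The function names are hypothetical.

```python
import random


def expected_rounds(p: float, k: int) -> float:
    """Expected rounds until at least one of k parallel attempts succeeds.

    Assumption: attempts are independent and each succeeds with probability p.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    if k < 1:
        raise ValueError("k must be at least 1")
    round_success = 1.0 - (1.0 - p) ** k  # at least one of k succeeds
    return 1.0 / round_success            # mean of a geometric distribution


def simulate_rounds(p: float, k: int, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity, as a sanity check."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        rounds = 1
        # Repeat the round until any of the k attempts succeeds.
        while not any(rng.random() < p for _ in range(k)):
            rounds += 1
        total += rounds
    return total / trials
```

Under these assumptions, `expected_rounds(0.5, 1)` is 2 rounds, while `expected_rounds(0.5, 4)` is 16/15, or about 1.07 rounds. The real protocol involves details this model ignores, so the numbers indicate only the trend.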

Hubbard Model Time Evolution

Simulating the time evolution of the Hubbard model is a key application for partially fault-tolerant quantum architectures such as the “space-time efficient analog rotation” (STAR) system. The study details an optimized compilation technique for Trotter-based time evolution under the two-dimensional Hubbard Hamiltonian, with the aim of minimizing the overhead of non-deterministic processes such as repeat-until-success protocols and state injection. Specifically, the authors introduce a parallel-injection protocol and adaptive injection-region updating, and integrate both with the fermionic SWAP (fSWAP) technique to streamline the simulation. The resulting circuits run more than ten times faster than those produced by naive serial compilation. The compiled circuits also allow the authors to estimate the resources needed for quantum phase estimation of the 2D Hubbard model: on devices with a physical error rate of 10⁻⁴, approximately 6.2 × 10⁴ physical qubits are estimated to be necessary for an 8×8 model to outperform classical computation.
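To make “Trotter-based time evolution” concrete, here is a minimal numerical sketch for a two-site Hubbard model. It is not the compiled circuit from the study. It assumes the standard Hamiltonian (hopping amplitude `t`, on-site repulsion `u`), a Jordan-Wigner encoding, a first-order product formula that alternates hopping and interaction terms, and dense matrices in place of gates. All function names are hypothetical.

```python
import numpy as np

I2 = np.eye(2)
Z = np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # maps occupied |1> to empty |0>


def annihilation(mode: int, n_modes: int) -> np.ndarray:
    """Jordan-Wigner annihilation operator for one fermionic mode."""
    factors = [Z] * mode + [LOWER] + [I2] * (n_modes - mode - 1)
    op = np.eye(1)
    for factor in factors:
        op = np.kron(op, factor)
    return op


def hubbard_two_site(t: float, u: float):
    """Return (hopping, interaction) parts of the two-site Hubbard model.

    Mode order (an assumption): site0-up, site1-up, site0-down, site1-down.
    """
    a = [annihilation(m, 4) for m in range(4)]
    n = [x.T @ x for x in a]  # number operators (matrices are real)
    hop = np.zeros((16, 16))
    for i, j in [(0, 1), (2, 3)]:  # hop between the sites for each spin
        hop -= t * (a[i].T @ a[j] + a[j].T @ a[i])
    inter = u * (n[0] @ n[2] + n[1] @ n[3])  # on-site repulsion
    return hop, inter


def evolve(h: np.ndarray, dt: float) -> np.ndarray:
    """exp(-i h dt) for a Hermitian matrix, via eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * w * dt)) @ v.conj().T


def trotter_error(t: float, u: float, total_time: float, steps: int) -> float:
    """Spectral-norm gap between first-order Trotter and exact evolution."""
    hop, inter = hubbard_two_site(t, u)
    dt = total_time / steps
    step = evolve(hop, dt) @ evolve(inter, dt)
    approx = np.linalg.matrix_power(step, steps)
    exact = evolve(hop + inter, total_time)
    return float(np.linalg.norm(approx - exact, 2))
```

Calling `trotter_error(1.0, 4.0, 1.0, steps)` with increasing `steps` should show the error shrinking roughly in proportion to `1/steps`, which is the expected behavior of a first-order formula. On hardware, each additional Trotter step costs more rotations, which is why the overhead per rotation matters.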

Resource Estimation and Scalability

Resource estimation and scalability are central challenges in realizing practical quantum advantage, particularly given the limits of current noisy intermediate-scale quantum (NISQ) devices. The study focuses on optimizing resource use within the partially fault-tolerant “space-time efficient analog rotation quantum computing architecture” (STAR architecture), which is designed to minimize qubit requirements while maintaining precision. The authors address the computational overhead introduced by non-deterministic processes such as repeat-until-success protocols by proposing two techniques, a parallel-injection protocol and adaptive injection-region updating, that streamline time-evolution simulations. Integrated with the fermionic SWAP (fSWAP) technique, these optimizations achieved a more than 10× acceleration over naive serial compilation in simulating the 2D Hubbard model, a promising application for the architecture. Crucially, the resource estimate for quantum phase estimation of the 8×8 Hubbard model suggests that approximately 6.2 × 10⁴ physical qubits are needed, assuming a physical error rate of 10⁻⁴, to outperform classical computation. The analysis points to a pathway for scaling quantum algorithms on limited, yet increasingly sophisticated, hardware.
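The fSWAP gate mentioned above exchanges two neighboring fermionic modes while keeping track of the sign that fermions pick up when they pass each other. The short check below illustrates that property for two modes. It assumes a Jordan-Wigner encoding, which this article does not confirm for the study, and the helper name is hypothetical.

```python
import numpy as np

# fSWAP in the basis |00>, |01>, |10>, |11>: an ordinary SWAP with an
# extra minus sign when both modes are occupied.
FSWAP = np.array(
    [
        [1.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, -1.0],
    ]
)

I2 = np.eye(2)
Z = np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # maps occupied |1> to empty |0>

# Jordan-Wigner annihilation operators for two modes (assumed encoding).
A0 = np.kron(LOWER, I2)
A1 = np.kron(Z, LOWER)


def fswap_exchanges_modes() -> bool:
    """True if conjugating by fSWAP maps mode 0 to mode 1 and back."""
    maps_0_to_1 = np.allclose(FSWAP @ A0 @ FSWAP.T, A1)
    maps_1_to_0 = np.allclose(FSWAP @ A1 @ FSWAP.T, A0)
    return bool(maps_0_to_1 and maps_1_to_0)
```

Because the gate relabels modes rather than changing the physics, a sequence of fSWAPs can bring distant modes next to each other so that hopping terms act on adjacent qubits.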